- The paper presents a novel framework that organizes agents in a CEO-Employee hierarchy and controls resource use through dynamic, cost-benefit-driven management of the agent lifecycle.

- It uses hybrid intelligence, prioritizing smaller local LLMs and calling external APIs only when task requirements justify the extra cost, to balance cost-effectiveness against performance.

- Experimental results support HASHIRU’s approach, showing gains over the baseline on several benchmarks (statistically significant on JEEBench, GSM8K, and SVAMP) and demonstrating effective autonomous tool creation.

HASHIRU: Hierarchical Agent System for Hybrid Intelligent Resource Utilization

The paper "HASHIRU: Hierarchical Agent System for Hybrid Intelligent Resource Utilization" (2506.04255) introduces a novel multi-agent system (MAS) framework designed to improve flexibility, resource efficiency, and adaptability in AI systems. To address limitations of existing agentic frameworks, HASHIRU combines a hierarchical architecture, dynamic agent lifecycle management, hybrid intelligence strategies, and autonomous tool creation. Its core contribution is bringing these elements together in a single framework, which offers a promising approach for building more robust and efficient AI systems.

System Architecture and Key Components

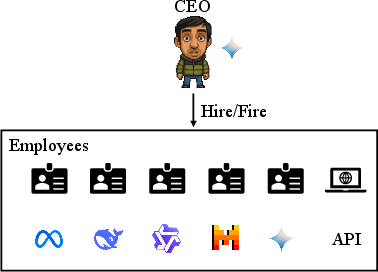

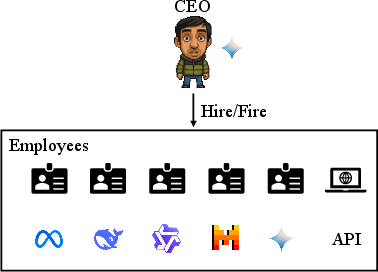

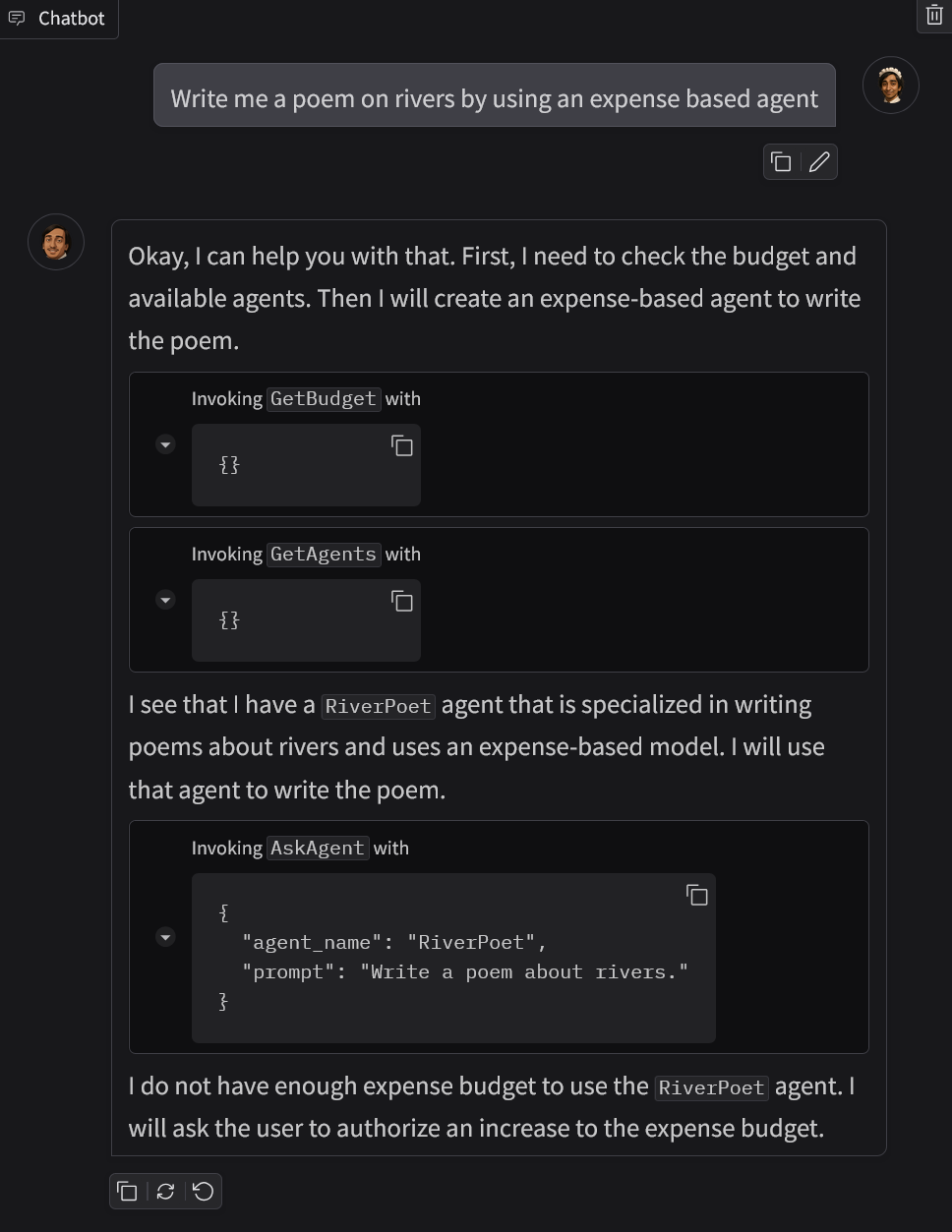

HASHIRU’s architecture centers on a "CEO" agent that dynamically manages specialized "employee" agents to address user queries (Figure 1).

Figure 1: High-level architecture of the HASHIRU system, illustrating the CEO-Employee hierarchy.

The CEO is responsible for interpreting tasks, delegating sub-tasks, monitoring progress, and synthesizing results. Employee agents are instantiated on demand, each specializing in specific sub-tasks, and are managed based on their performance and on resource constraints. This dynamic lifecycle management includes an economic model with hiring and invocation costs that discourages excessive agent churn and promotes efficient resource allocation. Gemini 2.0 Flash serves as the CEO, with a system prompt designed to elicit chain-of-thought reasoning for complex task management.
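The CEO's interpret, delegate, and synthesize loop can be sketched in a few lines. This is a toy illustration under stated assumptions, not the paper's implementation: `Employee`, `CEO`, and the `specialty: subtask; ...` task format are hypothetical, and the decomposition and synthesis steps that HASHIRU hands to its Gemini 2.0 Flash CEO are replaced here by string splitting and concatenation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Employee:
    """Hypothetical stand-in for an LLM-backed employee agent."""
    name: str
    specialty: str
    run: Callable[[str], str]


@dataclass
class CEO:
    # Employees indexed by specialty (hypothetical lookup scheme).
    employees: dict[str, Employee] = field(default_factory=dict)

    def hire(self, emp: Employee) -> None:
        self.employees[emp.specialty] = emp

    def decompose(self, task: str) -> list[tuple[str, str]]:
        # Toy decomposition of "specialty: subtask; specialty: subtask".
        # In HASHIRU, the CEO LLM performs this step.
        pairs = []
        for part in task.split(";"):
            specialty, subtask = part.split(":", 1)
            pairs.append((specialty.strip(), subtask.strip()))
        return pairs

    def handle(self, task: str) -> str:
        results = []
        for specialty, subtask in self.decompose(task):
            emp = self.employees.get(specialty)
            if emp is None:
                # A real CEO could decide to hire a new employee here instead.
                results.append(f"[no employee for {specialty}]")
                continue
            results.append(emp.run(subtask))  # delegate the sub-task
        return " | ".join(results)  # naive synthesis by concatenation
```

For example, after hiring employees for the `upper` specialty (`str.upper`) and the `reverse` specialty (`lambda s: s[::-1]`), `ceo.handle("upper: hello; reverse: abc")` returns `"HELLO | cba"`.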

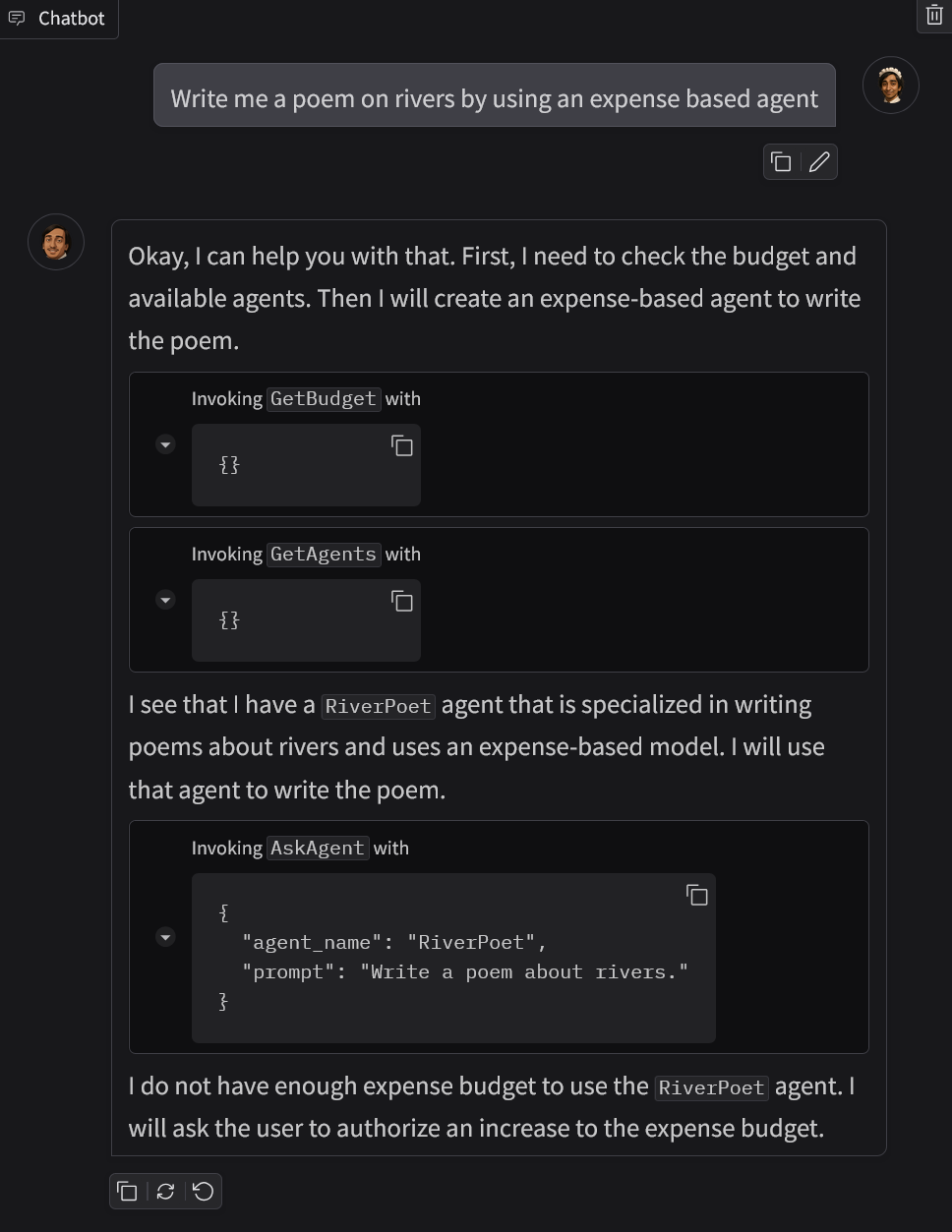

The system’s hybrid intelligence model prioritizes smaller, locally run LLMs (3B–7B parameters), served through Ollama, for cost-effectiveness and low latency. External APIs and larger models are used only when task complexity and resource availability justify them. Continuous monitoring and control of costs and memory usage are central to HASHIRU's resource management (Figure 2).
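The local-first escalation policy can be illustrated with a simple selection rule. This is a minimal sketch assuming each model has a known per-call cost and a scalar "capability" score that can be compared against a task "complexity" score; both scores, the tie-breaking rule, and the model labels are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str             # illustrative label, not a real model tag
    local: bool           # True for a locally served model
    cost_per_call: float  # hypothetical cost units; ~0 for local models
    capability: float     # hypothetical capability score in [0, 1]


def choose_model(options: list[ModelOption], complexity: float,
                 budget_left: float) -> ModelOption:
    """Cheapest model that is capable enough and affordable; local wins ties."""
    affordable = [m for m in options if m.cost_per_call <= budget_left]
    capable = [m for m in affordable if m.capability >= complexity]
    if capable:
        # Sort key: lowest cost first, then local (not local == False) before remote.
        return min(capable, key=lambda m: (m.cost_per_call, not m.local))
    if affordable:
        # Nothing capable fits the budget: degrade to the strongest affordable model.
        return max(affordable, key=lambda m: m.capability)
    raise RuntimeError("no model fits the remaining budget")
```

With two free local options and one paid external option, easy tasks stay local, hard tasks escalate to the external API, and an exhausted budget forces a fallback to the strongest local model rather than overspending.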

Figure 2: HASHIRU's autonomous budget management system, ensuring efficient resource utilization and preventing overspending.

The CEO can initiate the creation of new API tools within the HASHIRU ecosystem when required functionality is missing, enabling dynamic functional extension.

Dynamic Agent Management and Economic Model

A core innovation of HASHIRU is the dynamic management of Employee agents, driven by cost-benefit analysis. The CEO makes hiring and firing decisions based on factors such as task requirements, agent performance, and operational costs. The economic model includes hiring costs ("starting bonus") and invocation costs ("salary") to discourage excessive churn and promote stability. Combined with explicit tracking of memory footprint, this model is particularly practical for deployment on local or edge devices with finite resources, unlike cloud systems that assume elastic resources.
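The way a one-off hiring cost damps churn can be made concrete with a small net-value calculation. The formula below is an illustrative assumption, not the paper's published model: `AgentQuote`, `net_value`, `should_hire`, `should_replace`, and the per-call "value" estimate are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentQuote:
    hiring_cost: float      # one-off "starting bonus"
    invocation_cost: float  # per-call "salary"
    memory_gb: float        # memory footprint when loaded


def net_value(quote: AgentQuote, expected_calls: int, value_per_call: float,
              already_hired: bool) -> float:
    """Expected benefit minus expected cost over the upcoming calls."""
    cost = quote.invocation_cost * expected_calls
    if not already_hired:
        cost += quote.hiring_cost  # only new hires pay the starting bonus
    return value_per_call * expected_calls - cost


def should_hire(quote: AgentQuote, expected_calls: int, value_per_call: float,
                free_memory_gb: float) -> bool:
    if quote.memory_gb > free_memory_gb:
        return False  # does not fit on the device
    return net_value(quote, expected_calls, value_per_call, already_hired=False) > 0


def should_replace(incumbent: AgentQuote, candidate: AgentQuote, expected_calls: int,
                   incumbent_value: float, candidate_value: float) -> bool:
    """Fire the incumbent only if the candidate wins even after paying its hiring cost."""
    keep = net_value(incumbent, expected_calls, incumbent_value, already_hired=True)
    swap = net_value(candidate, expected_calls, candidate_value, already_hired=False)
    return swap > keep
```

With a hiring cost of 5 and a salary of 0.5, a candidate worth 2.0 per call does not displace an incumbent worth 1.5 per call over 5 calls (net 2.5 versus 5.0), but it does over 20 calls (net 25 versus 20). On short horizons the starting bonus keeps the existing agent in place.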

Hybrid Intelligence and Resource Control

HASHIRU strategically prioritizes smaller, cost-effective local LLMs via Ollama, enhancing efficiency and reducing reliance on external APIs. This approach allows the system to balance cost-effectiveness with high capability needs, leveraging a diverse pool of resources managed by the CEO. Explicit resource management is central to HASHIRU, extending beyond simple API cost tracking to monitor both costs and memory usage.
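Tracking cost and memory together, and refusing actions that would break either limit, is one way to realize the budget control shown in Figure 2. The class below is a minimal sketch assuming a single spending cap and a single memory cap; `ResourceBudget`, its method names, and the limits are hypothetical and do not reproduce HASHIRU's actual code.

```python
class ResourceBudget:
    """Tracks spend and loaded-model memory; refuses actions exceeding either cap."""

    def __init__(self, max_cost: float, max_memory_gb: float) -> None:
        self.max_cost = max_cost
        self.max_memory_gb = max_memory_gb
        self.spent = 0.0
        self.memory_in_use: dict[str, float] = {}  # agent name -> GB

    def can_afford(self, cost: float, memory_gb: float = 0.0) -> bool:
        used = sum(self.memory_in_use.values())
        return (self.spent + cost <= self.max_cost
                and used + memory_gb <= self.max_memory_gb)

    def load(self, agent: str, memory_gb: float) -> None:
        if agent in self.memory_in_use:
            return  # already resident; nothing to add
        if not self.can_afford(0.0, memory_gb):
            raise MemoryError(f"loading {agent} would exceed the memory limit")
        self.memory_in_use[agent] = memory_gb

    def unload(self, agent: str) -> None:
        # Freeing memory is how a "fired" local agent returns capacity.
        self.memory_in_use.pop(agent, None)

    def charge(self, cost: float) -> None:
        if not self.can_afford(cost):
            raise RuntimeError("charge would exceed the cost budget")
        self.spent += cost
```

Raising before the spend is recorded, rather than logging an overrun afterwards, is what makes the sketch "self-regulating": the caller must unload an agent or pick a cheaper option to proceed.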

Integrated, autonomous API tool creation further sets HASHIRU apart. If the CEO determines that a required capability is missing, it can initiate the creation of a new tool by defining the tool's specification, commissioning generation of its logic, and deploying that logic as a callable API endpoint. This lets HASHIRU extend its functional repertoire dynamically, tailoring capabilities to tasks without manual intervention.
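The specify, generate, validate, and deploy cycle, including the iterative bug fixing mentioned in the case studies, can be sketched as a retry loop. This sketch makes several assumptions not in the paper: logic generation is a caller-supplied `generate(spec, feedback)` function (in HASHIRU an LLM does this), "deployment" is registration in an in-process dictionary rather than an API endpoint, and `ToolSpec`, `TOOLS`, and `create_tool` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolSpec:
    name: str
    description: str
    examples: list[tuple[tuple, object]]  # (args, expected result) smoke tests


TOOLS: dict[str, Callable] = {}  # hypothetical registry standing in for API endpoints


def create_tool(spec: ToolSpec, generate: Callable[[ToolSpec, str], str],
                max_attempts: int = 3) -> Callable:
    """Generate source for spec.name, validate it on the examples, then register it."""
    feedback = ""
    for _ in range(max_attempts):
        source = generate(spec, feedback)
        namespace: dict = {}
        try:
            exec(source, namespace)  # unsafe for untrusted code; sandbox in practice
            fn = namespace[spec.name]
            for args, expected in spec.examples:
                got = fn(*args)
                if got != expected:
                    raise AssertionError(
                        f"{spec.name}{args} returned {got!r}, expected {expected!r}")
        except Exception as err:
            feedback = repr(err)  # error report passed back for the next attempt
            continue
        TOOLS[spec.name] = fn  # "deploy" the validated tool
        return fn
    raise RuntimeError(f"could not create tool {spec.name}: {feedback}")
```

For instance, a stub generator that first returns `def add(a, b): return a - b` fails the `((2, 3), 5)` example, receives the error as feedback, and on its second attempt returns a correct version, which is then registered as `TOOLS["add"]`.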

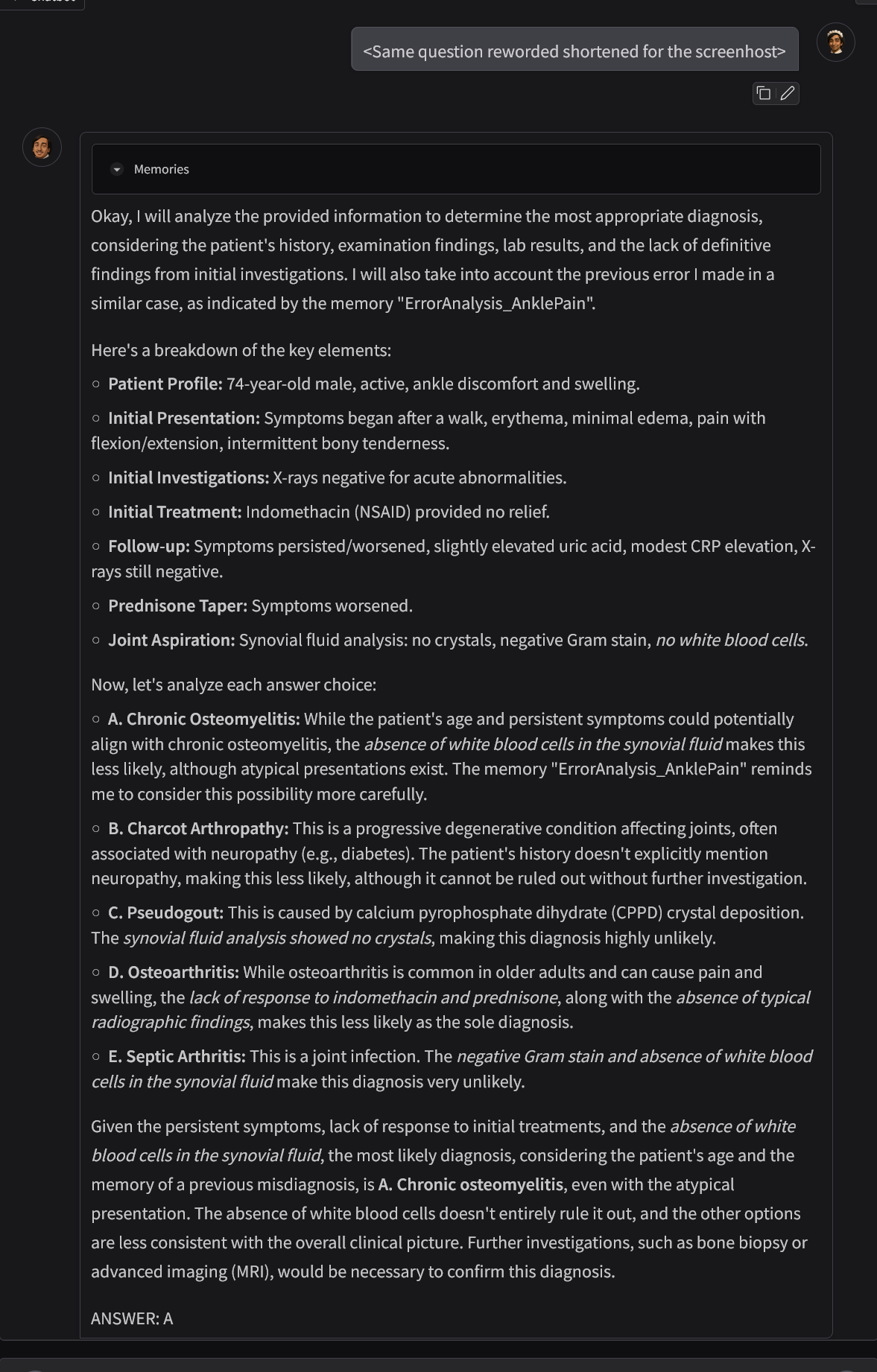

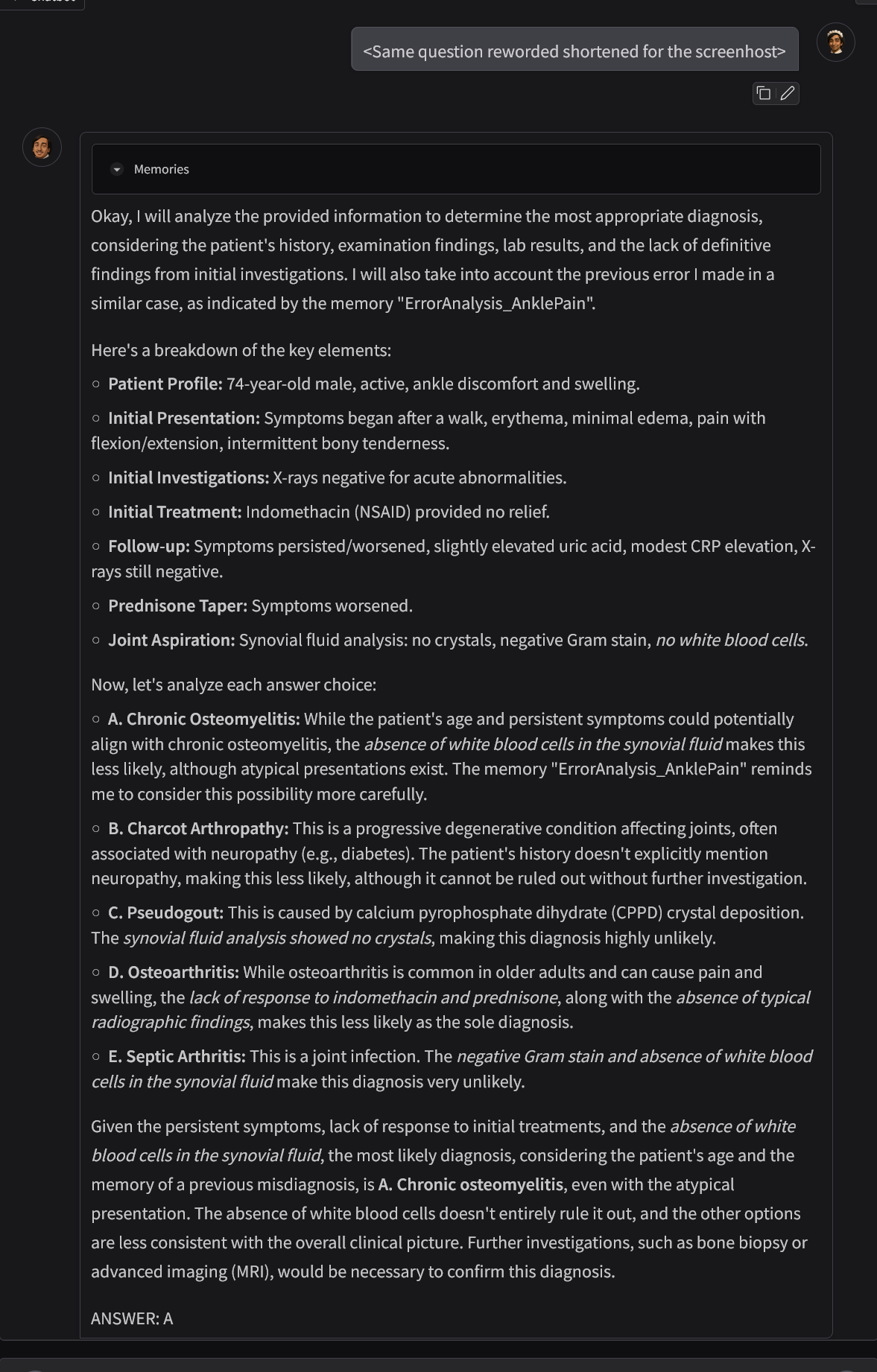

HASHIRU incorporates a Memory Function for the CEO to learn from past interactions and rectify errors (Figure 3).

Figure 3: HASHIRU's error analysis and knowledge retrieval process, enabling learning from past interactions.

This function stores a log of significant past events and retrieves memories based on the semantic similarity between the current context and stored entries. Augmenting decision-making with retrieved knowledge in this way follows retrieval-augmented generation (RAG) concepts.
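Similarity-based memory retrieval can be sketched with cosine similarity over embedding vectors. This is a minimal sketch: `MemoryStore` and `toy_embed` are hypothetical, and the bag-of-words `toy_embed` over a tiny fixed vocabulary is a swapped-in placeholder for a real learned embedding model, which the summary does not name.

```python
import math
from typing import Callable

Vector = list[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


VOCAB = ["budget", "tool", "error", "math", "api"]  # toy vocabulary (assumption)


def toy_embed(text: str) -> Vector:
    # Bag-of-words counts; a real system would call an embedding model here.
    words = text.lower().split()
    return [float(words.count(w)) for w in VOCAB]


class MemoryStore:
    def __init__(self, embed: Callable[[str], Vector]) -> None:
        self.embed = embed
        self.entries: list[tuple[str, Vector]] = []  # (memory text, embedding)

    def add(self, text: str) -> None:
        self.entries.append((text, self.embed(text)))

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        q = self.embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

Retrieved entries would then be placed into the CEO's context before it decides on its next action, which is the RAG-style augmentation described above.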

Case Studies: Demonstrating Self-Improvement

The paper presents four case studies demonstrating HASHIRU's self-improvement capabilities:

- Generating a cost model for agent specialization by autonomously gathering and integrating data on local model performance and cloud API costs.

- Autonomously integrating new tools for the CEO agent by employing a few-shot learning approach from existing tool templates and iterative bug fixing.

- Implementing a self-regulating budget management system that monitors budget allocation and prevents overspending.

- Learning from experience through error analysis and knowledge retrieval, where the system generates linguistic critique and actionable guidance following an incorrect response.

Experimental Results and Discussion

Preliminary experimental results support HASHIRU’s architectural design. On the Academic Paper Review task, HASHIRU achieved a 58% success rate, indicating that it can decompose a complex objective into manageable sub-tasks. A 100% success rate on JailbreakBench suggests that HASHIRU’s delegation mechanisms do not compromise the safety guardrails of the foundational CEO model.

In reasoning tasks, HASHIRU achieved a 96.5% success rate on the AI2 Reasoning Challenge, compared to a baseline of 95%, and doubled the baseline’s score on Humanity’s Last Exam (5% versus 2.5%). Neither difference is statistically significant (p>0.05).

On JEEBench, HASHIRU achieved an 80% success rate compared to the baseline's 68.3% (p<0.05). On GSM8K, HASHIRU attained a 96% success rate against the baseline's 61% (p<0.01). HASHIRU also excelled on SVAMP, achieving a 92% success rate compared to the baseline's 84% (p<0.05).

These results support HASHIRU's core contributions, including dynamic, resource-aware agent lifecycle management, a hybrid intelligence model prioritizing cost-effective local LLMs, and the potential for autonomous tool creation.

Limitations and Future Directions

The paper identifies key limitations, including the CEO agent's restricted communication to a single hierarchical level and the need for further development in autonomous tool creation, economic model calibration, and memory optimization. Future work will focus on improving CEO intelligence, exploring distributed cognition, developing a comprehensive tool management lifecycle, and rigorous benchmarking.

Conclusion

HASHIRU presents a novel MAS framework designed for enhanced flexibility, resource efficiency, and adaptability. Through its hierarchical control structure, dynamic agent lifecycle management, hybrid intelligence approach, and integrated autonomous tool creation, HASHIRU addresses key limitations in current MAS. Evaluations and case studies demonstrate its potential to perform complex tasks, manage resources effectively, and autonomously extend its capabilities, marking a promising direction for developing more robust and efficient intelligent systems.