Overview of ModelScope-Agent Framework

The paper introduces ModelScope-Agent, a general and customizable agent framework that uses open-source LLMs as controllers for real-world applications. It responds to a growing trend of equipping LLMs such as ChatGPT with tool-use abilities so they can call large numbers of external APIs. ModelScope-Agent supports training on multiple open-source LLMs and integrates the resulting models with APIs, so they can complete complex tasks by invoking tools.

Technical Contributions

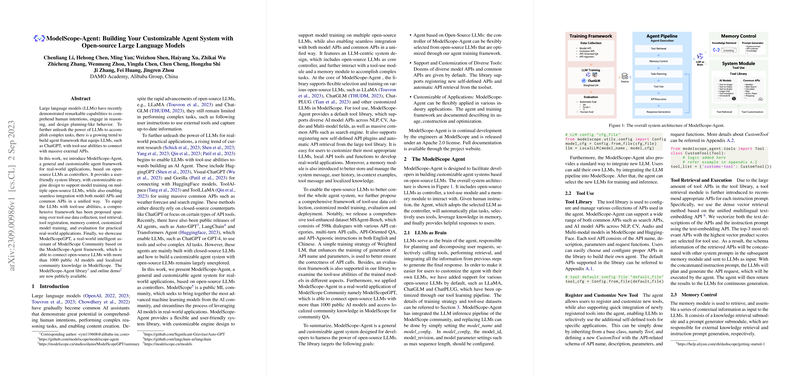

ModelScope-Agent provides a user-friendly system library with a customizable engine design, and it integrates model APIs and common APIs in a unified way. Its main contributions are:

- Tool-Use Framework: Spans tool-use data collection, tool retrieval, tool registration, memory control, customized model training, and evaluation.

- Comprehensive API Integration: The framework can connect open-source LLMs with more than 1000 public AI models and localized community knowledge in ModelScope.

- Open-Source Accessibility: The library and a demonstration online platform, ModelScopeGPT, are publicly available for community use.
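Tool registration and tool retrieval appear in the list above only as names. The sketch below is a minimal, hypothetical illustration of how they can fit together. `Tool`, `ToolRegistry`, and the three example tools are invented for this example and are not ModelScope-Agent's API. Bag-of-words cosine similarity also stands in for the learned text-embedding model a real retriever would more likely use.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Tool:
    """Hypothetical tool record: a name, a natural-language description, and a callable."""
    name: str
    description: str
    func: Callable[..., str]


def bag_of_words(text: str) -> Counter:
    # Lowercased word counts: a crude stand-in for a learned text embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToolRegistry:
    """Registration stores tools by unique name. Retrieval ranks them against a query."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        if tool.name in self._tools:
            raise ValueError(f"tool {tool.name!r} is already registered")
        self._tools[tool.name] = tool

    def retrieve(self, query: str, top_k: int = 3) -> List[str]:
        # Score each tool by the similarity of the query to its name plus description.
        q = bag_of_words(query)
        ranked = sorted(
            self._tools.values(),
            key=lambda t: cosine(q, bag_of_words(f"{t.name} {t.description}")),
            reverse=True,
        )
        return [t.name for t in ranked[:top_k]]

    def call(self, name: str, **kwargs: str) -> str:
        return self._tools[name].func(**kwargs)


registry = ToolRegistry()
registry.register(Tool("text-to-image", "generate an image from a text prompt", lambda prompt: f"<image of {prompt}>"))
registry.register(Tool("speech-synthesis", "convert text to speech audio", lambda text: f"<audio of {text}>"))
registry.register(Tool("weather", "look up the current weather for a city", lambda city: f"sunny in {city}"))

best = registry.retrieve("please generate an image of a cat", top_k=1)
print(best)  # ['text-to-image']
print(registry.call(best[0], prompt="a cat"))  # <image of a cat>
```

Retrieving only the top-k tools keeps the controller's prompt short when the tool library is large. The retriever can be swapped out without changing the registration interface.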

Model Architecture

The architecture centers on an open-source LLM, such as LLaMA or ChatGLM, acting as the central controller. Around this controller, ModelScope-Agent includes:

- Tool-Use Module: Configures and manages collections of APIs, covering both model APIs (across the NLP, CV, and Audio domains) and common APIs.

- Memory Module: Manages the system message, user history, and other contextual information passed to the controller.
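The summary does not show how the controller, tools, and memory interact in a single turn. The following is a minimal sketch under stated assumptions, not ModelScope-Agent's implementation. `Memory`, `run_step`, `parse_action`, and `fake_llm` are hypothetical names. The JSON action format with `api_name` and `parameters` keys is an assumed convention. `fake_llm` is a stub standing in for the open-source LLM controller.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple


@dataclass
class Memory:
    """Hypothetical memory module: a system message plus a bounded list of turns."""
    system_message: str
    max_turns: int = 6
    history: List[Dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.history.append({"role": role, "content": content})
        # Drop the oldest turns so the prompt stays within the context window.
        if len(self.history) > self.max_turns:
            self.history = self.history[-self.max_turns:]

    def build_prompt(self) -> str:
        lines = [f"system: {self.system_message}"]
        lines += [f"{turn['role']}: {turn['content']}" for turn in self.history]
        return "\n".join(lines)


def parse_action(reply: str) -> Optional[Tuple[str, Dict[str, Any]]]:
    """Return (api_name, parameters) if the reply is a JSON tool call (assumed format), else None."""
    try:
        action = json.loads(reply)
    except json.JSONDecodeError:
        return None
    if isinstance(action, dict) and "api_name" in action:
        return action["api_name"], action.get("parameters", {})
    return None


def run_step(llm: Callable[[str], str], tools: Dict[str, Callable[..., str]],
             memory: Memory, user_input: str) -> str:
    """One controller step: ask the LLM, optionally call a tool, then answer."""
    memory.add("user", user_input)
    reply = llm(memory.build_prompt())
    action = parse_action(reply)
    memory.add("assistant", reply)
    if action is None:
        return reply  # plain answer, no tool needed
    name, params = action
    memory.add("tool", tools[name](**params))  # execute the chosen API
    answer = llm(memory.build_prompt())  # turn the tool result into a reply
    memory.add("assistant", answer)
    return answer


def fake_llm(prompt: str) -> str:
    # Stub controller: request the weather tool once, then answer from its result.
    if "\ntool: " in prompt:
        return "Here is your result: " + prompt.rsplit("\ntool: ", 1)[1]
    return json.dumps({"api_name": "weather", "parameters": {"city": "Hangzhou"}})


memory = Memory(system_message="You may call the weather API.")
tools = {"weather": lambda city: f"sunny in {city}"}
answer = run_step(fake_llm, tools, memory, "What is the weather in Hangzhou?")
print(answer)  # Here is your result: sunny in Hangzhou
```

Keeping the tool call and its result in memory lets the controller ground its final reply in the API output. The turn limit is one simple way to bound prompt length.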

Training and Dataset

The paper introduces MSAgent-Bench, a tool-enhanced dataset of 598k dialogues in English and Chinese. It covers a wide range of scenarios so that models learn to issue accurate API calls and generate appropriate responses. Training uses a weighted language modeling (Weighted LM) strategy aimed at improving API-call precision.
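The summary does not define the weighted objective. One plausible reading is that the per-token language-modeling loss of API-call tokens (the API name and its arguments) is multiplied by a constant greater than one. The sketch below assumes exactly that. The weight value, the normalization by total weight, and the `weighted_lm_loss` interface are all hypothetical. For clarity it works on gold-token probabilities in plain Python rather than on logits in a training framework.

```python
import math
from typing import Sequence


def weighted_lm_loss(token_probs: Sequence[float], is_api_token: Sequence[bool],
                     api_weight: float = 2.0) -> float:
    """Weighted mean negative log-likelihood of the gold target tokens.

    token_probs[i]  : probability the model assigned to gold token i (0 < p <= 1)
    is_api_token[i] : True if token i belongs to an API name or argument
    api_weight      : hypothetical up-weighting factor for those tokens
    """
    if len(token_probs) != len(is_api_token) or not token_probs:
        raise ValueError("need equally long, non-empty sequences")
    weights = [api_weight if api else 1.0 for api in is_api_token]
    total = sum(w * -math.log(p) for w, p in zip(weights, token_probs))
    # Dividing by the weight sum makes the result a weighted average of per-token losses.
    return total / sum(weights)


probs = [0.5, 0.5, 0.25]  # e.g. one response token, then two API-call tokens
api_mask = [False, True, True]
print(weighted_lm_loss(probs, api_mask, api_weight=1.0))  # plain LM loss: 4*ln(2)/3
print(weighted_lm_loss(probs, api_mask, api_weight=2.0))  # weighted loss: 7*ln(2)/5
```

With `api_weight=1.0` the function reduces to the ordinary mean token loss. Raising the weight makes errors on API-call tokens cost more, which is the intended push toward precise calls.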

Evaluation

ModelScope-Agent includes both automated and human evaluation frameworks:

- Automated Evaluation: Measures the accuracy of API requests with metrics like Action EM and Argument F1.

- Human Evaluation: In Agent Arena, users give the same instruction to two agents and vote on which one handles the API-based task better.
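The automated metrics are named above without formulas. The sketch below shows one common way to compute them, under assumptions that may differ from the paper's exact definitions. Action EM is taken as the share of turns whose predicted API name exactly matches the gold API. Argument F1 treats each argument as a (key, value) pair. The function names are hypothetical.

```python
from typing import Dict, List, Optional


def action_em(pred_apis: List[Optional[str]], gold_apis: List[Optional[str]]) -> float:
    """Share of turns whose predicted API name exactly matches the gold one (None = no call)."""
    if len(pred_apis) != len(gold_apis) or not gold_apis:
        raise ValueError("need equally long, non-empty lists")
    return sum(p == g for p, g in zip(pred_apis, gold_apis)) / len(gold_apis)


def argument_f1(pred_args: Dict[str, str], gold_args: Dict[str, str]) -> float:
    """F1 between predicted and gold arguments, each treated as a (key, value) pair."""
    pred, gold = set(pred_args.items()), set(gold_args.items())
    if not pred and not gold:
        return 1.0  # both calls take no arguments: count as a perfect match
    tp = len(pred & gold)
    if tp == 0:
        return 0.0
    precision, recall = tp / len(pred), tp / len(gold)
    return 2 * precision * recall / (precision + recall)


print(action_em(["text-to-image", "weather", None], ["text-to-image", "speech-synthesis", None]))
print(argument_f1({"city": "Hangzhou", "unit": "C"}, {"city": "Hangzhou", "date": "today"}))  # 0.5
```

Scoring the API choice and its arguments separately shows whether a model picks the wrong tool or picks the right tool but fills in its parameters incorrectly.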

Numerical Results

In the automated evaluation, the trained models score well. Qwen, one of the finetuned models, performed best, which suggests that finetuned open-source models can execute API calls accurately.

Implications and Future Directions

The ModelScope-Agent framework extends open-source LLMs from text generation to tool use, paving the way for customizable AI agents in practical applications. Its modular design and large bilingual dataset support the integration of diverse tools.

Future work could refine tool-use strategies and strengthen multilingual capabilities. Open-source collaboration may also yield more versatile and robust agents that tackle complex real-world problems by connecting AI models with external APIs.