- The paper argues that theory-guided training, which builds scientific constraints into a model, can substantially reduce hallucinations in generative AI outputs.

- It describes confidence-based error screening, such as per-prediction reliability scores, as a way to decide which model outputs can be trusted in scientific contexts.

- Case studies of AlphaFold and GenCast illustrate practical strategies for managing AI errors in research.

Summary of "Hallucination, reliability, and the role of generative AI in science"

This paper explores the challenges posed by "hallucinations," a distinctive kind of error in generative AI, and their impact on the reliability of AI in scientific contexts. The author distinguishes between epistemically benign and corrosive hallucinations, the latter being misrepresentations that are both scientifically misleading and difficult to anticipate. Through case studies of AlphaFold and GenCast, the paper argues that thoughtful integration of theoretical constraints and strategic error screening can mitigate the harmful effects of hallucinations, making generative AI reliable enough to serve as a tool for scientific discovery.

Hallucination and Reliability in Generative AI

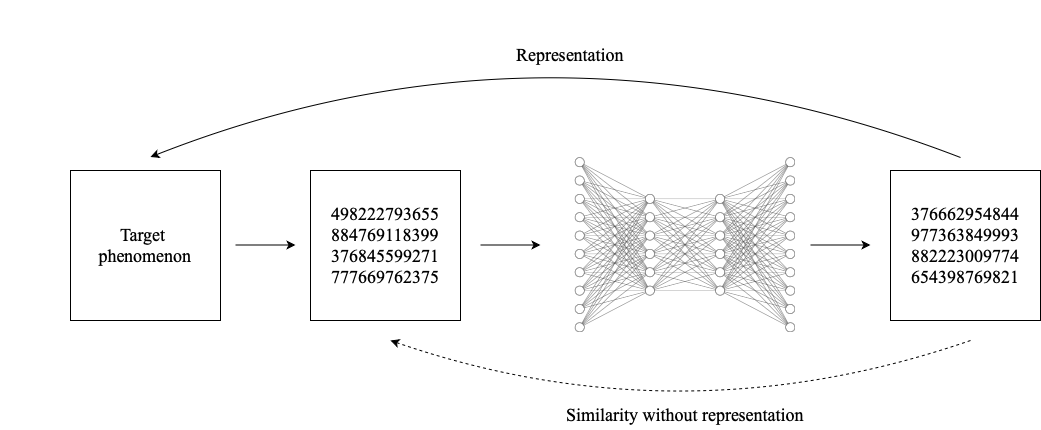

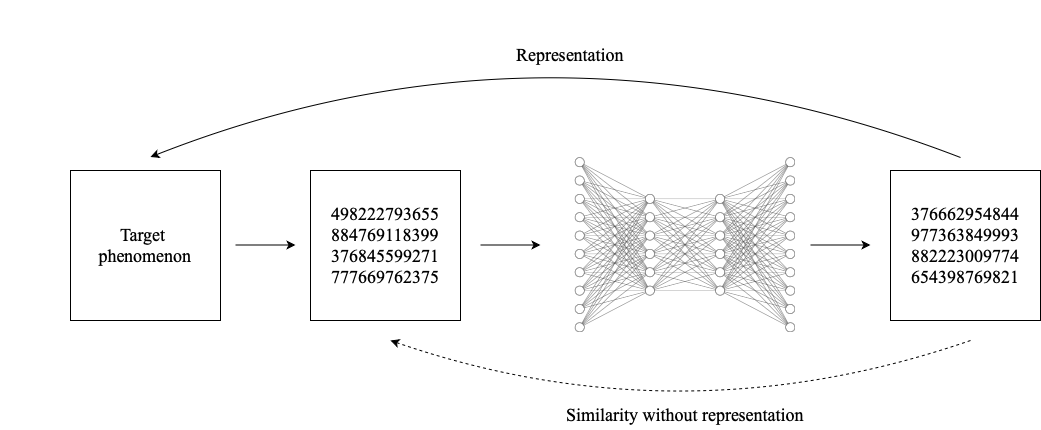

Hallucinations in generative AI become particularly problematic in scientific work, where the AI's outputs are used to inform and guide decisions. They are not mere noise in the data: they can present plausible yet incorrect results that are difficult to detect. Left unaddressed, such errors could mislead research or clinical practice. The author also asks whether hallucinations are an unavoidable consequence of how generative models function, highlighting a trade-off between generating novel insights and maintaining reliability.

The paper argues that these errors arise partly from the high dimensionality of the data and from the compression of that data into a limited parameter space. When a model generates high-dimensional outputs that interpolate between training data points, the results can appear statistically plausible while being scientifically misleading.
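A toy analogy may help make the interpolation point concrete. In the minimal sketch below, which is our own illustration rather than anything taken from the paper, valid "samples" are assumed to lie on the unit circle, standing in for a physical constraint. Blending two valid samples yields a point that sits between them yet breaks the constraint, which is the sense in which an interpolated output can look plausible while being wrong. The function names `on_unit_circle` and `lerp` are hypothetical.

```python
import math

def on_unit_circle(point, tol=1e-6):
    """Return True if a 2-D point satisfies the toy constraint x^2 + y^2 = 1."""
    return abs(math.hypot(*point) - 1.0) < tol

def lerp(a, b, t):
    """Linearly interpolate between 2-D points a and b (t=0 gives a, t=1 gives b)."""
    return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))

# Two valid "training samples": both lie exactly on the unit circle.
a = (1.0, 0.0)
b = (0.0, 1.0)

# Their midpoint lies between the two samples, yet its norm is about 0.707, not 1.
mid = lerp(a, b, 0.5)
print(mid, on_unit_circle(mid))  # (0.5, 0.5) False
```

In a real generative model the constraint surface is unknown and high-dimensional, so such violations are far harder to spot than in this two-dimensional case.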

Figure 1: A generative DNN produces outputs that resemble training data samples, but are not necessarily used as representations of the training data itself.

Mitigating Corrosive Hallucinations

To counteract corrosive hallucinations, the paper discusses two main strategies, both illustrated by AlphaFold and GenCast:

- Theory-Guided Training: By embedding theoretical knowledge into a model's training process, as AlphaFold does through constraints on bond lengths and torsion angles, developers can substantially reduce the incidence of hallucination. Because these constraints encode scientific knowledge that is independent of the training data, they make the model's outputs more robust than empirical training data alone could.

- Confidence-Based Error Screening: Both AlphaFold and GenCast estimate the accuracy of their own predictions: AlphaFold assigns residue-level reliability scores, while GenCast uses stochastic variation across ensemble predictions. These measures let researchers decide which outputs are reliable enough for downstream analysis, limiting the epistemic threat that hallucinations pose.
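The theory-guided training idea can be sketched as a loss function with two parts: an empirical fit term and a penalty for violating known physics. The sketch below is a simplified illustration loosely inspired by the bond-length constraints mentioned above; it is not AlphaFold's actual loss. It assumes predicted and target structures are lists of C-alpha coordinates in angstroms and uses an approximate 3.8 Å spacing between consecutive C-alpha atoms as the "theoretical" constraint. The names `bond_violation_penalty`, `theory_guided_loss`, `tolerance`, and `weight` are hypothetical.

```python
import math

# Approximate distance (in angstroms) between consecutive C-alpha atoms in a
# protein backbone; used here as a simplified "theoretical" constraint.
CA_CA_DISTANCE = 3.8

def data_loss(pred, target):
    """Mean squared distance between predicted and target 3-D coordinates."""
    return sum(math.dist(p, t) ** 2 for p, t in zip(pred, target)) / len(pred)

def bond_violation_penalty(pred, ideal=CA_CA_DISTANCE, tolerance=0.1):
    """Sum of squared excess deviations of consecutive C-alpha distances from
    `ideal`, ignoring deviations within `tolerance` (a flat-bottomed penalty)."""
    penalty = 0.0
    for p, q in zip(pred, pred[1:]):
        excess = abs(math.dist(p, q) - ideal) - tolerance
        if excess > 0:
            penalty += excess ** 2
    return penalty

def theory_guided_loss(pred, target, weight=1.0):
    """Empirical fit plus a weighted penalty for physically implausible geometry."""
    return data_loss(pred, target) + weight * bond_violation_penalty(pred)

# Hypothetical three-residue chain laid out along the x-axis.
target = [(0.0, 0.0, 0.0), (3.8, 0.0, 0.0), (7.6, 0.0, 0.0)]
# A prediction whose middle atom is misplaced, compressing one bond and stretching the other.
bad_pred = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (7.6, 0.0, 0.0)]

print(theory_guided_loss(target, target))  # 0.0: perfect fit, no violations
print(bond_violation_penalty(bad_pred))    # about 5.78: both bonds are off by 1.8 A
```

Because the penalty does not depend on the target, it still pushes predictions toward physically plausible geometry where training data are sparse, which is the sense in which the constraint supplies knowledge independent of the data.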
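Confidence-based screening can likewise be sketched as a filtering step applied before outputs are used downstream. The sketch below assumes per-residue confidence scores on AlphaFold's 0 to 100 pLDDT scale, and a scalar ensemble forecast (for example, temperature at a single location) as a simplified stand-in for GenCast's ensemble output. The 70-point cutoff, the `max_spread` value, and the function names `confident_residues` and `screen_ensemble` are illustrative assumptions, not values or APIs prescribed by the paper or by either system.

```python
import statistics

def confident_residues(plddt_scores, threshold=70.0):
    """Return indices of residues whose confidence score meets the threshold.

    `plddt_scores` is assumed to be a list of per-residue scores on a 0-100 scale.
    """
    return [i for i, score in enumerate(plddt_scores) if score >= threshold]

def screen_ensemble(members, max_spread):
    """Summarize an ensemble forecast and flag whether its spread is acceptable.

    `members` holds one scalar prediction per ensemble member (at least two).
    Returns (mean, sample standard deviation, passes_screen).
    """
    mean = statistics.fmean(members)
    spread = statistics.stdev(members)
    return mean, spread, spread <= max_spread

# Hypothetical per-residue scores: residues 2 and 3 fall below the cutoff.
scores = [95.2, 88.0, 62.5, 40.1, 91.3]
print(confident_residues(scores))  # [0, 1, 4]

# Hypothetical ensembles (e.g. temperature in degrees C at one location).
tight = [21.0, 21.4, 20.8, 21.2]
wide = [15.0, 22.0, 27.0, 19.0]
print(screen_ensemble(tight, max_spread=1.0))  # passes: spread is about 0.26
print(screen_ensemble(wide, max_spread=1.0))   # fails: spread is about 5.1
```

A small ensemble spread signals agreement among members, not correctness; screening of this kind reduces, but does not eliminate, the risk of acting on a hallucinated output.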

Broader Implications and Applications

The paper emphasizes that, even with these mitigation strategies, integrating generative AI into scientific practice is far from straightforward. These approaches do not resolve every problem of AI fallibility, but they provide a critical framework for managing hallucinations while maximizing the benefits of AI systems.

The conceptual shift from relying solely on empirical success to incorporating robust error-management protocols reflects a careful balance between discovery and justification in scientific uses of AI. As AlphaFold and GenCast show, these strategies position AI not only as a tool for discovery but also as support for justified scientific inference.

Conclusion

The paper concludes that the reliability of generative AI in scientific applications depends on embedding it within a solid framework of error mitigation and theoretical guidance. Although hallucinations pose real risks, the paper lays out a convincing pathway for managing them, so that AI can remain a valuable aid in advancing scientific knowledge. Despite the remaining philosophical and practical challenges, a structured approach to applying AI can elevate it from a data-driven novelty to a reliable contributor to scientific inquiry.