Detecting and Mitigating Hallucinations of LLMs: A Systematic Approach

LLMs are well known for generating coherent, fluent text. However, a critical obstacle to their reliability is hallucination: the model outputs text that is factually incorrect or nonsensical. The paper under discussion presents an approach that actively detects and mitigates hallucinations during generation, using the model's logit outputs to spot uncertain content and external knowledge to validate it.

Methodology

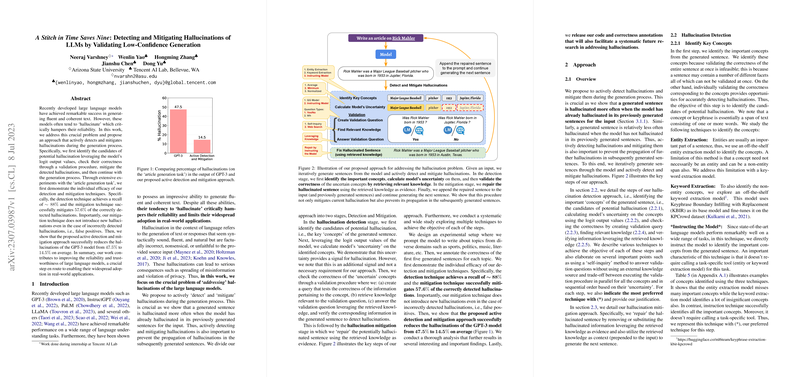

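The proposed approach has two main stages: detection and mitigation. Because each generated sentence is checked, and repaired if necessary, before the next sentence is generated, this active approach prevents hallucinations from propagating into subsequent text. Such propagation is a common problem in post-hoc systems, which correct the output only after generation is complete.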
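
The control flow of this active, sentence-by-sentence loop can be sketched in Python. This is a minimal sketch, not the paper's implementation: `generate_actively` and the callables `generate_sentence`, `is_hallucinated`, and `repair` are hypothetical names standing in for an LLM call, the detection stage, and the mitigation stage.

```python
from typing import Callable, List


def generate_actively(
    prompt: str,
    generate_sentence: Callable[[str], str],  # hypothetical LLM call: context -> next sentence
    is_hallucinated: Callable[[str], bool],   # hypothetical stand-in for the detection stage
    repair: Callable[[str], str],             # hypothetical stand-in for the mitigation stage
    max_sentences: int = 5,
) -> List[str]:
    """Generate sentence by sentence, validating each one before it joins the context."""
    context = prompt
    output: List[str] = []
    for _ in range(max_sentences):
        sentence = generate_sentence(context)
        if not sentence:
            break  # an empty string signals that generation is finished
        if is_hallucinated(sentence):
            sentence = repair(sentence)  # fix it before later sentences can build on it
        output.append(sentence)
        context = f"{context} {sentence}"  # later sentences see the repaired text
    return output


# Toy usage with scripted stand-ins instead of a real model.
script = iter(["Paris is in Germany.", "It is the capital city.", ""])
result = generate_actively(
    "Write about Paris.",
    generate_sentence=lambda ctx: next(script),
    is_hallucinated=lambda s: "Germany" in s,
    repair=lambda s: s.replace("Germany", "France"),
)
print(result)  # ['Paris is in France.', 'It is the capital city.']
```

A post-hoc pipeline would run `is_hallucinated` and `repair` only after all sentences were produced, by which point the second sentence could already have been conditioned on the error.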

Hallucination Detection

- Concept Identification: The first step identifies the key concepts in the generated text. The recommended technique instructs the model itself to identify these concepts, which avoids relying on external keyword extraction tools.

- Logit-Based Uncertainty Calculation: Using the softmax probabilities of the output tokens, the approach computes a probability score for each identified concept; concepts whose scores indicate high uncertainty are treated as potential hallucinations.

- Validation Query Creation: Next, the model is instructed to generate Yes/No validation questions that check the factual accuracy of each uncertain concept.

- Knowledge Retrieval: The validation questions are then used as web search queries to retrieve relevant information, which serves as the context for validation.

- Question Answering and Validation: The model answers the validation questions using the retrieved knowledge. If the answer contradicts the generated content, the concept is flagged as hallucinated.
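
The uncertainty calculation can be illustrated with a small sketch. It assumes per-token probabilities are already available (for example, from an API that returns token log probabilities), and it aggregates them by taking the minimum over a concept's tokens, so a single low-confidence token is enough to mark the concept as uncertain. The minimum rule, the helper names (`softmax_prob`, `concept_score`, `flag_uncertain_concepts`), the 0.5 threshold, and the token probabilities are all assumptions for illustration.

```python
import math
from typing import Dict, List, Sequence


def softmax_prob(logits: Sequence[float], index: int) -> float:
    """Probability of the token at `index` under a softmax over `logits`."""
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(x - m) for x in logits]
    return exps[index] / sum(exps)


def concept_score(token_probs: Sequence[float]) -> float:
    """Score a concept by its least confident token (assumed aggregation rule)."""
    if not token_probs:
        raise ValueError("a concept must span at least one token")
    return min(token_probs)


def flag_uncertain_concepts(
    concept_token_probs: Dict[str, Sequence[float]], threshold: float = 0.5
) -> List[str]:
    """Return the concepts whose score falls below the (hypothetical) threshold."""
    return [
        concept
        for concept, probs in concept_token_probs.items()
        if concept_score(probs) < threshold
    ]


print(round(softmax_prob([2.0, 1.0, 0.1], index=0), 3))  # 0.659
concepts = {"Eiffel Tower": [0.98, 0.95], "in 1887": [0.91, 0.32]}
print(flag_uncertain_concepts(concepts))  # ['in 1887']
```

Only flagged concepts would go on to the validation-question and retrieval steps, which keeps the more expensive web searches limited to uncertain content.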

Hallucination Mitigation

Once hallucinations are identified, the system instructs the model to repair the hallucinated text, using the retrieved knowledge as evidence. The repaired sentence then takes the place of the original, so subsequent generation is conditioned on corrected rather than hallucinated content, keeping future text coherent and factual.
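
The repair instruction can be assembled from the flagged sentence, the uncertain concepts, and the retrieved evidence. The sketch below is hypothetical: `build_repair_prompt` and its template wording are illustrative, not the prompt used in the paper.

```python
from typing import List


def build_repair_prompt(sentence: str, flagged: List[str], evidence: List[str]) -> str:
    """Assemble an instruction asking an LLM to repair a hallucinated sentence.

    The template wording is hypothetical; only the overall idea (flagged
    sentence + retrieved evidence -> repair instruction) follows the text.
    """
    evidence_block = "\n".join(f"- {snippet}" for snippet in evidence)
    concepts = ", ".join(flagged)
    return (
        "Evidence:\n"
        f"{evidence_block}\n\n"
        f"The sentence below may be incorrect about: {concepts}.\n"
        "Rewrite it so that it agrees with the evidence, changing as little as possible.\n"
        f"Sentence: {sentence}\n"
        "Corrected sentence:"
    )


prompt = build_repair_prompt(
    "The Eiffel Tower was completed in 1887.",
    flagged=["1887"],
    evidence=["The Eiffel Tower was completed in 1889."],
)
print(prompt)
```

Asking for minimal changes is one way to preserve the correct parts of a sentence, which matters when the flag turns out to be a false positive.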

Experimental Setup

Article Generation Task

The paper's primary experiment has GPT-3.5 (text-davinci-003) generate articles on diverse topics. Human annotators evaluate the correctness of the first five sentences of each article, providing both sentence-level and concept-level hallucination annotations.

Detection Performance

Using web search for validation, the detection method achieves a recall of ~88%, outperforming the self-inquiry alternative, in which the model answers the validation questions from its own knowledge without retrieval. Prioritizing recall ensures that most hallucinations are caught, at the cost of more false positives; these are tolerable because the mitigation stage rarely degrades sentences that were flagged incorrectly.
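
The recall/false-positive trade-off can be made concrete by sweeping the uncertainty threshold. The scores and labels below are hypothetical, not data from the paper, and `detection_metrics` is an illustrative helper.

```python
from typing import List, Tuple


def detection_metrics(
    scores: List[float], is_hallucination: List[bool], threshold: float
) -> Tuple[float, float]:
    """Recall and precision when concepts scoring below `threshold` are flagged."""
    flagged = [s < threshold for s in scores]
    pairs = list(zip(flagged, is_hallucination))
    tp = sum(f and h for f, h in pairs)          # flagged and truly hallucinated
    fp = sum(f and not h for f, h in pairs)      # flagged but actually correct
    fn = sum((not f) and h for f, h in pairs)    # hallucinated but missed
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    return recall, precision


# Hypothetical concept scores (lower = less confident) and gold labels.
scores = [0.1, 0.2, 0.3, 0.5, 0.6, 0.7]
labels = [True, True, False, True, False, False]
print(detection_metrics(scores, labels, threshold=0.25))  # (0.6666666666666666, 1.0)
print(detection_metrics(scores, labels, threshold=0.65))  # (1.0, 0.6)
```

Raising the threshold from 0.25 to 0.65 lifts recall to 1.0 but admits two false positives, lowering precision to 0.6. A high-recall setting is reasonable only if the downstream repair step leaves correct sentences largely intact.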

Mitigation Performance

The mitigation technique corrects 57.6% of the correctly detected hallucinations, while causing deterioration in only 3.06% of the false-positive cases it handles. This indicates that the mitigation step is robust: it fixes the majority of true hallucinations while rarely harming text that was already correct.

Additional Studies

To illustrate the wide applicability of the approach, the paper includes studies involving another LLM, Vicuna-13B, and tasks like multi-hop question answering and false premise questions.

- Vicuna-13B Model: The method proves effective in reducing hallucinations from 47.4% to 14.53%, demonstrating the generalizability of the approach across different LLMs.

- Multi-hop Questions: The approach significantly reduces hallucinations on multi-hop bridge questions by applying its active detection and mitigation steps iteratively.

- False Premise Questions: The technique identifies questions that rest on false premises and rectifies them, substantially improving the correctness of the generated answers.
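
To put the Vicuna-13B figures in relative terms, the drop from 47.4% to 14.53% works out as follows (simple arithmetic on the reported numbers):

```latex
\[
\text{relative reduction} = \frac{47.4 - 14.53}{47.4} = \frac{32.87}{47.4} \approx 0.693
\]
```

That is a 32.87 percentage-point absolute drop, or roughly 69% fewer hallucinations relative to the baseline.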

Implications

Practical Implications

The main practical implication is the enhanced reliability of LLMs in real-world applications. By reducing hallucinations, models become more trustworthy sources of informative, accurate text, which is essential for applications such as content creation, automated reporting, and conversational agents.

Theoretical Implications

The research suggests that uncertainty derived from logit outputs can be a reliable signal for detecting hallucinations. This insight can advance the theoretical understanding of LLM behavior and guide future improvements in model architectures and training paradigms that inherently reduce hallucinations.

Future Directions

Potential future developments include:

- Efficiency Improvements: Validating concepts in parallel rather than one at a time could make the approach faster and more computationally efficient.

- Broader Knowledge Integration: Incorporating specialized knowledge bases alongside web search can further enhance validation accuracy.

- Real-Time Applications: The approach could be adapted for real-time use in dynamic environments such as live customer support or automated moderation systems.

Conclusion

This research addresses a vital challenge in the deployment of LLMs by providing a systematic approach for detecting and mitigating hallucinations. By integrating logit-based uncertainty measures and knowledge retrieval, the method significantly enhances the factual accuracy of LLMs' outputs. This advancement is a crucial step towards more reliable and trustworthy AI applications, paving the way for broader adoption and integration of LLMs in various domains.