- The paper reviews key methodologies for enabling time series models to generalize under unseen distribution shifts.

- It analyzes decoupling-based, invariant, and ensemble methods that isolate robust features for improved prediction and evaluation.

- Practical applications in finance, healthcare, and public safety underscore the survey's focus on real-world model adaptability.

Out-of-Distribution Generalization in Time Series: A Survey

Introduction

The paper "Out-of-Distribution Generalization in Time Series: A Survey" presents a comprehensive review of methodologies for time series out-of-distribution (OOD) generalization, abbreviated TS-OOG. Because time series data often exhibit distribution shifts, diverse latent features, and non-stationary dynamics, OOD generalization is particularly challenging for them. The survey is structured around three foundational dimensions: data distribution, representation learning, and OOD evaluation. The authors emphasize the importance of improving the ability of time series models to adapt to unseen data distributions, a capability that is crucial for applications in fields such as finance, healthcare, and industrial fault diagnosis.

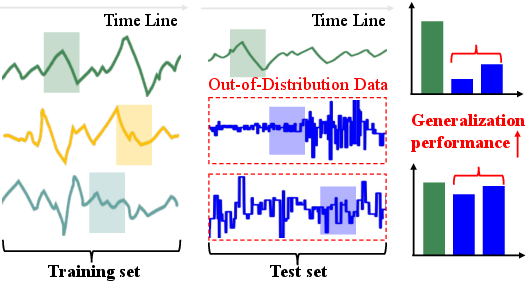

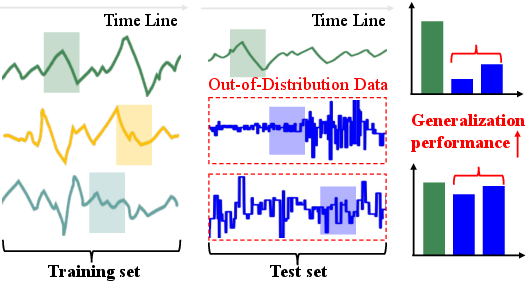

Figure 1: An example of the TS-OOG problem illustrating significant performance degradation on OOD data due to unaddressed distribution shifts.

Data Distribution

Covariate Shift: The distribution of input features changes between training and testing, P_train(X) ≠ P_test(X), while the conditional distribution of labels given inputs, P(Y | X), stays the same. Generative models and data augmentation are commonly used to make models robust to such shifts. For instance, soft Brownian offset sampling combined with autoencoders can generate realistic OOD samples that strengthen classifiers during training [20].
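As a rough illustration of the data-augmentation side, the sketch below applies two common time series augmentations, per-series magnitude scaling and additive Gaussian jitter, so that training covers a wider range of inputs. It is not the soft Brownian offset method of [20]; the function name `augment` and the noise levels `sigma_jitter` and `sigma_scale` are illustrative assumptions.

```python
import numpy as np

def augment(x, n_copies=4, sigma_jitter=0.03, sigma_scale=0.1, seed=0):
    """Return n_copies perturbed versions of a batch of series (hypothetical helper).

    x: array of shape (n_series, length).
    Returns an array of shape (n_copies * n_series, length).
    """
    rng = np.random.default_rng(seed)
    out = []
    for _ in range(n_copies):
        # Magnitude scaling: one factor drawn around 1.0 for each series.
        scale = rng.normal(1.0, sigma_scale, size=(x.shape[0], 1))
        # Additive Gaussian jitter at every time step.
        noise = rng.normal(0.0, sigma_jitter, size=x.shape)
        out.append(x * scale + noise)
    return np.concatenate(out, axis=0)

t = np.linspace(0, 4 * np.pi, 100)
train = np.stack([np.sin(t), np.cos(t)])  # 2 series of length 100
augmented = np.concatenate([train, augment(train)], axis=0)
print(augmented.shape)
```

Augmentation of this kind only widens the input distribution around what was observed; it does not anticipate shifts far from the training data, which is why generative approaches such as [20] aim to synthesize samples further out.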

Concept Shift: The relationship between input features and output labels, P(Y | X), changes, typically because the environment or the underlying dynamics evolve. Contrastive learning frameworks and ensemble learning are important techniques for improving robustness to concept shifts that keep changing over time [31].
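To make the two kinds of shift concrete, the toy simulation below fits a linear model in one regime and evaluates it under a covariate shift (inputs move, mapping unchanged) and a concept shift (inputs unchanged, mapping changes). The generator `make_data` and all parameter values are illustrative assumptions, not taken from the survey.

```python
import numpy as np

rng = np.random.default_rng(42)

def make_data(n, x_mean, slope):
    # Inputs drawn from N(x_mean, 1); labels follow y = slope * x + small noise.
    x = rng.normal(x_mean, 1.0, size=n)
    y = slope * x + rng.normal(0.0, 0.1, size=n)
    return x, y

# Training regime: x ~ N(0, 1), y = 2x + noise.
x_tr, y_tr = make_data(1000, x_mean=0.0, slope=2.0)
w = np.dot(x_tr, y_tr) / np.dot(x_tr, x_tr)  # least-squares slope through the origin

def mse(x, y):
    return float(np.mean((w * x - y) ** 2))

# Covariate shift: P(X) moves (mean 0 -> 3), P(Y | X) unchanged.
x_cov, y_cov = make_data(1000, x_mean=3.0, slope=2.0)
# Concept shift: P(X) unchanged, P(Y | X) changes (slope 2 -> -1).
x_con, y_con = make_data(1000, x_mean=0.0, slope=-1.0)

print("in-distribution MSE:", mse(x_tr, y_tr))
print("covariate-shift MSE:", mse(x_cov, y_cov))
print("concept-shift MSE:  ", mse(x_con, y_con))
```

Because the linear model here is correctly specified, covariate shift alone barely changes the error, while concept shift makes it much larger. With a misspecified model, covariate shift can also degrade accuracy, which is the case augmentation and generative methods target.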

Representation Learning

Representation learning methods focus on extracting features that remain stable across different environments. The paper identifies major approaches including decoupling-based methods, invariant-based methods, and ensemble-based learning.

Decoupling-based Methods: These methods address distribution shifts by separating relevant features from irrelevant ones. Examples include using structured component neural networks (SCNN) for multivariate time series forecasting by decomposing data into structured and unstructured components, thus enhancing robustness and interpretability [35].
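SCNN learns its structured components with neural networks; as a much simpler classical analogue of the same decoupling idea, the sketch below splits a series into trend, seasonal, and residual parts. This is classical additive decomposition, not SCNN, and the helper name `decompose` and the moving-average trend estimator are assumptions chosen for illustration.

```python
import numpy as np

def decompose(y, period):
    """Classical additive decomposition: y = trend + seasonal + residual."""
    n = len(y)
    # Trend: moving average over one period, edges padded with the nearest valid value.
    kernel = np.ones(period) / period
    trend_valid = np.convolve(y, kernel, mode="valid")
    pad_left = (n - len(trend_valid)) // 2
    pad_right = n - len(trend_valid) - pad_left
    trend = np.pad(trend_valid, (pad_left, pad_right), mode="edge")
    # Seasonal: mean detrended value at each phase, centered so one period sums to zero.
    detrended = y - trend
    phase_means = np.array([detrended[i::period].mean() for i in range(period)])
    phase_means -= phase_means.mean()
    seasonal = np.tile(phase_means, n // period + 1)[:n]
    # Residual: whatever the structured components do not explain.
    residual = y - trend - seasonal
    return trend, seasonal, residual

t = np.arange(120)
y = 0.05 * t + np.sin(2 * np.pi * t / 12) + np.random.default_rng(0).normal(0, 0.1, 120)
trend, seasonal, residual = decompose(y, period=12)
```

Separating these parts lets a model treat the slowly varying trend and the periodic pattern differently from the irregular residual, which is where much of the distribution shift often shows up.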

Invariant-based Methods: Invariant risk minimization (IRM) seeks a feature representation on which the same optimal predictor works across all training environments, reducing reliance on spurious correlations that hold only in some of them. Combining invariant learning with domain-invariant learning techniques can further improve robustness under distribution shifts [46].
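The sketch below evaluates an IRMv1-style objective for squared loss, assuming the usual formulation with a fixed scalar "dummy" classifier w = 1.0 placed on top of the model outputs f = Φ(x): the penalty for each environment is the squared gradient of its risk with respect to w at w = 1, which for squared loss can be computed in closed form. The function name and the weight `lam` are illustrative; in practice the objective is minimized over Φ with automatic differentiation, and this sketch only computes its value.

```python
import numpy as np

def irm_v1_objective(preds_by_env, targets_by_env, lam=1.0):
    """IRMv1-style objective for squared loss (hypothetical helper).

    For environment e, R_e(w) = mean((w * f - y)^2) with f = Phi(x).
    Penalty_e = (dR_e/dw at w = 1)^2 = (mean(2 * (f - y) * f))^2.
    Returns (objective, summed risk, summed penalty).
    """
    total_risk, total_penalty = 0.0, 0.0
    for f, y in zip(preds_by_env, targets_by_env):
        f, y = np.asarray(f, float), np.asarray(y, float)
        total_risk += np.mean((f - y) ** 2)
        grad_w = np.mean(2.0 * (f - y) * f)  # derivative of R_e w.r.t. w, evaluated at w = 1
        total_penalty += grad_w ** 2
    return total_risk + lam * total_penalty, total_risk, total_penalty

y1, y2 = np.array([1.0, 2.0, 3.0]), np.array([-1.0, 0.5, 2.0])
_, _, pen_ok = irm_v1_objective([y1, y2], [y1, y2])       # same predictor optimal in both envs
_, _, pen_bad = irm_v1_objective([2 * y1, y2], [y1, y2])  # rescaling w would help env 1
print(pen_ok, pen_bad)
```

A zero penalty means no environment would benefit from rescaling the shared predictor, which is the invariance condition IRM tries to enforce.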

Ensemble-based Methods: Ensemble-based methods combine predictions from multiple models, which can be homogeneous (the same model type trained differently) or heterogeneous (different model types), to improve generalization. By integrating weak learners with complementary strengths, they reduce both variance and bias [60, 64].
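A minimal heterogeneous ensemble can be sketched with three simple forecasters whose predictions are averaged with fixed weights. The member models (naive, seasonal naive, moving average) and their parameters are assumptions chosen for illustration; the surveyed methods use far richer learners and often learned weights.

```python
import numpy as np

def naive(history, h):
    # Repeat the last observed value.
    return np.full(h, history[-1])

def seasonal_naive(history, h, period=7):
    # Repeat the last full season.
    last = history[-period:]
    return np.array([last[i % period] for i in range(h)])

def moving_average(history, h, window=7):
    # Repeat the mean of the last `window` values.
    return np.full(h, history[-window:].mean())

def ensemble_forecast(history, h, members=(naive, seasonal_naive, moving_average), weights=None):
    preds = np.stack([m(history, h) for m in members])  # shape (n_members, h)
    if weights is None:
        weights = np.full(len(members), 1.0 / len(members))  # equal weighting
    return np.asarray(weights) @ preds

history = np.arange(14, dtype=float)
print(ensemble_forecast(history, 3))
```

Each member fails under a different kind of change (level jumps, seasonal drift, noise), so averaging tends to be less fragile than any single member.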

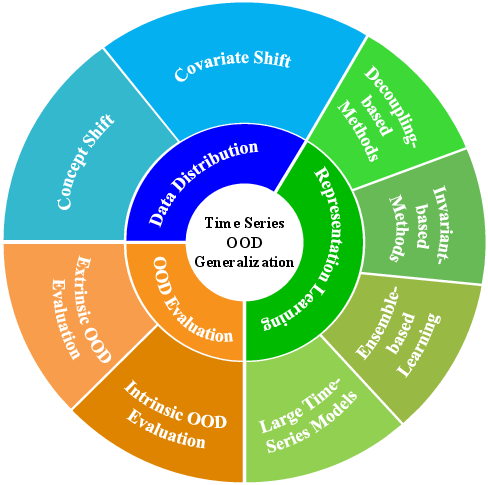

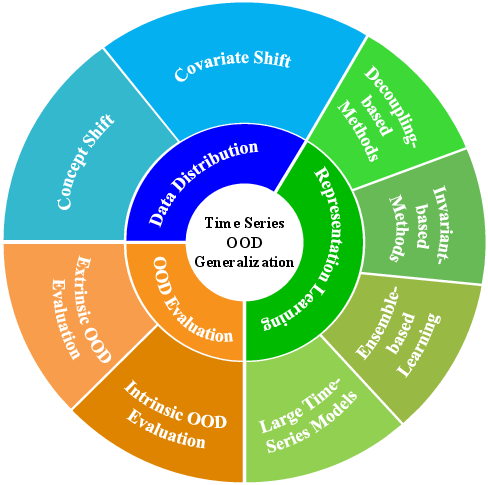

Figure 2: A comprehensive taxonomy of TS-OOG methods outlining approaches like covariate shift adaptation and invariant learning.

OOD Evaluation

Evaluating OOD performance effectively is crucial. Evaluations can be extrinsic, using OOD test data with real or pseudo-labels, or intrinsic, relying on properties of the model itself to infer its generalization capability.

Extrinsic Evaluation: Methods such as synthetic data generation and pseudo-labeling are pivotal for assessing performance on unlabeled OOD data. For instance, quasi-verifiable evaluations employ simulators to generate test data under varying conditions to measure robustness [87].
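The simulator-based idea can be sketched under strong simplifying assumptions: an AR(1) process stands in for the simulator, the shift is a change in its coefficient, and performance is one-step-ahead mean squared error. All function names and parameter values are hypothetical.

```python
import numpy as np

def simulate_ar1(phi, n, rng, noise=0.1):
    # Hypothetical simulator: AR(1) process x_t = phi * x_{t-1} + eps_t.
    x = np.zeros(n)
    for t in range(1, n):
        x[t] = phi * x[t - 1] + rng.normal(0.0, noise)
    return x

def fit_ar1(x):
    # Least-squares estimate of phi from consecutive pairs.
    return np.dot(x[:-1], x[1:]) / np.dot(x[:-1], x[:-1])

def one_step_mse(phi_hat, x):
    return float(np.mean((phi_hat * x[:-1] - x[1:]) ** 2))

rng = np.random.default_rng(0)
phi_hat = fit_ar1(simulate_ar1(0.5, 2000, rng))  # training regime: phi = 0.5

# Evaluate on simulated test regimes that move progressively away from training.
results = {}
for phi_test in [0.5, 0.7, 0.9, -0.5]:
    results[phi_test] = one_step_mse(phi_hat, simulate_ar1(phi_test, 2000, rng))
    print(f"phi_test={phi_test:+.1f}  MSE={results[phi_test]:.4f}")
```

Because the simulator controls the shift, the resulting error curve can be read as a robustness profile even when no labeled real-world OOD data exist.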

Intrinsic Evaluation: Distributional robustness, feature smoothness, and the extraction of invariant features are intrinsic properties from which a model's generalization capability can be inferred without explicit OOD test data. Causal inference and contrastive learning help keep internal representations stable under external perturbations [45, 42].
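One way to probe feature smoothness without any OOD labels is a finite-difference sensitivity estimate: perturb an input slightly and measure how much the output moves relative to the perturbation. The sketch below is a generic proxy of that kind, not a metric defined in the survey; `smoothness_score`, `eps`, and `n_trials` are hypothetical.

```python
import numpy as np

def smoothness_score(model, x, eps=1e-2, n_trials=50, seed=0):
    """Mean ratio ||model(x + d) - model(x)|| / ||d|| over small random d.

    Lower values suggest a locally smoother, less perturbation-sensitive model.
    """
    rng = np.random.default_rng(seed)
    base = model(x)
    ratios = []
    for _ in range(n_trials):
        d = rng.normal(0.0, eps, size=x.shape)  # small random perturbation
        ratios.append(np.linalg.norm(model(x + d) - base) / np.linalg.norm(d))
    return float(np.mean(ratios))

x = np.ones(10)
smooth = smoothness_score(lambda v: 0.5 * v, x)
rough = smoothness_score(lambda v: 5.0 * v, x)
print(smooth, rough)
```

For these linear toy models the score recovers the scaling factor; for real networks it gives only a local, input-dependent estimate.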

Applications

TS-OOG methodologies find applications in diverse fields requiring high adaptability and robustness:

- Transportation: TS-OOG models enhance decision-making in autonomous vehicles and smart city infrastructures where sensor data is prone to variations due to external conditions like weather [44].

- Environment: Models that generalize across temporal distribution shifts improve environmental monitoring tasks such as air quality prediction, remote sensing, and energy consumption forecasting [87, 93].

- Public Health: In healthcare, models for drug discovery and mental health monitoring benefit from generalizing across evolving data distributions, providing early insights into patient conditions [58].

- Public Safety: Cybersecurity applications use TS-OOG methods to detect anomalies in surveillance and network data, improving detection of potential threats under distribution drifts [88].

Challenges and Future Directions

Dynamic Distribution Shifts: Continual adaptation mechanisms are essential for handling gradual changes and dynamic distribution shifts, requiring advances in online learning algorithms to efficiently detect and adapt to new data distributions [147, 149].
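As one concrete instance of online shift detection, the classical Page-Hinkley test flags an upward change in the mean of a stream by tracking the cumulative deviation from the running mean. It is offered as a familiar baseline, not as a method the survey prescribes; the class name and the `delta` and `threshold` values are illustrative assumptions.

```python
import numpy as np

class PageHinkley:
    """Page-Hinkley test for an increase in the mean of a stream."""

    def __init__(self, delta=0.005, threshold=5.0):
        self.delta = delta          # tolerated drift magnitude
        self.threshold = threshold  # alarm level
        self.n = 0
        self.mean = 0.0
        self.cum = 0.0
        self.cum_min = 0.0

    def update(self, x):
        self.n += 1
        self.mean += (x - self.mean) / self.n  # running mean including x
        self.cum += x - self.mean - self.delta
        self.cum_min = min(self.cum_min, self.cum)
        return self.cum - self.cum_min > self.threshold  # True signals drift

rng = np.random.default_rng(1)
stream = np.concatenate([rng.normal(0.0, 0.05, 500), rng.normal(1.0, 0.05, 500)])
detector = PageHinkley(delta=0.005, threshold=5.0)
first_alarm = next((i for i, x in enumerate(stream) if detector.update(x)), None)
print(first_alarm)
```

Detection is only half of continual adaptation: after an alarm, a system would still need a policy for retraining or reweighting, which is where the online learning advances mentioned above come in.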

Invariant Representation Construction: Better techniques for constructing invariant representations, such as feature-separation strategies grounded in causal inference, can improve generalization by capturing patterns that remain stable across distribution shifts [179].

Large-Scale Time Series Models: A key direction is building unified frameworks with cross-domain and cross-modal capabilities and extending the usefulness of large language models (LLMs) to diverse time series tasks [183].

Uncertainty Quantification and Benchmark OOD Evaluation: Developing comprehensive, adaptive OOD evaluation systems that quantify uncertainties while assessing robustness against unseen data distributions will bolster model trustworthiness [104, 184].
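Split conformal prediction is a widely used, model-agnostic way to attach uncertainty to forecasts, shown here as a reference point rather than as a method from the survey. The sketch assumes a held-out calibration set of predictions and targets; the function name and `alpha` are illustrative.

```python
import numpy as np

def split_conformal_interval(cal_preds, cal_targets, test_preds, alpha=0.1):
    """Symmetric intervals from absolute calibration residuals (hypothetical helper).

    Marginal coverage >= 1 - alpha holds only if calibration and test points
    are exchangeable, which distribution shift generally violates.
    """
    scores = np.sort(np.abs(np.asarray(cal_targets, float) - np.asarray(cal_preds, float)))
    n = len(scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))  # finite-sample corrected rank
    q = np.inf if k > n else scores[k - 1]   # k-th smallest residual
    test_preds = np.asarray(test_preds, float)
    return test_preds - q, test_preds + q

lo, hi = split_conformal_interval(np.zeros(10), np.arange(1, 11), np.array([0.0, 5.0]), alpha=0.1)
print(lo, hi)
```

The exchangeability assumption is exactly what OOD data break, so intervals calibrated in-distribution can under-cover after a shift; closing that gap is part of why uncertainty-aware OOD evaluation remains open.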

Explainable AI: Balancing robustness with interpretability is critical, especially in high-stakes domains. Lightweight, explainable models tailored for complex time-series data require further development to ensure transparent decision-making [204].

Conclusion

The survey serves as a detailed guideline for researchers, synthesizing existing methodologies, challenges, and future research directions in time series OOD generalization. By elucidating its core dimensions of data distribution, representation learning, and OOD evaluation, it aims to promote the development of robust, adaptive systems capable of tackling complex real-world distribution shifts in diverse applications.