- The paper introduces MAD-MAX, a novel red teaming framework that automates attack style clustering to efficiently generate adversarial exploits.

- It employs multi-style merging combined with cosine similarity filtering to integrate diverse jailbreak strategies and enhance cost-efficiency.

- Empirical evaluations demonstrate a 97% attack success rate on GPT-4o and Gemini-Pro, highlighting its scalability for real-world LLM security audits.

MAD-MAX: Modular And Diverse Malicious Attack MiXtures for Automated LLM Red Teaming

Introduction

The paper introduces MAD-MAX, an automated red-teaming approach for LLMs designed to address vulnerabilities that arise as models are frequently updated or fine-tuned. The method improves on existing frameworks such as Tree of Attacks with Pruning (TAP) by offering a more modular and diverse strategy for generating adversarial attacks. These improvements matter because LLMs are increasingly integrated into real-world applications, where robust guardrails against malicious exploits are critical.

Methodology

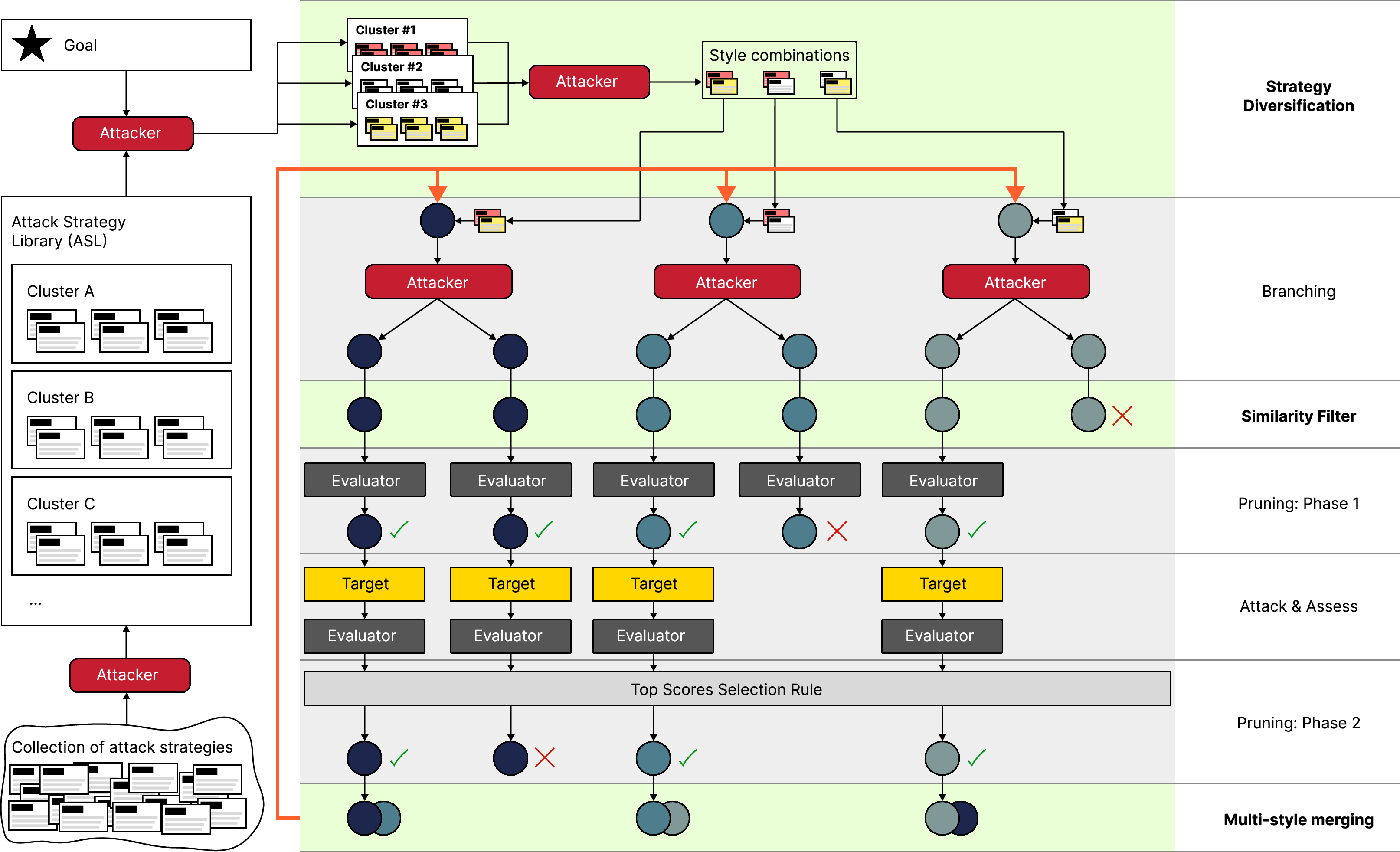

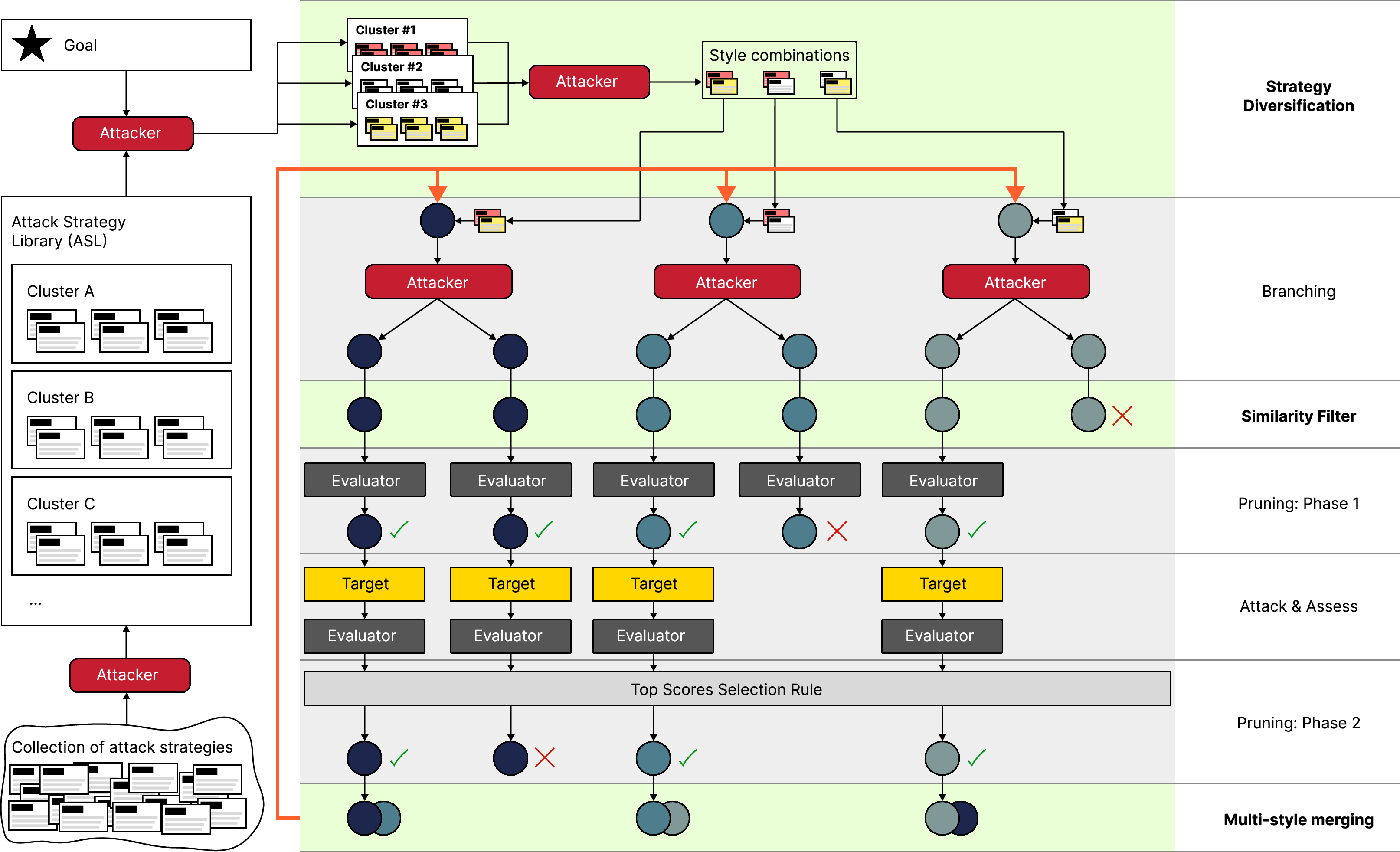

MAD-MAX is distinguished by several key features: a modular attack style library (ASL), automatic clustering using LLM agents, and multi-style merging mechanisms that collectively enhance the diversity and effectiveness of jailbreak attempts.

Figure 1: Illustration of Modular And Diverse Malicious Attack MiXtures (MAD-MAX) for Automated LLM Red Teaming.

Attack Style Library and Clustering

The ASL component automates the categorization of existing jailbreak strategies into clusters and can be extended dynamically as new strategies are identified. A two-step selection process reduces the number of tokens that must be processed and lets the system focus on the most promising attack clusters. This modularity also allows practitioners to integrate new attack vectors without extensive manual reconfiguration.
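As a rough illustration of how such a library could be organized, the sketch below stores style names under cluster names and performs a two-step selection. It is not the paper's implementation: the class `AttackStyleLibrary`, its methods, the `cluster_scores` input, and the `top_k` parameter are all hypothetical, and the sketch assumes that step one picks clusters and step two picks styles within them. In MAD-MAX the clustering is done by LLM agents; here the cluster assignment is simply supplied by the caller.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AttackStyleLibrary:
    """Hypothetical container mapping cluster names to attack-style names."""

    clusters: Dict[str, List[str]] = field(default_factory=dict)

    def add_style(self, cluster: str, style: str) -> None:
        # A new style either extends an existing cluster or creates a new one,
        # so the library grows without any other reconfiguration.
        styles = self.clusters.setdefault(cluster, [])
        if style not in styles:
            styles.append(style)

    def select(self, cluster_scores: Dict[str, float], top_k: int = 2) -> List[str]:
        # Step 1 (assumed): keep only the top_k clusters by relevance score.
        ranked = sorted(
            self.clusters,
            key=lambda name: cluster_scores.get(name, 0.0),
            reverse=True,
        )
        chosen = ranked[:top_k]
        # Step 2 (assumed): consider only the styles inside the chosen clusters,
        # so styles from discarded clusters are never processed.
        return [style for name in chosen for style in self.clusters[name]]
```

Because step two only ever sees styles from the chosen clusters, the amount of text passed downstream scales with `top_k` rather than with the size of the whole library.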

Multi-Style Merging and Diversity

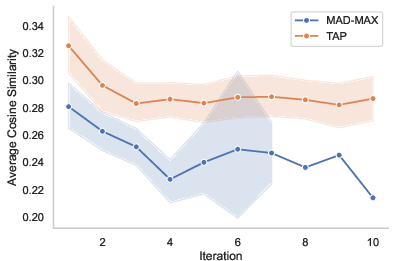

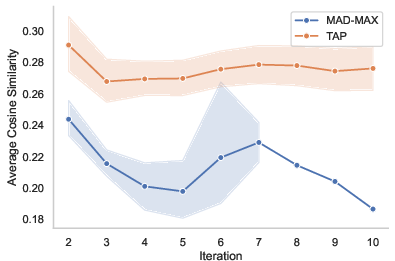

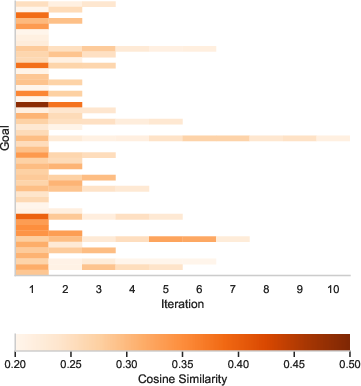

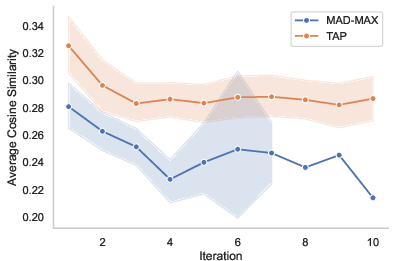

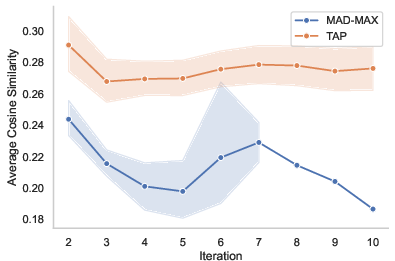

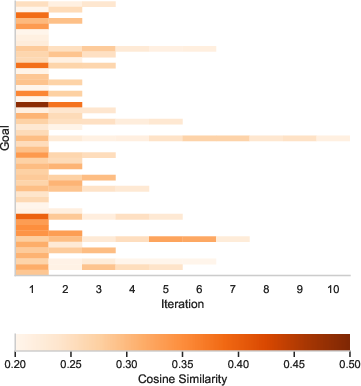

A core feature of MAD-MAX is multi-style merging, which combines successful strategies from different attack branches to produce new attack variants. This addresses a limitation of TAP, whose attack strategies lack diversity. The method also applies cosine similarity filtering to remove redundant prompts, which saves computational resources.

Figure 2: Average cosine similarity per method on 50 adversarial goals with GPT-4o as target.
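The cosine similarity filtering described above can be sketched as a greedy pass over prompt embeddings. This is a minimal illustration, not the paper's code: the function names, the `threshold` value of 0.9, and the assumption that each prompt has already been embedded as a numeric vector by some embedding model are all placeholders.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def filter_redundant(
    embeddings: Sequence[Sequence[float]], threshold: float = 0.9
) -> List[int]:
    """Return indices of prompts to keep (threshold is an assumed value).

    A prompt is kept only if its similarity to every previously kept
    prompt is below the threshold, so near-duplicates are dropped.
    """
    kept: List[int] = []
    for i, emb in enumerate(embeddings):
        if all(cosine_similarity(emb, embeddings[j]) < threshold for j in kept):
            kept.append(i)
    return kept
```

For example, `filter_redundant([[1.0, 0.0], [0.99, 0.05], [0.0, 1.0]])` keeps the first and third vectors and drops the second as a near-duplicate of the first. A lower threshold filters more aggressively, trading prompt coverage for fewer target queries.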

Performance Evaluation

Empirical evaluations against baselines such as TAP demonstrate MAD-MAX's superior performance, with a 97% attack success rate (ASR) on GPT-4o and Gemini-Pro. MAD-MAX also needs markedly fewer queries to achieve a successful jailbreak, which underscores the cost-efficiency of the method.
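To make the two reported quantities concrete, the sketch below computes an attack success rate and the mean number of queries per successful jailbreak from per-goal records. The `GoalResult` record and both function names are hypothetical, and the summary does not state how the paper judges a jailbreak or counts queries, so this only shows the arithmetic under those assumptions.

```python
from typing import List, NamedTuple, Optional


class GoalResult(NamedTuple):
    """Hypothetical per-goal record from one red-teaming run."""

    goal_id: str
    jailbroken: bool  # whether any attempt for this goal succeeded
    queries: int      # target-model queries spent on this goal


def attack_success_rate(results: List[GoalResult]) -> float:
    """Fraction of goals for which at least one attempt succeeded."""
    if not results:
        raise ValueError("results must not be empty")
    return sum(1 for r in results if r.jailbroken) / len(results)


def mean_queries_to_success(results: List[GoalResult]) -> Optional[float]:
    """Average queries over successful goals only; None if none succeeded."""
    counts = [r.queries for r in results if r.jailbroken]
    if not counts:
        return None
    return sum(counts) / len(counts)
```

Reporting both numbers together matters because a method can reach a high ASR simply by spending more queries; the efficiency claim rests on the second metric.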

Experimental Setup

MAD-MAX was tested using a standardized set of adversarial goals that span a broad range of potential misuse scenarios. The setup was designed to closely replicate real-world conditions wherein LLMs must resist a spectrum of malicious inputs. The framework was validated using advanced models from OpenAI and Google, with a focus on balancing attack efficacy with practicality in computational expense.

Figure 3: Average cosine similarity among prompts in each iteration per goal with GPT-4o as target.

Discussion

The results highlight the robustness of MAD-MAX in diversifying attack strategies and lowering query costs, both of which are critical for scalable AI security audits. Its extensibility and efficacy in a rapidly evolving threat landscape position it as a viable alternative to traditional red-teaming methodologies. The method's adaptability could also be leveraged in continuous learning frameworks, where improvements to the attack library translate directly into stronger model defenses.

Conclusion

MAD-MAX offers significant advancements in automated red teaming for LLMs. Its modular framework and enhanced attack diversification strategy improve upon existing methodologies and provide a foundation for future research into adaptive security testing mechanisms. By addressing the limited attack diversity of current approaches, it helps developers harden models against emerging threats.