LongBench v2: Advancing Evaluations in Long-Context LLMs

The paper "LongBench v2: Towards Deeper Understanding and Reasoning on Realistic Long-context Multitasks" addresses a gap in LLM evaluation by introducing a benchmark built specifically to test how well models handle long-context problems. LongBench v2 evaluates models on realistic multitask settings that require deep understanding and reasoning over long inputs, with context lengths ranging from 8,000 to 2 million words.

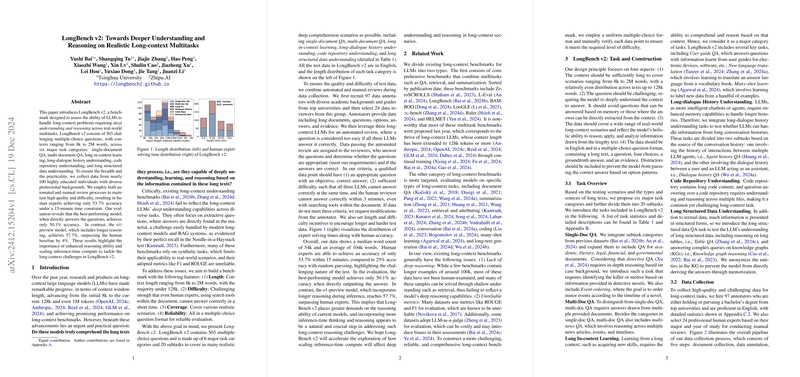

LongBench v2 consists of 503 challenging multiple-choice questions grouped into six primary task types: single-document QA, multi-document QA, long in-context learning, long-dialogue history understanding, code repository understanding, and long structured data understanding. To ensure breadth and realism, the data was collected from nearly 100 highly educated individuals with diverse professional backgrounds. Both automated and manual review processes were used to maintain quality and difficulty. As a result, even human experts reach only 53.7% accuracy under a 15-minute time limit, which indicates how demanding the tasks are.

The headline result is that the best-performing model, o1-preview, achieved 57.7% accuracy, surpassing the human baseline of 53.7% by 4 percentage points. This suggests that LLMs can exceed human performance on some long-context reasoning tasks when inference-time compute is scaled up. By contrast, the best model answering directly, without extended reasoning, reached only 50.1%, and many models scored well below that. This wide spread in performance underscores the importance of further research into scaling inference-time reasoning.

LongBench v2's design contrasts sharply with existing benchmarks, which often focus on extractive questions and rely on synthetic tasks. Those limitations can lead to overestimating a model's ability to comprehend and reason over long contexts. LongBench v2 instead uses a multiple-choice format, so every answer can be scored as simply right or wrong. This avoids the unreliability of overlap-based metrics such as F1 or ROUGE, which are common in other benchmarks.
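To make the scoring argument concrete, the following is a minimal sketch of multiple-choice accuracy, not the benchmark's official evaluation code. It assumes four options labeled A to D and a response phrased like "answer is (B)"; the function names `extract_choice` and `accuracy` and the regular expression are hypothetical, chosen only for illustration.

```python
import re
from typing import List, Optional

# Assumption: options are labeled A-D and the model states its choice
# in a phrase like "answer is (B)" or "answer is C".
CHOICE_PATTERN = re.compile(r"answer is \(?([A-D])(?![A-Za-z])")


def extract_choice(response: str) -> Optional[str]:
    """Return the chosen letter, or None if no choice can be parsed."""
    match = CHOICE_PATTERN.search(response)
    return match.group(1) if match else None


def accuracy(responses: List[str], gold: List[str]) -> float:
    """Fraction of responses whose parsed choice equals the gold letter.

    An unparseable response counts as wrong.
    """
    if len(responses) != len(gold):
        raise ValueError("responses and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(extract_choice(r) == g for r, g in zip(responses, gold))
    return correct / len(gold)
```

Because each item is either correct or not, there is no partial-credit ambiguity of the kind that token-overlap metrics introduce. Under the four-option assumption, random guessing would be expected to score about 25%, which gives a floor against which reported accuracies can be read.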

The way LongBench v2's tasks were curated warrants close examination. The tasks are designed to stress capabilities beyond simple retrieval and to probe genuine comprehension and reasoning over long inputs. For example, multi-document QA tasks test a model's ability to synthesize information from multiple sources, while long-dialogue history tasks evaluate a model's memory and understanding across sequential exchanges.

From both a practical and a theoretical standpoint, this benchmark has significant implications for the future development of LLMs. The results point to the importance of improving test-time reasoning and suggest that retrieval-augmented generation (RAG) could be used more effectively across different context lengths. The findings also underscore the need for continued research into keeping reasoning quality stable as context length grows, which remains a challenge, as shown by the gap in performance between shorter and longer contexts.
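One way to examine the gap between shorter and longer contexts is to break accuracy down by context length. The sketch below is illustrative only: the bucket boundaries (short under 32,000 words, medium under 128,000 words, long above that), the record fields `length`, `prediction`, and `answer`, and the function names are all assumptions rather than details taken from the text above.

```python
from collections import defaultdict
from typing import Dict, List

# Assumed bucket boundaries, in words: (name, inclusive lower, exclusive upper).
BUCKETS = [
    ("short", 0, 32_000),
    ("medium", 32_000, 128_000),
    ("long", 128_000, float("inf")),
]


def bucket_for(length_words: int) -> str:
    """Map a context length in words to its bucket name."""
    for name, lower, upper in BUCKETS:
        if lower <= length_words < upper:
            return name
    raise ValueError(f"invalid context length: {length_words}")


def accuracy_by_length(records: List[dict]) -> Dict[str, float]:
    """Compute accuracy separately for each context-length bucket.

    Each record is a dict with hypothetical keys:
    'length' (words), 'prediction' (letter), and 'answer' (gold letter).
    Buckets with no records are omitted from the result.
    """
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for record in records:
        bucket = bucket_for(record["length"])
        totals[bucket] += 1
        hits[bucket] += int(record["prediction"] == record["answer"])
    return {bucket: hits[bucket] / totals[bucket] for bucket in totals}
```

Reporting per-bucket accuracy rather than a single overall number makes it visible whether a model's reasoning degrades as inputs get longer, which an aggregate score can hide.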

In conclusion, LongBench v2 provides an essential tool for gauging the current state of long-context LLMs and sets a standard that future work can build on, encouraging advances in both model architecture and evaluation strategy. It paves the way for models that maintain, or even improve, their reasoning as input length increases, which is crucial for building AI systems that handle real-world information complexity efficiently and accurately.