Open-RAG: Enhanced Retrieval-Augmented Reasoning with Open-Source LLMs

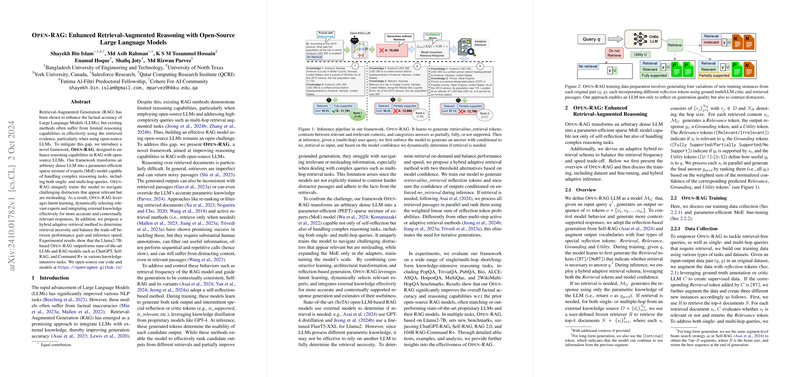

This essay examines a paper detailing Open-RAG, a framework aimed at enhancing retrieval-augmented reasoning using open-source LLMs. The approach converts a dense LLM into a parameter-efficient sparse mixture-of-experts (MoE) model that can handle complex reasoning tasks, including single- and multi-hop queries.

Overview

Retrieval-Augmented Generation (RAG) techniques improve the factual accuracy of LLMs by combining retrieval mechanisms with language modeling. However, existing methods often show limited reasoning capabilities, particularly with open-source LLMs. Open-RAG addresses these gaps in three ways: it uses contrastive learning to help the model handle misleading but relevant-seeming information, converts dense architectures into MoE models, and implements hybrid adaptive retrieval based on model confidence.

Key Contributions

- MoE Transformation: Open-RAG transforms dense LLMs into sparse MoE models using parameter-efficient fine-tuning (PEFT), enhancing their ability to handle multi-hop reasoning by dynamically activating relevant experts. Because only a subset of experts is active for a given input, the transformation scales reasoning capacity while keeping computation efficient.

- Contrastive Learning: The framework integrates contrastive learning to help models distinguish between relevant and distractor content during multi-hop retrieval tasks. This approach addresses challenges faced by traditional RAG models in accurately using retrieved evidence.

- Adaptive Retrieval: Open-RAG proposes a novel hybrid adaptive retrieval mechanism, leveraging both reflection tokens and model confidence to dynamically determine whether retrieval is necessary. Retrieving only when needed lets the system trade off answer accuracy against inference speed.
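
To make the MoE transformation in the first contribution more concrete, here is a minimal sketch of sparse top-k expert routing, written in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the function names (`top_k_route`, `moe_adapter_layer`), the choice of k = 2, and the idea of adding expert outputs on top of a frozen dense feed-forward layer are all assumptions made for this example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized over the selected experts only, so they sum to 1.
    """
    ranked = sorted(
        range(len(router_logits)),
        key=lambda i: router_logits[i],
        reverse=True,
    )
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_adapter_layer(hidden, frozen_ffn, experts, router, k=2):
    """Hypothetical layer: frozen dense FFN output plus a sparse expert mix.

    hidden     -- list of floats (one token's hidden state)
    frozen_ffn -- callable standing in for the original dense layer
    experts    -- list of callables standing in for small trainable experts
    router     -- callable mapping hidden -> one logit per expert
    """
    out = frozen_ffn(hidden)
    # Only the k selected experts are evaluated; the rest stay idle.
    for idx, weight in top_k_route(router(hidden), k=k):
        delta = experts[idx](hidden)
        out = [o + weight * d for o, d in zip(out, delta)]
    return out
```

The point of the sketch is the cost profile: the number of experts can grow without increasing per-token compute, because each token only pays for the k experts the router selects.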

Evaluation and Results

The paper evaluates Open-RAG across a range of single- and multi-hop tasks. The reported results show improvements over existing open-source and proprietary models. Key findings include:

- Performance Gains: Open-RAG often outperforms both proprietary (e.g., ChatGPT) and open-source (e.g., Alpaca, Llama2) RAG models, particularly in complex multi-hop settings such as HotpotQA, MuSiQue, and 2WikiMultihopQA.

- Retrieval Efficiency: The hybrid adaptive retrieval mechanism successfully balances performance improvements with retrieval frequency, demonstrating a more effective determination of when retrieval is necessary.
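
The confidence-based part of this decision can be sketched as follows. This is a rough illustration, not the paper's exact procedure: it assumes the model first drafts an answer without retrieval, that per-token probabilities of that draft are available, and that confidence is summarized either as the minimum token probability or as the geometric mean. The function names and the default threshold of 0.5 are hypothetical.

```python
import math


def confidence_scores(token_probs):
    """Return (min_prob, geometric_mean_prob) for a drafted answer.

    token_probs -- probabilities the model assigned to each generated token.
    """
    if not token_probs:
        raise ValueError("token_probs must be non-empty")
    min_p = min(token_probs)
    if min_p <= 0.0:
        # A zero-probability token drives both scores to zero.
        return 0.0, 0.0
    mean_log = sum(math.log(p) for p in token_probs) / len(token_probs)
    return min_p, math.exp(mean_log)


def should_retrieve(token_probs, threshold=0.5, use_min=True):
    """Trigger retrieval when the draft's confidence falls below threshold.

    use_min=True is the stricter rule: a single uncertain token is enough
    to trigger retrieval. use_min=False averages uncertainty over the draft.
    """
    min_p, mean_p = confidence_scores(token_probs)
    score = min_p if use_min else mean_p
    return score < threshold
```

For a draft with token probabilities `[0.9, 0.8, 0.2]`, the minimum-probability rule triggers retrieval at a threshold of 0.5, while the geometric-mean rule (about 0.52) does not. Raising the threshold increases retrieval frequency and lowering it favors speed, which is the trade-off described above.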

Implications and Future Directions

Open-RAG's advancements hold significant implications for the development of more robust RAG systems using open-source LLMs. By integrating MoE architectures and contrastive learning, the framework enhances the reasoning capabilities of LLMs, which can be especially beneficial for domains requiring complex factual reasoning.

Future research may explore the scalability of Open-RAG with newer LLM architectures, potentially extending the framework's applicability across various domains. Additionally, further studies could investigate the integration of Open-RAG with domain-specific knowledge bases to refine retrieval capabilities.

In summary, Open-RAG represents a significant step forward in retrieval-augmented reasoning for open-source LLMs, providing a foundation for continued advancements and applications in the field of AI-driven knowledge extraction and reasoning.