- The paper provides a comprehensive framework integrating planning, memory, tools, and control flow to bridge research and deployment of LLM agents.

- It details methodologies like explicit planning, retrieval augmented generation, and structured tool invocation to enhance performance and error handling.

- The research emphasizes strategic model selection and control flow management to create robust and autonomous LLM systems for practical applications.

Practical Considerations for Agentic LLM Systems

Introduction

The paper "Practical Considerations for Agentic LLM Systems" (2412.04093) addresses the practical challenges of deploying LLMs as autonomous agents in real-world applications. LLMs show emergent abilities across many natural language domains, but their unpredictability leaves a significant gap between research and practical deployment. The paper offers actionable insights and organizes the relevant research into four components (Planning, Memory, Tools, and Control Flow) to aid in constructing robust LLM agents.

Figure 1: A typical application-focused depiction of LLM agents.

Planning in LLM Agents

Planning is a fundamental component of agentic systems, enabling LLMs to handle complex tasks through manageable steps. Despite numerous anecdotal reports of successful planning, research indicates that LLMs are not inherently adept at it. The paper categorizes planning methodologies into implicit and explicit forms. Implicit planning relies on the LLM to decide the immediate next step without formalizing a plan, while explicit planning creates a multi-step plan that is executed in stages. Effective planning in LLM systems often involves decomposing tasks into units small enough for an LLM to handle and adding feedback loops that check plan adherence and handle errors.
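The explicit planning loop described above can be sketched as follows. This is a minimal illustration rather than the paper's implementation: `run_explicit_plan`, `Step`, and the three `demo_*` functions are hypothetical names, and the demo functions are deterministic stand-ins for what would be LLM calls in a real agent.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    description: str
    result: Optional[str] = None


def run_explicit_plan(
    task: str,
    make_plan: Callable[[str, List[str]], List[str]],
    execute_step: Callable[[str], str],
    step_ok: Callable[[str, str], bool],
    max_replans: int = 2,
) -> List[Step]:
    """Draft a multi-step plan, execute it in stages, and re-plan on failure."""
    feedback: List[str] = []  # outcome log handed back to the planner
    pending = [Step(d) for d in make_plan(task, feedback)]
    completed: List[Step] = []
    replans = 0
    while pending:
        step = pending.pop(0)
        step.result = execute_step(step.description)
        if step_ok(step.description, step.result):
            feedback.append(f"done: {step.description}")
            completed.append(step)
            continue
        if replans == max_replans:
            raise RuntimeError(f"giving up on step: {step.description}")
        replans += 1
        feedback.append(f"failed: {step.description} ({step.result})")
        # Feedback loop: ask the planner for a fresh plan for the remaining work.
        pending = [Step(d) for d in make_plan(task, feedback)]
    return completed


# Deterministic stand-ins for LLM calls, used only for illustration.
def demo_make_plan(task: str, feedback: List[str]) -> List[str]:
    if any(entry.startswith("failed") for entry in feedback):
        return ["search pescetarian recipes", "summarize results"]
    return ["search recipes", "summarize results"]


def demo_execute(description: str) -> str:
    if description == "search recipes":
        return "error: query too broad"
    return f"ok: {description}"


def demo_step_ok(description: str, result: str) -> bool:
    return not result.startswith("error")


steps = run_explicit_plan("plan dinner", demo_make_plan, demo_execute, demo_step_ok)
print([s.description for s in steps])
```

In this sketch the planner sees the outcome of every step, so a failed step triggers a new plan instead of blind continuation. The `max_replans` cap keeps the loop from running indefinitely.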

Memory: RAG and Long-Term Memory

Memory in LLM agents can be augmented through Retrieval Augmented Generation (RAG) and long-term memory mechanisms. RAG provides grounded, timely, and aligned knowledge to the LLM, thereby reducing hallucinations and filling knowledge gaps by supplying relevant context. Long-term memory mechanisms store useful information over time, enhancing the agent's ability to remember user preferences or repeated queries. The paper emphasizes the need for selective memory storage to ensure relevance and applicability over time and outlines approaches to extracting, storing, and utilizing long-term memory.
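A toy sketch of selective long-term storage combined with retrieval into a prompt is shown below. It assumes a bag-of-words cosine similarity in place of a real embedding model, and a caller-supplied `importance` score in place of an LLM-based relevance judgment. `MemoryStore`, `build_prompt`, and the stored facts are all made up for illustration.

```python
import math
import re
from collections import Counter
from typing import List


def _bow(text: str) -> Counter:
    """Lowercased bag-of-words vector; a stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class MemoryStore:
    def __init__(self) -> None:
        self._items: List[str] = []

    def remember(self, text: str, importance: float, threshold: float = 0.5) -> bool:
        """Selective storage: keep only important, non-duplicate items."""
        if importance < threshold or text in self._items:
            return False
        self._items.append(text)
        return True

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        """Return up to k stored items that share vocabulary with the query."""
        q = _bow(query)
        scored = [(_cosine(q, _bow(item)), item) for item in self._items]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [item for _, item in scored[:k]]


def build_prompt(query: str, store: MemoryStore, k: int = 2) -> str:
    """Prepend retrieved context to the user's question."""
    context = store.retrieve(query, k)
    lines = ["Context:"] + [f"- {c}" for c in context] + ["", f"Question: {query}"]
    return "\n".join(lines)


store = MemoryStore()
store.remember("The user is pescetarian and does not eat meat.", importance=0.9)
store.remember("The user said hello.", importance=0.1)  # below threshold, dropped
store.remember("The user prefers dinner recipes under 30 minutes.", importance=0.8)
print(build_prompt("What dinner can the user eat?", store))
```

A production system would typically replace `_bow` and `_cosine` with an embedding model and a vector index, but the flow stays the same: filter what gets stored, retrieve by similarity, and place the results in the prompt.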

Figure 2: An example scenario featuring a pescetarian meal assistant LLM agent.

Tools in LLM Agents

Tools enable LLM agents to interact with their environments beyond textual exchange. Each tool should be clearly defined, with an explicit method of invocation such as a JSON schema or function signature, so that the agent can call it reliably. Explicit tool usage, where the agent invokes tools as part of its output, is contrasted with implicit usage, where tools are triggered by system events or conditions. Managing an expanding toolset involves grouping tools into distinct functional categories, or toolkits, so that only the relevant tools need to be presented to the agent for a given task.
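As a rough illustration of explicit tool invocation, the sketch below registers one tool in a toolkit, describes its arguments with a small JSON-schema-style dictionary, and dispatches a JSON tool call of the kind an agent might emit. The tool `search_recipes`, the `TOOLKITS` registry, the call format, and the canned catalog are assumptions made for this example. The validation covers only required keys and two primitive types, not the full JSON Schema specification.

```python
import json
from typing import Any, Dict, List


def search_recipes(diet: str, max_minutes: int) -> List[str]:
    """Hypothetical tool: filters canned data instead of calling a real API."""
    catalog = [
        {"name": "grilled salmon", "diet": "pescetarian", "minutes": 25},
        {"name": "beef stew", "diet": "omnivore", "minutes": 120},
    ]
    return [
        r["name"]
        for r in catalog
        if r["diet"] == diet and r["minutes"] <= max_minutes
    ]


# Tools grouped into toolkits; each tool pairs a callable with its argument schema.
TOOLKITS: Dict[str, Dict[str, Dict[str, Any]]] = {
    "recipes": {
        "search_recipes": {
            "function": search_recipes,
            "schema": {
                "type": "object",
                "properties": {
                    "diet": {"type": "string"},
                    "max_minutes": {"type": "integer"},
                },
                "required": ["diet", "max_minutes"],
            },
        }
    }
}

_PY_TYPES = {"string": str, "integer": int}


def invoke(toolkit: str, raw_call: str) -> Any:
    """Parse a JSON tool call, check it against the schema, then run the tool."""
    call = json.loads(raw_call)
    tool = TOOLKITS[toolkit].get(call.get("tool"))
    if tool is None:
        raise ValueError(f"unknown tool: {call.get('tool')!r}")
    args = call.get("arguments", {})
    schema = tool["schema"]
    missing = [name for name in schema["required"] if name not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    for name, value in args.items():
        spec = schema["properties"].get(name)
        if spec is None:
            raise ValueError(f"unexpected argument: {name!r}")
        if not isinstance(value, _PY_TYPES[spec["type"]]):
            raise ValueError(f"argument {name!r} must be of type {spec['type']}")
    return tool["function"](**args)


raw = '{"tool": "search_recipes", "arguments": {"diet": "pescetarian", "max_minutes": 30}}'
print(invoke("recipes", raw))
```

Validating the call before execution turns a malformed tool call into a descriptive error that can be fed back to the agent, rather than an exception raised from inside the tool.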

Control Flow in LLM Agents

Control flow encompasses the decision-making process that allows agents to manage multi-step reasoning and task execution autonomously. The paper discusses structured output formats for inter-step communication, alongside error-handling strategies based on static, informed, and external retries. It also stresses setting clear stopping criteria and using multiple personas to improve the agent's task-specific performance.
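The sketch below shows one possible reading of the three retry styles, combined with a structured output check and a hard stopping criterion. It assumes that a static retry repeats the same prompt, an informed retry adds the error message to the prompt, and an external retry hands the step to a different model. `run_step`, `parse_structured`, and the demo models are hypothetical, and the required `answer` field is an arbitrary choice for the example.

```python
import json
from typing import Callable, Optional

Model = Callable[[str], str]


def parse_structured(raw: str) -> dict:
    """Accept only a JSON object that carries an 'answer' field."""
    data = json.loads(raw)  # raises json.JSONDecodeError, a ValueError subclass
    if not isinstance(data, dict) or "answer" not in data:
        raise ValueError("output must be a JSON object with an 'answer' field")
    return data


def run_step(
    prompt: str,
    primary: Model,
    fallback: Optional[Model] = None,
    max_attempts: int = 3,
) -> dict:
    attempt_prompt = prompt
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            return parse_structured(primary(attempt_prompt))
        except ValueError as exc:
            last_error = exc
            # After attempt 1 the prompt is unchanged, so attempt 2 is a static retry.
            # From attempt 2 onward the error is added, making later retries informed.
            if attempt >= 2:
                attempt_prompt = f"{prompt}\n\nYour previous output was rejected: {exc}"
    if fallback is not None:
        # External retry: hand the original prompt to a different model.
        return parse_structured(fallback(prompt))
    # Stopping criterion: give up instead of looping forever.
    raise RuntimeError(f"step failed after {max_attempts} attempts") from last_error


# Deterministic stand-ins for LLM calls, used only for illustration.
def chatty_model(prompt: str) -> str:
    if "rejected" in prompt:
        return '{"answer": "grilled salmon"}'
    return "Sure! I suggest grilled salmon."


def always_bad_model(prompt: str) -> str:
    return "no JSON here"


def careful_model(prompt: str) -> str:
    return '{"answer": "baked cod"}'


print(run_step("Suggest a dinner.", chatty_model))
print(run_step("Suggest a dinner.", always_bad_model, fallback=careful_model))
```

Ordering the strategies from cheapest to most expensive keeps the common case fast while still bounding the total number of model calls per step.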

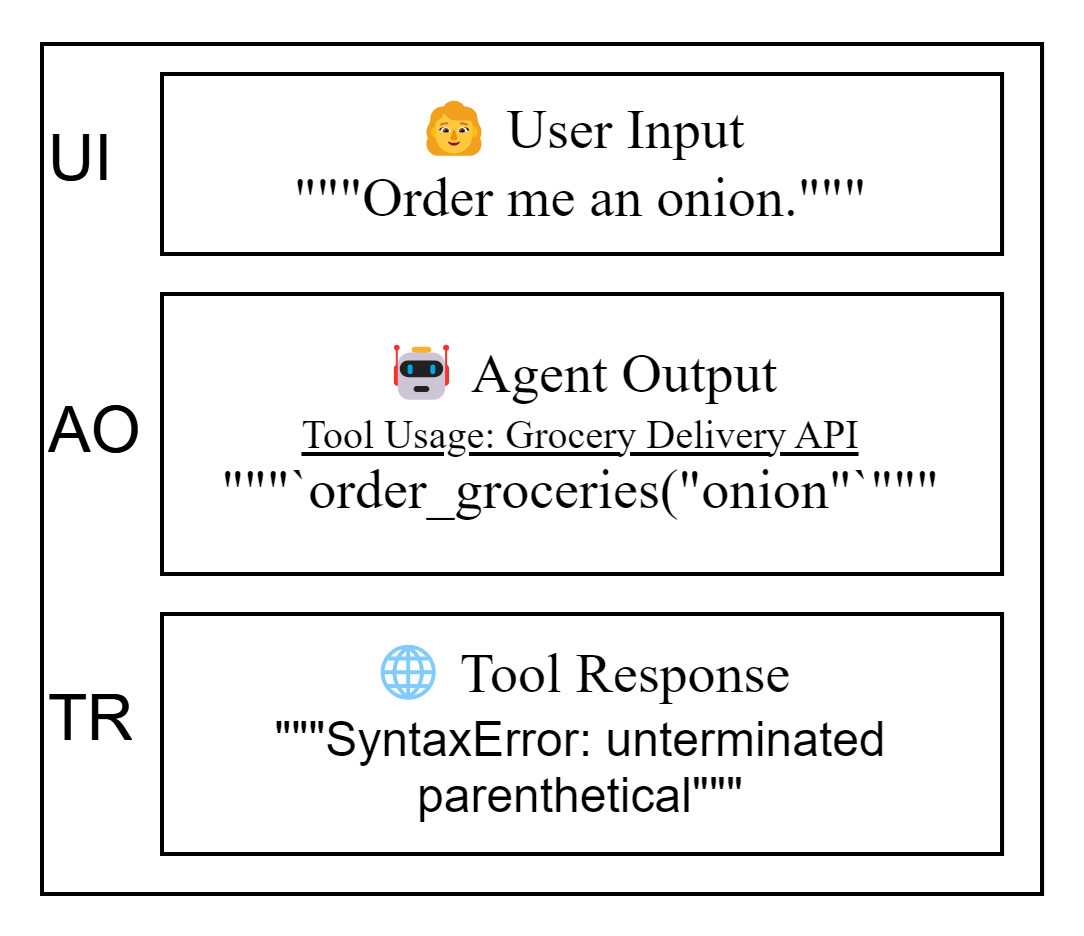

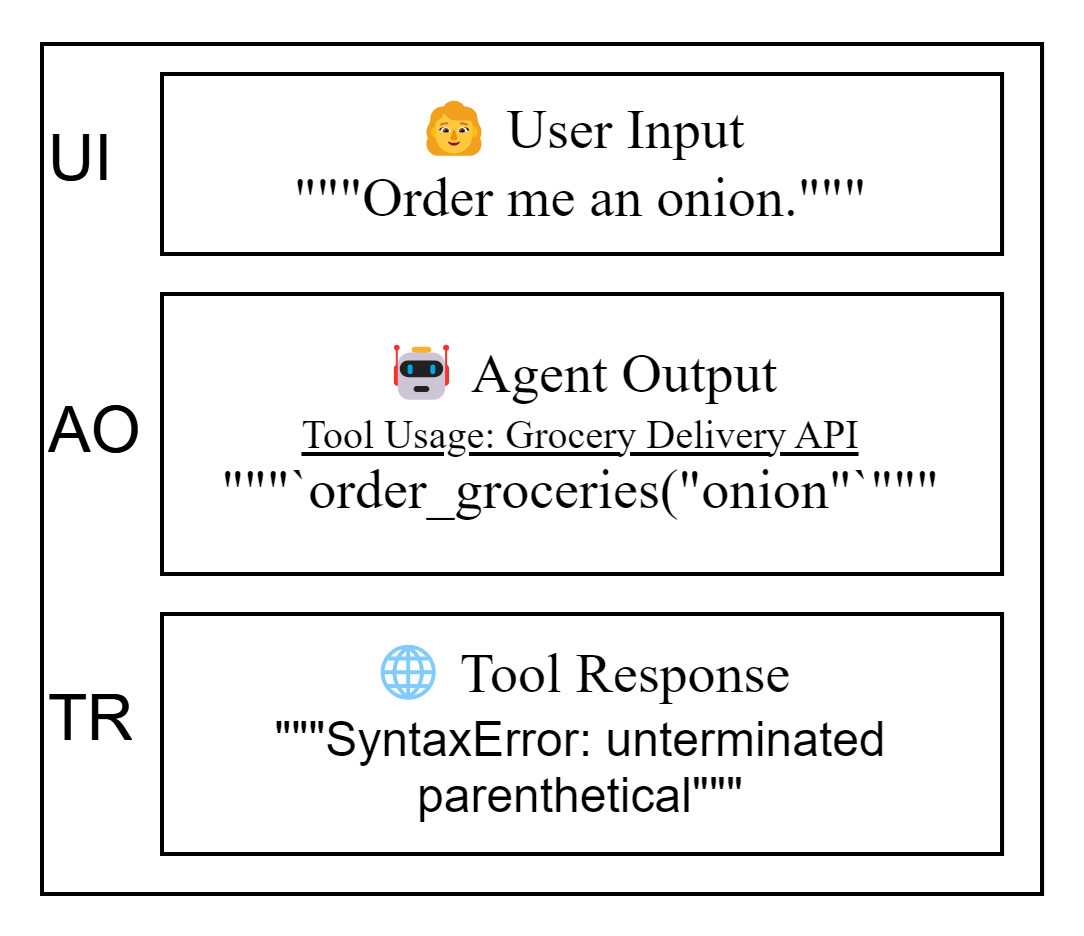

Figure 3: An example of an erroneous tool call, following the scenario presented in Figure 2.

Additional Considerations

Model selection plays a pivotal role, balancing cost, speed, and performance. The paper recommends starting with a highly capable model to establish a performance baseline before downgrading to smaller models. Evaluation of agentic LLM systems should include holistic measures that check overall system efficacy and piecemeal metrics that diagnose specific failures. Integration with traditional engineering practices can make the system more deterministic by moving work away from the stochastic LLM and onto tried-and-tested engineering solutions.
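The baseline-then-downgrade recommendation can be sketched as a small selection routine. This is an illustrative assumption about how one might operationalize it, not a procedure given in the paper. The model names, costs, test cases, exact-match scoring, and the `tolerance` value are all made up.

```python
from typing import Callable, List, Tuple

Model = Callable[[str], str]
Case = Tuple[str, str]  # (prompt, expected answer)


def success_rate(model: Model, cases: List[Case]) -> float:
    """Holistic metric: fraction of cases answered exactly as expected."""
    return sum(model(prompt) == expected for prompt, expected in cases) / len(cases)


def pick_model(
    candidates: List[Tuple[str, float, Model]],
    cases: List[Case],
    tolerance: float = 0.05,
) -> Tuple[str, float]:
    """Candidates are (name, cost, model), ordered from most to least capable.

    The first candidate sets the baseline; the cheapest candidate whose score
    stays within `tolerance` of that baseline is chosen.
    """
    name, cost, model = candidates[0]
    baseline = success_rate(model, cases)
    chosen, chosen_cost = name, cost
    for name, cost, model in candidates[1:]:
        if success_rate(model, cases) >= baseline - tolerance and cost < chosen_cost:
            chosen, chosen_cost = name, cost
    return chosen, baseline


CASES: List[Case] = [
    ("2+2", "4"),
    ("capital of France", "Paris"),
    ("3*3", "9"),
    ("opposite of hot", "cold"),
]
ANSWERS = dict(CASES)


# Deterministic stand-ins for models of different sizes.
def large_model(prompt: str) -> str:
    return ANSWERS[prompt]


def medium_model(prompt: str) -> str:
    return ANSWERS[prompt]


def small_model(prompt: str) -> str:
    return "unknown" if prompt == "3*3" else ANSWERS[prompt]


candidates = [
    ("large", 10.0, large_model),
    ("medium", 3.0, medium_model),
    ("small", 1.0, small_model),
]
print(pick_model(candidates, CASES))
```

The same test cases can be reused for piecemeal diagnosis, for example by recording which individual cases a smaller model fails instead of reporting only the aggregate rate.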

Conclusion

By synthesizing practical research insights on agentic LLM systems, the paper supports informed construction and deployment in real-world applications and helps bridge the gap between academic research and industry practice. It addresses Planning, Memory, Tools, and Control Flow, along with additional considerations such as model selection and evaluation, and in doing so offers a comprehensive framework for understanding and implementing LLM agents. This foundation supports further exploration of agentic LLM systems and their evolving role in autonomous applications.