Overview of the X-Prompt Framework for In-Context Image Generation in Auto-Regressive Models

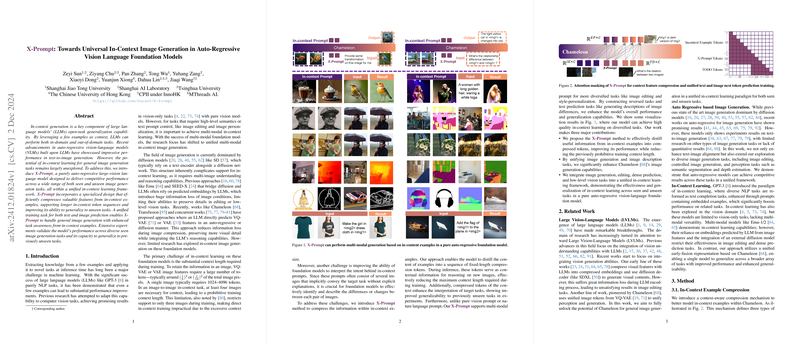

The paper presents X-Prompt, a framework that extends auto-regressive vision-language models (VLMs) to general image generation. Building on the foundational structure of LLMs, it uses in-context learning to handle both seen and unseen image generation tasks within a single, unified approach. The work reflects a broader shift toward integrating vision and language models for complex, multi-modal applications.

Key Contributions

- In-Context Feature Compression: X-Prompt compresses the useful features of in-context examples into a shorter sequence of tokens. This lets the model handle longer contexts more efficiently during training and improves generalization when predicting outputs.

- Unified Task Perspective: By aligning image prediction and text prediction tasks, the framework links image generation with image description. This alignment improves the model's task awareness, and with it contextual understanding and prediction accuracy across diverse scenarios.

- Diverse Task Integration: X-Prompt covers a wide range of tasks, including image editing, enhancement, segmentation, and style personalization. The results suggest that a single auto-regressive model can generalize across these different requirements and to unseen tasks.

Methodology

The model architecture operates on VQ-VAE- or VAE-encoded visual features and follows a unified token prediction paradigm in the style of LLMs. The key step is compressing in-context examples into a small number of informative tokens that preserve image detail, which is what enables versatile image generation.
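One way to picture the compression step is as an attention mask over a sequence laid out as in-context example tokens, then compression tokens, then the query and output tokens. The sketch below is an illustration under assumptions, not the paper's implementation: the layout, the masking rule (later tokens see the example only through the compression tokens), and the name `build_compression_mask` are all hypothetical.

```python
def build_compression_mask(n_context, n_compress, n_query):
    """Build a boolean attention mask where mask[q][k] is True if
    position q may attend to position k.

    Assumed (hypothetical) layout of the sequence:
        [in-context example tokens | compression tokens | query/output tokens]
    """
    total = n_context + n_compress + n_query
    ctx_end = n_context                # first index after the in-context example
    cmp_end = n_context + n_compress   # first index after the compression tokens

    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        for k in range(q + 1):  # causal: only current and earlier positions
            # Assumption: query/output tokens are blocked from the raw example,
            # so its information must flow through the compression tokens.
            if q >= cmp_end and k < ctx_end:
                continue
            mask[q][k] = True
    return mask


if __name__ == "__main__":
    # Print the mask for a tiny sequence: 4 example, 2 compression, 3 query tokens.
    for row in build_compression_mask(4, 2, 3):
        print("".join("x" if allowed else "." for allowed in row))
```

Under this rule the compression tokens still attend to the full example, so they are the only path by which example information can reach the generated tokens.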

A task augmentation pipeline adds two kinds of training signal: difference description tasks and task reversal. Combined with retrieval-augmented image editing (RAIE), this augmentation strengthens the model's prediction of fine detail and its image editing capabilities.
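The two augmentations can be sketched as simple rewrites of a single editing pair. This is a minimal sketch under assumptions: the summary names only "difference description" and "task reversal", so the `Example` record, the `augment` function, and the way the reversed instruction and difference text are supplied are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Example:
    task: str                 # label for the kind of training example
    inputs: Tuple[str, ...]   # ordered items given to the model
    target: str               # item the model is trained to predict


def augment(source_img: str, target_img: str, instruction: str,
            reverse_instruction: str, difference_text: str) -> List[Example]:
    """Expand one (source, target, instruction) pair into three examples.

    Assumption: the reversed instruction and the difference description
    are provided by the caller, for example from annotation.
    """
    return [
        # Original direction: apply the instruction to the source image.
        Example("forward", (instruction, source_img), target_img),
        # Task reversal: swap the roles of source and target.
        Example("reversed", (reverse_instruction, target_img), source_img),
        # Difference description: predict text describing what changed.
        Example("difference_description", (source_img, target_img), difference_text),
    ]


if __name__ == "__main__":
    for ex in augment("rainy.png", "clean.png", "remove the rain",
                      "add rain", "The rain streaks have been removed."):
        print(ex)
```

The file names and instructions in the usage lines are placeholders; in practice the image entries would be token sequences rather than paths.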

Experimental Validation

Experiments demonstrate the model's competence across a suite of tasks:

- It performs competitively in text-to-image generation on the GenEval benchmark, showing that its outputs align accurately with textual prompts.

- The unified model demonstrates solid results in dense prediction tasks such as semantic segmentation and surface normal estimation, domains traditionally dominated by specialized models.

- Image editing is notably enhanced through RAIE, where in-context retrieval refines the generative output.

- Critically, the experiments validate the model's generalization to unseen tasks through in-context learning.
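The retrieval step behind RAIE, mentioned in the list above, can be illustrated with a nearest-neighbor lookup. The summary does not say how examples are retrieved, so everything here is an assumption: the sketch keys retrieval on cosine similarity between embedding vectors, and `cosine` and `retrieve_example` are hypothetical helpers.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as dissimilar to everything
    return dot / (norm_a * norm_b)


def retrieve_example(query_vec, bank):
    """Return the id of the stored example most similar to the query.

    bank: list of (example_id, embedding) pairs. Assumption: embeddings
    come from some encoder of the editing request; none is specified here.
    """
    if not bank:
        raise ValueError("example bank is empty")
    best_id, _ = max(bank, key=lambda item: cosine(query_vec, item[1]))
    return best_id


if __name__ == "__main__":
    bank = [("recolor", [1.0, 0.0]), ("remove_object", [0.0, 1.0])]
    print(retrieve_example([0.9, 0.1], bank))
```

The retrieved example would then be placed in context ahead of the new editing request, so the model can condition on a similar, already solved edit.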

Implications and Future Directions

X-Prompt's contributions point toward what the authors call "multi-modal GPT-3 moments." By compressing context and using it within a unified model, the framework offers a method that can be applied to a range of image generation workflows. Future work centers on two directions: improving the resolution of the VQ-VAE to reduce information loss during image reconstruction, and investigating whether comprehensive multi-modal pretraining can close the gaps between distinct task paradigms.

This research is a step toward combining the strengths of vision and language models, and it sets the stage for further work on in-context image generation and beyond.