End-to-End Factuality Evaluation with LLM-OASIS

The paper "Truth or Mirage? Towards End-To-End Factuality Evaluation with LLM-OASIS" by Alessandro Scirè et al. introduces LLM-OASIS, a large dataset built to advance research on evaluating the factuality of LLM outputs. LLMs often generate text containing hallucinations, that is, content not grounded in verifiable facts. The paper positions its contribution against the gaps in existing factuality evaluation resources, which tend to be domain-specific, limited in size, or hard to apply to real-world scenarios.

Contributions and Approach

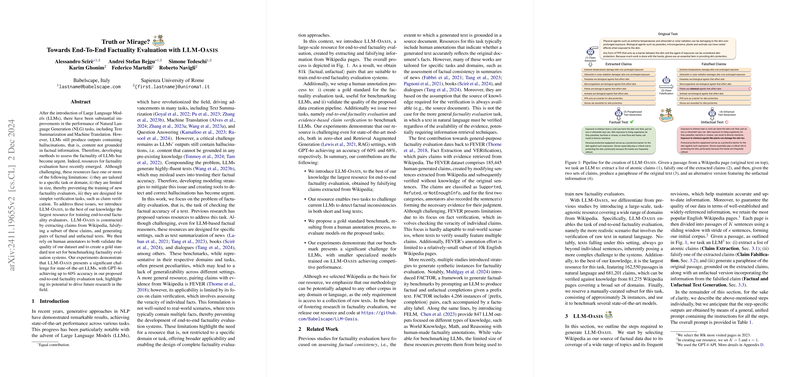

LLM-OASIS is, according to the authors, the largest existing dataset explicitly designed for end-to-end factuality evaluation. Its construction is systematic: claims are extracted from Wikipedia, and a subset is intentionally falsified to simulate non-factuality. The authors then generate ⟨factual, unfactual⟩ pairs of texts from these claims. Human annotators validate the pairs to ensure quality, which also establishes a gold-standard benchmark for factuality evaluation systems.

The authors delineate four key steps in constructing this resource: claim extraction, claim falsification, factual text generation, and unfactual text generation. Their approach hinges on leveraging generative models to paraphrase and subtly manipulate extracted claims, ensuring each output text challenges LLMs' factuality detection capabilities.
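The four steps can be sketched in code. The following is a minimal illustration rather than the authors' implementation: the `Generator` callable, the prompt prefixes, the `PairExample` type, and the rule-based `toy_llm` are all hypothetical stand-ins for real LLM calls, and the sketch assumes that exactly one claim per passage is falsified.

```python
import random
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical stand-in for an LLM call: takes a prompt, returns generated text.
Generator = Callable[[str], str]


@dataclass
class PairExample:
    claims: List[str]       # claims extracted from the source passage
    falsified_index: int    # which claim was altered (assumption: exactly one)
    falsified_claim: str
    factual_text: str
    unfactual_text: str


def build_pair(passage: str, llm: Generator, rng: random.Random) -> PairExample:
    # Step 1: claim extraction (one claim per output line, by assumption).
    raw = llm("EXTRACT_CLAIMS:\n" + passage)
    claims = [line.strip() for line in raw.split("\n") if line.strip()]
    if not claims:
        raise ValueError("no claims extracted")

    # Step 2: claim falsification on one randomly chosen claim.
    idx = rng.randrange(len(claims))
    falsified = llm("FALSIFY:\n" + claims[idx]).strip()

    # Step 3: factual text generation from the original claims.
    factual = llm("PARAPHRASE:\n" + "\n".join(claims)).strip()

    # Step 4: unfactual text generation from the claims with one swapped out.
    altered = claims[:idx] + [falsified] + claims[idx + 1:]
    unfactual = llm("PARAPHRASE:\n" + "\n".join(altered)).strip()

    return PairExample(claims, idx, falsified, factual, unfactual)


def toy_llm(prompt: str) -> str:
    """Rule-based placeholder so the sketch runs without a real model."""
    task, _, body = prompt.partition(":\n")
    if task == "EXTRACT_CLAIMS":
        return "\n".join(s.strip() + "." for s in body.split(".") if s.strip())
    if task == "FALSIFY":
        return body.replace("Paris", "Rome").replace("1889", "1901")
    return " ".join(body.split("\n"))  # "paraphrase" = join claims into one text


example = build_pair(
    "The Eiffel Tower is in Paris. It was completed in 1889.",
    toy_llm,
    random.Random(0),
)
print(example.factual_text)
print(example.unfactual_text)
```

The two generated texts share every claim except the falsified one, which is what makes the unfactual text hard to tell apart from its factual counterpart.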

Experimental Insights

The paper presents comprehensive experiments demonstrating that the LLM-OASIS dataset indeed poses significant challenges to state-of-the-art LLMs. Notably, the authors report that GPT-4o achieves up to 60% accuracy in discerning factual from non-factual content when evaluated against their benchmark, underscoring the dataset's difficulty and its potential to push the boundaries of current research in factuality evaluation.
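To put the 60% figure in context, the sketch below scores an evaluator on ⟨factual, unfactual⟩ pairs. It is an illustration under stated assumptions, not the paper's evaluation script: the `Evaluator` interface, `pair_accuracy`, and the toy data are hypothetical, plain accuracy is used although the paper's exact metric may differ, and the set is assumed to be balanced.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical evaluator interface: text in, True if the text is judged factual.
Evaluator = Callable[[str], bool]


def pair_accuracy(pairs: Iterable[Tuple[str, str]], is_factual: Evaluator) -> float:
    """Fraction of texts labelled correctly across (factual, unfactual) pairs."""
    correct = 0
    total = 0
    for factual_text, unfactual_text in pairs:
        correct += int(bool(is_factual(factual_text)))   # expected: True
        correct += int(not is_factual(unfactual_text))   # expected: False
        total += 2
    if total == 0:
        raise ValueError("no pairs to evaluate")
    return correct / total


# Toy data, invented for illustration only.
toy_pairs = [
    ("The Eiffel Tower is in Paris.", "The Eiffel Tower is in Rome."),
    ("Water boils at 100 degrees Celsius at sea level.",
     "Water boils at 50 degrees Celsius at sea level."),
]


def always_factual(text: str) -> bool:
    # Trivial baseline that never flags anything as unfactual.
    return True


baseline = pair_accuracy(toy_pairs, always_factual)
print(baseline)
```

On a balanced set of pairs, a constant predictor such as `always_factual` scores 0.5, so an accuracy of up to 60% sits only modestly above that trivial baseline.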

Moreover, the resource supports two tasks, end-to-end factuality evaluation and evidence-based claim verification, which extends its applicability across research contexts. The paper argues that existing resources such as FEVER fall short because they focus solely on claim verification, whereas LLM-OASIS supports holistic end-to-end evaluation that better reflects real-world use.

Implications and Future Directions

The introduction of LLM-OASIS has substantial implications for the development of more reliable factuality evaluators. In practical terms, such systems are crucial for content generation applications, where factual accuracy is paramount. Theoretically, better factuality evaluation supports the mitigation of hallucinations, one of the critical weaknesses of contemporary LLMs.

Looking forward, the authors highlight the adaptability of their methodology to other languages and domains beyond Wikipedia, suggesting future expansions that could substantially broaden the utility and scope of LLM-OASIS. By facilitating more nuanced and scalable approaches to factuality evaluation, the dataset lays the groundwork for significant advancements in the evaluation metrics and architectures employed in NLP systems.

In conclusion, the work by Scirè et al. provides a valuable resource and robust framework for tackling the factuality evaluation challenges inherent in LLM outputs. Their methodical approach, combined with comprehensive validation and benchmarking, makes LLM-OASIS a pivotal contribution to ongoing research efforts aiming to enhance the factual reliability of LLM-generated text.