Assessing Factual Accuracy and Geographical Bias in Multilingual LLMs through the Multi-FAct Framework

Introduction to Multi-FAct

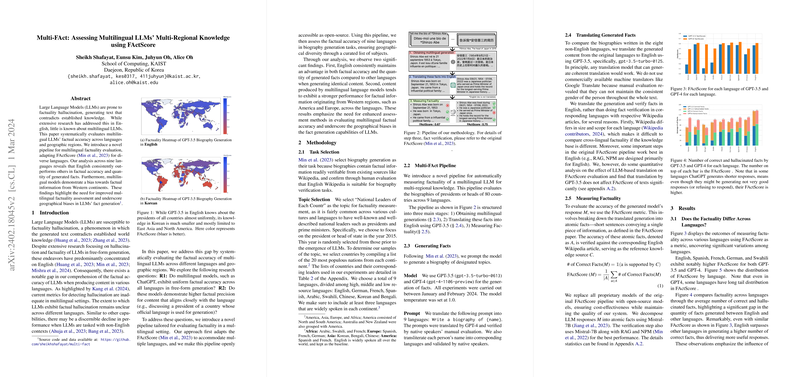

LLMs, while impressive in their capabilities, have raised concerns regarding their accuracy, particularly in the field of factual content generation. This paper navigates the often overlooked domain of multilingual factuality assessment in LLMs, shedding light on models' performance across different languages and geographical contexts. It frames its analysis by introducing Multi-FAct, a derivative of the FActScore metric adapted for multilingual use, to systematically evaluate the quality and bias of factual output from models like GPT-3.5 and GPT-4 when tasked with generating biographies across nine languages. The fundamental takeaway is the notable variance in factual accuracy and the presence of geographical biases, with a pronounced skew towards Western-centric content.

Methodological Framework

The researchers embarked on their investigation by selecting the biography generation task, focusing on national leaders as a universally identifiable subject that spans cultures and languages. The paper meticulously chose leaders from the year 2015 across twenty populous nations from each continent, ensuring a broad representation. The Multi-FAct pipeline itself is composed of three stages: generating multilingual content, translating these outputs into English for uniform evaluation, and then applying the FActScore to assess factuality. The models used spanned both GPT-3.5 and GPT-4, with a comprehensive approach to translate prompts and verify the generated content's accuracy against English Wikipedia.

Empirical Insights

The analysis revealed two core insights:

- Language-Dependent Factual Accuracy: English emerged as consistently superior in both the accuracy and quantity of generated facts, outstripping other languages. This points towards a significant disparity in model performance that veers towards high-resource languages.

- Geographical Bias: A clear pattern of bias was observed, favoring factual information pertaining to Western regions regardless of the input language. This geographical bias underscores an inherent Western-centric skew in the knowledge bases of multilingual LLMs.

Through a meticulous assessment, the paper presents detailed statistical comparisons across languages and continents, showcasing the variability in factual performance and highlighting the confluence of language resource availability and geographical bias.

Analysis of Geographical Biases

Further granularity was provided through a sub-regional analysis, bringing to light how certain languages showed predilection towards accuracy in specific global regions. For instance, Chinese and Korean displayed a higher factual precision for Eastern Asia, while English maintained relatively uniform accuracy across various regions, albeit with a slight preferential tilt towards North America.

Correlational Examination of FActScore

A correlational matrix was constructed to visualize the intersecting performances across languages, showcasing a high correlation in FActScore among Western languages (English, Spanish, French, and German). This element of the paper hints at a possible shared underpinning in factual representation within these languages, contrasted against lesser-resourced languages which did not exhibit a similar correlation pattern.

Discussing Limitations and Future Directions

The paper does not shy away from acknowledging its limitations; these include a reliance on a constrained sample size and the potential effects of automated factuality scoring tools like FActScore which may not fully capture the nuance of informational value among facts. Future avenues for research suggested include enhancing the fidelity of factuality assessment by differentiating between the quality of facts and expanding the scope to include more diverse and non-political figures.

Reflections and Implications

This paper opens up critical discussions around the need for improved accuracy in multilingual LLMs and calls attention to the geographical biases inherent in these models. It underscores the importance of developing more nuanced methodologies for evaluating LLM outputs, especially in non-English and low-resource languages, to ensure a fair and equitably distributed representation of global knowledge.

The implications of this research are vast, encompassing both the enhancement of LLM capabilities and a reconsideration of how bias and fairness are addressed in the development of AI technologies. As the field progresses, it will be crucial to incorporate these findings into the refinement of LLMs, ensuring they not only achieve high levels of linguistic proficiency but also embody a balanced and accurate representation of the diverse world we inhabit.