Understanding Retrieval Accuracy and Prompt Quality in RAG Systems

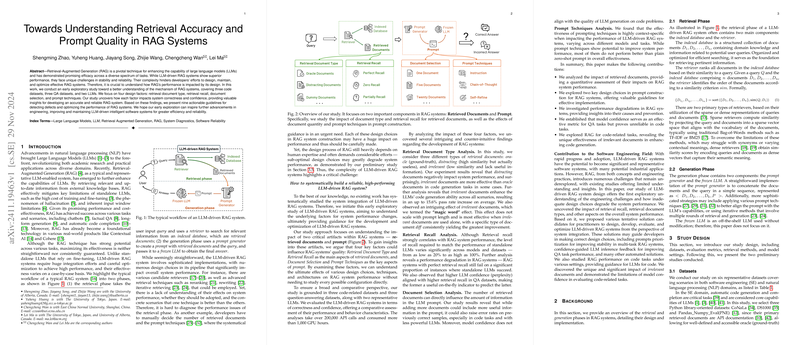

This paper presents an exploratory study of the performance contours of Retrieval-Augmented Generation (RAG) systems, which extend the capabilities of large language models (LLMs). The research investigates how various factors affect the stability and reliability of RAG systems, assessing four key design parameters: retrieval document type, retrieval recall, document selection, and prompt techniques.

Summary of Findings

- Impact of Retrieval Document Type: The paper identifies three primary types of retrieved documents: oracle (ground-truth), distracting, and irrelevant. Distracting documents consistently degrade performance across both QA and code datasets. Surprisingly, irrelevant documents improve LLM code generation ability compared to oracle documents. This improvement, most pronounced with "diff" documents, is termed a "magic word" effect.

- Retrieval Recall Analysis: RAG system performance depends strongly, though to varying degrees, on retrieval recall. The recall a RAG system needs in order to outperform a standalone LLM ranges from 20% to 100%, and the requirement is particularly demanding for simpler tasks. Even with perfect retrieval recall, RAG systems fail on instances that standalone LLMs solve, which points to latent degradations introduced by the system itself.

- Document Selection Effects: Increasing the number of retrieved documents initially preserves correctness but eventually degrades performance, notably in code tasks. Retrieving more documents sometimes coincides with higher retrieval recall, but it also raises error rates on instances that were previously solved correctly, which complicates the choice of how many documents to retrieve.

- Prompt Technique Variability: The benefit of advanced prompt techniques varies widely with the task and the model. Even techniques intended to improve LLM outcomes in general show inconsistent efficacy. Most fail to outperform baseline prompts, which suggests that their strengths are context-specific and that they add little on straightforward tasks.
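To make two of the quantities in these findings concrete, the following is a minimal sketch rather than the paper's own evaluation code. It assumes retrieval recall is measured as the fraction of oracle documents found among the top-k retrieved documents (the paper's exact definition may differ), and it counts "regressions" as instances the standalone LLM solves but the RAG system does not. The function names, document IDs, and correctness flags are all hypothetical.

```python
from typing import Dict, Sequence, Set


def retrieval_recall(retrieved: Sequence[str], oracle: Set[str], k: int) -> float:
    """Fraction of oracle document IDs that appear in the top-k retrieved IDs."""
    if not oracle:
        raise ValueError("oracle set must be non-empty")
    return len(set(retrieved[:k]) & oracle) / len(oracle)


def regressions(standalone_ok: Dict[str, bool], rag_ok: Dict[str, bool]) -> Set[str]:
    """Instance IDs solved by the standalone LLM but failed by the RAG system."""
    return {i for i, ok in standalone_ok.items() if ok and not rag_ok.get(i, False)}


# Hypothetical data: ranked document IDs and per-instance correctness flags.
recall = retrieval_recall(["d3", "d1", "d7"], oracle={"d1", "d2"}, k=2)
broken = regressions(
    {"q1": True, "q2": True, "q3": False},   # standalone LLM results
    {"q1": True, "q2": False, "q3": True},   # RAG system results
)
print(recall, sorted(broken))
```

Tracking regressions alongside recall separates the two effects described above: recall can rise as more documents are retrieved while the set of previously solved instances that now fail also grows.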

Implications and Future Directions

This work offers insights critical for engineering RAG systems, including nine practical guidelines that designers can use to improve reliability and accuracy. Among them are using model perplexity as a metric for document quality in QA tasks and recognizing the unexpected benefits of irrelevant documents in coding contexts.
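For readers unfamiliar with the perplexity metric mentioned here, a minimal sketch of the standard computation follows. It assumes per-token natural-log probabilities of the generated answer are available from the model. How the paper turns perplexity into a document-quality signal is not specified in this summary, so the example only computes the quantity for two hypothetical retrieved contexts.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity: exp of the negative mean natural-log probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# Hypothetical log-probabilities for the same answer under two retrieved contexts.
with_doc_a = [math.log(0.5)] * 4     # each token assigned probability 0.5
with_doc_b = [math.log(0.125)] * 4   # each token assigned probability 0.125
ppl_a = perplexity(with_doc_a)
ppl_b = perplexity(with_doc_b)
print(ppl_a, ppl_b)
```

Lower perplexity means the model assigned higher probability to the tokens it produced. Whether and how to threshold or compare these values across documents is a design decision left to the paper's guidelines.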

However, several challenges remain unaddressed, particularly the need for generalized prompts that balance task specificity with multi-task versatility. Current prompt optimization efforts often falter outside their designed contexts, underscoring the complexity of RAG system engineering.

Moreover, fundamental differences between QA and code retrieval tasks within RAG systems require more tailored approaches to enhance oracle document usage efficiency and to mitigate distracting content. This gap signals further research opportunities, emphasizing the necessity for novel techniques in evaluating retrieval effectiveness and adapting systems flexibly to varied recall demands.

In essence, as LLM-driven RAG systems continue to evolve and expand across domains, this paper serves as a foundational exploration that invites deeper inquiry into the techniques and methodologies needed to improve their performance and reliability across application scenarios.