An Expert Overview of "ShowUI: One Vision-Language-Action Model for GUI Visual Agent"

The paper focuses on the development of a vision-language-action model termed "ShowUI," specifically designed to enhance the functionality of graphical user interface (GUI) visual agents. This line of research addresses the challenge of designing GUI assistants that perceive user interfaces as humans do, which is a capability still underexplored given that traditional language-based agents rely heavily on closed-source APIs and text-rich metadata. ShowUI seeks to integrate visual, language, and action processing into a single model, which offers several structural and computational innovations aimed at improving performance for interaction with graphical user interfaces.

Key Innovations

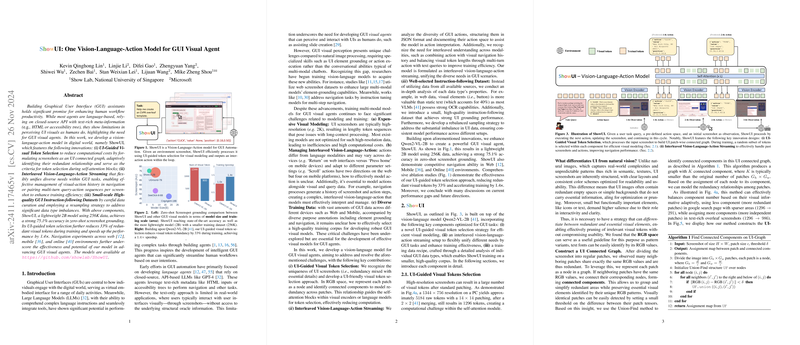

ShowUI introduces three central innovations:

- UI-Guided Visual Token Selection: The method utilizes UI-specific visual properties to construct a graph-based model for token selection in screenshots. By treating each screenshot patch as a node in a graph based on RGB similarity, the model reduces redundant components. This allows efficient token selection strategies within self-attention layers of visual encoders, thereby curtailing unnecessary computation without losing spatial information. This approach significantly decreases the number of visual tokens and expedites training by 1.4× while maintaining positional relevance crucial for UI element grounding.

- Interleaved Vision-Language-Action Streaming: The model unites different modalities to manage GUI tasks flexibly. This setup allows effective organization of visual-action histories through navigation and association of multi-turn query-action sequences for better training efficiency. The model employs action histories in conjunction with visual content to understand complex GUI navigation tasks better, especially when dealing with diverse action modalities responding to visual or textual prompts.

- Curated GUI Instruction-following Datasets: ShowUI includes a carefully curated, small-scale yet high-quality dataset tailored for GUI tasks. By leveraging resampling for data balance, the model achieves superior zero-shot grounding accuracy (75.1%) using a lightweight architecture. This dataset promises a robust representation of GUI tasks by capturing diverse data across web, desktop, and mobile platforms.

Experimental Evaluation and Implications

The empirical evaluation of ShowUI involves various tasks, ranging from zero-shot grounding to navigation across multiple digital environments such as web-based interfaces, mobile applications, and online platforms. The model demonstrates remarkable efficiency and effectiveness, achieving outstanding accuracy despite its smaller model size and reduced training data. This showcases the efficacy of the UI-guided token selection and the interleaved modality handling strategies. ShowUI paves the way for progressive improvements in GUI agents, highlighting the capability to perform complex task sequences previously unattainable by purely language-based counterparts.

From a broader perspective, ShowUI represents a strategic advancement in GUI agent design, making practical strides in synthesizing visual comprehension with action execution in multi-modal learning frameworks. It seeks to address a key research gap, aiming to unify vision, language, and interaction mechanisms.

Future Directions

ShowUI offers substantial potential for further exploration. Notably, integrating this system into adaptive, real-time online environments through reinforcement learning could be a promising future direction. Further research could investigate expanding the dataset to cover even more UI states, promoting the model's ability to generalize across unpredictable GUI scenarios. Additionally, enhancing the model's interpretability and fine-tuning its action prediction mechanisms within diverse interfaces constitutes a challenging yet desirable path forward.

In conclusion, the ShowUI framework introduces an effective methodology for developing robust, efficient GUI visual agents that bridge the existing gap between LLMs and interactive digital tasks, setting a novel and substantial benchmark in this domain.