An Overview of "VideoGUI: A Benchmark for GUI Automation from Instructional Videos"

The paper "VideoGUI: A Benchmark for GUI Automation from Instructional Videos" introduces VideoGUI, a benchmark for evaluating Graphical User Interface (GUI) automation systems. It is derived from high-quality instructional videos and focuses on complex tasks that require procedural knowledge and advanced visual understanding.

Key Contributions

Benchmark Design

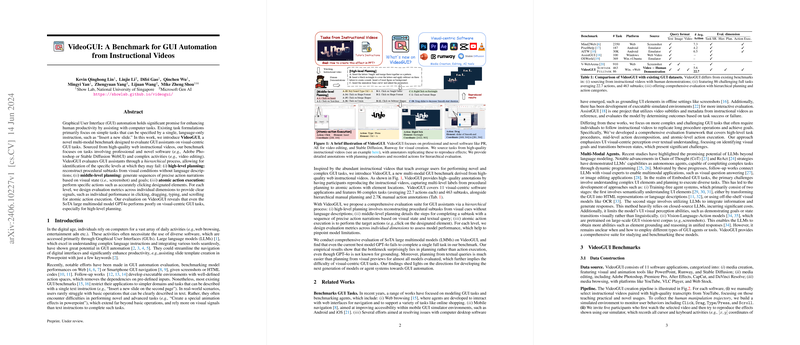

VideoGUI sets itself apart by targeting complex and professional software environments, including but not limited to Adobe Photoshop, Premiere Pro, and Stable Diffusion WebUI. The task formulations extend beyond simple text-based instructions, requiring substantial visual reasoning. The benchmark is constructed using a three-tier hierarchical framework:

- High-level Planning: The model must reconstruct the sequence of procedural subtasks from visual context alone, without linguistic descriptions. This level tests how far visual understanding by itself can support planning a GUI task.

- Middle-level Planning: At this stage, the task is to generate detailed action instructions based on visual states and specific goals.

- Atomic Action Execution: The final tier evaluates the accuracy of executing basic GUI actions such as clicking, dragging, typing, and scrolling.

Each level of the hierarchy is meticulously designed to capture distinct aspects of GUI automation, from general planning to fine-grained action execution.
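The three tiers can be pictured as nested records: a full task holds subtasks (high level), each subtask holds action narrations (middle level), and each narration is realized by atomic actions. The following is a minimal sketch of that nesting only. All class names, field names, and the example values are hypothetical and are not taken from the paper's released annotation format.

```python
from dataclasses import dataclass, field
from typing import List, Literal, Optional, Tuple

# Hypothetical action vocabulary, mirroring the four actions named in the text.
ActionType = Literal["click", "drag", "type", "scroll"]


@dataclass
class AtomicAction:
    """Lowest tier: one basic GUI action (illustrative fields only)."""
    kind: ActionType
    start: Optional[Tuple[int, int]] = None  # click point or drag start, in pixels
    end: Optional[Tuple[int, int]] = None    # drag end point, in pixels
    text: Optional[str] = None               # typed text
    scroll_amount: Optional[int] = None      # signed scroll distance


@dataclass
class Subtask:
    """Middle tier: a subtask with its narrations and the actions realizing them."""
    description: str
    narrations: List[str] = field(default_factory=list)
    actions: List[AtomicAction] = field(default_factory=list)


@dataclass
class FullTask:
    """Top tier: a full task in one application, split into ordered subtasks."""
    software: str
    subtasks: List[Subtask] = field(default_factory=list)

    def count_actions(self) -> int:
        # Total number of atomic actions across all subtasks.
        return sum(len(s.actions) for s in self.subtasks)


# Made-up example task, for illustration only.
example = FullTask(
    software="Adobe Photoshop",
    subtasks=[
        Subtask(
            description="Add a text layer",
            narrations=["Click the Text tool", "Type the caption"],
            actions=[
                AtomicAction(kind="click", start=(24, 310)),
                AtomicAction(kind="type", text="Hello"),
            ],
        ),
        Subtask(
            description="Move the layer",
            narrations=["Drag the text to the top of the canvas"],
            actions=[AtomicAction(kind="drag", start=(400, 500), end=(400, 120))],
        ),
    ],
)
```

Keeping the tiers as separate record types makes it possible to evaluate each level in isolation, which is the property the benchmark's hierarchy is meant to provide.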

Evaluation and Metrics

The benchmark introduces comprehensive evaluation metrics tailored for each hierarchical level:

- High-level Planning: Assessed by the ability to deduce procedural task steps purely from visual input.

- Middle-level Planning: Evaluates the precision in generating step-by-step action descriptions.

- Atomic Action Execution: Detailed metrics measure the accuracy of clicking (Dist and Recall@d), dragging, typing precision, and correct scrolling actions.
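To make the click metrics concrete, the sketch below computes a distance score and a Recall@d score for a batch of predicted clicks. It rests on assumptions that this summary does not confirm: Dist is taken to be the Euclidean distance between predicted and ground-truth points normalized by the screen diagonal, and Recall@d is taken to be the fraction of predictions whose normalized distance is at most d. The function and parameter names are illustrative and the paper's exact definitions may differ.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def normalized_distance(pred: Point, target: Point, width: float, height: float) -> float:
    """Euclidean distance between two points, divided by the screen diagonal.

    Normalizing by the diagonal is an assumption made for this sketch.
    """
    diagonal = math.hypot(width, height)
    return math.hypot(pred[0] - target[0], pred[1] - target[1]) / diagonal


def click_metrics(
    preds: Sequence[Point],
    targets: Sequence[Point],
    width: float,
    height: float,
    d: float,
) -> Tuple[float, float]:
    """Return (mean normalized distance, Recall@d) over paired clicks."""
    if len(preds) != len(targets) or not preds:
        raise ValueError("preds and targets must be non-empty and the same length")
    dists: List[float] = [
        normalized_distance(p, t, width, height) for p, t in zip(preds, targets)
    ]
    mean_dist = sum(dists) / len(dists)
    # A prediction counts as a hit when it lands within threshold d of the target.
    recall_at_d = sum(1 for x in dists if x <= d) / len(dists)
    return mean_dist, recall_at_d


# Made-up example on a 300x400 screen (diagonal 500).
mean_dist, recall = click_metrics(
    preds=[(0, 0), (100, 100), (0, 0)],
    targets=[(30, 40), (100, 100), (300, 400)],
    width=300,
    height=400,
    d=0.2,
)
```

Reporting both numbers is useful because the mean distance is sensitive to a few very bad misses, while Recall@d only asks whether each click was close enough to count.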

Empirical Results

In evaluating state-of-the-art large multimodal models (LMMs) like GPT-4o, the benchmark reveals several key insights:

- Performance Gaps: Even the most advanced LMMs perform poorly on visually intensive tasks, particularly at the high-level planning stage. For instance, GPT-4o struggles to complete even a single full task.

- Bottlenecks in Planning: The empirical results indicate that planning, rather than action execution, is the primary challenge. Models perform comparatively better when given textual queries than when given visual ones, underscoring the difficulty of visual-centric tasks.

Practical and Theoretical Implications

Practically, the findings from VideoGUI suggest a need for more sophisticated models that can handle detailed visual reasoning and task planning. The benchmark's focus on professional software tasks highlights the potential efficiency gains through improved GUI automation in real-world applications like video editing and graphic design.

Theoretically, VideoGUI provides fertile ground for advancing research in multimodal learning, particularly in integrating visual and textual cues for complex task completion. The rich, multi-level annotations and the diverse task set expose the limits of current AI capabilities and point future research toward better visual understanding and hierarchical planning.

Future Directions

VideoGUI sets a new standard for evaluating GUI automation and provides a comprehensive dataset that can spur future research in AI. Possible future developments include:

- Enhanced Visual Understanding: Research could focus on more robust models capable of understanding and planning from visual previews, potentially integrating techniques from computer vision and symbolic AI.

- Improved Multimodal Fusion: Developing models that can effectively balance visual and textual information should improve performance on benchmarks like VideoGUI.

- Application-Specific Optimization: Tailoring models to excel in specific applications such as video editing or digital painting could yield more practical automation tools and enhance user productivity.

In conclusion, the introduction of VideoGUI marks a significant milestone in the evaluation of GUI automation systems. By focusing on instructional videos and professional tasks that require a blend of visual and procedural understanding, the benchmark offers a rigorous platform for testing and advancing the frontiers of multimodal AI capabilities.