Introduction to Visual LLMs

Advances in AI have produced visual language models (VLMs) that can interpret and navigate graphical user interfaces (GUIs), the screens through which most digital interaction now happens. Agents built on these models offer a new way to help users operate computers and smartphones.

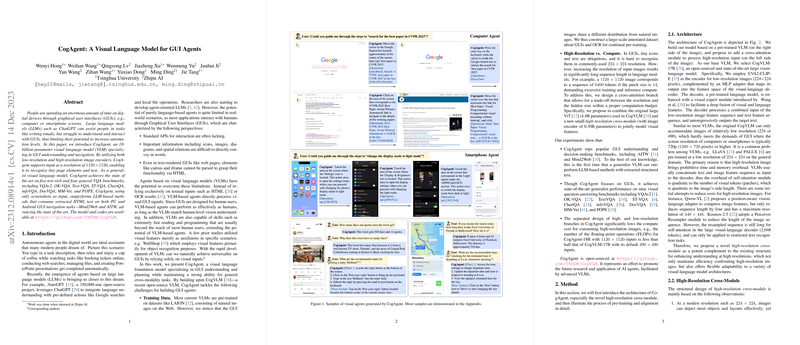

The Rise of CogAgent

CogAgent is an 18-billion-parameter VLM that specializes in understanding GUI environments and automating tasks within them. Standard VLMs are constrained by low image resolution and struggle with the small, dense text that screens contain. CogAgent is engineered to accept high-resolution input, which lets it recognize small GUI elements and read text within images more reliably.

Architectural Advancements

CogAgent builds on an existing VLM foundation and adds a novel high-resolution cross-module. This lets the model work with higher image resolutions without the steep growth in computational cost that would come from processing every high-resolution patch with self-attention. By combining a low-resolution image encoder with a high-resolution one, CogAgent can capture the fine visual detail found in GUIs, such as icons and embedded text.
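The cost argument can be made concrete with a rough count of attention pairs. The sketch below is illustrative only: the patch size (14), the two resolutions (224 and 1120), and the helper names are assumptions chosen for the arithmetic, not details taken from this article, and the count ignores text tokens, hidden sizes, and everything else that affects real compute. It compares running self-attention over all high-resolution patches against running self-attention over the low-resolution patches and letting those tokens cross-attend to the high-resolution ones.

```python
# Rough attention-pair count: full high-res self-attention vs.
# low-res self-attention plus cross-attention into high-res features.
# All constants below are illustrative assumptions.

def num_patches(resolution: int, patch_size: int) -> int:
    """Number of square patches in a resolution x resolution image."""
    if resolution % patch_size != 0:
        raise ValueError("resolution must be divisible by patch_size")
    side = resolution // patch_size
    return side * side


def self_attention_pairs(num_tokens: int) -> int:
    """Every token attends to every token: grows quadratically."""
    return num_tokens * num_tokens


def cross_attention_pairs(num_queries: int, num_keys: int) -> int:
    """Each query attends to each key: linear in the number of keys."""
    return num_queries * num_keys


PATCH = 14       # assumed patch size
LOW_RES = 224    # assumed low-resolution input side
HIGH_RES = 1120  # assumed high-resolution input side

low_tokens = num_patches(LOW_RES, PATCH)    # 16 * 16 = 256
high_tokens = num_patches(HIGH_RES, PATCH)  # 80 * 80 = 6400

# Option A: self-attention over every high-resolution patch.
full_high = self_attention_pairs(high_tokens)

# Option B: self-attention over low-res patches, plus cross-attention
# from those low-res tokens into the high-res patch features.
combined = self_attention_pairs(low_tokens) + cross_attention_pairs(
    low_tokens, high_tokens
)

print(f"low-res tokens:  {low_tokens}")
print(f"high-res tokens: {high_tokens}")
print(f"self-attention at high resolution: {full_high:,} pairs")
print(f"low-res self + cross-attention:    {combined:,} pairs")
print(f"ratio: {full_high / combined:.1f}x")
```

Under these assumptions, the number of query tokens stays fixed at the low-resolution count, so raising the high-resolution input size increases the cross-attention term only in proportion to the number of high-resolution patches rather than its square.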

Training and Evaluation

To train CogAgent, the researchers constructed large-scale pre-training datasets focused on recognizing text in varied fonts and sizes, as well as GUI-specific elements and layouts. They evaluated the model on text-rich visual question-answering (VQA) benchmarks and on GUI navigation benchmarks for both PC (Mind2Web) and Android (AITW), where it achieved leading results.

The Future of AI Agents and VLMs

CogAgent is a significant step forward for AI agents and VLMs. Its high-resolution input and efficient architecture make it a promising base for future research and for applications that automate or assist digital interaction across devices.