Overview of Multimodal Autoregressive Pre-training of Large Vision Encoders

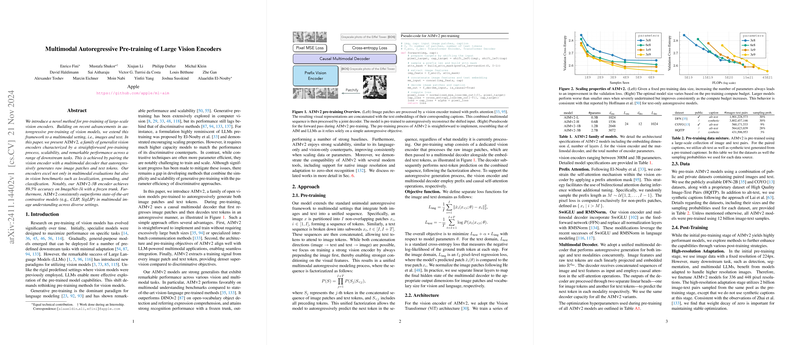

The paper introduces a method for pre-training large-scale vision encoders by extending autoregressive pre-training to a multimodal setting that covers both images and text. It builds on recent autoregressive frameworks for vision models to produce a family of generalist vision encoders. Each encoder is paired with a multimodal decoder that autoregressively generates both image patches and text tokens. The result is a simple pre-training recipe that scales well and performs strongly across a wide range of downstream tasks.

Methodology and Technical Contributions

The authors propose an autoregressive framework in which images and their captions are processed jointly. An image is first divided into non-overlapping patches, which are processed in sequence together with the caption's text tokens by a multimodal decoder. The decoder predicts the next text token and also the next image patch, which provides dense supervision over both modalities. The paper describes a pre-training algorithm that closely follows the techniques used for LLMs, emphasizing its simplicity and ease of implementation.
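The patch splitting and next-element targets described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the image is a small grayscale grid, the patches are placed before the text tokens in the sequence, and the names `patchify` and `next_element_targets` are hypothetical.

```python
from typing import List, Tuple, Union

Patch = List[float]
Element = Tuple[str, Union[Patch, int]]  # ("image", patch) or ("text", token_id)


def patchify(image: List[List[float]], patch: int) -> List[Patch]:
    """Split an H x W grid into non-overlapping patch x patch blocks.

    Blocks are visited in row-major order and each block is flattened.
    """
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            patches.append(
                [image[top + i][left + j] for i in range(patch) for j in range(patch)]
            )
    return patches


def next_element_targets(patches: List[Patch], tokens: List[int]) -> List[Tuple[int, Element]]:
    """Pair each sequence position with the element it should predict.

    Assumption: the sequence is [patches..., text tokens...]; position i
    is supervised with element i + 1, so every position but the last
    receives a target (the dense supervision over both modalities).
    """
    sequence: List[Element] = [("image", p) for p in patches]
    sequence += [("text", t) for t in tokens]
    return [(i, sequence[i + 1]) for i in range(len(sequence) - 1)]


# Usage: a 4 x 4 image with 2 x 2 patches and a two-token caption.
image = [[float(4 * r + c) for c in range(4)] for r in range(4)]
targets = next_element_targets(patchify(image, 2), [7, 8])
for position, (kind, value) in targets:
    print(position, kind, value)
```

Image positions receive a patch as their target and text positions receive a token id, so a single pass over the sequence supervises both modalities.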

The paper reports strong numerical results: the proposed method outperforms state-of-the-art contrastive models such as CLIP and SigLIP on multimodal image understanding tasks. A notable result is the reported 89.5% accuracy on the ImageNet-1k benchmark with a frozen trunk, that is, with the encoder weights kept fixed during evaluation.

Implications and Future Perspectives

From a theoretical standpoint, the framework advances the use of autoregressive models for multimodal inputs and shows the benefit of a unified training objective applied to the image and text modalities at the same time. This contrasts with many existing approaches that treat the modalities separately and often require complex alignment and integration strategies.

Practically, the paper demonstrates that vision models pre-trained with this framework adapt readily to a range of downstream vision and multimodal tasks, from open-vocabulary detection to visual question answering benchmarks. This makes the approach a versatile and efficient option for systems that combine text understanding with detailed visual inputs.

The research stresses the growing importance of scalability in model design, advocating architectures that not only improve performance but also scale efficiently in both data and model size. This scalability is evidenced by consistent performance gains as the amount of data and the number of model parameters increase.

Future work could explore how best to balance the text and image objectives during autoregressive pre-training, and investigate adaptations for higher image resolutions and broader datasets. Because the encoder could integrate directly with LLM-powered multimodal systems as an efficient and robust vision component, the framework lays promising groundwork for further advances in applications that bridge vision and language.
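One way to picture the balance between the text and image objectives is a single scalar weight on the image term. The sketch below is an illustration under assumptions rather than the paper's exact formulation: it assumes cross-entropy for text tokens, mean squared error for image patches, and a hypothetical `image_weight` parameter.

```python
import math
from typing import Sequence


def text_cross_entropy(logits: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Mean cross-entropy of the target token ids under softmax(logits)."""
    total = 0.0
    for row, target in zip(logits, targets):
        peak = max(row)  # subtract the max for numerical stability
        log_norm = peak + math.log(sum(math.exp(v - peak) for v in row))
        total += log_norm - row[target]
    return total / len(targets)


def patch_mse(predicted: Sequence[Sequence[float]], target: Sequence[Sequence[float]]) -> float:
    """Mean squared error over all values of all patches (assumed image loss)."""
    squared = [
        (p - t) ** 2
        for pred_patch, true_patch in zip(predicted, target)
        for p, t in zip(pred_patch, true_patch)
    ]
    return sum(squared) / len(squared)


def combined_loss(text_loss: float, image_loss: float, image_weight: float = 1.0) -> float:
    """Weighted sum of the two objectives; image_weight is a hypothetical knob."""
    return text_loss + image_weight * image_loss


# Usage: one text position over a two-word vocabulary, one two-value patch.
l_text = text_cross_entropy([[0.0, 0.0]], [0])
l_image = patch_mse([[1.0, 2.0]], [[0.0, 0.0]])
print(combined_loss(l_text, l_image, image_weight=0.5))
```

Raising `image_weight` shifts the training signal toward patch reconstruction, while lowering it shifts the signal toward caption prediction; tuning that trade-off is the kind of balance the paragraph above refers to.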

In conclusion, with a straightforward yet effective autoregressive multimodal pre-training strategy, the work contributes to both the theoretical understanding and the practical deployment of AI systems that combine vision and language.