VALOR: Vision-Audio-Language Omni-Perception Pretraining Model and Dataset

Introduction

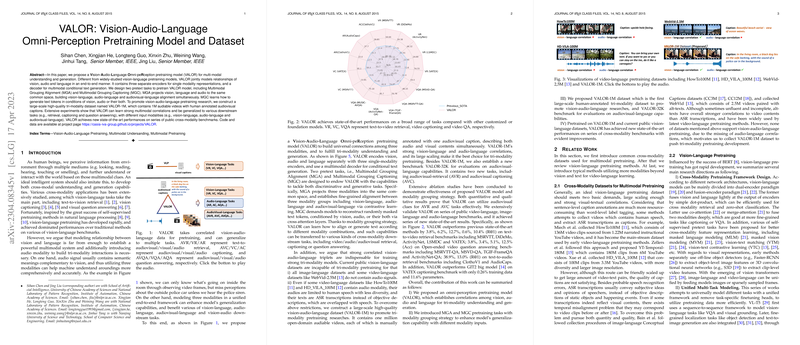

Multimedia understanding tasks that span vision, audio, and language require models that can both interpret and generate content across modalities. Recent work focuses predominantly on vision-language pretraining (VLP) and neglects the semantic information that audio provides. This gap motivates the Vision-Audio-Language Omni-peRception (VALOR) pretraining model, an end-to-end framework for tri-modality learning that captures multimodal correlations and generates text conditioned on visual input, auditory input, or both.

VALOR Model and Pretraining Tasks

VALOR combines three single-modality encoders for vision, audio, and language with a multimodal decoder for conditional text generation. It is pretrained with two tasks: Multimodal Grouping Alignment (MGA) and Multimodal Grouping Captioning (MGC). MGA projects vision, audio, and language into a shared space and uses contrastive learning to align text with three modality groups (vision-language, audio-language, and audiovisual-language). MGC, by contrast, trains the model to reconstruct randomly masked text tokens conditioned on vision, audio, or both, which builds generative capability across the same modality groups.
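The two pretraining objectives described above can be illustrated with a minimal, dependency-free sketch. It is not the authors' implementation: it assumes one pooled embedding per sample and modality, forms the audiovisual group by averaging normalized vision and audio embeddings, and uses a symmetric InfoNCE loss with a temperature of 0.07. The `[MASK]` token, the `mask_prob` parameter, and all function names are hypothetical choices made for illustration.

```python
import math
import random

MASK = "[MASK]"  # hypothetical mask token, chosen for this sketch


def normalize(v):
    """Scale a vector to unit length (small epsilon avoids division by zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + 1e-12) for x in v]


def diagonal_nll(sim):
    """Mean negative log-softmax of the matched (diagonal) entry in each row."""
    total = 0.0
    for i, row in enumerate(sim):
        m = max(row)
        log_z = m + math.log(sum(math.exp(s - m) for s in row))
        total += log_z - row[i]
    return total / len(sim)


def contrastive_loss(text, other, temperature=0.07):
    """Symmetric InfoNCE between text embeddings and one modality group."""
    t = [normalize(v) for v in text]
    o = [normalize(v) for v in other]
    sim = [[sum(a * b for a, b in zip(ti, oj)) / temperature for oj in o]
           for ti in t]
    sim_t = [list(col) for col in zip(*sim)]  # other-to-text direction
    return 0.5 * (diagonal_nll(sim) + diagonal_nll(sim_t))


def grouping_alignment_loss(text, vision, audio):
    """Align text with the vision, audio, and audiovisual groups."""
    # Assumption: the audiovisual group is the mean of the normalized
    # vision and audio embeddings; the real model may fuse them differently.
    audiovisual = [
        [(a + b) / 2 for a, b in zip(normalize(v), normalize(u))]
        for v, u in zip(vision, audio)
    ]
    groups = {"T-V": vision, "T-A": audio, "T-AV": audiovisual}
    losses = {name: contrastive_loss(text, emb) for name, emb in groups.items()}
    losses["total"] = sum(losses.values()) / 3
    return losses


def mask_caption(tokens, mask_prob, rng):
    """Randomly mask caption tokens; targets hold the originals to reconstruct."""
    masked, targets = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets.append(tok)
        else:
            masked.append(tok)
            targets.append(None)  # unmasked positions carry no loss
    return masked, targets
```

In a captioning step under these assumptions, the masked caption would be fed to the decoder together with vision features, audio features, or both, and the loss would be computed only at positions where `targets` is not `None`. Averaging the three group losses is one simple way to weight them equally.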

VALOR-1M and VALOR-32K Datasets

To support research in vision-audio-language pretraining, a large-scale dataset named VALOR-1M is constructed. It consists of 1M videos with manually annotated audiovisual captions that describe both auditory and visual content. Alongside it, the VALOR-32K benchmark is introduced for evaluating audiovisual-language capabilities, including the new tasks of audiovisual retrieval (AVR) and audiovisual captioning (AVC). Together, these datasets provide a foundation for measuring how well models like VALOR learn across modalities.
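Retrieval tasks are commonly scored with Recall@K, the fraction of queries whose correct match appears among the top K results. The sketch below shows that metric for a text-to-audiovisual similarity matrix. It assumes that query `i` matches candidate `i` and that higher scores mean better matches. The function name and the pairing convention are illustrative assumptions, not details taken from the benchmark.

```python
def recall_at_k(similarity, k):
    """Fraction of queries whose matching candidate (same index) is in the top k.

    similarity[i][j] is the score between query i and candidate j.
    """
    hits = 0
    for i, row in enumerate(similarity):
        # Candidate indices ordered from highest to lowest score.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)


# Toy example: three captions scored against three videos.
scores = [
    [0.9, 0.1, 0.0],
    [0.8, 0.7, 0.1],
    [0.2, 0.3, 0.4],
]
r1 = recall_at_k(scores, 1)
r2 = recall_at_k(scores, 2)
```

In the toy matrix, the second caption ranks its matching video second, so it counts as a hit at K=2 but not at K=1.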

Experimental Results

Extensive experiments show that VALOR learns strong multimodal correlations and generalizes to a range of cross-modality tasks, including retrieval, captioning, and question answering, with different input modalities. On public benchmarks, VALOR outperforms previous state-of-the-art methods by significant margins in tasks such as text-video retrieval and video question answering.

Conclusion and Future Work

The VALOR model, together with the VALOR-1M and VALOR-32K datasets, sets a new standard for vision-audio-language pretraining research. The model and datasets address the need to integrate the audio modality into multimodal understanding and generation, and they underscore the importance of aligning audio, vision, and text for comprehensive multimedia analysis. Future work may extend the VALOR framework to more modalities and more complex tasks, for example by using unsupervised methods to expand VALOR-1M or by adding generative modeling for the vision and audio modalities.