Broadening the Visual Capabilities of Vision-LLMs Through BRAVE

Introduction to BRAVE

Recent advancements in Vision-LLMs (VLMs) have significantly improved their performance across a variety of tasks requiring both visual and textual understanding, such as image captioning and visual question answering (VQA). These improvements are primarily due to enhancements in vision encoders and LLMs, which are then combined using various bridging techniques. Despite these achievements, VLMs continue to face challenges arising from the limitations of vision encoders, including their "blindness" to certain image features and susceptibility to visual hallucinations.

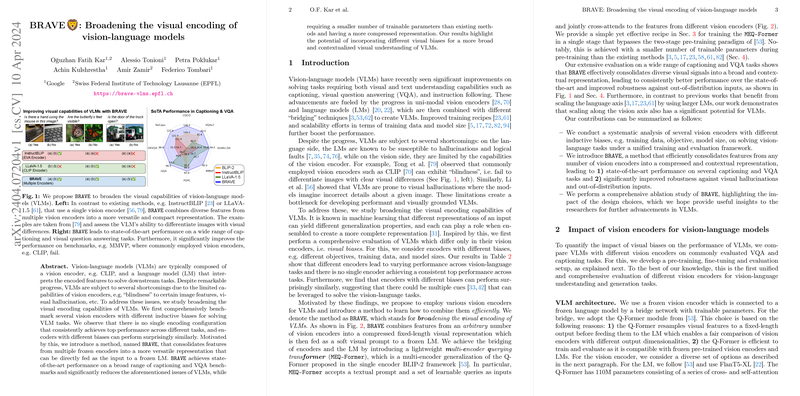

In response to these limitations, the paper introduces BRAVE (Broadening the Visual Encoding of Vision-LLMs), a method designed to leverage the diverse features of multiple vision encoders. BRAVE consolidates these features into a versatile representation that can be fed directly to a frozen language model (LM). It achieves state-of-the-art performance on a range of captioning and VQA benchmarks while requiring fewer trainable parameters and producing a more compact representation.

Key Contributions

- A comprehensive benchmark of several vision encoders with different inductive biases, highlighting how their performance varies across vision-language tasks and indicating that no single encoder consistently delivers top performance.

- The introduction of BRAVE, an effective approach to aggregating features from multiple vision encoders into a single, compressed, and contextual representation. BRAVE demonstrates improved performance and robustness across various benchmarks, signifying a more generalized and versatile visual understanding.

- A detailed ablation study of BRAVE, shedding light on the impact of its design choices and offering insights potentially beneficial for future research on VLMs.

Broadening Visual Encoding in VLMs

The paper begins with a comprehensive evaluation of VLMs configured with different vision encoders, revealing that no single encoder uniformly excels across diverse tasks. This observation, along with the finding that encoders with differing biases can yield surprisingly similar results, motivates the development of BRAVE. BRAVE combines the strengths of various vision encoders to produce a more comprehensive visual representation, mitigating the limitations of any individual encoder.

Methodology: BRAVE in Detail

BRAVE operates by integrating features from an arbitrary set of vision encoders using a Multi-Encoder Querying Transformer (MEQ-Former). This mechanism resamples and refines visual features into a compact form, effectively bridging the gap between vision encoders and the LM. The innovation lies in its efficient handling of diverse visual signals and its ability to maintain a smaller footprint in terms of trainable parameters compared to previous methods.
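To make the resampling idea concrete, here is a minimal NumPy sketch of a multi-encoder querying step, written under stated assumptions rather than taken from the paper's code. It assumes each encoder's tokens are linearly projected to a shared width, concatenated along the sequence axis, and then read by a fixed set of learnable queries through a single cross-attention layer; the result is projected into the LM's embedding width. The function name `meq_former_sketch`, all weight names, and the token counts and widths used below are hypothetical. The actual MEQ-Former is a full multi-layer transformer and omits nothing of the sort; this sketch deliberately leaves out stacked layers, feed-forward blocks, normalization, and any text conditioning the real module may use.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def meq_former_sketch(encoder_features, proj_weights, queries, w_q, w_k, w_v, w_out):
    """Hypothetical single-layer stand-in for a multi-encoder querying transformer.

    encoder_features: list of (n_tokens_i, d_i) arrays, one per frozen vision encoder.
    proj_weights:     list of (d_i, d_model) linear maps into a shared width.
    queries:          (n_queries, d_model) learnable query embeddings.
    w_q, w_k, w_v:    (d_model, d_model) attention projections.
    w_out:            (d_model, d_lm) map into the frozen LM's embedding width.
    Returns a (n_queries, d_lm) array: a fixed-length visual prefix for the LM.
    """
    # Project every encoder to the shared width, then concatenate along the sequence axis.
    tokens = np.concatenate([f @ w for f, w in zip(encoder_features, proj_weights)], axis=0)
    q = queries @ w_q
    k = tokens @ w_k
    v = tokens @ w_v
    # Each query attends over the tokens of ALL encoders at once.
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (n_queries, total_tokens)
    resampled = queries + attn @ v  # residual connection keeps the query identity
    return resampled @ w_out


# Usage with random stand-in features from three hypothetical encoders.
rng = np.random.default_rng(0)
d_model, d_lm, n_queries = 64, 128, 32
feats = [rng.normal(size=(256, 1024)), rng.normal(size=(576, 768)), rng.normal(size=(196, 1408))]
projs = [rng.normal(size=(f.shape[1], d_model)) / np.sqrt(f.shape[1]) for f in feats]
queries = rng.normal(size=(n_queries, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) / np.sqrt(d_model) for _ in range(3))
w_out = rng.normal(size=(d_model, d_lm)) / np.sqrt(d_model)

out = meq_former_sketch(feats, projs, queries, w_q, w_k, w_v, w_out)
print(out.shape)  # (32, 128)
```

The point of the design is visible in the shapes: 256 + 576 + 196 = 1028 visual tokens from three encoders are compressed into 32 query outputs. The LM therefore receives the same short prefix regardless of how many encoders are added, and only the projections, queries, and attention weights would need training while the encoders and LM stay frozen.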

Empirical Validation and Analysis

Extensive experiments demonstrate BRAVE's superior performance across various captioning and VQA tasks. Notably, it improves the robustness of VLMs to challenges such as out-of-distribution inputs and visual hallucinations, areas where existing models have historically struggled. The paper also presents an ablation study of BRAVE's design choices, confirming the method's effectiveness and efficiency.

Implications and Future Research Directions

The findings underscore the potential of broadening the visual encoding within VLMs as a means to enhance their capability and performance. The success of BRAVE suggests that future work could explore incorporating a wider array of vision encoders, further diversifying the visual representations that VLMs can understand and interpret. Moreover, the approach highlights the significance of scaling along the vision axis, encouraging future research to balance the scaling across both vision and language components to achieve optimal VLM performance.

In conclusion, BRAVE represents a significant step forward in addressing the limitations of current VLMs, offering a more generalized and robust method for integrating visual and linguistic information. This work lays the foundation for further advancements in the field, pointing towards a future where VLMs can achieve even greater understanding and interpretation of the visual world.