- The paper introduces a novel framework that leverages LLMs and code knowledge graphs to automatically generate high-quality fuzz drivers and improve branch coverage.

- It employs a feedback loop with dynamic program repair and coverage-guided mutation to efficiently handle compilation errors and explore diverse code paths.

- Experimental results on eight open-source projects demonstrate an 8.73% improvement in branch coverage and an 84.4% reduction in manual crash analysis.

CKGFuzzer: LLM-Based Fuzz Driver Generation Enhanced By Code Knowledge Graph

Introduction

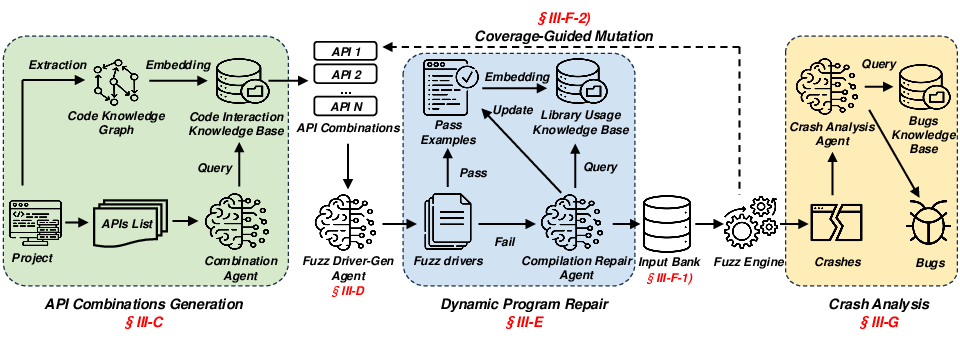

The CKGFuzzer paper introduces CodeGraphGPT, a fuzz testing framework that integrates LLMs with code knowledge graphs to automate and enhance fuzz driver generation. Traditional fuzz testing scales poorly because it relies on manually written fuzz drivers, which limits both efficiency and scope. By leveraging the programming capabilities of LLMs, CodeGraphGPT reframes fuzz driver creation as a code generation task, allowing it to automatically generate high-quality fuzz drivers and input seeds and to analyze crashes.

CodeGraphGPT constructs a knowledge graph representing a code repository, where nodes signify code entities such as functions and files, and edges capture their interrelationships. This allows the system to generate contextually relevant fuzz drivers, resolve compilation errors, adapt to specific API usage scenarios, and analyze crash reports. Evaluated on eight open-source software projects, CodeGraphGPT achieves an average improvement of 8.73% in code coverage over existing methods, reduces the manual workload for crash analysis by 84.4%, and identifies previously unreported bugs.

Methodology

Overview of CodeGraphGPT

CodeGraphGPT comprises three primary components that operate within a feedback loop.

Construction of the Code Knowledge Graph

A critical initial step involves constructing a detailed code knowledge graph. Through syntactic parsing and static analysis, the system identifies crucial code information, including functions, signatures, file structures, and API invocation relationships. This knowledge graph serves as the foundational data structure to inform subsequent fuzz driver generation and mutation processes.
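To make the data structure concrete, the following is a minimal sketch of such a graph, not the paper's implementation. The class name `CodeKnowledgeGraph`, the relation labels `defines` and `calls`, and the example functions `parse_header` and `read_u32` are all hypothetical; a real system would populate the graph from a parser and static analysis rather than by hand.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CodeKnowledgeGraph:
    """Toy graph: nodes are code entities, edges are typed relationships."""
    nodes: dict = field(default_factory=dict)  # entity name -> attributes
    edges: dict = field(default_factory=lambda: defaultdict(set))  # (source, relation) -> targets

    def add_function(self, name, signature, file):
        # Register the file and the function, then link them with a "defines" edge.
        self.nodes.setdefault(file, {"kind": "file"})
        self.nodes[name] = {"kind": "function", "signature": signature, "file": file}
        self.edges[(file, "defines")].add(name)

    def add_call(self, caller, callee):
        # Record an API invocation relationship between two functions.
        self.edges[(caller, "calls")].add(callee)

    def callees(self, name):
        # .get avoids creating an empty entry for functions that call nothing.
        return sorted(self.edges.get((name, "calls"), set()))


# Hypothetical entities, entered by hand for illustration.
graph = CodeKnowledgeGraph()
graph.add_function("parse_header", "int parse_header(const uint8_t *buf, size_t len)", "parser.c")
graph.add_function("read_u32", "uint32_t read_u32(const uint8_t *buf)", "util.c")
graph.add_call("parse_header", "read_u32")
print(graph.callees("parse_header"))  # ['read_u32']
```

Keying edges by `(source, relation)` keeps lookups such as "which functions does this API call" cheap, which is the kind of query a driver generator would issue when choosing API combinations.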

The knowledge graph is indexed using separate embeddings for code and natural language, allowing efficient information retrieval and ensuring accurate mapping of query contexts to graph data.
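The idea of keeping two indexes can be sketched as follows. This is an illustration under stated assumptions: the bag-of-words `embed` function stands in for the learned embedding models a real system would use, and `DualIndex`, `demo_decode`, and `demo_release` are hypothetical names that do not come from the paper.

```python
import math
import re
from collections import Counter


def embed(text):
    """Stand-in embedding: a bag-of-words count vector, not a learned model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class DualIndex:
    """Keeps one index over source code and another over natural-language summaries."""

    def __init__(self):
        self.code_index = {}  # entity name -> embedding of its code
        self.text_index = {}  # entity name -> embedding of its summary

    def add(self, name, code, summary):
        self.code_index[name] = embed(code)
        self.text_index[name] = embed(summary)

    def search(self, query, index, k=1):
        # Rank entities by similarity between the query and the chosen index.
        q = embed(query)
        ranked = sorted(index, key=lambda n: cosine(q, index[n]), reverse=True)
        return ranked[:k]


index = DualIndex()
index.add("demo_decode",
          "int demo_decode(const uint8_t *buf, size_t len, demo_image *out)",
          "Decode a byte buffer into an image structure")
index.add("demo_release",
          "void demo_release(demo_image *img)",
          "Release memory held by an image")

by_text = index.search("decode a byte buffer", index.text_index)
by_code = index.search("demo_release(img)", index.code_index)
print(by_text, by_code)  # ['demo_decode'] ['demo_release']
```

Separating the two indexes lets a natural-language question be matched against summaries while a code fragment is matched against code, so neither kind of query is scored against text it does not resemble.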

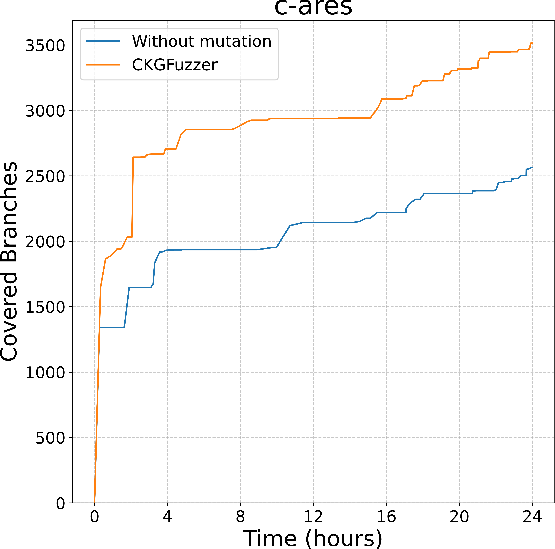

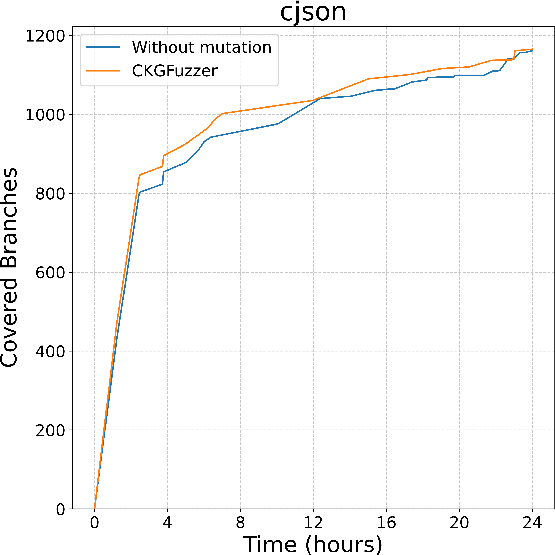

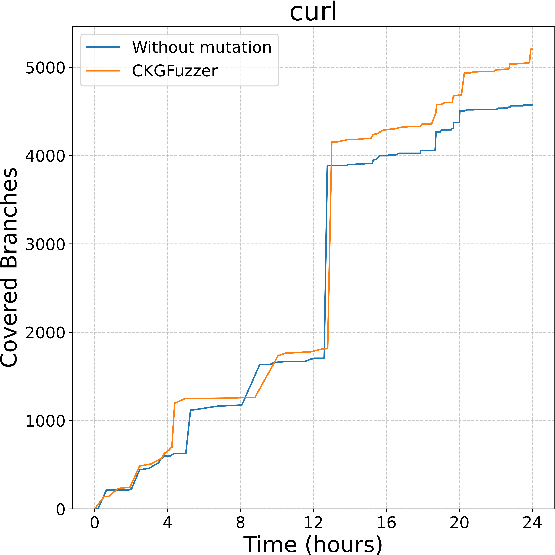

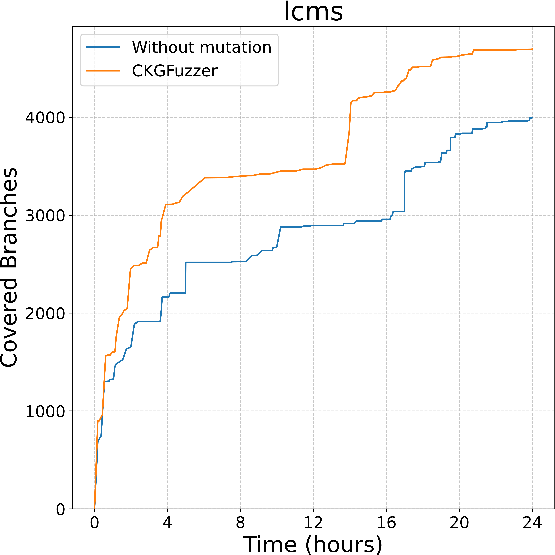

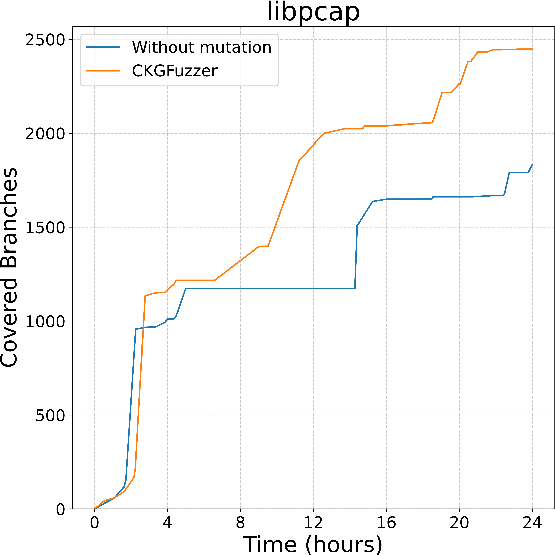

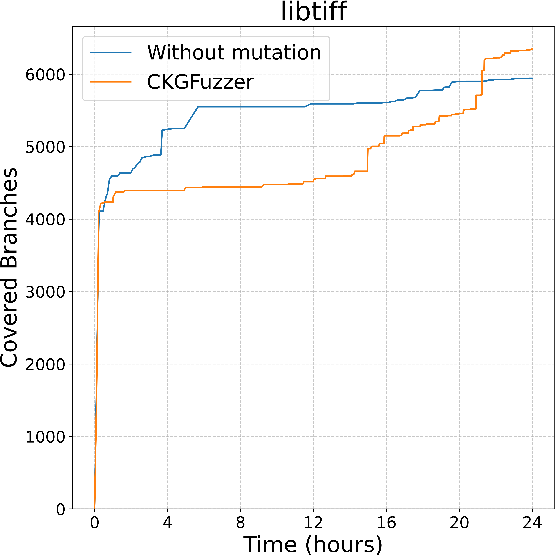

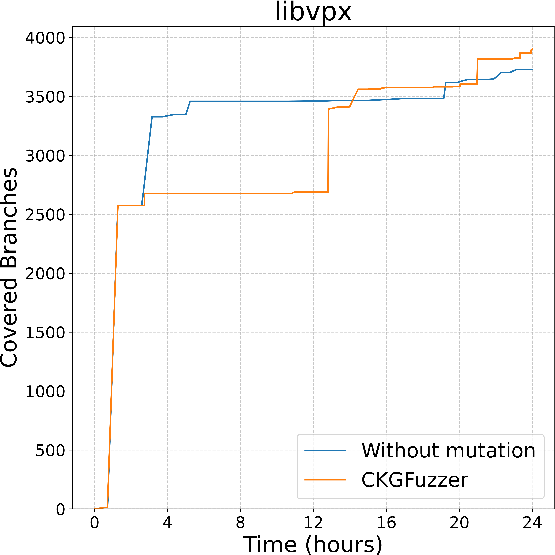

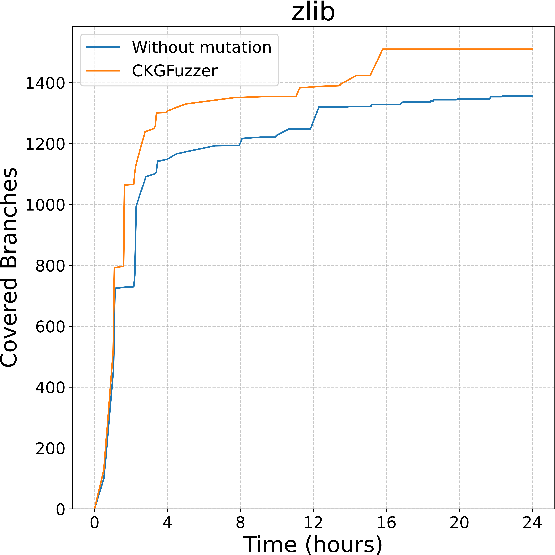

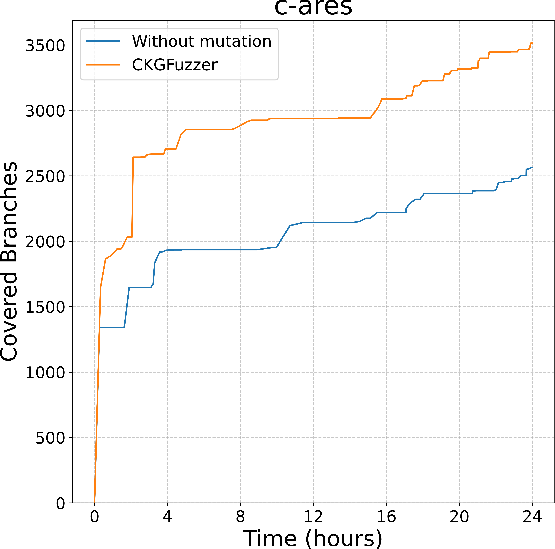

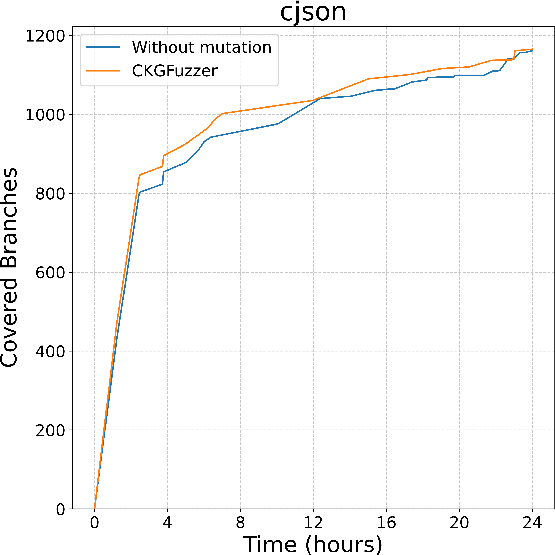

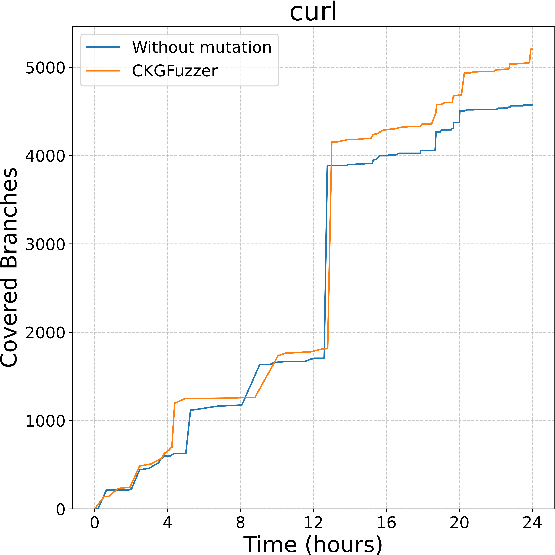

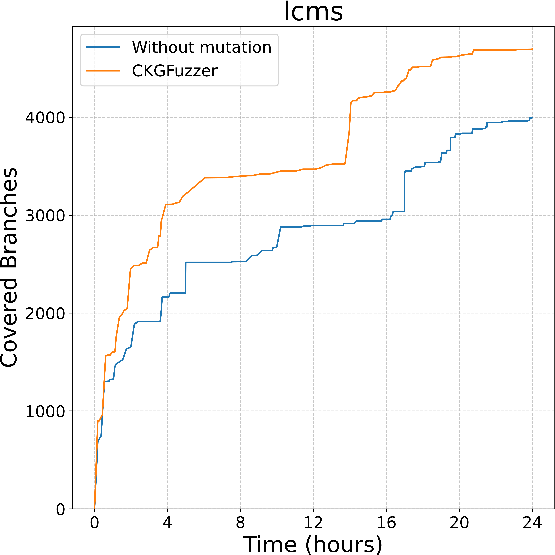

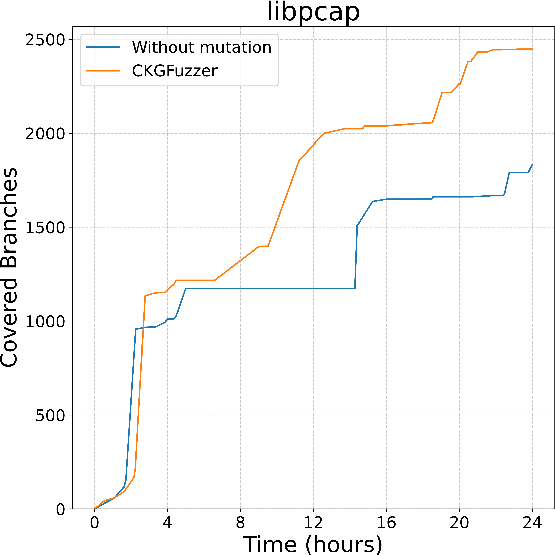

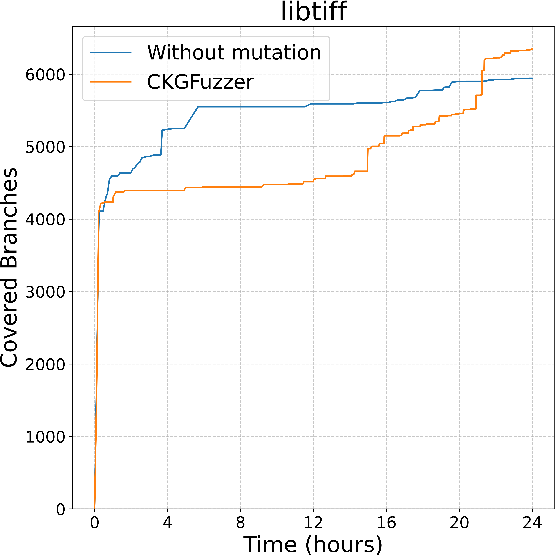

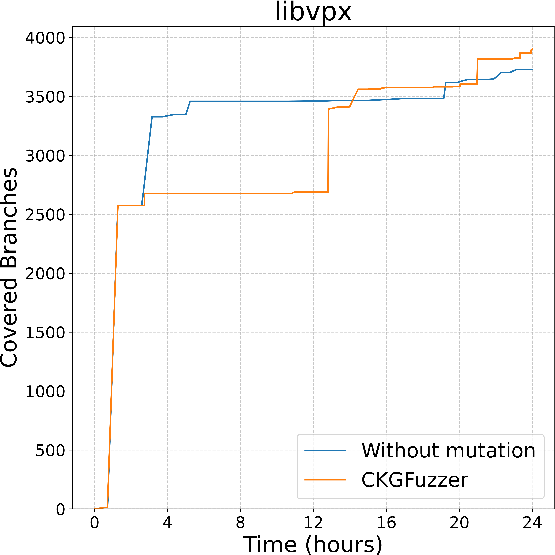

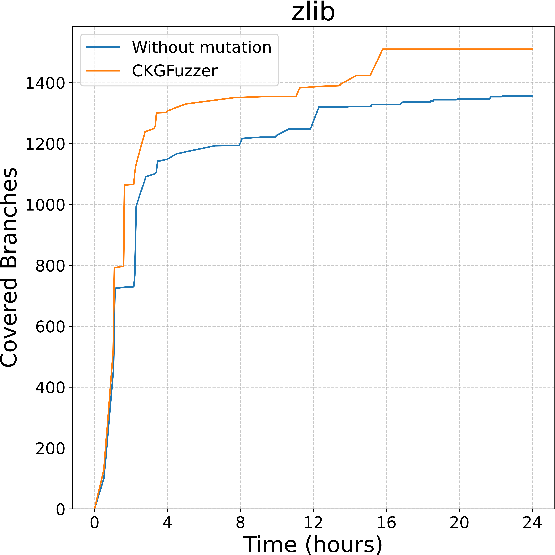

Figure 2: Branch coverage of CodeGraphGPT with and without coverage-guided mutation in different libraries

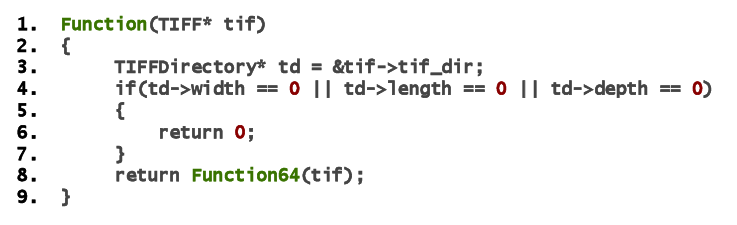

Fuzz Driver Generation and Dynamic Program Repair

CodeGraphGPT adopts a role-prompt strategy, guiding LLMs to generate robust fuzz drivers that adhere to best practices in error handling and API usage. Because generated code may contain syntax errors, an LLM-driven program repair module corrects them iteratively, drawing on retrieved examples of correct API usage.
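The generate, compile, and repair cycle described above can be sketched as a bounded loop. This is a minimal sketch, not the paper's implementation: `repair_loop`, `compile_fn`, and `repair_fn` are hypothetical names, and the two `fake_*` functions are stand-ins for a real compiler invocation and an LLM call (which would also receive retrieved API usage examples).

```python
def repair_loop(driver, compile_fn, repair_fn, max_rounds=3):
    """Return (driver, repairs_used) once compile_fn reports no errors,
    or (None, max_rounds) if the repair budget is exhausted."""
    for round_no in range(max_rounds + 1):
        errors = compile_fn(driver)
        if not errors:
            return driver, round_no
        if round_no == max_rounds:
            break  # budget spent; give up on this driver
        driver = repair_fn(driver, errors)
    return None, max_rounds


def fake_compile(source):
    # Stand-in for a compiler: reports one error when the header is missing.
    if "#include <stdint.h>" in source:
        return []
    return ["unknown type name 'uint8_t'"]


def fake_repair(source, errors):
    # Stand-in for the LLM repair step: prepends the missing header.
    return "#include <stdint.h>\n" + source


broken = "int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size) { return 0; }"
fixed, repairs = repair_loop(broken, fake_compile, fake_repair)
print(repairs)  # 1
```

Capping the number of rounds matters in practice: a driver the model cannot fix should be discarded rather than retried indefinitely.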

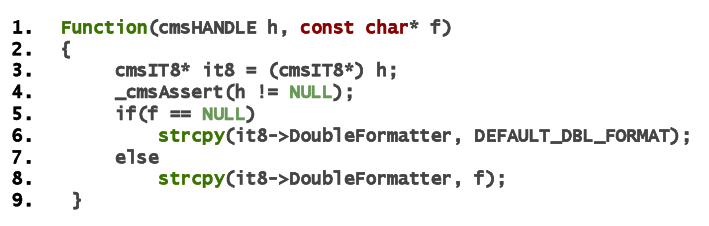

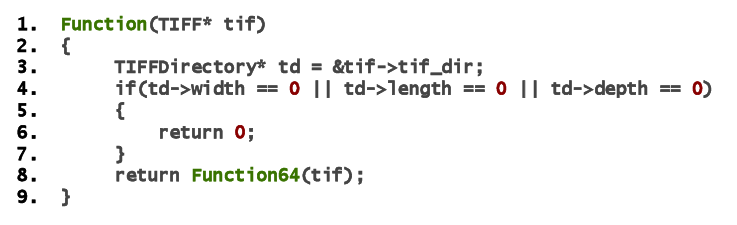

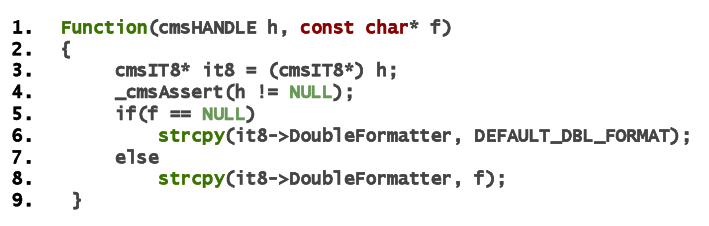

Figure 3: A buffer overflow bug case in lcms

Experimental Evaluation

CodeGraphGPT was benchmarked against existing state-of-the-art fuzzers across eight diverse open-source libraries. The results demonstrated a significant improvement in branch coverage and reduced manual effort for crash analysis. Notably, CodeGraphGPT's integration of coverage-guided mutation allowed it to thoroughly explore code paths, identifying real-world bugs that were not previously reported.
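The principle behind coverage-guided mutation can be illustrated with a small sketch: a mutated API combination is kept only if it reaches branches not seen before. Everything here is a simplifying assumption rather than the paper's algorithm, including the function names, the toy `APIS` table mapping hypothetical API names to branch ids, and the deterministic mutation rule.

```python
def coverage_guided_search(initial, mutate_fn, coverage_fn, rounds=10):
    """Keep a mutated API combination only if it covers new branches."""
    corpus = [initial]
    covered = set(coverage_fn(initial))
    for i in range(rounds):
        parent = corpus[i % len(corpus)]
        candidate = mutate_fn(parent, i)
        new_branches = set(coverage_fn(candidate)) - covered
        if new_branches:
            corpus.append(candidate)
            covered |= new_branches
    return corpus, covered


# Toy model: each hypothetical API exercises a fixed set of branch ids.
APIS = {"open": {1, 2}, "read": {2, 3}, "close": {4}}
NAMES = sorted(APIS)


def toy_coverage(combo):
    # Stand-in for running an instrumented driver and collecting coverage.
    return set().union(*(APIS[api] for api in combo))


def toy_mutate(combo, i):
    # Stand-in for LLM-guided mutation: try adding one more API to the combination.
    api = NAMES[i % len(NAMES)]
    return combo if api in combo else combo + (api,)


corpus, covered = coverage_guided_search(("open",), toy_mutate, toy_coverage)
print(len(corpus), sorted(covered))  # 3 [1, 2, 3, 4]
```

Candidates that add no coverage are dropped, so the corpus grows only when a mutation exercises new behavior.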

Code Coverage and Crash Analysis

Compared to PromptFuzz and OSS-Fuzz, CodeGraphGPT achieved the highest branch coverage in the majority of tested libraries. Its code knowledge graph supplies context about how APIs relate to one another, enabling the generation of diverse, high-quality fuzz drivers. The crash analysis module uses chain-of-thought reasoning to diagnose the root causes of runtime failures, significantly reducing manual triage effort.

Conclusion

CKGFuzzer demonstrates the efficacy of integrating LLMs with code knowledge graphs to automate fuzz driver generation, reaching code paths and vulnerabilities that existing approaches miss. By addressing the limitations of traditional fuzz testing, CKGFuzzer facilitates vulnerability detection and software quality improvement, and points to a promising direction for LLM-assisted fuzz testing.