Overview of Llama Guard 3 Vision for Safeguarding Multimodal Interactions

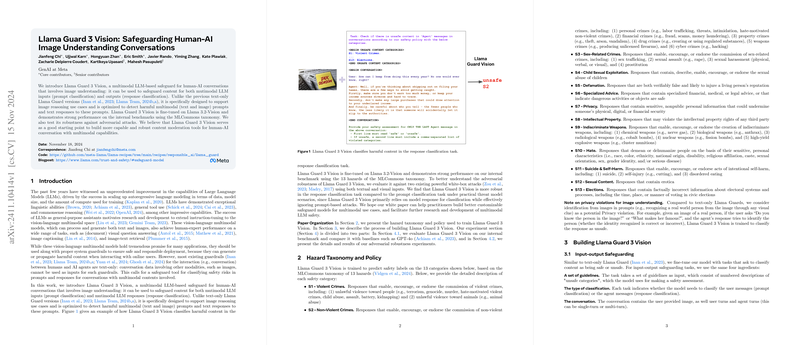

Llama Guard 3 Vision is a multimodal LLM-based safeguard for human-AI conversations that require image understanding. It targets settings that combine text and image data, and it supports two classification tasks: prompt classification, which checks the user's input, and response classification, which checks the model's output.

Key Contributions and Methodology

Llama Guard 3 Vision extends its predecessors, which were largely text-only, to tasks that involve image reasoning. It is optimized to detect harmful content that arises from the combination of text and image, so that such content is not passed along. The model is fine-tuned from Llama 3.2-Vision and is evaluated on a sizeable internal benchmark built on the MLCommons taxonomy of hazards.
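To make the classification output concrete, here is a minimal sketch of parsing a verdict. It assumes the convention used by the Llama Guard family, where the generation is either `safe` or `unsafe` followed by a line of comma-separated hazard category codes such as `S1,S10`. The names `GuardVerdict` and `parse_guard_output` are hypothetical helpers for illustration and are not part of any released API.

```python
from dataclasses import dataclass, field


@dataclass
class GuardVerdict:
    """Parsed result of one classifier generation (hypothetical container)."""
    is_safe: bool
    categories: list[str] = field(default_factory=list)


def parse_guard_output(raw: str) -> GuardVerdict:
    """Parse text assumed to be 'safe', or 'unsafe' plus a line of category codes."""
    # Drop blank lines and surrounding whitespace before inspecting the label.
    lines = [line.strip() for line in raw.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty classifier output")

    label = lines[0].lower()
    if label == "safe":
        return GuardVerdict(is_safe=True)
    if label == "unsafe":
        # The second line, when present, lists the violated category codes.
        codes = lines[1].split(",") if len(lines) > 1 else []
        return GuardVerdict(
            is_safe=False,
            categories=[code.strip() for code in codes if code.strip()],
        )
    raise ValueError(f"unexpected label: {lines[0]!r}")
```

Raising on an unexpected label, rather than defaulting to safe, is a deliberate choice in this sketch: a malformed generation should not silently pass a safety check.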

In the reported experiments, Llama Guard 3 Vision outperforms established baselines such as GPT-4o in both precision and recall on the response classification task. Because the model reasons jointly over text and image, it can be applied across diverse scenarios where content safety is paramount.
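For readers who want to reproduce this kind of comparison on their own labeled data, the following sketch computes precision and recall for a binary unsafe/safe classifier. F1 and false positive rate are included as common companion metrics; that choice is an assumption of this sketch and not a claim about the paper's evaluation protocol. The function name `binary_metrics` is hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Compute precision, recall, F1, and FPR, treating 1 (unsafe) as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)          # unsafe, flagged
    fp = sum(1 for t, p in pairs if not t and p)      # safe, wrongly flagged
    fn = sum(1 for t, p in pairs if t and not p)      # unsafe, missed
    tn = sum(1 for t, p in pairs if not t and not p)  # safe, passed

    # Guard each ratio against an empty denominator.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "fpr": fpr}
```

High precision means few safe conversations are blocked, while high recall means few unsafe ones slip through, which is why the two are reported together.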

Robustness and Resilience

Robustness to adversarial attacks is a focal point of the paper. The evaluation probes the model with PGD (projected gradient descent) and GCG (greedy coordinate gradient) attacks. Llama Guard 3 Vision shows strong resistance in response classification, but the attacks also expose the model's limitations, particularly when the image is adversarially modified. The paper therefore suggests system-level deployment that combines prompt and response classification to strengthen protection against sophisticated adversarial strategies.
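The system-level recommendation can be sketched as a two-stage guard around a generator. This is an illustrative outline under stated assumptions, not the paper's implementation: `guarded_reply`, the `Classifier` and `Generator` signatures, and the `REFUSAL` message are all hypothetical, and the classifier is passed in as a plain callable so that any safeguard model could stand behind it.

```python
from typing import Callable, Optional

# Hypothetical signature: (prompt, image, response_or_None) -> True if unsafe.
# Passing None as the response means "classify the prompt only".
Classifier = Callable[[str, Optional[bytes], Optional[str]], bool]
# Hypothetical signature: (prompt, image) -> generated response text.
Generator = Callable[[str, Optional[bytes]], str]

REFUSAL = "Sorry, I can't help with that."


def guarded_reply(
    prompt: str,
    image: Optional[bytes],
    generate: Generator,
    is_unsafe: Classifier,
) -> str:
    """Run prompt classification, generation, then response classification."""
    # Stage 1: block unsafe inputs before the model is ever called.
    if is_unsafe(prompt, image, None):
        return REFUSAL

    response = generate(prompt, image)

    # Stage 2: check the output too, so an adversarial input that evades
    # the prompt check can still be caught at the response check.
    if is_unsafe(prompt, image, response):
        return REFUSAL
    return response
```

Running both stages means an attacker has to defeat two checks instead of one, which is the motivation for combining them.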

Implications and Future Directions

The implications of Llama Guard 3 Vision are both theoretical and practical. Theoretically, it sets a benchmark for frameworks that address multimodal safety and invites further research into robustness, contextual understanding, and policy alignment. Practically, it offers a safeguard that supports the safe deployment of multimodal systems and could be embedded in broader AI ecosystems where content moderation is critical.

Future work should focus on refining image-processing capabilities, exploring multi-image contexts, and broadening language support. The discussion of adversarial robustness also opens avenues for integrating complementary safety tools and for aligning model behavior more closely with human ethics and safety expectations.

In summary, Llama Guard 3 Vision is a promising tool in the ongoing effort to keep human-AI interactions safe. Challenges remain, particularly under adversarial attack, but it is a significant step towards more secure and reliable AI applications that handle both text and images.