- The paper presents Conversational Prompt Engineering as an innovative method to simplify prompt creation using a conversational interface.

- It employs iterative feedback and unlabeled data to transition from zero-shot to few-shot prompts, enhancing task-specific instructions.

- A user study on summarization tasks supports CPE's effectiveness: its zero-shot prompts were ranked comparably to their few-shot counterparts, which points to improved usability in enterprise applications.

Conversational Prompt Engineering

Conversational Prompt Engineering (CPE) is an approach to crafting prompts for LLMs that aims to reduce the burden of traditional Prompt Engineering (PE). The paper presents CPE as a user-friendly tool for generating personalized prompts without labeled data or initial seed prompts. A chat model acts as a mediator, guiding users through a conversational interface to articulate their requirements, which simplifies prompt creation across diverse applications.

Motivation and Core Insights

Prompt engineering has become essential to using LLMs effectively in domains such as customer support automation, retrieval-augmented generation, and text summarization. The difficulty is that designing a prompt that captures nuanced preferences and desired outcomes demands considerable expertise, which often limits wider adoption. CPE aims to streamline this process through three foundational insights:

- Task Clarification: Users often struggle to precisely define the task and expectations due to vague objectives. Advanced chat models are positioned to assist users in better articulating these expectations, enhancing prompt clarity for desired outputs.

- Data-specific Dimensions: Unlabeled input texts serve as fertile ground for LLMs to suggest facets of potential output preferences, thus helping users refine requirements.

- Feedback Utilization: User feedback on model-generated outputs is leveraged to iteratively refine both the instruction and the outputs themselves, aligning the generated results with user preferences.

These insights collectively empower CPE to transition from zero-shot (ZS) prompts to few-shot (FS) prompts that encapsulate user-approved examples, thereby catering to enterprise scenarios involving repetitive tasks with large datasets.
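The move from a ZS prompt to an FS prompt described above can be sketched as follows. This is a minimal illustration, not the paper's implementation: the `PromptState` class, its method names, and the `Text:`/`Output:` template are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class PromptState:
    """Hypothetical container for the instruction and user-approved examples."""
    instruction: str
    approved_examples: list[tuple[str, str]] = field(default_factory=list)

    def zero_shot(self, text: str) -> str:
        # ZS prompt: the refined instruction followed by the new input only.
        return f"{self.instruction}\n\nText: {text}\nOutput:"

    def approve(self, text: str, output: str) -> None:
        # Record an (input, output) pair once the user has accepted the output.
        self.approved_examples.append((text, output))

    def few_shot(self, text: str) -> str:
        # FS prompt: the same instruction, with approved pairs placed
        # before the new input as demonstrations.
        shots = "".join(
            f"Text: {t}\nOutput: {o}\n\n" for t, o in self.approved_examples
        )
        return f"{self.instruction}\n\n{shots}Text: {text}\nOutput:"


state = PromptState("Summarize the text in one sentence.")
state.approve("First input text.", "Approved summary.")
print(state.few_shot("A new input text."))
```

With no approved examples, `few_shot` produces the same string as `zero_shot`, so in this sketch the FS prompt is simply the ZS prompt extended with whatever the user has accepted so far.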

System Design and Implementation

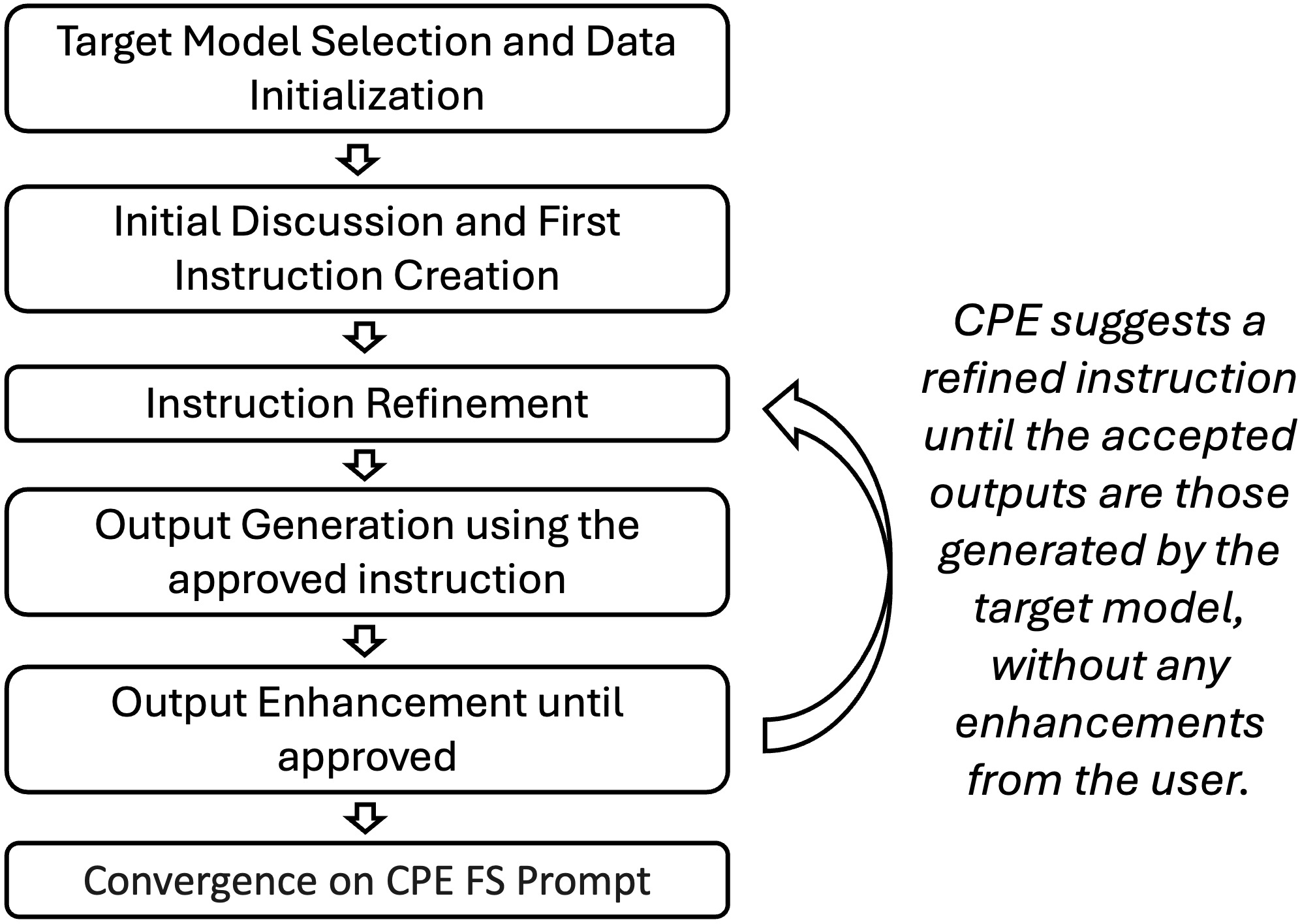

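CPE operates as a three-party chat among the user, the chat model, and an orchestration system. The process moves through several iterative stages, from clarifying the task and analyzing the user's unlabeled data to refining the instruction and the model outputs based on user feedback.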

The system manages context dynamically: context filtering keeps interactions efficient despite LLM context-length limits. Chain of Thought (CoT) prompting is used in the more complex phases, helping the model interpret user comments accurately before a refined instruction is produced through API calls.
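One way to picture context filtering in a three-party chat is sketched below. The summary does not specify the filtering rules CPE actually uses, so everything here is an assumption: the `Message` type, the per-message `stage` tag, the rule of dropping system messages from earlier stages, and the character budget standing in for a token limit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    role: str     # "user", "assistant", or "system" (the orchestrator)
    content: str
    stage: str    # hypothetical tag for the stage that produced the message


def filter_context(history: list[Message], current_stage: str,
                   max_chars: int) -> list[Message]:
    """Return the slice of the history to send to the chat model."""
    # Assumed rule 1: system messages from other stages are no longer needed.
    kept = [m for m in history
            if m.role != "system" or m.stage == current_stage]

    # Assumed rule 2: keep the most recent messages that fit the budget.
    selected: list[Message] = []
    used = 0
    for m in reversed(kept):
        if used + len(m.content) > max_chars:
            break
        selected.append(m)
        used += len(m.content)
    selected.reverse()  # restore chronological order
    return selected


history = [
    Message("system", "Ask the user about the task.", "intro"),
    Message("user", "I need summaries of support tickets.", "intro"),
    Message("system", "Refine the instruction from feedback.", "refine"),
    Message("user", "Make the summaries shorter.", "refine"),
]
for m in filter_context(history, "refine", max_chars=200):
    print(m.role, "|", m.content)
```

A real system would more likely count tokens than characters and might summarize older turns instead of dropping them; the sketch only shows the general shape of trimming a shared history before each model call.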

Figure 2: Messages exchanged between the different actors in a chat with CPE, starting from (a) the user approves a suggested prompt, until (b) user approves the output of the first example. System messages are abbreviated.

User Study and Evaluation

To evaluate CPE's efficacy, the authors conducted a user study on summarization tasks with 12 experienced PE practitioners. Participants rated CPE on several dimensions, including satisfaction with the resulting instruction, the benefit of the conversation, the pleasantness of the chat, and convergence time. The ratings indicate that CPE can produce prompts that meet user requirements.

Participants found value in the intuitive conversational structure, although sessions took an average of 25 minutes to converge. In the evaluation, summaries generated with the CPE ZS and FS prompts were ranked comparably, suggesting that user preferences were integrated effectively into the instruction itself and that FS examples may not be needed in such cases.

Figure 3: Evaluation results. The frequency of the three generated summaries across the three ranking categories: Best/Middle/Worst.

Implications and Future Directions

CPE offers enterprises a way to generate task-specific prompts with little effort, which can improve productivity. Open questions remain about how CPE-generated prompts might combine with existing automatic PE methods. Future research could extend CPE to agentic workflows and use LLM capabilities to craft complex, task-oriented prompts autonomously.

Conclusion

Conversational Prompt Engineering is a meaningful step forward in prompt design for LLMs. By structuring user interaction and feedback within a chat, CPE helps create prompts tuned to individual user needs. The paper supports CPE's effectiveness with a user study and presents it as a promising tool for enterprises that run the same task repeatedly over large volumes of data.