Introduction

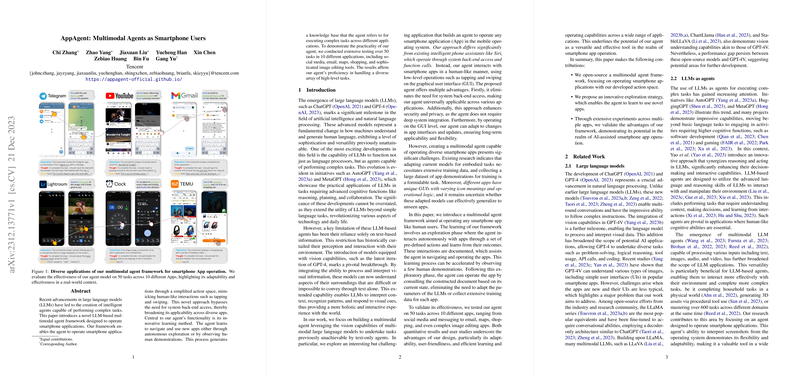

The integration of AI into everyday life has taken a new turn with intelligent agents that can operate smartphone applications the way humans do. Building on advances in large language models (LLMs), which have greatly expanded AI's ability to understand and generate human language, researchers have presented a framework for a multimodal agent. This agent operates a smartphone directly through its graphical user interface (GUI), performing typical user actions such as tapping and swiping.
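To make "operating through the GUI" concrete, the sketch below models a tiny action space of taps and swipes and translates each action into an Android `adb shell input` command. The article does not say how actions reach the device. Routing them through adb is an assumption made for illustration, and the names `Tap`, `Swipe`, and `to_adb_command` are hypothetical. The function only builds the argument list and does not run it.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class Tap:
    """Tap at screen coordinates (x, y), in pixels."""
    x: int
    y: int


@dataclass
class Swipe:
    """Swipe from (x1, y1) to (x2, y2) over duration_ms milliseconds."""
    x1: int
    y1: int
    x2: int
    y2: int
    duration_ms: int = 300


# Hypothetical action space: only the two gestures named in the text.
Action = Union[Tap, Swipe]


def to_adb_command(action: Action) -> List[str]:
    """Translate a GUI action into an adb argument list (built, not executed)."""
    if isinstance(action, Tap):
        return ["adb", "shell", "input", "tap", str(action.x), str(action.y)]
    if isinstance(action, Swipe):
        return [
            "adb", "shell", "input", "swipe",
            str(action.x1), str(action.y1), str(action.x2), str(action.y2),
            str(action.duration_ms),
        ]
    raise TypeError(f"unsupported action: {action!r}")
```

On a machine with adb installed and a device connected, `subprocess.run(to_adb_command(Tap(540, 1200)), check=True)` would send the tap. Keeping command construction separate from execution makes the mapping easy to test without a device.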

Methodological Insights

The framework has two phases: exploration and deployment. During the exploration phase, the agent learns what an app can do, either autonomously through trial and error or by observing human demonstrations. What it learns from these interactions is recorded in a document that serves as its knowledge base. During autonomous exploration, the agent focuses on the elements that matter for operating the app and ignores unrelated content such as advertisements.
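A minimal sketch of the exploration-phase knowledge base, assuming the document is a mapping from UI element identifiers to notes on what each element does. `AppDocument`, `explore`, and the `is_relevant` filter are hypothetical. Here the observed effects are supplied as plain strings, and how they are produced (for example, by comparing the screen before and after an action) is left outside the sketch.

```python
from typing import Callable, Dict, Iterable, List, Optional, Tuple


class AppDocument:
    """Hypothetical per-app knowledge base: element ID -> notes on its function."""

    def __init__(self, app_name: str) -> None:
        self.app_name = app_name
        self.entries: Dict[str, List[str]] = {}

    def record(self, element_id: str, observed_effect: str) -> None:
        # Append rather than overwrite so later exploration can refine notes;
        # skip exact duplicates to keep the document compact.
        notes = self.entries.setdefault(element_id, [])
        if observed_effect not in notes:
            notes.append(observed_effect)

    def lookup(self, element_id: str) -> Optional[str]:
        # Return all notes for an element joined into one string, or None.
        notes = self.entries.get(element_id)
        return " ".join(notes) if notes else None


def explore(
    doc: AppDocument,
    interactions: Iterable[Tuple[str, str]],
    is_relevant: Callable[[str], bool],
) -> None:
    """Record (element_id, effect) pairs, skipping elements deemed irrelevant."""
    for element_id, effect in interactions:
        if is_relevant(element_id):
            doc.record(element_id, effect)
```

The `is_relevant` predicate stands in for the agent's judgment about which elements are worth documenting. A toy version might simply reject IDs that start with `ad_`.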

In the deployment phase, the agent uses this knowledge to carry out complex tasks. It interprets a screenshot of the app's current state and consults its knowledge base to decide which action to take. The agent works through a task step by step: at each step it observes the screen, reasons about the next action, executes that action, and writes a short summary of what it has done so that this summary serves as memory for later steps.
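The observe, reason, act, summarize cycle above can be sketched as a loop. This is not the framework's actual implementation. The `decide` callback is a hypothetical stand-in for the multimodal model (which would also consult the app document), `get_screen` and `execute` stand in for screenshot capture and action execution, and the `"FINISH"` sentinel is an assumed convention for ending a task.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# decide(task, screen, memory) -> (observation, thought, action, summary)
Decision = Tuple[str, str, str, str]


@dataclass
class StepResult:
    observation: str
    thought: str
    action: str
    summary: str


def run_task(
    task: str,
    get_screen: Callable[[], str],
    decide: Callable[[str, str, List[str]], Decision],
    execute: Callable[[str], None],
    max_steps: int = 10,
) -> List[StepResult]:
    """Run the step-by-step loop until the model signals FINISH or steps run out."""
    memory: List[str] = []  # summaries of earlier steps, fed back to the model
    history: List[StepResult] = []
    for _ in range(max_steps):
        screen = get_screen()
        observation, thought, action, summary = decide(task, screen, memory)
        history.append(StepResult(observation, thought, action, summary))
        if action == "FINISH":
            break
        execute(action)
        memory.append(summary)
    return history
```

Passing the accumulated summaries back into `decide` is what gives the agent a memory across steps without replaying every earlier screenshot. The `max_steps` cap keeps a confused model from looping forever.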

Experimental Evaluation

The agent was evaluated on 50 tasks across 10 smartphone applications, demonstrating its proficiency in diverse categories such as social media, email, and image editing. Design choices within the framework were compared using three metrics: success rate, a reward score reflecting how close the agent came to completing a task, and the average number of steps needed to complete a task. The results showed that the custom-designed action space and the documents generated from observing human demonstrations substantially improved the agent's performance compared with using the raw action API.
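The three metrics can be aggregated from per-task records as sketched below. The record layout (`TaskRun`) and the run data in any example are hypothetical. Two choices are assumptions: the reward is treated as a single number per task where higher means closer to completion, and average steps are counted over successful runs only, since the article does not say how failed runs are handled.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskRun:
    succeeded: bool
    reward: float  # assumed: higher means closer to task completion
    steps: int


def summarize(runs: List[TaskRun]) -> Dict[str, float]:
    """Compute success rate, mean reward, and mean steps over a batch of runs."""
    if not runs:
        raise ValueError("no runs to summarize")
    n = len(runs)
    successes = [r for r in runs if r.succeeded]
    return {
        "success_rate": len(successes) / n,
        "avg_reward": sum(r.reward for r in runs) / n,
        # Assumption: steps averaged over successful runs; NaN if none succeeded.
        "avg_steps": (
            sum(r.steps for r in successes) / len(successes) if successes else math.nan
        ),
    }
```

Computing these per configuration (for example, raw action API versus custom action space) gives the side-by-side comparison the evaluation describes.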

Vision Capabilities and Case Study

The agent's ability to interpret and manipulate visual elements was examined in a case study involving Adobe Lightroom, an image-editing application. The tasks involved fixing images with visual problems such as low contrast or overexposure. Participants in a user study ranked the editing results, and methods that used documents, especially documents generated by observing human demonstrations, produced results comparable to those obtained with manually crafted documentation.

Conclusion and Future Directions

This multimodal agent framework represents a significant step toward AI that interacts with smartphone applications in a more human-like and accessible way, without requiring access to the system back end. Because the agent learns both through autonomous interaction and by observing human demonstrations, it can adapt quickly to new apps. Supporting advanced controls such as multi-touch is a promising direction for future research, one that would address current limitations and broaden the range of tasks the agent can handle.