AgentBench: Evaluating LLMs as Agents

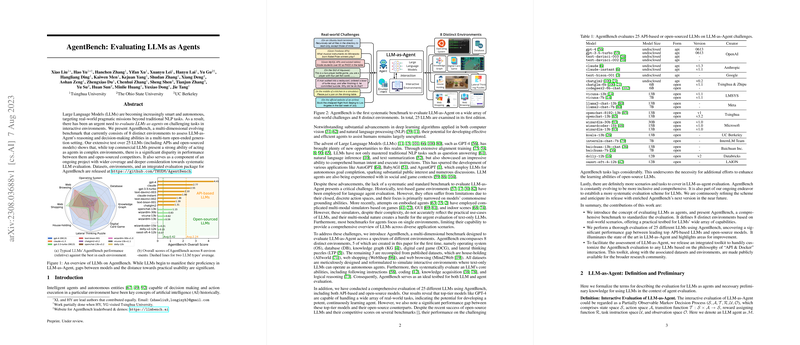

This paper introduces AgentBench, a systematic benchmark for evaluating large language models (LLMs) as agents across a diverse set of environments. As LLMs take on a growing role in real-world interactive tasks, assessing how well they act as autonomous agents has become crucial. AgentBench provides a framework for this assessment built around eight distinct environments.

Key Contributions

- Comprehensive Benchmark Design: AgentBench groups its eight environments into three categories: code-grounded (Operating System (OS), Database (DB), Knowledge Graph (KG)), game-grounded (Digital Card Game (DCG), Lateral Thinking Puzzles (LTP), House-Holding (HH)), and web-grounded (Web Shopping (WS), Web Browsing (WB)).

- Diverse Task Evaluation: The tasks require decision-making, instruction following, and multi-turn reasoning, so the benchmark probes coding proficiency, logical reasoning, and strategic planning rather than a single skill.

- Comparative Study of LLMs: The paper evaluates 27 different LLMs, both commercial API-based and open-source, and finds significant performance gaps. While models like GPT-4 show strong agent capabilities, many open-source models lag considerably behind.

- Insightful Error Analysis: The authors categorize the reasons tasks end without success, including Context Limit Exceeded (CLE), Invalid Format (IF), Invalid Action (IA), and Task Limit Exceeded (TLE), which points to concrete areas for improvement. The analysis highlights how current models struggle with long-term reasoning and decision-making.

- Framework and Toolkit: AgentBench provides a modular evaluation framework built on a server-client architecture, which makes evaluations easy to run. The design supports evaluating multiple models on multiple tasks concurrently, making the toolkit practical for future research.
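The multi-turn evaluation and the failure categories described above can be illustrated with a toy episode runner. This is a minimal sketch, not AgentBench's actual code: the `run_episode` and `make_counter_env` functions, the `Act: ` output prefix, the turn budget, and the use of character counts as a stand-in for tokens are all hypothetical. The finish labels follow our reading of the paper's error analysis (Completed, Context Limit Exceeded, Invalid Format, Invalid Action, Task Limit Exceeded); "Completed" only means the episode ended normally, not that the answer was correct.

```python
from enum import Enum
from typing import Callable, List, Set, Tuple


class Outcome(Enum):
    """Finish reasons modeled on the categories in the paper's error analysis."""
    COMPLETED = "Completed"
    CONTEXT_LIMIT_EXCEEDED = "CLE"
    INVALID_FORMAT = "IF"
    INVALID_ACTION = "IA"
    TASK_LIMIT_EXCEEDED = "TLE"


ACTION_PREFIX = "Act: "  # hypothetical output format the agent must follow


def run_episode(
    agent: Callable[[List[str]], str],
    env_step: Callable[[str], Tuple[str, bool]],
    valid_actions: Set[str],
    first_observation: str,
    max_turns: int = 5,
    max_context_chars: int = 2000,
) -> Tuple[Outcome, List[str]]:
    """Run one multi-turn episode (hypothetical helper) and label how it ended."""
    history = [first_observation]
    for _ in range(max_turns):
        # Characters stand in for tokens: a crude proxy for the context window.
        if sum(len(m) for m in history) > max_context_chars:
            return Outcome.CONTEXT_LIMIT_EXCEEDED, history
        reply = agent(history)
        history.append(reply)
        # Format check: the reply must follow the required output format.
        if not reply.startswith(ACTION_PREFIX):
            return Outcome.INVALID_FORMAT, history
        # Action check: well-formed, but the environment must accept the action.
        action = reply[len(ACTION_PREFIX):].strip()
        if action not in valid_actions:
            return Outcome.INVALID_ACTION, history
        observation, done = env_step(action)
        history.append(observation)
        if done:
            return Outcome.COMPLETED, history
    # Turn budget exhausted, e.g. the agent kept repeating an unproductive action.
    return Outcome.TASK_LIMIT_EXCEEDED, history


def make_counter_env(target: int = 2) -> Callable[[str], Tuple[str, bool]]:
    """Hypothetical toy environment: 'inc' bumps a counter; done at `target`."""
    count = 0

    def step(action: str) -> Tuple[str, bool]:
        nonlocal count
        if action == "inc":
            count += 1
        return f"count={count}", count >= target

    return step


acts = {"inc", "wait"}
print(run_episode(lambda h: "Act: inc", make_counter_env(), acts, "start")[0])   # Outcome.COMPLETED
print(run_episode(lambda h: "inc", make_counter_env(), acts, "start")[0])        # Outcome.INVALID_FORMAT
print(run_episode(lambda h: "Act: jump", make_counter_env(), acts, "start")[0])  # Outcome.INVALID_ACTION
print(run_episode(lambda h: "Act: wait", make_counter_env(), acts, "start")[0])  # Outcome.TASK_LIMIT_EXCEEDED
```

Checking the format before checking action validity mirrors the distinction between IF and IA: a reply can be well-formed yet name an action the environment does not accept.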

Numerical and Empirical Findings

The empirical findings reveal a stark gap between top-tier commercial models and open-source ones: GPT-4 achieves an overall score of 4.01, while many open-source models score below 1.00. The paper emphasizes the need for improvement, particularly in long-term reasoning and in adherence to required instruction formats.
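Overall scores near 1.00 are easier to interpret once one sees how per-task scores might be aggregated. As we understand the paper, each task is weighted by the reciprocal of the average score that all evaluated models obtain on it, so tasks where models generally score low are not drowned out by easier ones. The sketch below assumes that scheme; the function names `task_weights` and `overall_scores` and the three models' scores are hypothetical, not data from the paper. Under this assumption, the mean overall score across the evaluated pool is exactly 1, so 1.00 marks an average model within that pool.

```python
from statistics import mean
from typing import Dict

# Scores[model][task] -> raw task score (hypothetical layout).
Scores = Dict[str, Dict[str, float]]


def task_weights(scores: Scores) -> Dict[str, float]:
    """Weight each task by 1 / (average score of all models on that task)."""
    tasks = next(iter(scores.values())).keys()
    weights = {}
    for t in tasks:
        avg = mean(per_task[t] for per_task in scores.values())
        if avg == 0:
            raise ValueError(f"every model scored 0 on task {t!r}; weight undefined")
        weights[t] = 1.0 / avg
    return weights


def overall_scores(scores: Scores) -> Dict[str, float]:
    """Overall score = mean over tasks of (raw score * task weight)."""
    w = task_weights(scores)
    return {name: mean(per_task[t] * w[t] for t in w) for name, per_task in scores.items()}


# Hypothetical scores on two tasks, chosen so the arithmetic is exact.
example_scores: Scores = {
    "model_a": {"os": 32, "db": 64},
    "model_b": {"os": 16, "db": 32},
    "model_c": {"os": 0, "db": 0},
}
print(overall_scores(example_scores))  # {'model_a': 2.0, 'model_b': 1.0, 'model_c': 0.0}
```

The normalization makes the scale relative: a model scoring exactly the pool average on every task gets 1.0, regardless of how hard each task is in absolute terms.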

Implications and Future Directions

This work matters for both the theoretical understanding and the practical deployment of LLMs as agents. By exposing both the potential and the current limitations of LLMs, AgentBench sets the stage for research on model alignment, reasoning strategies, and autonomous agent capabilities.

The findings suggest directions for enhancing performance, such as integrating high-quality alignment data and improving code training strategies. Future advancements in LLMs will likely focus on bridging the gaps identified, aiming for models that not only excel in task-specific benchmarks but also demonstrate robust generalist capabilities in multi-modal, real-world scenarios.

AgentBench aims to serve as a standard platform for evaluating LLM-as-Agent, one that can evolve alongside developments in AI and keep pace with the growing capabilities of LLMs.