Eliciting Critical Reasoning in Retrieval-Augmented LLMs via Contrastive Explanations

The paper addresses a notable challenge in retrieval-augmented generation (RAG) for large language models (LLMs). Although RAG improves factuality and extends an LLM's knowledge by incorporating external information during generation, it is susceptible to errors introduced by noisy in-context passages, which can lead to biased outputs, misinterpretations, and hallucinations. To mitigate these shortcomings, the paper proposes Contrastive-RAG (C-RAG), a framework that uses contrastive explanations to elicit critical reasoning in retrieval-augmented tasks.

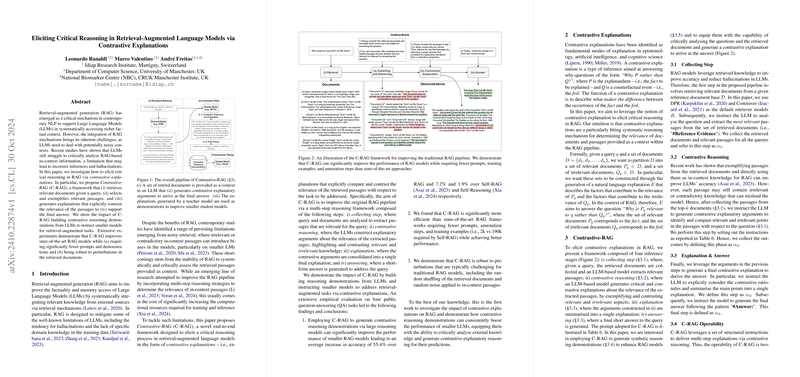

The C-RAG framework is built around four sequential phases: (i) retrieval of relevant documents, (ii) extraction of relevant passages together with reasoning about them, (iii) generation of contrastive explanations, and (iv) formulation of the final answer. Through these stages, C-RAG aims both to improve the assessment of in-context document relevance and to raise the overall performance of RAG systems. The framework also shows that reasoning demonstrations built from larger LLMs can be used to instruct smaller models effectively.
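The control flow of the four phases can be sketched as follows. This is a minimal illustration, not the paper's implementation: the word-overlap retriever, the prompt wording, the `Passage` type, and the `llm` callable (any function mapping a prompt string to a completion string) are all assumptions introduced here.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Passage:
    doc_id: str
    text: str


def _tokens(text: str) -> set:
    """Lowercased word tokens with punctuation stripped."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, corpus: List[Passage], k: int = 3) -> List[Passage]:
    """Phase 1 (toy stand-in): rank passages by word overlap with the question."""
    q = _tokens(question)
    ranked = sorted(corpus, key=lambda p: len(q & _tokens(p.text)), reverse=True)
    return ranked[:k]


def c_rag_answer(
    question: str,
    corpus: List[Passage],
    llm: Callable[[str], str],  # hypothetical: prompt in, completion out
    k: int = 3,
) -> Dict[str, object]:
    """Chain the four phases; every prompt below is illustrative wording only."""
    docs = retrieve(question, corpus, k)
    context = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(docs, start=1))
    header = f"Question: {question}\nDocuments:\n{context}\n"

    # Phase 2: extract relevant passages and reason about them.
    extraction = llm(
        header + "Extract the passages relevant to the question and explain why."
    )
    # Phase 3: contrast relevant passages with irrelevant ones.
    explanation = llm(
        header
        + f"Extracted passages:\n{extraction}\n"
        + "Explain why these passages, rather than the others, support an answer."
    )
    # Phase 4: produce the final answer from the contrastive explanation.
    answer = llm(
        header
        + f"Contrastive explanation:\n{explanation}\n"
        + "Give the final answer."
    )
    return {
        "documents": docs,
        "extraction": extraction,
        "explanation": explanation,
        "answer": answer,
    }
```

Passing the model as a plain callable keeps the sketch independent of any particular LLM client; a stub function is enough to exercise the pipeline.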

C-RAG also stands out for its efficiency and robustness. The paper reports substantial improvements on several public QA benchmarks, with model accuracy improving by 55.4% on average over standard RAG methods. C-RAG further remains robust to perturbations in the retrieved documents, a common failure point of traditional retrieval pipelines.
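A robustness check of this kind can be sketched as below. The summary does not say which perturbations the paper applies, so the two shown here (reordering passages and injecting distractor passages) are assumptions, as are the function names and the exact-match accuracy metric.

```python
import random
from typing import Callable, List, Sequence, Tuple


def shuffle_passages(passages: Sequence[str], seed: int = 0) -> List[str]:
    """Return a reordered copy; the input sequence is left untouched."""
    rng = random.Random(seed)
    out = list(passages)
    rng.shuffle(out)
    return out


def inject_noise(
    passages: Sequence[str],
    distractors: Sequence[str],
    n_noise: int,
    seed: int = 0,
) -> List[str]:
    """Insert n_noise distractor passages at random positions.

    Requires n_noise <= len(distractors).
    """
    rng = random.Random(seed)
    out = list(passages)
    for d in rng.sample(list(distractors), n_noise):
        out.insert(rng.randint(0, len(out)), d)
    return out


def accuracy(
    answer_fn: Callable[[str, Sequence[str]], str],  # hypothetical QA system
    examples: Sequence[Tuple[str, Sequence[str], str]],  # (question, passages, gold)
) -> float:
    """Case-insensitive exact-match accuracy over the examples."""
    correct = sum(
        answer_fn(q, ps).strip().lower() == gold.strip().lower()
        for q, ps, gold in examples
    )
    return correct / len(examples)
```

Comparing `accuracy` on the original examples with `accuracy` on the same examples after `shuffle_passages` or `inject_noise` gives a rough measure of how much a system degrades under noisy retrieval.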

A particularly notable finding is that C-RAG requires substantially fewer training prompts and annotation steps than contemporary methods such as Self-RAG or Self-Reasoning. C-RAG achieves its performance gains with approximately 2,000 training examples, compared to the 190,000 examples required by some techniques, which is roughly 95 times fewer. This points toward more efficient and scalable training protocols in NLP.

The paper also introduces contrastive explanations into the RAG pipeline. By systematically generating explanations of why some retrieved passages are relevant and others are not, C-RAG improves a model's ability to reason critically about external information sources. The paper presents this as the first exploration of contrastive reasoning in RAG and reports significant performance gains across multiple tasks.

From an epistemological and cognitive science perspective, the paper frames contrastive explanations as a fundamental mechanism for comprehension in AI systems. By posing questions of the form "Why P rather than Q?", C-RAG partitions retrieved documents into contrasting classes, which supports clear, evidence-based decision making.
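The "Why P rather than Q?" framing can be made concrete with a small sketch that splits passages into two contrasting classes and builds a prompt from them. The relevance labels are assumed to come from an earlier step, and the function names and prompt wording are hypothetical rather than taken from the paper.

```python
from typing import List, Sequence, Tuple


def partition_passages(
    passages: Sequence[str], is_relevant: Sequence[bool]
) -> Tuple[List[str], List[str]]:
    """Split passages into a relevant class (P) and an irrelevant class (Q)."""
    if len(passages) != len(is_relevant):
        raise ValueError("passages and is_relevant must have the same length")
    support = [p for p, r in zip(passages, is_relevant) if r]
    contrast = [p for p, r in zip(passages, is_relevant) if not r]
    return support, contrast


def contrastive_prompt(
    question: str, support: Sequence[str], contrast: Sequence[str]
) -> str:
    """Build an illustrative 'why P rather than Q' prompt."""

    def block(items: Sequence[str]) -> str:
        # Fall back to a placeholder when a class is empty.
        return "\n".join(f"- {p}" for p in items) or "- (none)"

    return (
        f"Question: {question}\n"
        f"Passages judged relevant (P):\n{block(support)}\n"
        f"Passages judged irrelevant (Q):\n{block(contrast)}\n"
        "Explain why P rather than Q supports an answer to the question."
    )
```

Keeping the two classes explicit in the prompt forces the explanation to address the rejected passages as well as the accepted ones, which is the point of the contrastive form.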

Despite its contributions, C-RAG relies predominantly on advanced LLMs such as GPT-4 to generate high-quality contrastive reasoning demonstrations. While this dependence is feasible in research settings, extending the approach to a broader range of smaller, more accessible models remains an open practical challenge.

Looking forward, the implications of this research are manifold. In practice, structured contrastive reasoning could improve model transparency, interpretability, and accuracy in real-world applications such as automated QA and fact verification. Theoretically, the work raises questions about the nature and limits of current NLP models' reasoning abilities and offers a path toward understanding and refining them. As AI technology advances, the line of work initiated by C-RAG could pave the way for more nuanced, human-like reasoning in machines.