- The paper introduces an LLM-driven analytics dashboard that empowers teachers with actionable insights into student-ChatGPT interactions and writing processes.

- The paper employs a human-centered co-design methodology and advanced NLP models to detect misuse patterns and align automated essay scoring with teacher criteria.

- The dashboard, refined through iterative prototyping with teachers, processes students' ChatGPT interaction logs, reducing manual log review while supporting instructional adaptation in EFL writing education.

LLM-Powered Learning Analytics Dashboard for Teachers: An Analysis

Introduction

The integration of large language models (LLMs) such as GPT-4 into English as a Foreign Language (EFL) writing education is transforming how students and instructors engage with the writing process. "LLM-Driven Learning Analytics Dashboard for Teachers in EFL Writing Education" (2410.15025) proposes and empirically investigates a teacher-centric dashboard that uses LLMs and data analytics to provide actionable insights into student-ChatGPT interaction logs, writing processes, and instructional efficacy. The work foregrounds a human-centered methodology, combining iterative co-design with educational practitioners and expertise from the NLP, HCI, and TESOL domains.

Human-Centered Methodology and Collaborative Design

The dashboard was developed through a multidisciplinary approach involving NLP and HCI experts collaborating with TESOL practitioners and EFL university faculty. The co-design process was bifurcated into two major stages: initial needs assessment via qualitative interviews, followed by iterative prototyping and participatory evaluation. Teachers articulated specific needs concerning ChatGPT integration, including rapid overviews of student-LLM interaction, detection of non-educational tool usage, in-depth analysis of writing and chat logs, and the means to personalize instructional prompts.

Figure 1: Relationships and progress between experts and potential users in education.

Figure 1 visualizes this collaborative, iterative process, showing the multidisciplinary links spanning technical, pedagogical, and user-centered design domains. The approach exemplifies human-centered learning analytics (HCLA), grounding the resulting dashboard in authentic classroom utility and pedagogical alignment.

Dashboard Architecture and System Overview

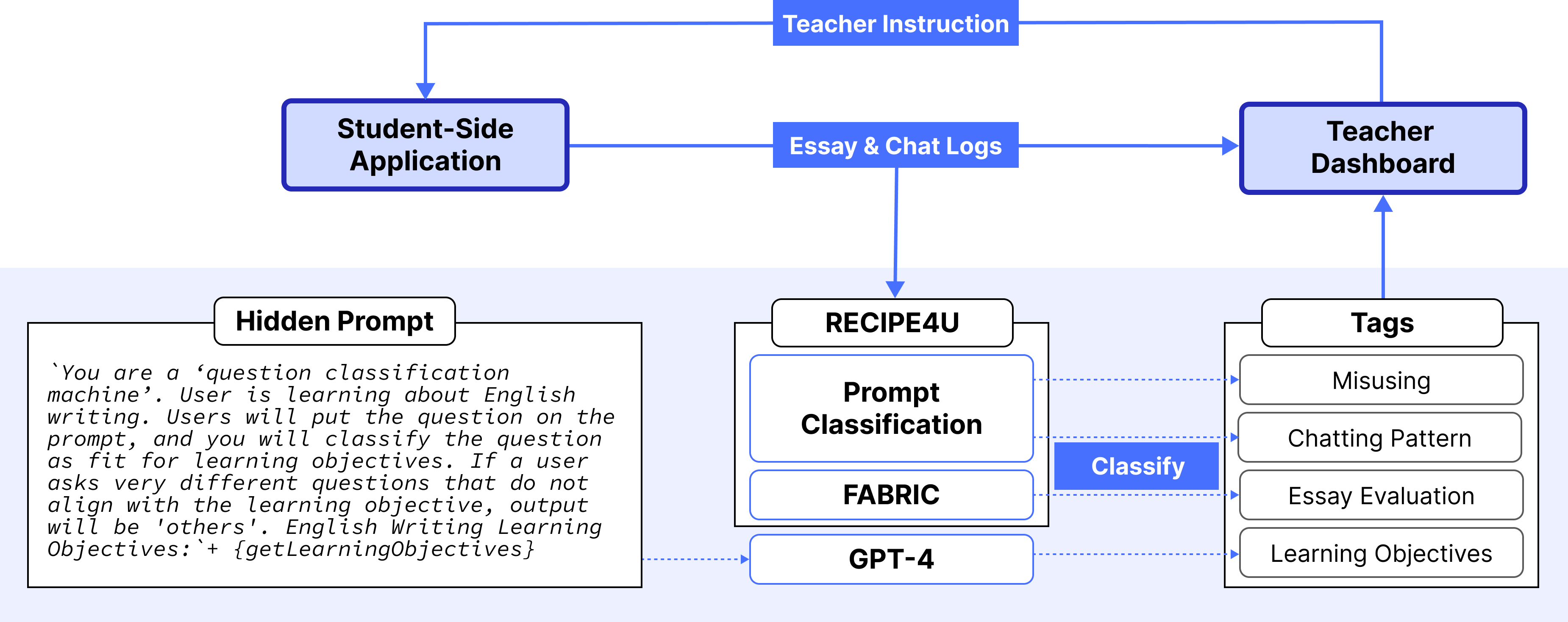

At the core of the dashboard system is the integration of student-side and teacher-side infrastructure. Students write on an essay platform ("RECIPE") that embeds ChatGPT for real-time feedback and logs detailed data on essay revisions, ChatGPT usage timestamps, chat content, and student satisfaction ratings. The teacher-facing dashboard processes these logs with a suite of LLM-powered and NLP models, including chat-pattern classification for misuse detection and automated essay scoring aligned with teacher criteria.

The architecture, depicted in Figure 2, forms an end-to-end analytics workflow connecting student inputs, automated model predictions, and multimodal dashboard visualizations.
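To make the logged data concrete, the sketch below models the two kinds of records described above (chat turns with satisfaction ratings, and essay revisions) and interleaves them into one per-student timeline, the kind of view that correlating writing and chat logs requires. It is a minimal sketch under stated assumptions: the class names, field names, and the 1-5 rating scale are hypothetical and are not RECIPE's actual log format, and word-count change is used only as a simple illustrative proxy for revision activity.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional, Tuple, Union


@dataclass
class ChatTurn:
    """One student prompt to ChatGPT and its reply (hypothetical schema)."""
    student_id: str
    timestamp: datetime
    prompt: str
    response: str
    satisfaction: int  # hypothetical 1-5 rating the student gives the reply


@dataclass
class EssaySnapshot:
    """One saved revision of a student's essay (hypothetical schema)."""
    student_id: str
    timestamp: datetime
    text: str


Event = Union[ChatTurn, EssaySnapshot]


def merged_timeline(chats: List[ChatTurn], essays: List[EssaySnapshot],
                    student_id: str) -> List[Event]:
    """Interleave one student's chat turns and essay revisions in time order."""
    events: List[Event] = [c for c in chats if c.student_id == student_id]
    events.extend(e for e in essays if e.student_id == student_id)
    return sorted(events, key=lambda ev: ev.timestamp)


def revision_after_chat(timeline: List[Event]) -> List[Tuple[ChatTurn, Optional[int]]]:
    """For each chat turn, return the essay word-count change between the last
    snapshot before the turn and the first snapshot after it (None if either
    snapshot is missing)."""
    results: List[Tuple[ChatTurn, Optional[int]]] = []
    for i, event in enumerate(timeline):
        if not isinstance(event, ChatTurn):
            continue
        before = next((e for e in reversed(timeline[:i])
                       if isinstance(e, EssaySnapshot)), None)
        after = next((e for e in timeline[i + 1:]
                      if isinstance(e, EssaySnapshot)), None)
        delta = None
        if before is not None and after is not None:
            delta = len(after.text.split()) - len(before.text.split())
        results.append((event, delta))
    return results


if __name__ == "__main__":
    chats = [ChatTurn("s1", datetime(2024, 3, 4, 10, 5),
                      "How can I make my thesis clearer?", "(reply)", 4)]
    essays = [
        EssaySnapshot("s1", datetime(2024, 3, 4, 10, 0), "My thesis is good."),
        EssaySnapshot("s1", datetime(2024, 3, 4, 10, 10),
                      "My thesis argues that cities need more parks."),
    ]
    for turn, delta in revision_after_chat(merged_timeline(chats, essays, "s1")):
        print(turn.prompt, delta)  # prints: How can I make my thesis clearer? 4
```

Sorting a single merged list keeps the logic simple for one student; a classroom-scale pipeline would more likely query a database ordered by timestamp.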

Dashboard Features and Technical Advances

The system incorporates features aimed at addressing core design goals (DGs) identified through teacher feedback:

- DG1: Summarizing Student-ChatGPT Interactions. Teachers access interactive charts presenting frequency and typology of student-LLM interactions on a weekly basis, supporting efficient oversight.

- DG2: Detecting Misuse and Non-educational Usage. The dashboard highlights twelve identified chat patterns, allowing automated surfacing of undesirable behaviors such as essay outsourcing, indiscriminate paraphrasing, or off-topic requests.

- DG3: Integrating Essay and Chat Revision Logs. Longitudinal visualizations let teachers correlate writing-process data with chat logs and relate both to curricular objectives, supporting finer-grained analysis of student engagement and learning trajectories.

- DG4: Prompt Customization by Teachers. Teachers can customize guidance disseminated via ChatGPT, ensuring alignment with pedagogical objectives and allowing dynamic instructional adaptation.
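The sketch below illustrates the aggregation side of three of these design goals: weekly counts of chat patterns (DG1), per-student counts of flagged patterns (DG2), and a teacher-customized system prompt (DG4). It assumes each chat has already been labeled by an upstream classifier. The label strings are hypothetical; only three of the twelve patterns are named in this summary, so `FLAGGED_PATTERNS` is illustrative rather than the paper's taxonomy, and `compose_system_prompt` is a hypothetical helper, not the dashboard's actual mechanism.

```python
from collections import Counter, defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Set, Tuple

# Hypothetical labels for the misuse patterns named in the text.
FLAGGED_PATTERNS: Set[str] = {"essay_outsourcing", "indiscriminate_paraphrasing", "off_topic"}

# (student_id, timestamp, pattern label), assumed to come from a classifier.
LabeledChat = Tuple[str, datetime, str]


def weekly_summary(chats: Iterable[LabeledChat]) -> Dict[Tuple[int, int], Counter]:
    """DG1: count chat patterns per ISO (year, week)."""
    summary: Dict[Tuple[int, int], Counter] = defaultdict(Counter)
    for _student, ts, label in chats:
        year, week, _weekday = ts.isocalendar()
        summary[(year, week)][label] += 1
    return dict(summary)


def flag_misuse(chats: Iterable[LabeledChat]) -> Dict[str, Counter]:
    """DG2: per student, count chats whose label is a flagged pattern."""
    flags: Dict[str, Counter] = defaultdict(Counter)
    for student, _ts, label in chats:
        if label in FLAGGED_PATTERNS:
            flags[student][label] += 1
    return dict(flags)


def compose_system_prompt(base: str, teacher_guidance: List[str]) -> str:
    """DG4: append non-empty teacher-written instructions to a base prompt."""
    lines = [base.strip()] + [f"- {g.strip()}" for g in teacher_guidance if g.strip()]
    return "\n".join(lines)


if __name__ == "__main__":
    chats = [
        ("s1", datetime(2024, 3, 4, 9), "grammar_question"),
        ("s1", datetime(2024, 3, 5, 9), "essay_outsourcing"),
        ("s2", datetime(2024, 3, 12, 9), "off_topic"),
    ]
    print(weekly_summary(chats))
    print(flag_misuse(chats))
    print(compose_system_prompt("You are a writing tutor.",
                                ["Do not write the essay for the student."]))
```

Grouping by ISO week keeps weekly buckets stable across month boundaries, which suits a semester-long weekly overview.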

The distinguishing technical contribution is the deployment of LLM-based semantic detection and prompt classification on real-world, classroom-scale data, combined with a dashboard designed explicitly for expert human consumption rather than student-facing automation. The authors report that the system detects non-educational interactions and provides rubric-aligned essay evaluation that instructors can interpret directly.
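LLM-based prompt classification can be sketched as building a prompt that lists the allowed categories, sending it to a model, and mapping the reply back onto the taxonomy. The sketch below is a minimal illustration, not the paper's pipeline: the prompt wording is hypothetical, and `complete` is an assumed stand-in for any LLM client call (no real API is invoked). Replies outside the taxonomy fall back to `"unclassified"`, which mirrors the limitation that detection covers only pre-specified patterns.

```python
from typing import Callable, Sequence

UNCLASSIFIED = "unclassified"


def build_classifier_prompt(message: str, labels: Sequence[str]) -> str:
    """Ask an LLM to choose exactly one label for a student message."""
    options = "\n".join(f"- {label}" for label in labels)
    return (
        "Classify the student's message to a writing assistant into exactly one "
        "of the following categories. Answer with the category name only.\n"
        f"{options}\n\nMessage:\n{message}"
    )


def classify_message(message: str, labels: Sequence[str],
                     complete: Callable[[str], str]) -> str:
    """Return the predicted label, or UNCLASSIFIED if the reply is off-taxonomy.

    `complete` maps a prompt string to the model's reply; it is a hypothetical
    stand-in for a real LLM client call.
    """
    reply = complete(build_classifier_prompt(message, labels))
    normalized = reply.strip().strip(".").lower()
    allowed = {label.lower(): label for label in labels}
    return allowed.get(normalized, UNCLASSIFIED)


if __name__ == "__main__":
    labels = ["essay_outsourcing", "off_topic", "grammar_question"]

    def fake_llm(prompt: str) -> str:
        return "Off_Topic."  # canned reply standing in for a model call

    print(classify_message("What's the weather in Seoul?", labels, fake_llm))
    # prints: off_topic
```

Injecting `complete` as a plain function keeps the classification logic testable without network access and independent of any particular model provider.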

Implications and Prospects for Future AI-Driven Education

Theoretical Implications: This study demonstrates that LLMs, combined with human-centered design and calibration on authentic datasets, can produce analytics and feedback interventions that retain pedagogical relevance. Its emphasis on teacher agency, through custom prompt support and interpretable analytic outputs, contrasts sharply with prior approaches that risk displacing human instructional judgment with opaque automated assessments.

Practical Implications: Teachers benefit from a substantial reduction in manual log review, targeted identification of problematic behaviors, and greater capacity for reflective practice and instructional adaptation. Teacher feedback shows that the semantic and contextual analysis features are highly valued, reflecting a general preference for tools that augment classroom expertise rather than automate it away.

Limitations: The dashboard's automated misuse detection relies on a pre-specified taxonomy of chat patterns and does not yet generalize to novel misuse behaviors outside that taxonomy. How well LLM-derived learning objectives align with actual pedagogical needs is also limited by the granularity of the prompt engineering implemented.

Future Directions: The authors argue for further development into more dynamic, context-aware LLM pipelines capable of summarizing behavioral trends, predicting emerging patterns of misuse, and integrating multi-modal student data for richer formative assessment. They also emphasize the necessity of ongoing practitioner involvement and system adaptability in deploying next-generation AI-based educational support tools.

Conclusion

This paper delineates the design, technical implementation, and qualitative evaluation of an LLM-driven teacher dashboard addressing key analytic needs in EFL writing instruction. By integrating fine-tuned LLM analytics with robust HCLA methodologies, it sets a precedent for cross-disciplinary, practitioner-engaged AI deployments in education. Future development should target enhanced LLM explainability, broader pattern generalization, and continued participatory refinement to maximize instructional impact.