Evaluating Visual Understanding in Multimodal Models: The HumanEval-V Benchmark

Integrating visual perception with language processing in Large Multimodal Models (LMMs) is a promising direction for advancing AI capabilities. The paper "HumanEval-V: Evaluating Visual Understanding and Reasoning Abilities of Large Multimodal Models Through Coding Tasks" introduces a benchmark that targets this integration directly by measuring visual understanding and reasoning through code generation. Because its tasks are visually grounded, the benchmark exposes both the gaps and the potential of current LMMs.

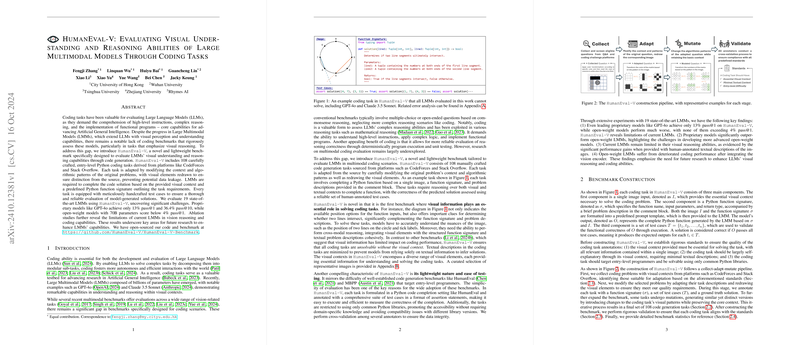

Benchmark Design

HumanEval-V is a lightweight benchmark of 108 carefully curated Python coding tasks adapted from platforms such as CodeForces and Stack Overflow. The tasks were modified so that visual elements are essential to solving them, which distinguishes the benchmark from conventional text-only evaluations. Each task pairs an image with a predefined Python function signature, and the model must complete the function using the information the visual context provides. Both the visual context and the problem descriptions were altered from their original versions to prevent data leakage, so that a model cannot succeed simply by recalling a solution it saw during training.
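To make the task format concrete, here is a minimal Python sketch of how a visually grounded coding task might be represented and checked by running tests against a model's completion. The `VisualCodingTask` record, its field names, the `passes_tests` helper, and the toy "count shaded cells" task are all hypothetical illustrations, not the official HumanEval-V schema or evaluation harness.

```python
from dataclasses import dataclass, field


@dataclass
class VisualCodingTask:
    """Hypothetical task record; field names are illustrative, not the official schema."""
    task_id: str
    image_path: str    # the visual context the model must interpret
    signature: str     # predefined function signature given to the model
    entry_point: str   # name of the function the tests call
    # Each test case is (positional args, expected return value).
    tests: list[tuple[tuple, object]] = field(default_factory=list)


def passes_tests(task: VisualCodingTask, completion: str) -> bool:
    """Return True only if the completed function passes every test case.

    WARNING: exec() runs untrusted model output in-process. A real harness
    would isolate it in a sandboxed subprocess with a timeout.
    """
    namespace: dict = {}
    try:
        exec(completion, namespace)
        func = namespace[task.entry_point]
        return all(func(*args) == expected for args, expected in task.tests)
    except Exception:
        # Syntax errors, a missing function, or runtime errors all count as failures.
        return False


# Toy example: the (hypothetical) image shows a grid whose shaded cells are 1s.
task = VisualCodingTask(
    task_id="toy/0",
    image_path="grid.png",
    signature="def count_shaded(grid: list[list[int]]) -> int:",
    entry_point="count_shaded",
    tests=[(([[1, 0], [0, 1]],), 2), (([[0, 0]],), 0)],
)
completion = "def count_shaded(grid):\n    return sum(map(sum, grid))\n"
print(passes_tests(task, completion))  # True
```

A task counts as solved only when every test passes; partial credit is not awarded in this sketch, which matches the all-or-nothing style of execution-based code benchmarks.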

Evaluation and Findings

The authors evaluated 19 state-of-the-art LMMs, including proprietary models such as GPT-4o and Claude 3.5 Sonnet as well as a range of open-weight models. The evaluation revealed that all of them struggle with the combination of visual reasoning and coding:

- Performance Disparity: Proprietary models performed comparatively better, reaching pass@1 scores of up to 18.5%, whereas no open-weight model exceeded 4% pass@1. Even the best proprietary model therefore solves fewer than one in five tasks on its first attempt.

- Hallucination Errors: Models frequently hallucinated solutions by relying on patterns memorized from pre-existing data instead of reasoning about the novel visual context that HumanEval-V presents.

- Visual Understanding: Supplying textual descriptions of the images markedly improved task success, which shows that the models' limitations lie largely in reasoning from the images alone. This points to the need for training techniques that integrate visual and textual data more effectively.
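The pass@1 figures above follow the pass@k convention introduced with the original HumanEval benchmark (Chen et al., 2021). Assuming HumanEval-V uses the same unbiased estimator, it can be sketched as follows: for each task, draw n samples, count the c samples that pass all tests, and compute 1 - C(n-c, k)/C(n, k); the benchmark score is the mean over tasks. For k = 1 this reduces to c/n. The per-task counts below are made up for illustration and are not results from the paper.

```python
import math
from statistics import mean


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one task: n samples drawn, c of them correct."""
    if n - c < k:
        # Every size-k subset must contain at least one correct sample.
        return 1.0
    # 1 minus the probability that a random size-k subset has no correct sample.
    return 1.0 - math.comb(n - c, k) / math.comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average per-task pass@k over a list of (n, c) pairs."""
    return mean(pass_at_k(n, c, k) for n, c in results)


# Hypothetical (n, c) counts for four tasks, not real HumanEval-V data.
results = [(20, 0), (20, 5), (20, 20), (20, 1)]
print(round(benchmark_pass_at_k(results, k=1), 4))  # 0.325
print(round(benchmark_pass_at_k(results, k=10), 4))
```

Computing the estimator from n > k samples, rather than literally drawing k samples once, reduces variance; this is why pass@10 can be reported from the same pool of samples used for pass@1.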

Implications for Future Research

The insights from HumanEval-V point to several areas for future investigation. Visual reasoning in LMMs needs to improve, potentially through more robust training paradigms that integrate image and text processing more effectively. Moreover, the degradation in coding ability observed when vision capabilities are added to a model suggests that existing architectures and training methodologies need refinement to avoid such performance drops.

Conclusion

HumanEval-V serves not only as a tool for exposing the shortcomings of current LMMs but also as a guidepost for future work on visual reasoning. The benchmark deepens our understanding of how models process combined visual and textual information and how well they apply it to coding tasks. The findings point to a pressing need for LMM architectures that can seamlessly combine visual and language inputs, moving AI closer to robust, human-like reasoning and problem solving.

Because HumanEval-V is open-source, the community can keep building on it, which should help it remain a relevant standard for evaluating future LMMs. The central open challenge is to improve model accuracy on visual contexts while maintaining, and ideally improving, the models' underlying language processing capabilities.