Evaluating the Complexity and Robustness of Video Large Multi-modal Models

Introduction to Video-LMMs and Their Challenges

Video Large Multi-modal Models (Video-LMMs) combine vision and language capabilities to perform tasks that require understanding both video content and text. They are increasingly influential in fields such as robotics, surveillance, and autonomous vehicles. It is essential, however, to challenge these models with tasks that demand a deep understanding of complex video content and contextual nuances, along with robust responses to text queries. Existing benchmarks often stop at basic video comprehension and rarely demand deeper reasoning.

The CVRR-ES Benchmark: A Solution to Old Problems

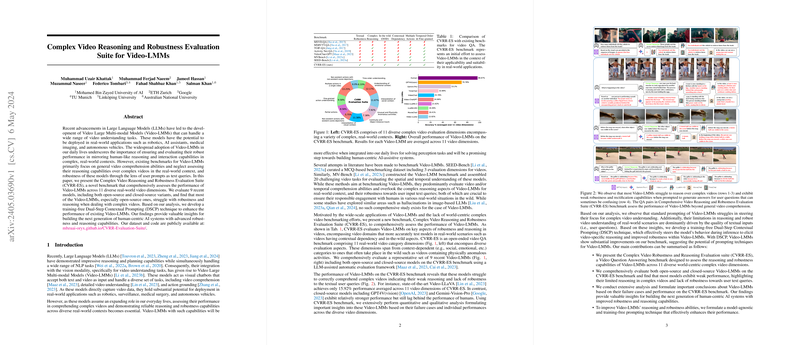

The Complex Video Reasoning and Robustness Evaluation Suite (CVRR-ES) emerges as a novel benchmark designed to thoroughly evaluate Video-LMMs across diverse and complex real-world video dimensions. It aims to address the gaps left by prior benchmarks which focused on basic comprehension rather than reasoning abilities or real-world applicability. The CVRR-ES consists of 11 video evaluation dimensions addressing different aspects of video understanding and interaction. These dimensions span scenarios from complex multi-action videos to understanding emotionally and socially rich contexts.

Evaluation Dimensions Overview:

- Multiple Actions in a Single Video: Tests if the model can identify and reason about various actions taken within the same snippet.

- Fine-Grained Action Understanding: Challenges the model's ability to discern subtly different actions.

- Partial Actions and Time Order Understanding: Examines if models can understand actions that are partially shown or need temporal context.

- Robustness to Confusing Queries: Evaluates responses to questions about nonexistent actions or scenes, which requires the model to recognize and reject a false premise rather than play along with it.

- Context Sensitivity: Uses unusual activities and emotionally or socially rich contexts, where models must infer the right information from complex, sometimes deceptive scenarios.

Key Findings from Testing Video-LMMs with CVRR-ES

Evaluating contemporary Video-LMMs on CVRR-ES brought several crucial insights to light. Most notably, even advanced models struggled significantly on the more challenging dimensions, particularly partial actions and confusing queries. For instance, the state-of-the-art Video-LLaVA model scored an average of only 15.92% across the tasks, far below human performance of over 95%.
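To make the idea of an "average across tasks" concrete, here is a minimal sketch of per-dimension scoring. It assumes each answer has already been judged correct or incorrect, and that the average is an unweighted mean over dimensions. Both are assumptions for illustration; the function names and the sample data are hypothetical and are not taken from the benchmark.

```python
from collections import defaultdict


def per_dimension_accuracy(results):
    """Return accuracy in percent for each evaluation dimension.

    results: iterable of (dimension_name, is_correct) pairs.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for dimension, is_correct in results:
        totals[dimension] += 1
        correct[dimension] += int(is_correct)
    return {d: 100.0 * correct[d] / totals[d] for d in totals}


def mean_accuracy(results):
    """Unweighted mean of the per-dimension accuracies (an assumed convention)."""
    scores = per_dimension_accuracy(results)
    if not scores:
        raise ValueError("no results to average")
    return sum(scores.values()) / len(scores)


# Made-up judgments, only to show the shape of the input.
sample = [
    ("partial_actions", True),
    ("partial_actions", False),
    ("fine_grained_actions", True),
    ("fine_grained_actions", True),
]
scores = per_dimension_accuracy(sample)
average = mean_accuracy(sample)
```

Averaging per dimension first keeps a dimension with many questions from dominating the headline number, which matters when the interest is in where a model fails.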

Chain-of-thought prompting techniques, when repurposed from pure LLMs to Video-LMMs, showed potential but did not fully close the performance gap. The CVRR-ES benchmark also exposed a general over-affirmation bias in several models: they tend to confirm the presence of actions or details that are absent from, or contradicted by, the video content.
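One rough way to quantify over-affirmation is to ask questions whose premise is known to be false and count how often the model agrees anyway. The sketch below is a simplification for illustration: the function name is hypothetical, and treating any reply that begins with "yes" as an affirmation is a crude keyword heuristic assumed here, not the evaluation procedure used by the benchmark.

```python
def over_affirmation_rate(answers):
    """Fraction of replies that affirm a premise known to be false.

    answers: model replies to questions about actions or scenes that do
    not occur in the video, so a correct reply should reject the premise.
    """
    if not answers:
        raise ValueError("need at least one answer")
    # Crude heuristic (assumption): a reply starting with "yes" is an affirmation.
    affirmed = sum(1 for a in answers if a.strip().lower().startswith("yes"))
    return affirmed / len(answers)


# Made-up replies, only to show usage.
replies = [
    "Yes, the man jumps over the fence.",
    "No, there is no dog in the video.",
    " yes he does",
    "The video does not show that.",
]
rate = over_affirmation_rate(replies)
```

A keyword check like this will miss affirmations phrased without "yes", so in practice a stricter judge, human or model-based, would be needed.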

Towards Future Improvements: Introducing Dual-Step Contextual Prompting (DSCP)

In response to these challenges, the research introduces a Dual-Step Contextual Prompting (DSCP) technique designed to enhance Video-LMMs' reasoning ability and robustness. This training-free approach steers the model's attention during inference, promoting more detailed video-specific reasoning and better adaptability to complex queries.

The Two Steps of DSCP:

- Contextual Reasoning: Guides the model to deeply analyze the video content beyond surface-level details, prepping it to manage higher complexity in user queries.

- Robust Response Generation: Couples the detailed context with the user’s actual query to ensure responses are both accurate to the video content and robust to query variations.
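The two steps above can be sketched as a pair of chained model calls. This is a minimal illustration under stated assumptions: `VideoLMM` is a hypothetical interface (any callable that takes a video and a prompt and returns text), the prompt wording is invented here and is not the wording used in the research, and the first step is assumed not to see the user's question.

```python
from typing import Any, Callable

# Hypothetical interface, not a real library API: a callable that takes a
# video handle and a text prompt and returns the model's text reply.
VideoLMM = Callable[[Any, str], str]

# Illustrative wording only; these are not the prompts used in the research.
REASONING_PROMPT = (
    "Describe this video in detail. List the actions in the order they occur, "
    "note any action that is only partially shown, and mention anything unusual."
)

RESPONSE_TEMPLATE = (
    "Context derived from the video:\n{context}\n\n"
    "Question: {question}\n"
    "Answer using only what the video and the context support. "
    "If the question assumes something that does not happen in the video, say so."
)


def dscp_answer(model: VideoLMM, video: Any, question: str) -> str:
    """Answer a question about a video with two chained prompts."""
    # Step 1 (contextual reasoning): analyze the video without the question.
    context = model(video, REASONING_PROMPT)
    # Step 2 (robust response generation): answer the question given that context.
    prompt = RESPONSE_TEMPLATE.format(context=context, question=question)
    return model(video, prompt)


# Usage with a stand-in model, so the control flow can be checked without a real Video-LMM.
calls = []


def stub_model(video, prompt):
    calls.append(prompt)
    return "A person picks up a cup." if len(calls) == 1 else "No, nobody drinks."


answer = dscp_answer(stub_model, "clip.mp4", "Does the person drink from the cup?")
```

Because both steps are plain inference calls, the approach needs no fine-tuning, at the cost of a second forward pass per question.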

What the Future Holds

The implications of the CVRR-ES and innovations like DSCP are vast for the deployment of AI in sensitive and everyday environments. By demanding and fostering advanced reasoning, context understanding, and robust interaction capabilities, we edge closer to deploying AI systems that truly understand and interact with the complexity of real-world environments. Future developments might see these benchmarks and techniques becoming standard tools in refining and evaluating AI systems across industries.