Video Instruction Tuning with Synthetic Data: A Comprehensive Overview

The paper "Video Instruction Tuning with Synthetic Data" addresses a significant challenge for video large multimodal models (LMMs): the scarcity of high-quality, web-curated video data. To mitigate this, the authors build a synthetic dataset, LLaVA-Video-178K, tailored to video instruction-following tasks such as detailed captioning and question answering.

Key Contributions

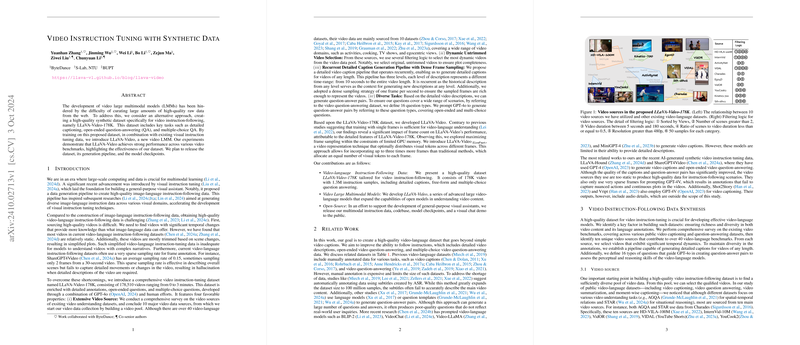

- Synthetic Dataset Creation: LLaVA-Video-178K comprises 178,510 videos, each paired with detailed annotations and question-answer pairs. It is designed to overcome a limitation of existing collections, whose videos are often largely static and show little variation in scene content.

- Advanced Video Representation: The paper introduces LLaVA-Video, a model trained using the proposed dataset. Rather than relying on a small number of sparsely sampled frames, LLaVA-Video processes many frames while using GPU memory efficiently, which helps it learn from dynamic, complex video content.

- Open Source Contributions: The authors release the dataset, the model checkpoints, and the codebase to encourage further research and development toward general-purpose visual assistants.
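To make the frames-versus-memory trade-off in the Advanced Video Representation item concrete, here is a back-of-the-envelope token count. It is an illustrative sketch, not the paper's configuration: it assumes each frame is encoded into a 27×27 grid of patch tokens, that spatial pooling with a given stride shrinks that grid, and that a "slow/fast" split keeps every fourth frame at a finer resolution while pooling the rest more aggressively. All function names and parameter values are hypothetical.

```python
import math


def pooled_tokens(grid: int, stride: int) -> int:
    """Tokens left after stride x stride pooling of a grid x grid patch map.

    Partial windows at the border are kept (ceil), as with ceil-mode pooling.
    """
    side = math.ceil(grid / stride)
    return side * side


def uniform_budget(num_frames: int, grid: int, stride: int) -> int:
    """Total visual tokens when every frame is pooled with the same stride."""
    return num_frames * pooled_tokens(grid, stride)


def slow_fast_budget(num_frames: int, grid: int, slow_every: int,
                     slow_stride: int, fast_stride: int) -> int:
    """Every `slow_every`-th frame is a 'slow' frame pooled lightly;
    all other frames are 'fast' frames pooled more aggressively."""
    total = 0
    for i in range(num_frames):
        stride = slow_stride if i % slow_every == 0 else fast_stride
        total += pooled_tokens(grid, stride)
    return total


if __name__ == "__main__":
    GRID = 27  # assumed patch grid per frame (27 * 27 = 729 tokens before pooling)
    print(uniform_budget(32, GRID, stride=2))  # 6272
    print(slow_fast_budget(64, GRID, slow_every=4, slow_stride=2, fast_stride=4))  # 5488
```

Under these assumptions, 64 frames cost 5,488 visual tokens versus 6,272 for 32 uniformly pooled frames, so doubling the number of frames need not lengthen the visual token sequence.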

Detailed Methodology

The authors present a detailed video-description pipeline that splits annotation across three temporal levels, from short clips up to the whole video. The process is recurrent: descriptions are generated segment by segment, with earlier descriptions carried forward as context, so that every part of the video receives a detailed narrative. Frames are sampled densely to enrich the annotations. For question answering, the dataset defines 16 question types intended to strengthen models' perception and reasoning skills.
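The recurrent, multi-level structure can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' pipeline: the 10-second and 30-second windows for the first two levels, the whole-video third level, and the exact context passed to each call are assumptions for illustration, and `describe` is a hypothetical stand-in for whatever captioning model is queried.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical captioner signature: (level, start_s, end_s, context captions) -> caption
Describe = Callable[[int, float, float, List[str]], str]


@dataclass
class Segment:
    level: int
    start: float  # seconds
    end: float    # seconds
    caption: str = ""


def make_segments(duration: float, window: float, level: int) -> List[Segment]:
    """Split [0, duration) into consecutive windows; the last one may be shorter."""
    segs: List[Segment] = []
    start = 0.0
    while start < duration:
        end = min(start + window, duration)
        segs.append(Segment(level, start, end))
        start = end
    return segs


def recurrent_captions(duration: float, describe: Describe,
                       windows=(10.0, 30.0)) -> List[List[Segment]]:
    """Caption a video level by level; a final level covers the whole video."""
    levels: List[List[Segment]] = []
    for level, window in enumerate(windows, start=1):
        finer = levels[-1] if levels else []
        segs = make_segments(duration, window, level)
        prev = ""  # caption of the preceding segment at this level
        for seg in segs:
            # Context: the previous caption at this level (the recurrent part)
            # plus any finer-level captions that fall inside this segment.
            context = [prev] if prev else []
            context += [s.caption for s in finer
                        if s.start >= seg.start and s.end <= seg.end]
            seg.caption = describe(level, seg.start, seg.end, context)
            prev = seg.caption
        levels.append(segs)
    # Top level: one description of the whole video from the coarsest captions.
    top = Segment(len(windows) + 1, 0.0, duration)
    coarsest = levels[-1] if levels else []
    top.caption = describe(top.level, 0.0, duration, [s.caption for s in coarsest])
    levels.append([top])
    return levels


if __name__ == "__main__":
    def stub(level: int, start: float, end: float, context: List[str]) -> str:
        return f"L{level}[{start:g}-{end:g}s] given {len(context)} prior captions"

    for lvl in recurrent_captions(45.0, stub):
        print([s.caption for s in lvl])
```

With the stub `describe`, a 45-second video yields five level-1 clips, two level-2 spans (0-30 s and 30-45 s), and one level-3 summary; in a real pipeline `describe` would query a captioning model on the frames in each span.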

Experimental Validation

Empirical results show that LLaVA-Video outperforms competing models across diverse video benchmarks. Notably, the model excels in tasks that demand a thorough understanding of video content, such as complex narrative comprehension and detailed action recognition. Ablation studies further indicate that high-quality synthetic data is a key contributor to the model's performance.

Implications and Future Prospects

The research has both practical and theoretical implications. Practically, LLaVA-Video-178K could strengthen AI systems that require robust video understanding, in applications such as autonomous systems, video analytics, and human-computer interaction. Theoretically, the findings offer fresh insight into how synthetic data can circumvent the limitations of real-world data.

Future work could combine this synthetic framework with real data to further improve model robustness. Increasing the diversity and complexity of synthetic datasets will also be important for pushing the boundaries of video LMMs.

In conclusion, the research provides compelling evidence supporting the potential of synthetic data in refining video LMMs, laying a robust foundation for future explorations in AI-driven video analysis.