Introduction to Long-Range Video Question-Answering

AI systems have made considerable progress in understanding short video clips, typically a few seconds to a minute long. Comprehending longer videos, which can span several minutes or even hours, is considerably harder. For long-range video understanding, and question-answering in particular, researchers have generally relied on complex models with dedicated temporal reasoning capabilities. Traditional methods invest heavily in specialized video modeling designs, such as long-range feature banks and space-time graphs, which are costly and complicated.

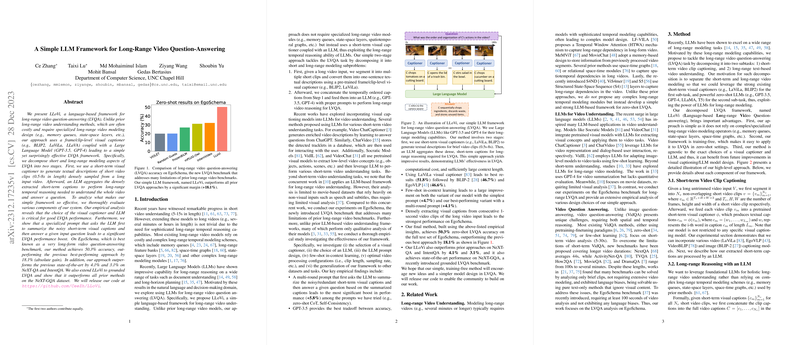

A Simplified Framework Using LLMs

A paper introduces a language-based framework that simplifies long-range video question-answering (LVQA). Known as LLoVi, the framework combines a short-term visual captioner with an LLM such as GPT-3.5 or GPT-4, relying on the LLM's ability to reason over long contexts. Instead of incorporating complex video-specific techniques, LLoVi works in two stages. First, it segments a long video into short clips, and a visual captioner describes each clip in text. Second, an LLM aggregates these descriptions to reason over the whole video and answer questions about its content.
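The two stages described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: `split_into_clips`, `answer_question`, the `captioner` and `llm` callables, the one-second default clip length, and the prompt wording are all hypothetical placeholders chosen for this example.

```python
from typing import Callable, List, Tuple

Clip = Tuple[float, float]  # (start_sec, end_sec)


def split_into_clips(duration_sec: float, clip_len_sec: float) -> List[Clip]:
    """Cut [0, duration_sec) into consecutive clips of at most clip_len_sec."""
    if duration_sec <= 0 or clip_len_sec <= 0:
        raise ValueError("duration_sec and clip_len_sec must be positive")
    clips: List[Clip] = []
    start = 0.0
    while start < duration_sec:
        end = min(start + clip_len_sec, duration_sec)
        clips.append((start, end))
        start = end
    return clips


def answer_question(
    duration_sec: float,
    question: str,
    captioner: Callable[[float, float], str],  # hypothetical: captions one clip
    llm: Callable[[str], str],                 # hypothetical: prompt in, text out
    clip_len_sec: float = 1.0,                 # assumed value, not from the paper
) -> str:
    # Stage 1: describe every short clip in text.
    clips = split_into_clips(duration_sec, clip_len_sec)
    captions = [f"[{s:.0f}s-{e:.0f}s] {captioner(s, e)}" for s, e in clips]

    # Stage 2: let the LLM reason over all captions at once.
    prompt = (
        "Captions of consecutive clips from one video:\n"
        + "\n".join(captions)
        + f"\n\nQuestion: {question}\nAnswer:"
    )
    return llm(prompt)
```

In practice `captioner` would wrap a visual captioning model and `llm` would wrap a call to a model such as GPT-3.5 or GPT-4. Passing both in as plain callables keeps the sketch independent of any particular library.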

Crucial Factors and Methodology Insights

An extensive empirical study within the paper highlights several components that are critical for LVQA performance. The choice of both the visual captioner and the LLM proved to be significant. The study also found that a specialized prompt structure substantially improves performance. This prompt instructs the LLM to first produce a consolidated summary of the video captions and then answer the question based on that summary, which makes it easier to respond accurately. With this setup, the framework achieved higher accuracy on the EgoSchema benchmark than the previous leading methods, by considerable margins.
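The summarize-then-answer idea can be illustrated as follows. This is a sketch under stated assumptions rather than the paper's actual prompt: the function name `summarize_then_answer`, the `llm` callable, the use of two separate calls, and the prompt wording are hypothetical.

```python
from typing import Callable, List


def summarize_then_answer(
    captions: List[str],
    question: str,
    llm: Callable[[str], str],  # hypothetical: prompt in, text out
) -> str:
    # Step 1: ask for a consolidated summary of the clip captions.
    summary_prompt = (
        "Below are captions of consecutive short clips from one long video.\n"
        + "\n".join(captions)
        + "\n\nWrite a concise summary of the whole video."
    )
    summary = llm(summary_prompt)

    # Step 2: answer the question from the summary instead of the raw captions.
    answer_prompt = f"Video summary:\n{summary}\n\nQuestion: {question}\nAnswer:"
    return llm(answer_prompt)
```

The intent is that the summary filters out noisy or redundant captions, so the final answer is conditioned on a shorter and more coherent narrative.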

Generalization and Grounded Question-Answering

This streamlined framework proved its robustness across a variety of datasets, indicating its applicability to diverse LVQA scenarios. Moreover, the researchers extended the framework to 'grounded LVQA', where the model identifies and grounds the specific video segment relevant to a question. This extension led to the framework outperforming existing methods on a benchmark designed for this purpose.

Conclusion

The simplicity and zero-shot capability of LLoVi make it a promising direction for future work in video understanding. The paper covers the method and experiments in more detail, and the code for the framework is openly available to the research community. By avoiding complicated video-specific mechanisms, LLoVi lets LLMs apply their long-range reasoning ability to video, and does so efficiently and effectively.