- The paper presents a machine learning approach using the Big Five traits to predict user preferences for various XAI methods.

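- In the experiment, participants followed the XAI agent's suggestions in 77.78% of decisions when given their preferred explanation method, versus 44.44% with less-preferred methods, suggesting that personalized explanations influence decision-making.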

- The results suggest that customized XAI strategies improve usability and trust in gameplay, pointing toward adaptive, user-specific AI systems.

Exploring Personality-Driven Personalization in XAI: Enhancing User Trust in Gameplay

Introduction

The paper "Exploring Personality-Driven Personalization in XAI: Enhancing User Trust in Gameplay" investigates whether tailoring Explainable AI (XAI) methods to users' personality traits can improve trust in AI systems. Working at the intersection of personality-driven customization and human-computer interaction, it examines how personal characteristics shape preferences for different XAI methods and, in turn, affect user engagement and trust.

Experimental Design

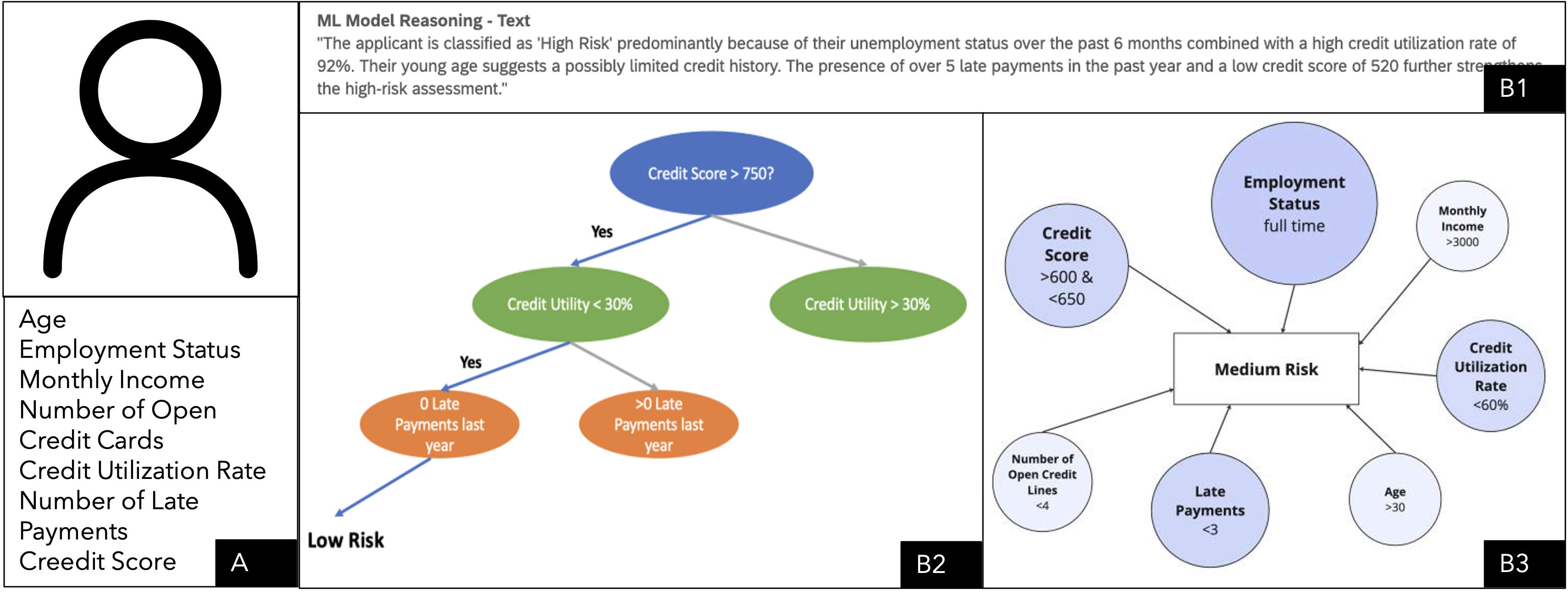

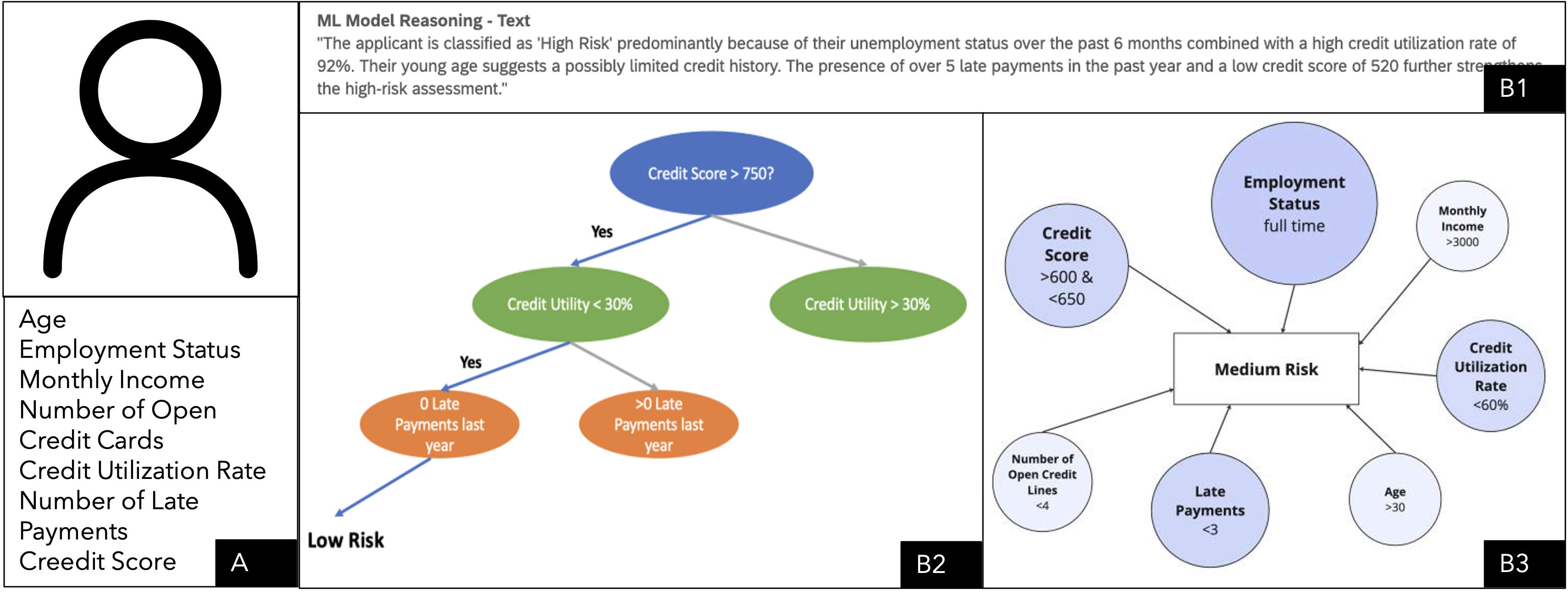

The study combines a survey with a gameplay experiment to relate personality traits to preferences for XAI methods. The survey collected each participant's Big Five personality traits along with their preferences among three XAI explanation methods: decision trees, textual explanations, and factor graphs.

In the experiment, six participants played a navigation game in which the system used XAI methods to explain its suggested navigation choices. By linking perceived risks with the different XAI methods, the study sought to capture how users' preferences play out during actual decisions.

Figure 1: Capturing XAI preferences in the survey.

Results

Willingness to Follow XAI's Suggestion

Participants were more likely to follow the XAI agent's suggestions when the explanation used their preferred method. Specifically, 77.78% of decisions aligned with the agent's suggestion when it was explained with the participant's preferred XAI method, compared with 44.44% when it was explained with a less-preferred method. This highlights the importance of matching XAI methods to user preferences to increase trust in, and reliance on, the agent's suggestions.
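The alignment figures are proportions: the number of decisions in which the participant followed the agent's suggestion, divided by all decisions in that condition. A minimal sketch of that calculation follows, assuming a per-decision log. The `Decision` record, the `alignment_rate` helper, and the toy data are hypothetical and deliberately do not reproduce the study's counts, which are not given here.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    participant: str
    method: str       # XAI method shown for this decision
    preferred: bool   # was this the participant's preferred method?
    followed: bool    # did the participant follow the agent's suggestion?


def alignment_rate(decisions, preferred):
    """Fraction of decisions in one condition where the participant followed the agent."""
    subset = [d for d in decisions if d.preferred == preferred]
    if not subset:
        raise ValueError("no decisions recorded for this condition")
    return sum(d.followed for d in subset) / len(subset)


# Hypothetical toy log (not the study's data): two participants, four decisions each.
decisions = [
    Decision("p1", "textual explanation", True, True),
    Decision("p1", "textual explanation", True, True),
    Decision("p1", "factor graph", False, False),
    Decision("p1", "decision tree", False, True),
    Decision("p2", "decision tree", True, True),
    Decision("p2", "decision tree", True, False),
    Decision("p2", "factor graph", False, False),
    Decision("p2", "textual explanation", False, False),
]

for condition in (True, False):
    label = "preferred" if condition else "less-preferred"
    print(f"{label}: {alignment_rate(decisions, condition):.2%}")
```

With this toy log the loop prints `preferred: 75.00%` and `less-preferred: 25.00%`. The sketch pools all decisions in a condition; averaging per participant first is an alternative, and the summary does not say which aggregation the paper used.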

Trust and Perceived Usability

Post-experiment surveys showed that participants reported higher trust when given their preferred XAI methods, with trust levels significantly higher than for less-preferred explanations. Participants also rated the game and the XAI methods as intuitive and easy to use, giving consistently positive usability feedback. These results underscore the need for XAI strategies that adapt to individual user characteristics and preferences.

Machine Learning Approach

An ML model was trained on the personality data collected in the survey to predict XAI preferences. Using a multi-layer perceptron, the system mapped 20 personality-trait input dimensions to preference predictions for the XAI methods. This model lays the groundwork for dynamically matching XAI explanations to user profiles, a critical component of personalized AI systems.
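The paper's exact architecture and hyperparameters are not described here, so the following is only a sketch of the idea under stated assumptions: a one-hidden-layer perceptron in plain Python that maps 20 numeric personality features to probabilities over the three explanation methods, trained with stochastic gradient descent on softmax cross-entropy. The hidden-layer size, learning rate, ReLU activation, 1-5 Likert input format, and the names `PreferenceMLP`, `normalize`, and `train_step` are all assumptions for illustration, not the authors' implementation.

```python
import math
import random

# Assumed label set: the three explanation styles compared in the study.
METHODS = ["decision tree", "textual explanation", "factor graph"]


def normalize(likert_scores):
    """Map assumed 1-5 Likert answers to [0, 1] to keep input scale small."""
    return [(s - 1) / 4 for s in likert_scores]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class PreferenceMLP:
    """Hypothetical one-hidden-layer perceptron: 20 features -> 3 method probabilities."""

    def __init__(self, n_features=20, n_hidden=16, seed=0):
        rng = random.Random(seed)
        self.n_features = n_features
        self.w1 = [[rng.uniform(-0.1, 0.1) for _ in range(n_features)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.1, 0.1) for _ in range(n_hidden)] for _ in METHODS]
        self.b2 = [0.0] * len(METHODS)

    def _forward(self, x):
        if len(x) != self.n_features:
            raise ValueError(f"expected {self.n_features} features, got {len(x)}")
        z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(self.w1, self.b1)]
        h = [max(0.0, z) for z in z1]  # ReLU activation
        z2 = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return z1, h, softmax(z2)

    def predict_proba(self, x):
        return self._forward(x)[2]

    def predict(self, x):
        probs = self.predict_proba(x)
        return METHODS[max(range(len(METHODS)), key=probs.__getitem__)]

    def train_step(self, x, label, lr=0.05):
        """One SGD step on softmax cross-entropy; returns the loss before the update."""
        target = METHODS.index(label)
        z1, h, probs = self._forward(x)
        loss = -math.log(probs[target])
        # Gradient w.r.t. output logits: probs - one_hot(target)
        d2 = [p - (1.0 if k == target else 0.0) for k, p in enumerate(probs)]
        # Backpropagate to the hidden layer using w2 before it is updated
        d1 = [
            sum(self.w2[k][j] * d2[k] for k in range(len(d2))) * (1.0 if z1[j] > 0 else 0.0)
            for j in range(len(h))
        ]
        for k, g in enumerate(d2):
            self.b2[k] -= lr * g
            for j, hj in enumerate(h):
                self.w2[k][j] -= lr * g * hj
        for j, g in enumerate(d1):
            self.b1[j] -= lr * g
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * g * xi
        return loss


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical data: 20 Likert answers per person plus a randomly chosen preference.
    data = [
        (normalize([rng.randint(1, 5) for _ in range(20)]), rng.choice(METHODS))
        for _ in range(6)
    ]
    model = PreferenceMLP()
    for _ in range(20):  # epochs
        for x, y in data:
            model.train_step(x, y)
    print(model.predict(data[0][0]))
```

Rescaling the Likert answers to [0, 1] keeps input magnitudes small, which makes plain SGD less likely to diverge. The predicted method could then select which explanation style the game shows a given player; a real system would more likely use an established ML library and tune these choices on held-out data.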

Conclusion

The research makes a case for personalizing XAI methods based on individual personality traits, reporting improved trust and engagement with AI systems. By predicting user preferences, developers can choose XAI methods suited to each user's personality and foster a more favorable perception of AI systems. The findings point toward intelligent systems that adapt to user-specific needs, building trust and improving usability in AI interfaces. Future work should broaden the scope to include diverse demographic variables and larger participant samples to test whether these findings hold in broader contexts.