Better Sequence Knowledge Distillation Using Minimum Bayes Risk Decoding

Large language models (LLMs) have driven impressive recent advances in machine translation. However, their computational cost calls for efficient methods to distill their capabilities into smaller, more deployable models. The paper "Don't Throw Away Data: Better Sequence Knowledge Distillation" by Wang et al. proposes a new approach to sequence-level knowledge distillation (SeqKD) that leverages Minimum Bayes Risk (MBR) decoding. This essay gives an overview of their method, their results, and the wider implications of their findings.

Introduction

SeqKD, introduced by Kim and Rush (2016), remains one of the fundamental techniques for reducing model size in tasks such as machine translation. By training a smaller student model to reproduce the outputs of a larger teacher model, SeqKD lowers both the computational and the environmental cost of deploying LLMs. Traditional SeqKD trains on a single teacher output per source sentence, typically obtained with greedy or beam search decoding. Wang et al. instead ask whether the richer and more diverse outputs available through MBR decoding can make distillation more effective.
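For reference, the basic SeqKD objective can be written as below. The notation is ours, not the paper's: x is a source sentence from the distillation set D, ŷ(x) is the single output decoded from the teacher (for example with beam search), and θ are the student's parameters. The student is simply trained with cross-entropy on the teacher's decoded output instead of on human references.

$$
\hat{y}(x) \approx \arg\max_{y} \; p_{\text{teacher}}(y \mid x),
\qquad
\mathcal{L}_{\text{SeqKD}}(\theta) = -\sum_{x \in \mathcal{D}} \log p_{\theta}\big(\hat{y}(x) \mid x\big)
$$

MBR-based variants keep this loss but change how the target ŷ(x) is chosen, and how many targets are kept for each source sentence.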

Methodological Innovations

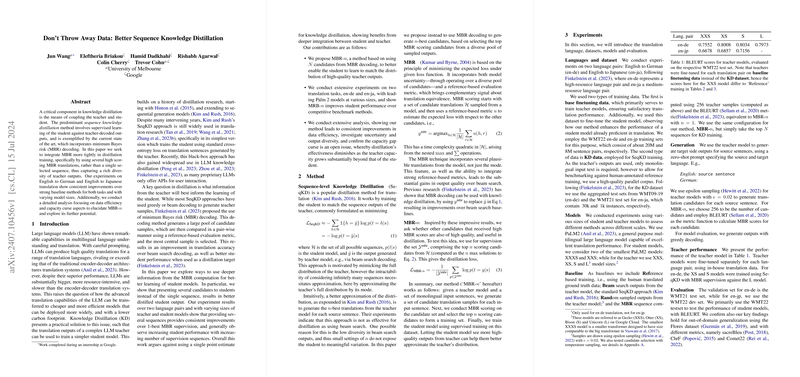

The core innovation of the paper is MBR-n, an extension of standard MBR decoding, which selects only a single output. MBR decoding scores each candidate translation by its expected utility against other outputs sampled from the teacher; instead of keeping only the highest-scoring candidate, MBR-n keeps the n best MBR candidates and uses all of them to train the student model. This approach aims to capture more of the teacher's output distribution, so that the student learns from a broader set of high-quality predictions.
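The selection step behind MBR-n can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the candidate strings stand in for samples drawn from the teacher, each candidate is scored against the other candidates as pseudo-references, and `unigram_f1` is a hypothetical toy utility used only so the example runs without external dependencies (a real setup would plug in a much stronger translation metric). All function names here are illustrative.

```python
from collections import Counter
from typing import Callable, List, Tuple

# A utility function u(hypothesis, reference) -> score; higher is better.
Utility = Callable[[str, str], float]


def unigram_f1(hyp: str, ref: str) -> float:
    """Hypothetical toy utility: unigram F1 over whitespace tokens."""
    h, r = Counter(hyp.split()), Counter(ref.split())
    overlap = sum((h & r).values())  # clipped token matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(h.values())
    recall = overlap / sum(r.values())
    return 2 * precision * recall / (precision + recall)


def mbr_scores(candidates: List[str], utility: Utility) -> List[float]:
    """Expected utility of each candidate, using the other candidates as pseudo-references."""
    scores = []
    for i, hyp in enumerate(candidates):
        refs = [ref for j, ref in enumerate(candidates) if j != i]
        scores.append(sum(utility(hyp, ref) for ref in refs) / max(len(refs), 1))
    return scores


def mbr_n(candidates: List[str], n: int, utility: Utility = unigram_f1) -> List[str]:
    """Return the n candidates with the highest MBR score (n=1 is standard MBR decoding)."""
    scores = mbr_scores(candidates, utility)
    # sorted() is stable, so ties keep their original sampling order.
    ranked = sorted(range(len(candidates)), key=lambda i: scores[i], reverse=True)
    return [candidates[i] for i in ranked[:n]]


def build_distillation_pairs(source: str, candidates: List[str], n: int) -> List[Tuple[str, str]]:
    """Pair the source with each of its n best MBR outputs as student training targets."""
    return [(source, target) for target in mbr_n(candidates, n)]


if __name__ == "__main__":
    samples = [
        "the cat sat on the mat",
        "the cat sat on a mat",
        "a cat is sitting on the mat",
        "dogs run fast",
    ]
    print(mbr_n(samples, 2))
    # → ['the cat sat on a mat', 'the cat sat on the mat']
```

With n = 1 the sketch reduces to ordinary MBR decoding. With n > 1, each source sentence contributes n (source, target) pairs, so in this sketch the distillation set grows n-fold without any additional source data; the outlier "dogs run fast" shares no tokens with the other samples and is ranked last.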

Experimentation and Results

Wang et al. conducted extensive experiments on two language pairs, English to German (en-de) and English to Japanese (en-ja), varying both model sizes and training datasets to test the robustness of their approach. Key findings include:

- Performance Improvement: MBR-n consistently outperformed traditional SeqKD baselines such as beam search across different student and teacher configurations. In the en-de task, for instance, increasing the number of sequences (n) led to significant gains, peaking at MBR-40.

- Data Efficiency: MBR-n proved markedly more data-efficient. With limited distillation data, it was roughly three times as data-efficient as single-sequence MBR. This suggests that MBR-n makes better use of each training instance, which is critical when working with high-quality but scarce data.

- Capacity Gap Curse: Echoing prior observations in the knowledge distillation literature, the paper confirms the "capacity gap curse": distillation becomes less effective as the size gap between teacher and student grows. Interestingly, smaller teacher models (e.g., PaLM2-XXS) often produced better distillation results, suggesting an upper limit on how much a student can benefit from ever-larger teachers.

Theoretical and Practical Implications

This research has significant implications for both the theoretical understanding and the practical application of knowledge distillation in NLP:

- Enhanced Representational Learning: Training on multiple high-quality sequences may let the student learn from a broader and richer set of translations. This diversity could lead to more robust and generalizable models, especially for challenging language pairs or under-researched languages and dialects.

- Application in Resource-Constrained Settings: The demonstrated data efficiency of MBR-n is particularly promising for resource-constrained environments where both data and compute are limited. Deployable models that retain high performance with fewer resources will be crucial for expanding machine translation capabilities globally.

- Curriculum Learning: The paper's exploration of staged training, moving from a weaker to a stronger teacher, offers an intriguing way to mitigate the capacity gap curse. Such sequential, curriculum-style distillation could be key to scaling the benefits of MBR-n across model architectures and sizes.

Conclusion

The work by Wang et al. advances our understanding of knowledge distillation in machine translation by presenting a compelling case for MBR-n. Their empirical evidence suggests that distilling from multiple high-scoring sequences can improve both performance and data efficiency. These insights are valuable because they address practical deployment challenges and push the boundaries of how sequence-level knowledge distillation can be optimized. Future research could explore further optimizations, new language pairs, and domain-specific applications, potentially extending MBR-n to a wider range of neural generation tasks.