Sequence-Level Knowledge Distillation in Neural Machine Translation

The paper “Sequence-Level Knowledge Distillation” by Yoon Kim and Alexander M. Rush addresses a significant challenge in Neural Machine Translation (NMT): the need for extremely large models to achieve state-of-the-art performance. The work applies knowledge distillation, a model-compression technique previously successful in other domains but underused in NMT, to shrink NMT models.

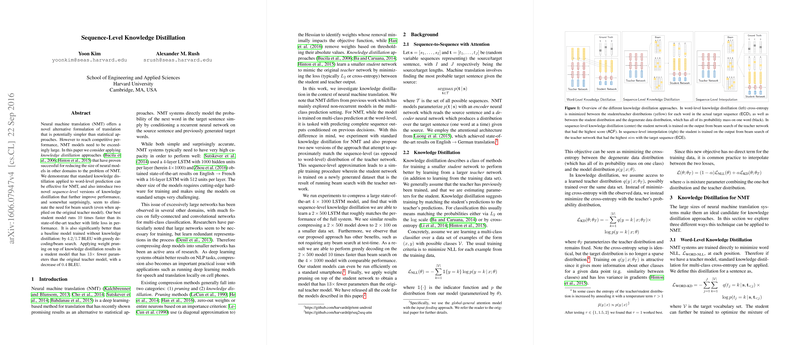

The authors' primary focus is on reducing the size of NMT models while maintaining competitive performance. They build upon standard knowledge distillation, wherein a smaller student model is trained to mimic a larger teacher model by minimizing the discrepancy between their outputs. Specifically, they propose novel sequence-level knowledge distillation approaches that aim to match the distributions of entire sequences generated by teacher models, rather than focusing solely on word-level predictions.

Background and Motivation

NMT has established itself as a powerful alternative to traditional statistical machine translation. Although NMT is accurate and conceptually simple, top-performing models need high capacity to capture complex language patterns, which means many layers and parameters. The computational demands of such models make real-world deployment challenging, especially on limited hardware such as mobile devices.

To address this issue, the authors investigate knowledge distillation, a process in which a smaller "student" model learns to approximate the behavior of a larger "teacher" model. The method has been successful in compressing non-recurrent models in domains such as image classification. Because NMT produces its output as a sequence, however, distilling knowledge at the sequence level is a novel extension of the approach.

Methodology

The paper presents three primary approaches to knowledge distillation in NMT:

1. Word-Level Knowledge Distillation (Word-KD)

This technique applies standard knowledge distillation at the word level: at each target position, the student is trained to minimize the cross-entropy between the teacher's predicted distribution over the next word and its own. This narrows the gap between student and teacher models, though only moderately.
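
A minimal sketch of the word-level objective follows, assuming the teacher's and student's per-position distributions (each conditioned on the same reference prefix) are already available as plain Python lists of probabilities. The function names are hypothetical, and so is the weight `alpha` that mixes the distillation term with the usual negative log-likelihood on the reference; this mixing is shown as an assumption, not as the paper's exact recipe.

```python
import math
from typing import List, Sequence

Dist = Sequence[float]  # one probability per vocabulary entry


def word_kd_loss(teacher: List[Dist], student: List[Dist]) -> float:
    """Sum over target positions of -sum_k q(k) * log p(k),
    where q is the teacher's distribution and p the student's."""
    loss = 0.0
    for q, p in zip(teacher, student):
        # Zero teacher probabilities contribute nothing, so skip them.
        loss -= sum(qk * math.log(pk) for qk, pk in zip(q, p) if qk > 0)
    return loss


def nll_loss(student: List[Dist], gold: List[int]) -> float:
    """Ordinary negative log-likelihood of the reference word ids."""
    return -sum(math.log(p[y]) for p, y in zip(student, gold))


def mixed_loss(teacher: List[Dist], student: List[Dist],
               gold: List[int], alpha: float = 0.5) -> float:
    """Hypothetical interpolation: (1 - alpha) * NLL + alpha * Word-KD."""
    return (1 - alpha) * nll_loss(student, gold) + alpha * word_kd_loss(teacher, student)


teacher = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
student = [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1]]
print(word_kd_loss(teacher, student), mixed_loss(teacher, student, gold=[0, 1]))
```

If the teacher's distribution is one-hot on the reference word, `word_kd_loss` reduces to `nll_loss`; the soft teacher distribution is what carries the extra information about which incorrect words are nearly right.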

2. Sequence-Level Knowledge Distillation (Seq-KD)

This approach extends knowledge distillation to the sequence level. The teacher model is run with beam search over the source sentences of the training set, and the resulting translations form a new training dataset on which the student is trained with the usual likelihood objective. Empirical results indicate that Seq-KD outperforms Word-KD, with notable improvements in BLEU scores.
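
The data-generation step can be sketched in Python as below. This is a minimal illustration under stated assumptions: a model is any function mapping a target prefix to log-probabilities over a small vocabulary, `toy_teacher` and its `LEXICON` are hypothetical stand-ins for a trained NMT teacher, and the beam search is a simplified textbook version without length normalization.

```python
import math
from typing import Callable, Dict, List, Tuple

EOS = "</s>"
Prefix = Tuple[str, ...]
Model = Callable[[Prefix], Dict[str, float]]  # prefix -> log-probs of next token


def beam_search(model: Model, beam_size: int, max_len: int) -> List[Tuple[Prefix, float]]:
    """Simplified beam search; returns (tokens, log-prob) pairs, best first."""
    beam: List[Tuple[Prefix, float]] = [((), 0.0)]
    finished: List[Tuple[Prefix, float]] = []
    for _ in range(max_len):
        candidates = [(prefix + (tok,), score + lp)
                      for prefix, score in beam
                      for tok, lp in model(prefix).items()]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beam = []
        for seq, score in candidates[:beam_size]:
            if seq[-1] == EOS:
                finished.append((seq, score))
            else:
                beam.append((seq, score))
        if not beam:
            break
    finished.extend(beam)  # keep unfinished hypotheses if max_len is hit
    return sorted(finished, key=lambda c: c[1], reverse=True)


# Hypothetical stand-in for a trained teacher: a tiny word-for-word lexicon.
LEXICON = {"guten": "good", "morgen": "morning", "tag": "day"}


def toy_teacher(src: str) -> Model:
    words = [LEXICON[w] for w in src.split()]
    vocab = sorted(set(LEXICON.values()) | {EOS})

    def model(prefix: Prefix) -> Dict[str, float]:
        i = len(prefix)
        target = words[i] if i < len(words) else EOS
        # 0.7 on the "right" next word, the remaining 0.3 spread uniformly.
        return {w: math.log(0.7 if w == target else 0.3 / (len(vocab) - 1)) for w in vocab}

    return model


def build_seq_kd_data(sources: List[str], beam_size: int = 5, max_len: int = 20):
    """Pair each source with the teacher's top beam output (EOS stripped)."""
    data = []
    for src in sources:
        best, _ = beam_search(toy_teacher(src), beam_size, max_len)[0]
        data.append((src, [t for t in best if t != EOS]))
    return data


print(build_seq_kd_data(["guten morgen", "guten tag"]))
```

The student is then trained on these (source, teacher output) pairs exactly as it would be on the original parallel data.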

3. Sequence-Level Interpolation (Seq-Inter)

The authors also explore integrating the original training data into the distillation process. Rather than training the student on both the original targets and the teacher's outputs, Seq-Inter trains it on a single sequence per source sentence that balances high probability under the teacher against similarity to the original target sequence. In practice, they run beam search with the teacher and, from the resulting candidates, select the sequence with the highest sentence-level BLEU score against the reference.
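
The selection step can be sketched as follows, assuming the teacher's K-best list from beam search is given as lists of tokens. The similarity function is a simple sentence-level BLEU with add-one smoothing on every n-gram order; this smoothing choice is an assumption and may differ from the paper's. Because every candidate already comes from the teacher's beam, choosing the highest-BLEU candidate trades off teacher probability against closeness to the reference. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hyp: Sequence[str], ref: Sequence[str], max_n: int = 4) -> float:
    """Sentence-level BLEU with add-one smoothing on every n-gram precision."""
    if not hyp:
        return 0.0
    log_prec = 0.0
    for n in range(1, max_n + 1):
        h, r = ngram_counts(hyp, n), ngram_counts(ref, n)
        matches = sum(min(c, r[g]) for g, c in h.items())  # clipped counts
        total = sum(h.values())
        log_prec += math.log((matches + 1) / (total + 1))
    log_bp = min(0.0, 1 - len(ref) / len(hyp))  # brevity penalty, in log space
    return math.exp(log_bp + log_prec / max_n)


def seq_inter_target(k_best: List[List[str]], ref: List[str]) -> List[str]:
    """Pick the teacher beam hypothesis most similar to the reference."""
    return max(k_best, key=lambda hyp: sentence_bleu(hyp, ref))


ref = "the cat sat on the mat".split()
k_best = [
    "a cat sat".split(),
    "the cat sat on a mat".split(),
    "the cat sat on the mat today".split(),
]
print(seq_inter_target(k_best, ref))
```

In Seq-Inter, each candidate list would be the teacher's beam for one source sentence, and the selected hypothesis becomes that sentence's training target.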

Experimental Setup

Experiments are conducted on two NMT tasks: high-resource English-to-German translation and low-resource Thai-to-English translation. The results demonstrate that sequence-level knowledge distillation yields significant efficiency gains:

- Models were reduced from a large LSTM teacher to a considerably smaller LSTM student, with a BLEU score drop of only $0.2$.

- Applying weight pruning on top of the student models gave up to a $13\times$ reduction in parameters relative to the teacher, at a cost of only $0.4$ BLEU.
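
The summary does not spell out the pruning scheme. As an assumption, the sketch below uses simple magnitude pruning, which zeroes the weights with the smallest absolute values, and shows how the overall reduction relative to the teacher composes the size reduction from distillation with the fraction of student weights that survive pruning. All function names and sizes are hypothetical.

```python
from typing import List


def magnitude_prune(weights: List[float], keep_fraction: float) -> List[float]:
    """Keep the `keep_fraction` largest-magnitude weights; zero out the rest."""
    k = max(1, round(len(weights) * keep_fraction))
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    keep = set(ranked[:k])
    return [w if i in keep else 0.0 for i, w in enumerate(weights)]


def overall_reduction(teacher_params: int, student_params: int, keep_fraction: float) -> float:
    """Teacher size divided by the number of nonzero student weights after pruning."""
    return teacher_params / (student_params * keep_fraction)


w = [0.5, -0.1, 0.05, -0.9, 0.2, 0.0, 0.3, -0.02]
print(magnitude_prune(w, 0.25))  # keeps only 0.5 and -0.9
print(overall_reduction(1000, 500, 0.1))  # hypothetical sizes
```

With these hypothetical sizes, a student half the teacher's size that keeps 10% of its weights has 20 times fewer nonzero parameters than the teacher; the two sources of compression multiply.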

Results and Implications

The authors report that students trained with Seq-KD and decoded greedily come close to the performance of the teacher decoded with beam search, while the best student runs about ten times faster than its teacher. This has practical implications for deploying NMT systems on GPUs, CPUs, and even smartphones, where computational resources are limited.
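
Greedy decoding, on which the speedup relies, simply takes the most probable token at each step; it is beam search with a beam size of one. The sketch below assumes a model is a function from a target prefix to log-probabilities, and `toy_student` is a hypothetical, sharply peaked model used only for illustration.

```python
import math
from typing import Callable, Dict, Tuple

EOS = "</s>"
Prefix = Tuple[str, ...]


def greedy_decode(model: Callable[[Prefix], Dict[str, float]],
                  max_len: int = 50) -> Tuple[Prefix, float]:
    """Take the single most probable next token at each step (beam size 1)."""
    prefix: Prefix = ()
    score = 0.0
    for _ in range(max_len):
        dist = model(prefix)            # one model evaluation per step
        tok = max(dist, key=dist.get)   # argmax over the vocabulary
        prefix += (tok,)
        score += dist[tok]
        if tok == EOS:
            break
    return prefix, score


def toy_student(prefix: Prefix) -> Dict[str, float]:
    """Hypothetical, sharply peaked model that wants to say 'good morning'."""
    plan = ("good", "morning", EOS)
    target = plan[len(prefix)] if len(prefix) < len(plan) else EOS
    vocab = ("good", "morning", "day", EOS)
    return {w: math.log(0.9 if w == target else 0.1 / 3) for w in vocab}


print(greedy_decode(toy_student))
```

Beam search with beam size K evaluates the model on up to K prefixes per step rather than one, so greedy decoding, combined with a smaller network, accounts for much of the faster decoding.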

Furthermore, the benefits of combining Seq-KD and Seq-Inter suggest a promising avenue for future research: together, these techniques yield models that are both compact and efficient. Remarkably, the mode of the student's output distribution aligns closely with the teacher's, so greedy decoding, which approximates that mode, recovers most of the benefit of beam search.

Conclusion and Future Work

The work shows that compressing NMT models through sequence-level knowledge distillation makes it practical to deploy high-performance models in resource-constrained environments. Because the approach works for different degrees of size reduction and combines well with weight pruning, it offers a comprehensive approach to model compression.

Future research could extend these methods across broader applications in NLP and other sequence-to-sequence tasks like parsing, speech recognition, and even complex tasks integrating multimodal data, such as image captioning and video generation. Integrating advanced character-level information and exploring other sparsity-inducing techniques may further enhance these models' efficiency and broad utility.