LaFAM: Unsupervised Feature Attribution with Label-free Activation Maps

Introduction

The paper "LaFAM: Unsupervised Feature Attribution with Label-free Activation Maps" introduces an approach to feature attribution in Convolutional Neural Networks (CNNs), a core problem in explainable AI (XAI). Activation Maps (AMs), which highlight salient regions in CNNs, are central to many XAI methods, yet the literature has rarely explored using raw AMs directly for feature attribution. LaFAM is proposed as an efficient, label-free alternative to traditional Class Activation Map (CAM) techniques that applies to both self-supervised and supervised learning scenarios. Because it works on raw AMs, it generates saliency maps without requiring labels.

Methodology

LaFAM is a post hoc method that generates saliency maps by aggregating activations from a convolutional layer. It is computationally efficient, requiring only a single forward pass to compute the activations. The AMs are averaged across the K channels of the chosen layer, which preserves the spatial coherence needed for saliency mapping; the averaged map is then min-max normalized and upsampled to the input image size. The channel average at spatial position (i, j) of layer l is:

$$\bar{A}^{l}_{i,j} = \frac{1}{K} \sum_{k=1}^{K} A^{l,k}_{i,j}$$

The upsampled saliency map is thus obtained as:

$$M_{\mathrm{LaFAM}} = \mathrm{Up}\big(N(\bar{A}^{l})\big)$$

where N denotes min-max normalization, and Up represents upsampling.
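
The two formulas above can be sketched in a few lines of NumPy. This is a minimal illustration and not the authors' implementation: the function name `lafam_saliency`, the `(K, H, W)` array layout, the small `eps` guard against division by zero, and the choice of nearest-neighbor upsampling are all assumptions made here, since the text does not specify the interpolation used for Up.

```python
import numpy as np

def lafam_saliency(activations, out_size, eps=1e-8):
    """Sketch of a LaFAM-style saliency map (hypothetical helper).

    activations: array of shape (K, H, W), the K activation maps of one layer.
    out_size: (height, width) of the input image.
    """
    # Channel average: mean over the K activation maps at each (i, j).
    mean_map = activations.mean(axis=0)

    # N: min-max normalization to [0, 1]; eps is an assumption that
    # avoids division by zero when the map is constant.
    lo, hi = mean_map.min(), mean_map.max()
    norm = (mean_map - lo) / (hi - lo + eps)

    # Up: nearest-neighbor upsampling (assumed here; other
    # interpolation schemes would fit the same formula).
    h, w = norm.shape
    rows = np.arange(out_size[0]) * h // out_size[0]
    cols = np.arange(out_size[1]) * w // out_size[1]
    return norm[np.ix_(rows, cols)]

# Usage with random stand-in activations (8 channels, 7x7 spatial grid).
acts = np.random.default_rng(0).random((8, 7, 7))
saliency = lafam_saliency(acts, (224, 224))
```

In practice the activations would come from a single forward pass through the network, for example from the last convolutional layer; no gradients or labels enter the computation.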

Experimental Evaluation

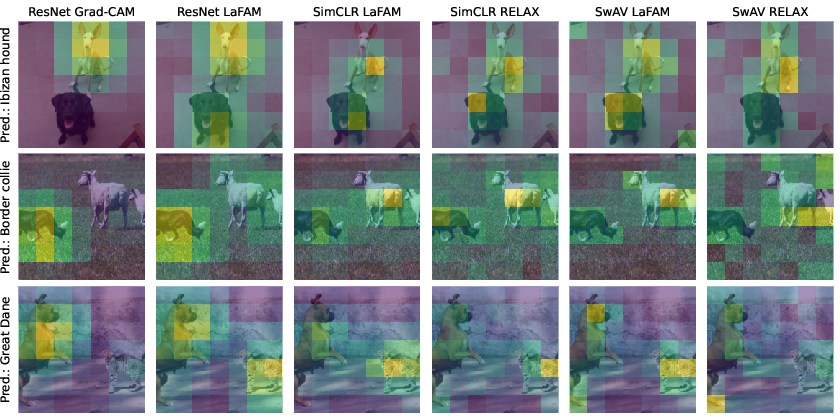

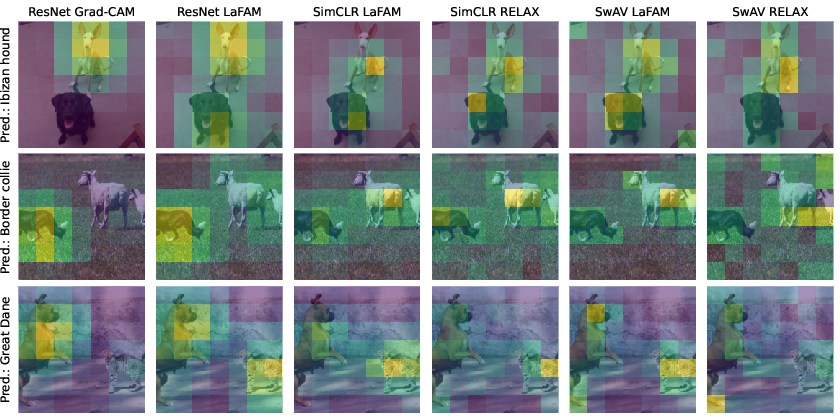

The method was evaluated with self-supervised learning (SSL) frameworks such as SimCLR and SwAV, and with a supervised ResNet50, on the ImageNet-1k and PASCAL VOC 2012 datasets. LaFAM was compared with RELAX in the SSL setting and with Grad-CAM in the supervised setting.

The results show that LaFAM consistently outperforms RELAX in the SSL setting, with better scores on all evaluated metrics, including Pointing-Game and Sparseness (Tables 1 and 2). This suggests that LaFAM produces precise, focused saliency maps, especially for scenes containing small objects.

Figure 1: Comparison of saliency maps for scenes with two distinct objects. The labels on the left are the ImageNet labels predicted by the ResNet50 classifier.

Compared to Grad-CAM in supervised scenarios, LaFAM remains competitive across several metrics. While Grad-CAM achieves a higher Sparseness score, indicating less scattered explanations, LaFAM's broader feature