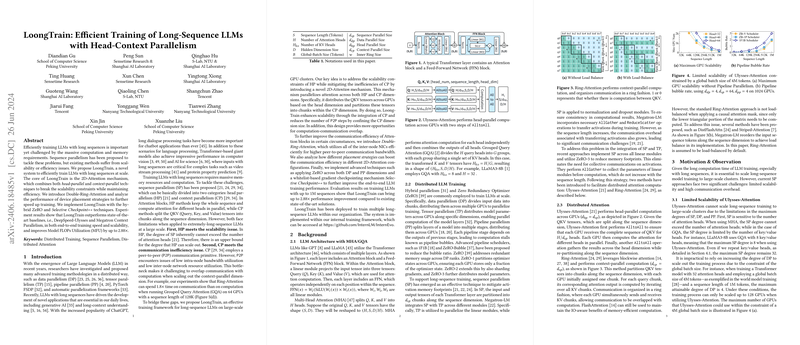

The paper introduces EpicSeq, a system for efficiently training Large Language Models (LLMs) with long sequences on large-scale Graphics Processing Unit (GPU) clusters. It addresses limitations of the two existing sequence parallelism approaches: head parallelism, which runs into scalability limits, and context parallelism, which suffers from inefficient communication. EpicSeq's core innovation is the 2D-Attention mechanism, which combines head-parallel and context-parallel techniques to overcome the scalability constraint while keeping communication efficient.

The limitations of existing sequence parallelism approaches are:

- Head parallelism's scalability is inherently limited by the number of attention heads.

- Context parallelism suffers from communication inefficiencies due to peer-to-peer communication, leading to low intra-node and inter-node bandwidth utilization.

EpicSeq proposes a hybrid approach to overcome these limitations.

2D-Attention Mechanism

The 2D-Attention mechanism parallelizes attention across both the head and context dimensions. It distributes the query ($Q$), key ($K$), and value ($V$) tensors across GPUs based on the head dimension and partitions them into chunks within the context dimension. The $d_{sp}$ GPUs of a sequence-parallel group are organized into a $d_{hp} \times d_{cp}$ grid, so that $d_{sp} = d_{hp} \times d_{cp}$, where:

- $d_{hp}$ is the head parallel size

- $d_{cp}$ is the context parallel size

In multi-head attention, the input tensors $Q$, $K$, and $V$ are divided along the sequence dimension, where each segment is shaped as $(H, S/d_{sp}, D/H)$.

- $H$ is the number of attention heads

- $S$ is the sequence length

- $D$ is the hidden dimension size

The 2D-Attention computation involves three steps:

- A SeqAlltoAll communication operation distributes the $Q$, $K$, and $V$ tensors based on the head dimension across the $d_{hp}$ GPUs of a head parallel group and re-partitions them along the sequence dimension across the $d_{cp}$ GPUs of a context parallel group.

- Each context parallel group independently performs Double-Ring-Attention, resulting in an output tensor of shape $(H/d_{hp}, S/d_{cp}, D/H)$.

- Another SeqAlltoAll operation consolidates the attention outputs across the head dimension and re-partitions the sequence dimension, transforming the output tensor to $(H, S/d_{sp}, D/H)$.
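The three steps can be traced as a shape calculation. The sketch below is illustrative only: the function name and dictionary keys are hypothetical, and the symbols `H`, `S`, `D`, `d_hp`, and `d_cp` are assumed to mean the number of heads, sequence length, hidden size, head parallel size, and context parallel size. It performs no communication; it only checks that the per-GPU shapes are consistent.

```python
def attention_2d_shapes(H: int, S: int, D: int, d_hp: int, d_cp: int) -> dict:
    """Per-GPU tensor shapes before and after each 2D-Attention step."""
    d_sp = d_hp * d_cp  # total sequence-parallel size (grid of d_hp x d_cp GPUs)
    if H % d_hp or S % d_sp or D % H:
        raise ValueError("H, S and D must divide evenly across the grid")
    head_dim = D // H
    return {
        # Input: all heads, 1/d_sp of the sequence.
        "input": (H, S // d_sp, head_dim),
        # First SeqAlltoAll: fewer heads, longer sequence chunk per GPU.
        "after_first_all_to_all": (H // d_hp, S // d_cp, head_dim),
        # Ring attention inside a context parallel group keeps this shape.
        "attention_output": (H // d_hp, S // d_cp, head_dim),
        # Second SeqAlltoAll: back to all heads, 1/d_sp of the sequence.
        "after_second_all_to_all": (H, S // d_sp, head_dim),
    }


shapes = attention_2d_shapes(H=32, S=131072, D=4096, d_hp=8, d_cp=8)
for step, shape in shapes.items():
    print(step, shape)
```

Note that the number of elements per GPU is the same at every step: the SeqAlltoAll trades heads for sequence length rather than changing the amount of data each GPU holds.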

To address the constraint of limited KV heads in Grouped Query Attention (GQA), EpicSeq uses KV replication. In the forward pass, the input KV tensors are shaped as $(H_{kv}, S/d_{sp}, D/H)$, where $H_{kv}$ is the number of KV heads. To align the number of KV heads with the head parallel size, 2D-Attention replicates the KV tensors, resulting in the shape $(\hat{H}_{kv}, S/d_{sp}, D/H)$, where $d_{hp} \le \hat{H}_{kv} \le H$.
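One way to picture KV replication is as an index map from replicated head slots back to the original KV heads. The sketch below is an assumption, not the paper's implementation: the function name is hypothetical, it replicates only up to exactly the head parallel size, and it places copies of the same head next to each other so that each GPU in a head parallel group receives the KV head its query heads need.

```python
def replicated_kv_layout(h_kv: int, d_hp: int) -> list[int]:
    """For each replicated KV head slot, return the original KV head it copies.

    Assumption: the replicated head count is the smallest value that lets
    every one of the d_hp GPUs own at least one KV head.
    """
    if h_kv >= d_hp:
        if h_kv % d_hp:
            raise ValueError("h_kv must be divisible by d_hp")
        return list(range(h_kv))  # enough heads already, no replication
    if d_hp % h_kv:
        raise ValueError("d_hp must be divisible by h_kv")
    factor = d_hp // h_kv
    # Copies of one head are adjacent: [0, 0, 1, 1, ...] for factor 2.
    return [head for head in range(h_kv) for _ in range(factor)]


print(replicated_kv_layout(h_kv=2, d_hp=4))   # two KV heads spread over four GPUs
print(replicated_kv_layout(h_kv=8, d_hp=4))   # no replication needed
```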

Double-Ring-Attention

To fully utilize available Network Interface Cards (NICs) for inter-node communication, the paper proposes Double-Ring-Attention, which partitions the GPUs into multiple inner rings. The GPUs within each context parallel group form several inner rings, while the inner rings collectively form an outer ring. Assuming each inner ring consists of $w$ GPUs, a context parallel process group of size $d_{cp}$ would have $d_{cp}/w$ concurrent inner rings.
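The ring structure can be sketched as a partition of one context parallel group. This is a minimal illustration with hypothetical names, not the paper's communication schedule. In particular, pairing each GPU with the GPU at the same position in the next inner ring for the outer-ring hop is an assumption made here for concreteness.

```python
def build_double_ring(cp_ranks: list[int], w: int):
    """Split one context parallel group into inner rings of w GPUs each.

    Returns (inner_rings, inner_next, outer_next), where inner_next[r] is the
    peer r sends to inside its inner ring, and outer_next[r] is the assumed
    peer in the next inner ring (same position in that ring).
    """
    if w <= 0 or len(cp_ranks) % w:
        raise ValueError("group size must be a multiple of the inner ring size")
    inner_rings = [cp_ranks[i:i + w] for i in range(0, len(cp_ranks), w)]
    inner_next, outer_next = {}, {}
    for ring_idx, ring in enumerate(inner_rings):
        next_ring = inner_rings[(ring_idx + 1) % len(inner_rings)]
        for pos, rank in enumerate(ring):
            inner_next[rank] = ring[(pos + 1) % w]  # wrap around inside the ring
            outer_next[rank] = next_ring[pos]       # hop to the next inner ring
    return inner_rings, inner_next, outer_next


rings, inner_next, outer_next = build_double_ring(list(range(8)), w=4)
print(rings)  # number of inner rings is group size divided by w
```

Because every GPU has its own outer-ring peer, several inter-node transfers can be in flight at once, which is the property that lets the scheme use more than one NIC.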

Device Placement Strategies

The paper discusses two device allocation strategies: head-first placement and context-first placement. Head-first placement prioritizes collocating GPUs of the same head parallel group on the same node, leveraging NVLink for SeqAlltoAll operations. Context-first placement prioritizes collocating GPUs of the same context parallel group on the same node, reducing inter-node traffic during Double-Ring-Attention.
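The difference between the two strategies shows up in how many nodes each kind of process group spans. The sketch below assumes a simple rank numbering (consecutive ranks share a node) and hypothetical function names; it is not taken from the paper's code.

```python
def rank_of(h: int, c: int, d_hp: int, d_cp: int, strategy: str) -> int:
    """Global rank of the GPU at head index h and context index c."""
    if strategy == "head_first":
        return c * d_hp + h      # a head parallel group gets consecutive ranks
    if strategy == "context_first":
        return h * d_cp + c      # a context parallel group gets consecutive ranks
    raise ValueError(f"unknown strategy: {strategy}")


def nodes_spanned(ranks: list[int], gpus_per_node: int) -> int:
    """Number of distinct nodes touched by a group of ranks."""
    return len({rank // gpus_per_node for rank in ranks})


def summarize(d_hp: int, d_cp: int, gpus_per_node: int, strategy: str):
    """Nodes spanned by one head parallel group and one context parallel group."""
    hp_group = [rank_of(h, 0, d_hp, d_cp, strategy) for h in range(d_hp)]
    cp_group = [rank_of(0, c, d_hp, d_cp, strategy) for c in range(d_cp)]
    return nodes_spanned(hp_group, gpus_per_node), nodes_spanned(cp_group, gpus_per_node)


for strategy in ("head_first", "context_first"):
    print(strategy, summarize(d_hp=8, d_cp=8, gpus_per_node=8, strategy=strategy))
```

With 64 GPUs on 8-GPU nodes and an 8 by 8 grid, head-first placement keeps each head parallel group on one node while each context parallel group spans all eight nodes; context-first placement reverses this.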

Performance Analysis

The paper provides a performance analysis of 2D-Attention, including scalability, computation, peer-to-peer communication, SeqAlltoAll communication, and memory usage. The analysis considers factors such as sequence length, head and context parallelism degrees, inner ring size, Multi-Head Attention (MHA) vs. GQA, and device placement strategies. The goal is to minimize the communication time that cannot be overlapped with computation, which is formulated as:

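$$
\min \; T_{\text{SeqAlltoAll}} + \left( T^{\text{fwd}}_{\text{inner}} + T^{\text{bwd}}_{\text{inner}} \right) \times \frac{d_{cp}}{w}
$$

- $T_{\text{SeqAlltoAll}}$ represents the SeqAlltoAll communication time

- $T^{\text{fwd}}_{\text{inner}}$ and $T^{\text{bwd}}_{\text{inner}}$ represent the forward and backward execution time per inner ring

- $d_{cp}$ is the context parallel size

- $w$ is the inner ring size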
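The objective can be evaluated numerically to compare configurations. The sketch below assumes the objective is the SeqAlltoAll time plus the per-inner-ring forward and backward time multiplied by the number of inner rings. This reading and all names and timing values are assumptions for illustration, not measurements from the paper.

```python
def non_overlapped_time(t_all_to_all: float, t_fwd_inner: float,
                        t_bwd_inner: float, d_cp: int, w: int) -> float:
    """Estimated attention time under the assumed objective."""
    if w <= 0 or d_cp % w:
        raise ValueError("d_cp must be a multiple of the inner ring size w")
    num_inner_rings = d_cp // w
    return t_all_to_all + (t_fwd_inner + t_bwd_inner) * num_inner_rings


# Hypothetical timings in milliseconds for two candidate inner ring sizes.
candidates = {
    4: non_overlapped_time(2.0, 3.0, 5.0, d_cp=8, w=4),
    8: non_overlapped_time(2.0, 7.0, 11.0, d_cp=8, w=8),
}
best_w = min(candidates, key=candidates.get)
print(candidates, best_w)
```

In practice the per-inner-ring times themselves depend on the inner ring size and on device placement, so they would have to be measured or modeled for each candidate rather than fixed as they are here.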

End-to-End System Implementation

The paper discusses the end-to-end system implementation of EpicSeq with two techniques: hybrid Zero Redundancy Optimizer (ZeRO) and selective checkpoint++. The hybrid ZeRO approach shards model states across both Data Parallelism (DP) and sequence parallelism dimensions, reducing redundant memory usage. Selective checkpoint++ adds attention modules to a whitelist. During the forward pass, the modified checkpoint function saves the outputs of these modules. During the backward pass, the checkpoint function retrieves the stored outputs and continues the computation graph.
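The whitelist behavior of selective checkpoint++ can be illustrated with a toy model in plain Python. This is not a PyTorch API and not the paper's implementation: the class, its method names, and the arithmetic stand-ins for modules are all hypothetical. It only shows that a whitelisted module runs once, while the other modules run again when the block is recomputed during the backward pass.

```python
from collections import Counter


class SelectiveCheckpoint:
    """Toy recomputation wrapper that stores outputs of whitelisted modules."""

    def __init__(self, whitelist):
        self.whitelist = set(whitelist)
        self.saved = {}              # outputs kept from the forward pass
        self.executions = Counter()  # how often each module really ran

    def call(self, name, fn, x, recomputing):
        if recomputing and name in self.saved:
            return self.saved.pop(name)  # reuse the stored output, skip compute
        self.executions[name] += 1
        out = fn(x)
        if not recomputing and name in self.whitelist:
            self.saved[name] = out
        return out

    def block(self, x, recomputing=False):
        # Stand-ins for the modules of one transformer block.
        x = self.call("norm", lambda v: v + 1, x, recomputing)
        x = self.call("attention", lambda v: v * 2, x, recomputing)
        return self.call("mlp", lambda v: v - 3, x, recomputing)


ckpt = SelectiveCheckpoint(whitelist={"attention"})
forward_out = ckpt.block(5)                       # forward pass
recomputed_out = ckpt.block(5, recomputing=True)  # recomputation in backward
print(forward_out, recomputed_out, dict(ckpt.executions))
```

The trade-off is memory for time: the stored attention outputs occupy memory between the forward and backward passes, in exchange for not repeating the most expensive module during recomputation.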

Evaluation Results

The paper presents experimental results comparing EpicSeq with DeepSpeed-Ulysses and Megatron Context Parallelism. The results demonstrate that EpicSeq outperforms these baselines in both end-to-end training speed and scalability, improving Model FLOPs Utilization (MFU) by up to 2.88x. The evaluation includes training 7B-MHA and 7B-GQA models on 64 GPUs with various sequence lengths and configurations. The results highlight the benefits of 2D-Attention, Double-Ring-Attention, and Selective Checkpoint++.