An Analysis of LightSeq: Sequence Level Parallelism for Distributed Training of Long Context Transformers

The paper "LightSeq: Sequence Level Parallelism for Distributed Training of Long Context Transformers" introduces an approach to training large language models (LLMs) with long contexts. Demand for longer context lengths is growing, and the memory needed to train on long sequences grows with it. Existing systems such as Megatron-LM partition attention heads across devices, which ties the parallelism degree to the number of heads and is inefficient, or unusable, for models whose head count is not divisible by popular parallelism degrees.
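The divisibility constraint can be made concrete with a small sketch. The helper names and the example head counts and sequence length below are hypothetical, chosen only for illustration; they are not taken from the paper's code or from Megatron-LM.

```python
def valid_head_parallel_degrees(num_heads, candidate_degrees):
    """Degrees that split the attention heads evenly across devices."""
    return [d for d in candidate_degrees if num_heads % d == 0]


def valid_sequence_parallel_degrees(seq_len, candidate_degrees):
    """Degrees that split the sequence into equal chunks across devices."""
    return [d for d in candidate_degrees if seq_len % d == 0]


candidates = [2, 4, 8, 16]

# Hypothetical model with 33 heads: no common degree divides the head count.
head_options_33 = valid_head_parallel_degrees(33, candidates)

# Hypothetical model with 32 heads: every candidate degree works.
head_options_32 = valid_head_parallel_degrees(32, candidates)

# A 32768-token sequence splits evenly regardless of the head count.
seq_options = valid_sequence_parallel_degrees(32768, candidates)
```

In this sketch the 33-head model has no valid head-parallel degree among the candidates, while the sequence-level split depends only on the sequence length, which can also be padded to a convenient multiple.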

Key Contributions and Methodology

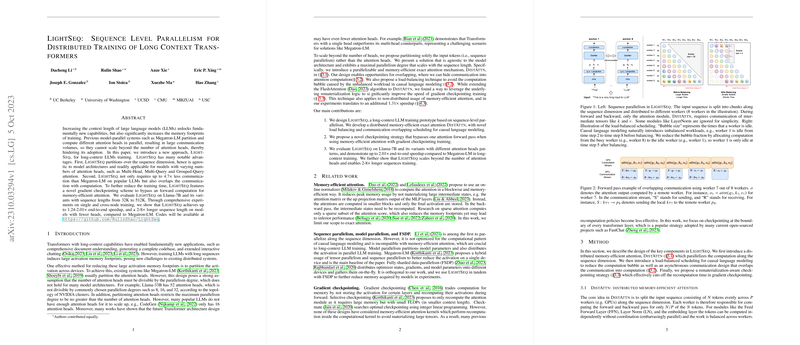

- Sequence Dimension Partitioning: LightSeq partitions the input along the sequence dimension rather than across attention heads. This makes it agnostic to model architecture and lets it scale independently of the number of attention heads, so it applies equally to Multi-Head, Multi-Query, and Grouped-Query attention.

- Reduced Communication Overhead: LightSeq requires up to 4.7 times less communication than Megatron-LM. The communication that remains is overlapped with computation through DistAttn, a distributed implementation of memory-efficient attention, which hides much of its latency.

- Gradient Checkpointing Strategy: A rematerialization-aware gradient checkpointing scheme bypasses one forward computation of memory-efficient attention. Memory-efficient attention already recomputes its intermediates during the backward pass, so conventional checkpointing would run the attention forward pass a second time. Skipping that redundant recomputation speeds up training while keeping the memory savings.
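The reason attention can be split along the sequence dimension at all is that softmax can be accumulated chunk by chunk with a running maximum and a running normalizer. The following is a minimal single-process sketch of that idea, not the DistAttn implementation: the function names and test data are hypothetical, it handles a single query vector, it omits causal masking and the usual scaling factor, and it simulates workers by looping over key/value chunks rather than communicating between devices.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def full_attention(q, keys, values):
    """Reference: softmax over all keys at once, for one query vector."""
    scores = [dot(q, k) for k in keys]
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    z = sum(weights)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) / z for j in range(dim)]


def chunked_attention(q, key_chunks, value_chunks):
    """Same result, consuming one key/value chunk at a time (online softmax)."""
    dim = len(value_chunks[0][0])
    running_max = float("-inf")
    running_sum = 0.0
    acc = [0.0] * dim
    for keys, values in zip(key_chunks, value_chunks):
        scores = [dot(q, k) for k in keys]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated so far to the new maximum.
        # On the first chunk this is exp(-inf) == 0.0, which is harmless.
        scale = math.exp(running_max - new_max)
        running_sum *= scale
        acc = [a * scale for a in acc]
        for s, v in zip(scores, values):
            w = math.exp(s - new_max)
            running_sum += w
            for j in range(dim):
                acc[j] += w * v[j]
        running_max = new_max
    return [a / running_sum for a in acc]


def split(seq, num_workers):
    """Split a list into equal contiguous chunks, one per simulated worker."""
    size = len(seq) // num_workers
    return [seq[i * size:(i + 1) * size] for i in range(num_workers)]


# Hypothetical toy data: 8 tokens, hidden size 4, 4 simulated workers.
q = [0.1, 0.2, -0.3, 0.4]
keys = [[math.sin(i + j) for j in range(4)] for i in range(8)]
values = [[math.cos(i * j) for j in range(4)] for i in range(8)]

reference = full_attention(q, keys, values)
chunked = chunked_attention(q, split(keys, 4), split(values, 4))
```

Because the chunked result matches the full computation up to floating-point error, each device only needs its own slice of the sequence plus the key/value chunks it receives from peers, and the number of attention heads never enters the partitioning.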

Experimental Results

Experiments show that LightSeq achieves speedups of up to 2.01 times over Megatron-LM while supporting sequences that are 2 to 8 times longer. The gains are most pronounced for models with fewer attention heads, where head-partitioned methods struggle to scale.

Practical and Theoretical Implications

Sequence-level parallelism offers a practical way to train long-context LLMs efficiently across distributed systems, which matters for applications such as whole-document understanding and extended interactive sessions. Theoretically, the approach opens new avenues for research into parallelism strategies that go beyond conventional attention head partitioning.

Speculation on Future Developments

The shift toward sequence dimension partitioning could inspire further work on hybrid strategies that combine sequence and data parallelism. In addition, optimizing peer-to-peer (P2P) communication and exploring topology-aware solutions could improve performance for shorter sequence lengths and for models with standard attention configurations, broadening the applicability of LightSeq.

In conclusion, LightSeq presents a well-rounded advancement in distributed transformer training, addressing core limitations of previous methods. Its contribution lies not only in immediate performance enhancements but also in setting a foundation for more adaptive parallel training strategies in neural network research.