- The paper introduces FLUID-LLM, a framework that integrates spatiotemporal embeddings with large language models to efficiently predict unsteady fluid dynamics.

- It encodes fluid flow data into 2D grids and uses a GNN decoder, achieving lower RMSE than baseline models on benchmark datasets such as Airfoil.

- The approach demonstrates strong in-context and few-shot learning capabilities, paving the way for real-time CFD simulation and broader scientific applications.

FLUID-LLM: Learning Computational Fluid Dynamics with Spatiotemporal-aware LLMs

Introduction

The paper introduces FLUID-LLM, a novel approach to computational fluid dynamics (CFD) built on large language models (LLMs). Traditional CFD methods solve the Navier-Stokes equations numerically, which demands intensive computational resources. FLUID-LLM instead combines an LLM with spatiotemporal-aware encoding to predict fluid dynamics efficiently. The framework exploits the LLM's autoregressive (next-step) prediction, supplemented with spatially aware layers, to improve prediction accuracy for unsteady fluid flows.

Methodology

FLUID-LLM encodes spatiotemporal flow information into an LLM's input space. At its core, a fine-tuned LLM predicts future fluid states from a sequence of past states:

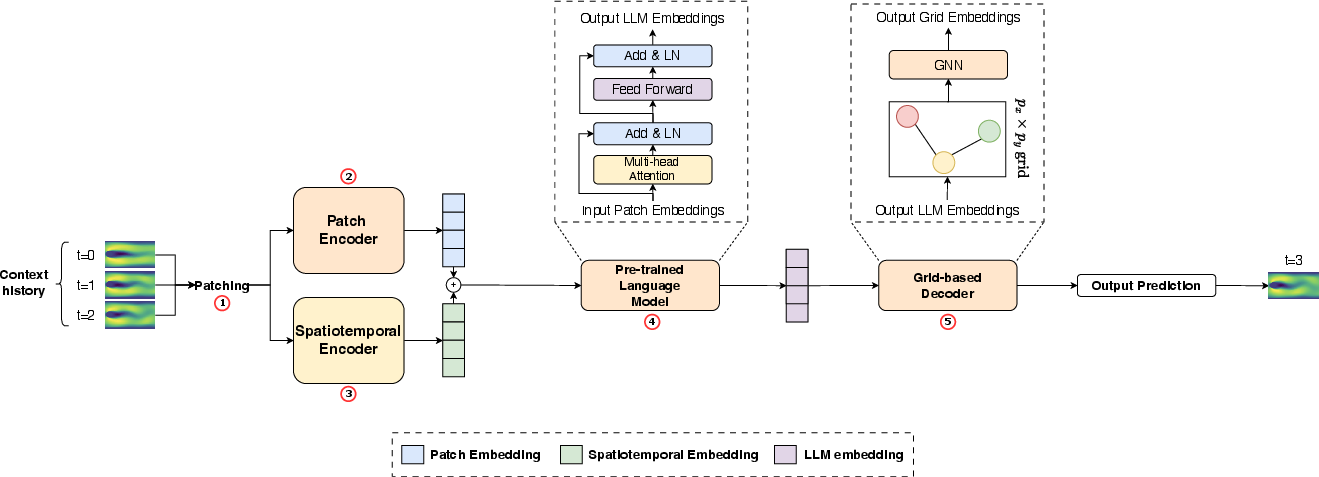

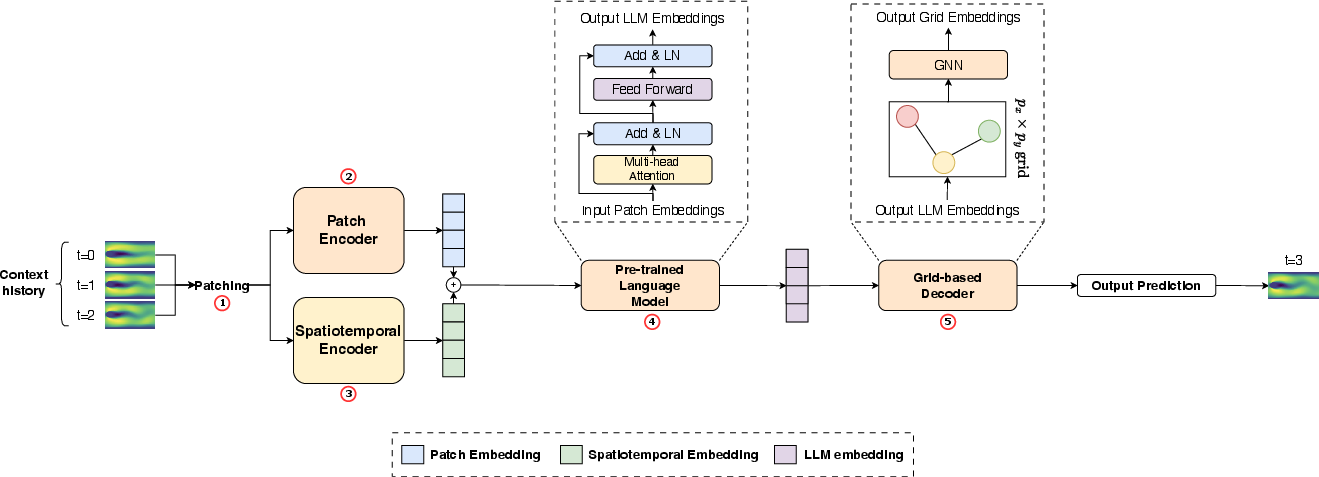

Figure 1: High-level overview of the FLUID-LLM framework showcasing the integration of state embedding with GNN and LLM for fluid state prediction.
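Predicting "from historical fluid state sequences" implies an autoregressive rollout: the model sees a window of recent states, predicts the next one, and that prediction becomes part of the next input window. The NumPy sketch below shows only this loop. `rollout`, `context_len` and the toy `linear_extrapolate` predictor are hypothetical stand-ins for the fine-tuned LLM, not the paper's code.

```python
import numpy as np


def rollout(history, predict_next, n_steps, context_len):
    """Autoregressively predict n_steps future states.

    history: array of shape (T, H, W, C) holding T >= context_len past states.
    predict_next: callable mapping a (context_len, H, W, C) window to the
        next (H, W, C) state; a placeholder for the fine-tuned LLM.
    """
    if len(history) < context_len:
        raise ValueError("history is shorter than the context window")
    states = list(history)
    preds = []
    for _ in range(n_steps):
        window = np.stack(states[-context_len:])  # most recent states only
        nxt = predict_next(window)
        preds.append(nxt)
        states.append(nxt)  # feed the prediction back in as the newest input
    return np.stack(preds)


def linear_extrapolate(window):
    """Hypothetical stand-in model: extrapolate the last two states linearly."""
    return 2 * window[-1] - window[-2]


# Toy history: three 1x1 single-channel states with values 0, 1, 2.
history = np.arange(3, dtype=float).reshape(3, 1, 1, 1)
preds = rollout(history, linear_extrapolate, n_steps=4, context_len=2)
print(preds.ravel())  # [3. 4. 5. 6.]
```

Because each prediction is fed back as input, errors can compound over long rollouts, which is why multi-step metrics such as RMSE after many steps are a natural evaluation target.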

Model Structure

- Model Inputs: The model maps fluid flow data onto a regular 2D grid and splits the grid into patches. Each patch is embedded into a vector matching the LLM's feature space, so the LLM can process flow patches as tokens.

- Spatiotemporal Embedding: Learned spatial and temporal embeddings are combined with the patch embeddings, telling the LLM where in the domain and at which timestep each patch lies.

- LLM Embedding and Decoding: A pre-trained LLM processes the resulting embedding sequence. Its outputs are decoded by a graph neural network (GNN) to predict the next fluid state, with the aim of capturing both local and global flow dynamics.
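The input pipeline in the list above (grid, patches, projection to the LLM's feature size, spatial and temporal embeddings) can be sketched in NumPy as follows. This is an illustration under stated assumptions: `patchify` and `PatchEmbedder` are hypothetical names, the split of the spatial embedding into separate row and column tables is an assumption, embeddings are combined by addition, and the random weights stand in for parameters that would be learned during training.

```python
import numpy as np


def patchify(grid, p):
    """Split a (T, H, W, C) grid into non-overlapping p x p patches.

    Returns an array of shape (T, H // p, W // p, p * p * C).
    """
    T, H, W, C = grid.shape
    if H % p or W % p:
        raise ValueError("grid height and width must be divisible by p")
    x = grid.reshape(T, H // p, p, W // p, p, C)
    x = x.transpose(0, 1, 3, 2, 4, 5)  # (T, nH, nW, p, p, C)
    return x.reshape(T, H // p, W // p, p * p * C)


class PatchEmbedder:
    """Hypothetical embedder: linear projection plus row, column and
    timestep embeddings (randomly initialised here, learned in practice)."""

    def __init__(self, patch_dim, d_model, n_rows, n_cols, max_t, seed=0):
        rng = np.random.default_rng(seed)
        self.proj = rng.normal(0.0, 0.02, (patch_dim, d_model))
        self.row_emb = rng.normal(0.0, 0.02, (n_rows, d_model))
        self.col_emb = rng.normal(0.0, 0.02, (n_cols, d_model))
        self.time_emb = rng.normal(0.0, 0.02, (max_t, d_model))

    def __call__(self, patches):
        T, nH, nW, _ = patches.shape
        tokens = patches @ self.proj  # (T, nH, nW, d_model)
        tokens = (tokens
                  + self.row_emb[None, :nH, None, :]   # broadcast over T, cols
                  + self.col_emb[None, None, :nW, :]   # broadcast over T, rows
                  + self.time_emb[:T, None, None, :])  # broadcast over space
        return tokens.reshape(T * nH * nW, -1)  # flat token sequence


grid = np.arange(16, dtype=float).reshape(1, 4, 4, 1)  # one 4x4 frame
patches = patchify(grid, p=2)
print(patches[0, 0, 0])  # [0. 1. 4. 5.]  (top-left 2x2 patch)

embedder = PatchEmbedder(patch_dim=4, d_model=8, n_rows=2, n_cols=2, max_t=16)
tokens = embedder(patches)
print(tokens.shape)  # (4, 8): 1 timestep x 2x2 patches, 8-d tokens
```

Adding position and time embeddings, rather than concatenating them, keeps each token at the LLM's hidden size, which is what lets the sequence be fed directly to a pre-trained model.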

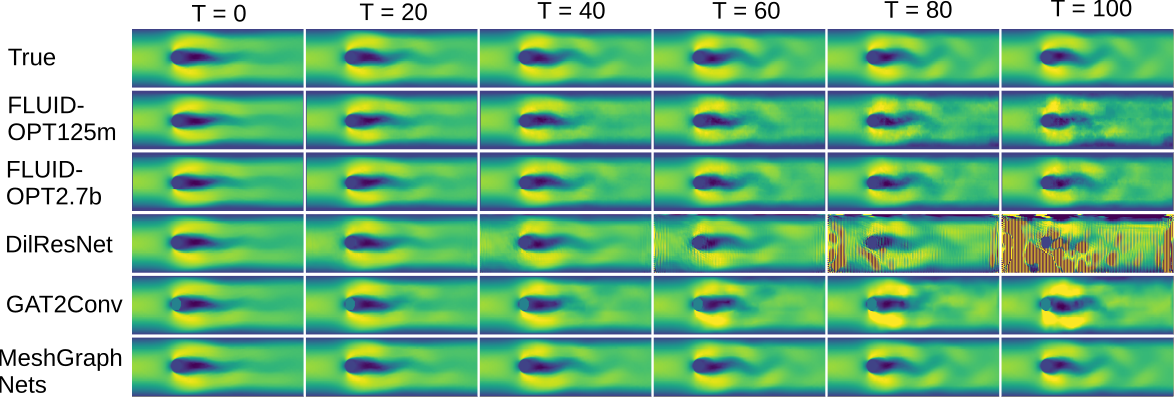

Experiments and Results

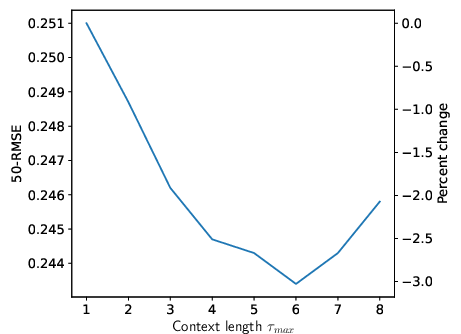

FLUID-LLM was evaluated on standard CFD benchmark datasets, including Airfoil, and the results show that it predicts complex fluid dynamics accurately:

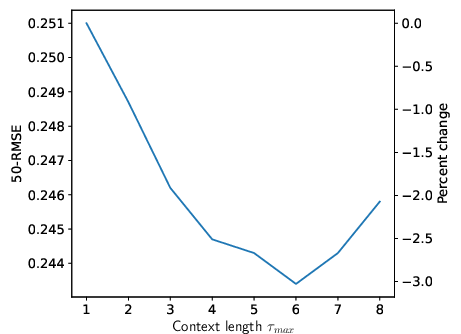

Figure 2: Predicted RMSE after 50 steps on the Airfoil dataset, illustrating the efficacy of the FLUID-LLM model compared to baselines.
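The caption does not say whether "RMSE after 50 steps" is measured on the 50th predicted state alone or pooled over all 50 predicted states. The NumPy sketch below computes both variants for a rollout of shape (steps, H, W, C). The function names and toy data are hypothetical, and which variant the paper reports is not established here.

```python
import numpy as np


def rmse_at_step(pred, target, n):
    """RMSE of the n-th predicted state alone (n is 1-indexed)."""
    err = pred[n - 1] - target[n - 1]
    return float(np.sqrt(np.mean(err ** 2)))


def rmse_up_to_step(pred, target, n):
    """RMSE pooled over all grid points, channels and steps 1..n."""
    err = pred[:n] - target[:n]
    return float(np.sqrt(np.mean(err ** 2)))


# Toy rollout: the target is zero everywhere; the error at step k equals k.
target = np.zeros((3, 2, 2, 1))
pred = np.stack([np.full((2, 2, 1), k, dtype=float) for k in (1, 2, 3)])
print(rmse_at_step(pred, target, 2))     # 2.0
print(rmse_up_to_step(pred, target, 2))  # sqrt((1 + 4) / 2), about 1.581
```

The per-step variant isolates accumulated error at the horizon, while the pooled variant also rewards accuracy at early steps, so the two can rank models differently.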

Implications and Future Directions

FLUID-LLM illustrates the potential of integrating LLMs in domains beyond natural language processing, specifically in simulating complex physical systems like fluid dynamics. This approach not only reduces the computational overhead typically associated with traditional CFD methods but also opens avenues for further research into leveraging LLMs for other scientific simulations.

Future work may explore longer model context windows and newer LLM architectures to further improve predictive accuracy and computational efficiency. Such advances could support real-time applications in engineering design and environmental modeling.

Conclusions

FLUID-LLM brings together language modeling and computational physics, showing benefits in both predictive accuracy and resource efficiency. The study's evaluations support spatiotemporal-aware LLMs as a promising approach to CFD prediction tasks.