Overview of "Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality"

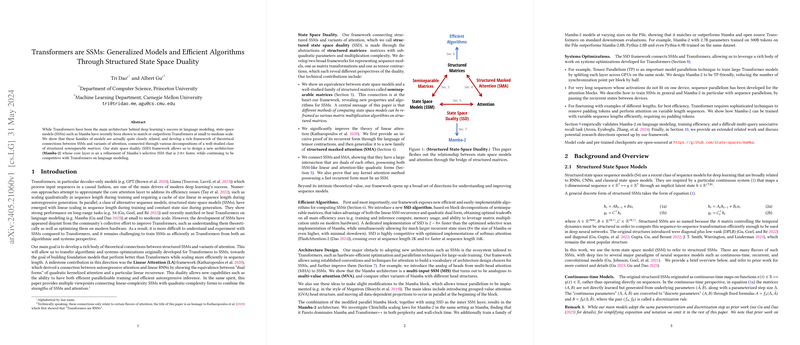

The paper "Transformers are SSMs: Generalized Models and Efficient Algorithms Through Structured State Space Duality" by Tri Dao and Albert Gu introduces a novel theoretical framework that bridges the conceptual gap between State Space Models (SSMs) and Transformer architectures through the lens of structured matrices. This work formalizes the connections between these two families of models, providing a rich theoretical backdrop for their unification and improvement.

Core Contributions

- Theoretical Connections:

- The authors establish that SSMs and Transformer variants can both be understood and analyzed as structured matrices, specifically semiseparable matrices. The central result, stated as a theorem in the paper, is the equivalence between state space models and sequentially semiseparable matrices.

- The concept of State Space Duality (SSD) is introduced, revealing that SSMs and Transformers share dual representations: one in the recurrent (linear) form and the other in the explicit quadratic (attention-like) form.

- Efficient Algorithms:

- The paper proposes a new hardware-efficient algorithm for computing SSMs by exploiting block decompositions of semiseparable matrices. This method leverages matrix multiplication units on modern hardware, significantly increasing computational efficiency.

- A critical insight is that SSD applies to both training and inference, and remains practical on long sequences because the computation scales linearly in sequence length.

- Empirical Validation:

- The authors validate their theoretical constructs through empirical experiments demonstrating the computational efficiency and performance advantages of SSD-based models in language modeling and synthetic recall tasks.

- Architectural Insights:

- The paper translates architectural designs and optimizations from Transformers to SSMs, resulting in the iterative refinement of the Mamba model to Mamba-2, incorporating efficient projections and normalization strategies.
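The duality described above can be checked numerically on a toy example. The following is a minimal sketch, not the authors' implementation: it assumes a single input channel and a scalar decay `a_t` per time step (the scalar-times-identity case), and the function names `ssm_recurrent` and `ssm_quadratic` are hypothetical names chosen for illustration.

```python
import random

def ssm_recurrent(a, B, C, x):
    """Linear form: h_t = a_t * h_{t-1} + B_t * x_t, then y_t = <C_t, h_t>."""
    h = [0.0] * len(B[0])
    y = []
    for a_t, B_t, C_t, x_t in zip(a, B, C, x):
        h = [a_t * h_i + b_i * x_t for h_i, b_i in zip(h, B_t)]
        y.append(sum(c_i * h_i for c_i, h_i in zip(C_t, h)))
    return y

def ssm_quadratic(a, B, C, x):
    """Quadratic form: y = M x, M[t][s] = <C_t, B_s> * a_{s+1} * ... * a_t for s <= t."""
    y = []
    for t in range(len(x)):
        acc = 0.0
        decay = 1.0  # product a_{s+1} ... a_t, built up as s walks back from t
        for s in range(t, -1, -1):
            score = sum(c * b for c, b in zip(C[t], B[s]))  # attention-like score
            acc += score * decay * x[s]
            decay *= a[s]
        y.append(acc)
    return y

# Toy inputs (sizes and distributions are arbitrary assumptions).
random.seed(0)
T, N = 6, 4
a = [random.uniform(0.5, 1.0) for _ in range(T)]
B = [[random.gauss(0, 1) for _ in range(N)] for _ in range(T)]
C = [[random.gauss(0, 1) for _ in range(N)] for _ in range(T)]
x = [random.gauss(0, 1) for _ in range(T)]

y_rec = ssm_recurrent(a, B, C, x)
y_quad = ssm_quadratic(a, B, C, x)
max_diff = max(abs(p - q) for p, q in zip(y_rec, y_quad))
```

Both functions compute the same outputs up to floating-point error. The recurrent form does work proportional to the sequence length, while the quadratic form touches every pair `(t, s)` with `s <= t`, much like causal attention.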

Implications and Future Directions

Practical Applications

The introduction of the SSD framework provides several practical benefits:

- Computational Efficiency:

- By recasting SSMs within the structured matrix framework, the authors unlock performance improvements by utilizing efficient matrix multiplication hardware. This has significant implications for the deployment of large-scale models, especially in resource-constrained environments.

- Scalable Sequence Models:

- SSD allows for scalable sequence models that handle long-range dependencies more efficiently than traditional Transformers, making them suitable for tasks in natural language processing where long sequences are common.

- Adaptability:

- The duality of representation (recurrent and quadratic forms) permits flexibility in choosing the most efficient computation mode depending on the specific task and hardware constraints, thereby enhancing the adaptability of these models.
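One way to picture how the two modes can be mixed is a chunked computation: the quadratic form is used inside each chunk, and a single state is carried between chunks. The sketch below is an illustration under simplifying assumptions (one input channel, scalar decay per step, plain Python loops instead of batched matrix multiplications). The names `ssm_chunked` and `chunk` are hypothetical, and this is not the paper's GPU implementation.

```python
import random

def dot(u, v):
    return sum(p * q for p, q in zip(u, v))

def ssm_recurrent(a, B, C, x):
    """Reference: h_t = a_t * h_{t-1} + B_t * x_t, y_t = <C_t, h_t>."""
    h = [0.0] * len(B[0])
    y = []
    for a_t, B_t, C_t, x_t in zip(a, B, C, x):
        h = [a_t * h_i + b_i * x_t for h_i, b_i in zip(h, B_t)]
        y.append(dot(C_t, h))
    return y

def ssm_chunked(a, B, C, x, chunk):
    """Quadratic form within each chunk, recurrence on one state across chunks."""
    T, n = len(x), len(B[0])
    h = [0.0] * n  # state summarizing everything before the current chunk
    y = [0.0] * T
    for start in range(0, T, chunk):
        end = min(start + chunk, T)
        for t in range(start, end):
            # (1) Diagonal block: attention-like sum over positions in this chunk.
            decay = 1.0
            for s in range(t, start - 1, -1):
                y[t] += dot(C[t], B[s]) * decay * x[s]
                decay *= a[s]
            # (2) Off-diagonal blocks: decay is now a[start] * ... * a[t],
            # which scales the state carried in from earlier chunks.
            y[t] += decay * dot(C[t], h)
        # (3) Summarize this chunk into a state and fold it into the carry.
        chunk_state = [0.0] * n
        decay = 1.0
        for s in range(end - 1, start - 1, -1):
            chunk_state = [cs + decay * b * x[s] for cs, b in zip(chunk_state, B[s])]
            decay *= a[s]
        h = [decay * h_i + cs for h_i, cs in zip(h, chunk_state)]
    return y

# Toy inputs (arbitrary assumptions); T is deliberately not a multiple of chunk.
random.seed(1)
T, N = 10, 3
a = [random.uniform(0.5, 1.0) for _ in range(T)]
B = [[random.gauss(0, 1) for _ in range(N)] for _ in range(T)]
C = [[random.gauss(0, 1) for _ in range(N)] for _ in range(T)]
x = [random.gauss(0, 1) for _ in range(T)]

y_ref = ssm_recurrent(a, B, C, x)
y_blk = ssm_chunked(a, B, C, x, chunk=4)
```

With `chunk=1` the sketch reduces to the pure recurrence, and with `chunk >= T` it reduces to the pure quadratic form. Intermediate chunk sizes trade between the two, which is where the choice of computation mode depends on hardware.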

Theoretical Contributions

Unified View of Sequence Models

This work synthesizes a unified view of sequence models, showing that key components of attention mechanisms and SSMs can be expressed through structured matrix transformations. It posits that:

- Attention is SSM:

- The core operations in attention mechanisms can be mirrored by appropriately structured SSM recurrences.

- Semiseparable Matrices:

- Semiseparable matrices form the backbone of this duality, able to encapsulate the complexity of sequence transformations efficiently.

- Algorithmic Equivalence:

- The different forms (linear vs. quadratic) of computing SSMs correspond to matrix multiplication algorithms operating on structured representations, thereby allowing the authors to derive optimal algorithms for sequence-to-sequence transformations.
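The matrix view behind these points can be made explicit by unrolling the recurrence. The notation here ($h_t$, $A_t$, $B_t$, $C_t$, $M$, $L$, state size $N$) is assumed for illustration and follows common SSM conventions. It may differ from the paper's exact indexing. Starting from a zero initial state,

$$
h_t = A_t h_{t-1} + B_t x_t, \qquad y_t = C_t^\top h_t ,
$$

unrolling over time gives

$$
y_t = \sum_{s=0}^{t} C_t^\top A_t A_{t-1} \cdots A_{s+1} B_s \, x_s
\quad\Longrightarrow\quad
y = M x, \qquad
M_{ts} =
\begin{cases}
C_t^\top A_t \cdots A_{s+1} B_s, & s \le t, \\
0, & s > t .
\end{cases}
$$

In the special case $A_t = a_t I$, the matrix factors as

$$
M = L \circ (C B^\top), \qquad L_{ts} = a_t a_{t-1} \cdots a_{s+1} \ \ (s \le t), \qquad L_{ts} = 0 \ \ (s > t),
$$

where $\circ$ is the elementwise product and the rows of $C$ and $B$ are $C_t^\top$ and $B_s^\top$. Setting every $a_t = 1$ turns $L$ into the causal mask, and $M x$ becomes unnormalized causal linear attention with $C$, $B$, and $x$ playing the roles of queries, keys, and values. Multiplying by $M$ row by row through the recurrence is the linear form. Materializing $M$ and multiplying is the quadratic form.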

Future Developments in AI

The framework presented opens new avenues for exploring further convergence between different model architectures in AI. Potential directions include:

- Hybrid Architectures:

- Combining elements of SSMs and attention mechanisms in hybrid models could exploit the strengths of both, leading to more robust and efficient architectures.

- Expansion in Applications:

- Extending this framework beyond NLP to other domains, such as time series analysis and signal processing, where structured sequence transformations are beneficial.

- Further Optimization:

- Leveraging more advanced structured matrix techniques from scientific computing to improve the efficiency and scalability of SSM-based models further.

- Interpretability:

- Investigating how interpretable these dual models are, and whether insights from one form can aid in interpreting the behavior of the other and of deep networks more broadly.

Conclusion

The paper by Dao and Gu represents a significant step towards unifying recurrent and attention-based models through structured matrix theory. By establishing theoretical links and demonstrating practical improvements, it opens pathways for developing more efficient and scalable sequence models, and it gives future research on deep learning architectures a shared framework to build on.