Exploring the Potential of Multimodal AI in Medicine with Med-Gemini Models

Introduction

AI in medicine has steadily moved from theory to practice, changing how medical data is interpreted and used. Multimodal AI systems, which can process diverse data types such as medical images and genetic information, are beginning to reflect the multifaceted nature of human health.

Unlocking Multimodal Capabilities in Medical AI

Advanced Multimodal Models: Recent large multimodal models (LMMs) such as Gemini have shown strong capabilities in handling complex combinations of text, images, and other data. This has significant implications for personalized medicine, which depends on combining many kinds of patient data.

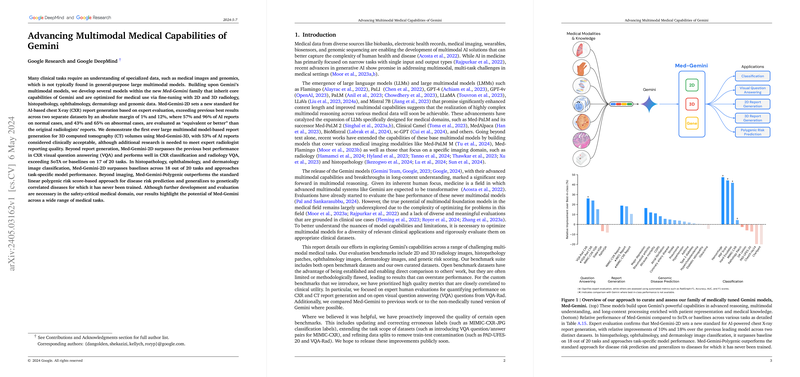

Med-Gemini Family Introduction: Building on the Gemini models, the Med-Gemini family is tailored specifically for medical applications. By integrating varied medical data types (radiology, pathology, genomics, and beyond), these models aim to address the complexity of clinical diagnosis and treatment planning.

Deep Dive into Med-Gemini's Performance

Versatile Medical Task Handling: Med-Gemini models have shown promise across several key areas of healthcare AI, from generating reports from medical images to answering complex clinical questions about those images.

- Radiology Reports: Med-Gemini performs strongly at generating interpretive reports from both 2D and 3D medical imaging, such as chest X-rays and head/neck CT scans. The goal is not merely fluent text but reports that correctly identify and summarize the clinically important findings.

- Disease Prediction Using Genetic Data: In genomics, Med-Gemini takes a novel approach: genetic risk information is translated into a visual format that the model can interpret, and the model then predicts potential disease risks with notable accuracy.

- Diagnostic Assistance Through QA: In visual question answering (VQA) tasks, Med-Gemini answers questions about medical images, which could help healthcare professionals by providing rapid insights into patient data.
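The article does not say how genetic risk information is turned into an image, so the following is only a minimal sketch under stated assumptions, not Med-Gemini's actual encoding. It assumes each trait comes with a polygenic risk score already expressed as a z-score, clips it to ±3, maps it to a grayscale intensity, and paints it into one square cell of a grid so a vision encoder could read it. The function names `zscore_to_intensity` and `encode_prs_as_image`, the clipping range, and the grid layout are all hypothetical choices made for illustration.

```python
import math
from typing import Dict, List


def zscore_to_intensity(z: float, clip: float = 3.0) -> int:
    """Map a z-scored risk value to an intensity in 1..255.

    Hypothetical scheme: values are clipped to [-clip, clip] and scaled
    linearly. Intensity 0 is reserved to mark grid cells with no trait.
    """
    z = max(-clip, min(clip, z))
    return 1 + round((z + clip) / (2 * clip) * 254)


def encode_prs_as_image(prs: Dict[str, float], block: int = 4) -> List[List[int]]:
    """Lay out one risk score per square cell of a grayscale image.

    Traits are sorted by name and placed row-major on the smallest square
    grid that fits them. Each cell is block x block pixels, so a vision
    encoder sees more than a single pixel per trait. Unused cells stay 0.
    """
    traits = sorted(prs)
    side = math.ceil(math.sqrt(len(traits))) if traits else 0
    size = side * block
    image = [[0] * size for _ in range(size)]
    for i, trait in enumerate(traits):
        row, col = divmod(i, side)
        value = zscore_to_intensity(prs[trait])
        # Fill every pixel belonging to this trait's cell.
        for r in range(row * block, (row + 1) * block):
            for c in range(col * block, (col + 1) * block):
                image[r][c] = value
    return image


if __name__ == "__main__":
    # Hypothetical per-trait z-scores for one individual.
    scores = {"coronary_artery_disease": 1.2, "type_2_diabetes": -0.4, "glaucoma": 2.7}
    img = encode_prs_as_image(scores, block=2)
    for line in img:
        print(line)
```

Reserving intensity 0 for empty cells keeps "no trait here" distinguishable from a very low risk score; a real system would also need a fixed, documented trait order so that the model sees each trait in the same position for every patient.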

Implications and Future Directions

Broadening the AI Application in Medicine: The results suggest that Med-Gemini could serve as a useful assistive tool for a range of medical specialists, from radiologists who need reports drafted quickly to geneticists assessing disease susceptibility.

Future Enhancements: Despite these capabilities, several areas still need improvement and careful consideration before full clinical deployment. These include validating performance in real-world settings and ensuring that the models generalize across different patient demographics and conditions.

Clinical Integration and Safety Evaluations: Before these models can be integrated into clinical workflows, they need extensive testing and validation to uncover potential safety issues and to ensure that their recommendations are reliable and actually improve patient care.

Conclusion

Med-Gemini marks an important step forward in applying AI to medicine. By processing and interpreting complex multimodal medical data, these models point toward a future in which AI meaningfully improves clinical decision-making rather than merely assisting with it. As development continues, the focus will remain on refining these models to meet the stringent requirements of medical use, with the aim of AI and healthcare professionals working together to improve patient outcomes.