Advancements and Implications of Med-Gemini: A Multimodal Medical Model Family Built on Gemini

Introduction to Med-Gemini

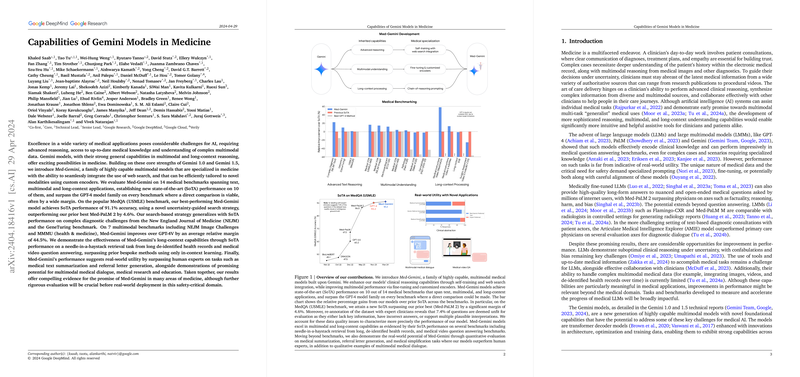

The development of Med-Gemini marks an important step forward in AI-assisted medical reasoning and diagnosis. Built on the Gemini model architecture, it adds self-training, web search integrated at inference time, and extensive multimodal fine-tuning to tailor performance to medical applications. Med-Gemini achieves state-of-the-art results on many of the benchmarks it was evaluated on. These benchmarks span clinical reasoning, application of medical knowledge, and handling of multimodal medical data.
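Self-training, broadly, means having a model generate its own worked solutions and reusing the good ones as additional fine-tuning data. The following is a minimal sketch of one common filtering step. It assumes each generated trace ends in a final multiple-choice answer that can be checked against a reference label. The `Candidate` record and `filter_self_training_data` function are hypothetical names for illustration. The rule shown keeps traces with correct final answers and caps how many are kept per question. This is a generic self-training heuristic, not necessarily Med-Gemini's exact procedure.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One model-generated reasoning trace for a question (hypothetical schema)."""
    question_id: str
    rationale: str
    predicted_answer: str


def filter_self_training_data(candidates, reference_answers, max_per_question=2):
    """Keep only traces whose final answer matches the reference label.

    Assumption: a correct final answer is used as a proxy for a usable
    rationale, as in generic self-training setups; this is not claimed to be
    Med-Gemini's exact filtering rule.
    """
    kept = []
    per_question = {}
    for cand in candidates:
        gold = reference_answers.get(cand.question_id)
        if gold is None or cand.predicted_answer != gold:
            continue  # unlabeled question or wrong answer: discard the trace
        count = per_question.get(cand.question_id, 0)
        if count >= max_per_question:
            continue  # cap kept traces per question so easy items do not dominate
        per_question[cand.question_id] = count + 1
        kept.append(cand)
    return kept
```

Capping examples per question is a design choice. It keeps easy questions, which tend to yield many correct traces, from dominating the fine-tuning mix.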

Core Enhancements and Benchmark Performance

Med-Gemini's gains come from enhancements targeted at medical data. These fall into three areas: clinical reasoning, multimodal understanding, and long-context processing.

- Clinical Reasoning Enhancement:

  - Using an uncertainty-guided web search strategy, Med-Gemini reached a new state-of-the-art accuracy of 91.1% on the MedQA (USMLE) benchmark. This surpasses previously leading models, including Med-PaLM 2 and GPT-4-based systems.

  - Clinical experts carefully re-annotated the MedQA dataset and exposed data quality issues in a subset of its questions. This suggests that future benchmarks need refinement to better reflect real-world clinical complexity.

- Multimodal Performance Tuning:

  - Through targeted fine-tuning and the introduction of specialized encoders, Med-Gemini achieved state-of-the-art results on several multimodal benchmarks, including Path-VQA and ECG-QA.

  - Qualitative demonstrations of multimodal medical dialogue showed promising results, particularly in conversations that require diagnostic reasoning over both images and text.

- Long-Context Capabilities:

  - Improved long-context capabilities let the model work through extensive electronic health records (EHRs) and lengthy instructional medical videos.

  - This was demonstrated on tasks such as a "needle-in-a-haystack" evaluation, which requires locating a specific piece of medical information buried within a long patient record.
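The uncertainty-guided search strategy behind the MedQA result can be sketched roughly as follows. As we understand the Med-Gemini report, the model samples several reasoning paths and measures how much their final answers disagree, for example via the entropy of the answer distribution. It issues web searches only when that uncertainty is high. In this sketch, `sample_answers` and `search` are hypothetical callables standing in for the model and a search backend. The entropy threshold, sample count, and round limit are illustrative values, not the published settings.

```python
import math
from collections import Counter


def answer_entropy(answers):
    """Shannon entropy (in nats) of the empirical distribution of sampled answers."""
    counts = Counter(answers)
    total = len(answers)
    return -sum((c / total) * math.log(c / total) for c in counts.values())


def uncertainty_guided_answer(question, sample_answers, search, num_samples=5,
                              entropy_threshold=0.6, max_rounds=2):
    """Answer a question, invoking search only when sampled answers disagree.

    Hypothetical interfaces (assumptions, not a real API):
      sample_answers(question, context, n) -> list of n final answers
      search(question, candidate_answers)  -> list of retrieved text snippets
    With 5 samples, a 4-vs-1 split has entropy ~0.50 nats (accepted at the
    default threshold) and a 3-vs-2 split ~0.67 nats (triggers search).
    """
    context = []
    answers = sample_answers(question, context, num_samples)
    for _ in range(max_rounds):
        if answer_entropy(answers) <= entropy_threshold:
            break  # samples largely agree: accept without further retrieval
        # High disagreement: retrieve evidence targeting the conflicting answers
        context.extend(search(question, sorted(set(answers))))
        answers = sample_answers(question, context, num_samples)
    # Majority vote over the final set of samples
    return Counter(answers).most_common(1)[0][0]
```

Gating retrieval on disagreement keeps confident questions on a cheap path. Search calls are spent only where the sampled answers conflict.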
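A needle-in-a-haystack evaluation, like the long-context test described above, plants a known fact at a chosen depth inside a long distractor context. It then checks whether the system retrieves that fact. The sketch below builds such test cases and scores a retriever across insertion depths. Several pieces are illustrative assumptions, not the Med-Gemini evaluation protocol: the note format, the `keyword_retriever` baseline (a trivial string match standing in for a long-context model), and the `truncating_retriever` wrapper that simulates a limited context window.

```python
def build_haystack(filler_notes, needle, depth):
    """Insert `needle` into a list of notes at a relative depth in [0, 1]."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    position = round(depth * len(filler_notes))
    return filler_notes[:position] + [needle] + filler_notes[position:]


def keyword_retriever(notes, query_terms):
    """Toy stand-in for a model: indices of notes that mention every query term."""
    terms = [t.lower() for t in query_terms]
    return [i for i, note in enumerate(notes) if all(t in note.lower() for t in terms)]


def truncating_retriever(max_notes):
    """Simulate a short context window: only the first `max_notes` notes are visible."""
    def retrieve(notes, query_terms):
        return keyword_retriever(notes[:max_notes], query_terms)
    return retrieve


def evaluate(retriever, filler_notes, needle, query_terms, depths):
    """Map each insertion depth to whether the retriever located the needle."""
    results = {}
    for depth in depths:
        notes = build_haystack(filler_notes, needle, depth)
        needle_index = notes.index(needle)
        results[depth] = needle_index in retriever(notes, query_terms)
    return results
```

Sweeping the insertion depth matters because a system with an effectively short context can succeed when the needle appears early but miss it when it appears late. Here the truncating retriever shows that depth-dependent failure pattern.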

Speculations on Future Developments

Generalization and Application Scalability

The model's consistent adaptability across modalities, together with its ability to handle long contexts, suggests a direction for future work. That work could pursue broader generalist capabilities within specialized domains, for example by integrating real-time data feeds from clinical and biomedical sensors.

Greater Integration of Ethical AI Practices

Despite these advances, rigorous ethical review during model training and deployment remains crucial. This is especially true for addressing data bias, privacy, and equity in AI-assisted medical diagnosis.

Regulatory and Clinical Validation

Future iterations of Med-Gemini-like models would benefit from closer collaboration with regulatory bodies and clinical testing environments. Such collaboration would help ensure that these systems meet the safety and efficacy requirements of healthcare settings.

Conclusion

Med-Gemini sets a new benchmark for integrating deep learning models into medical applications, with broad capabilities across text, images, and long-form data. At the same time, its results underscore several needs before such models can be routinely used in medical practice: continued improvement in ethical AI practice, stringent validation, and careful examination of real-world clinical utility and safety.