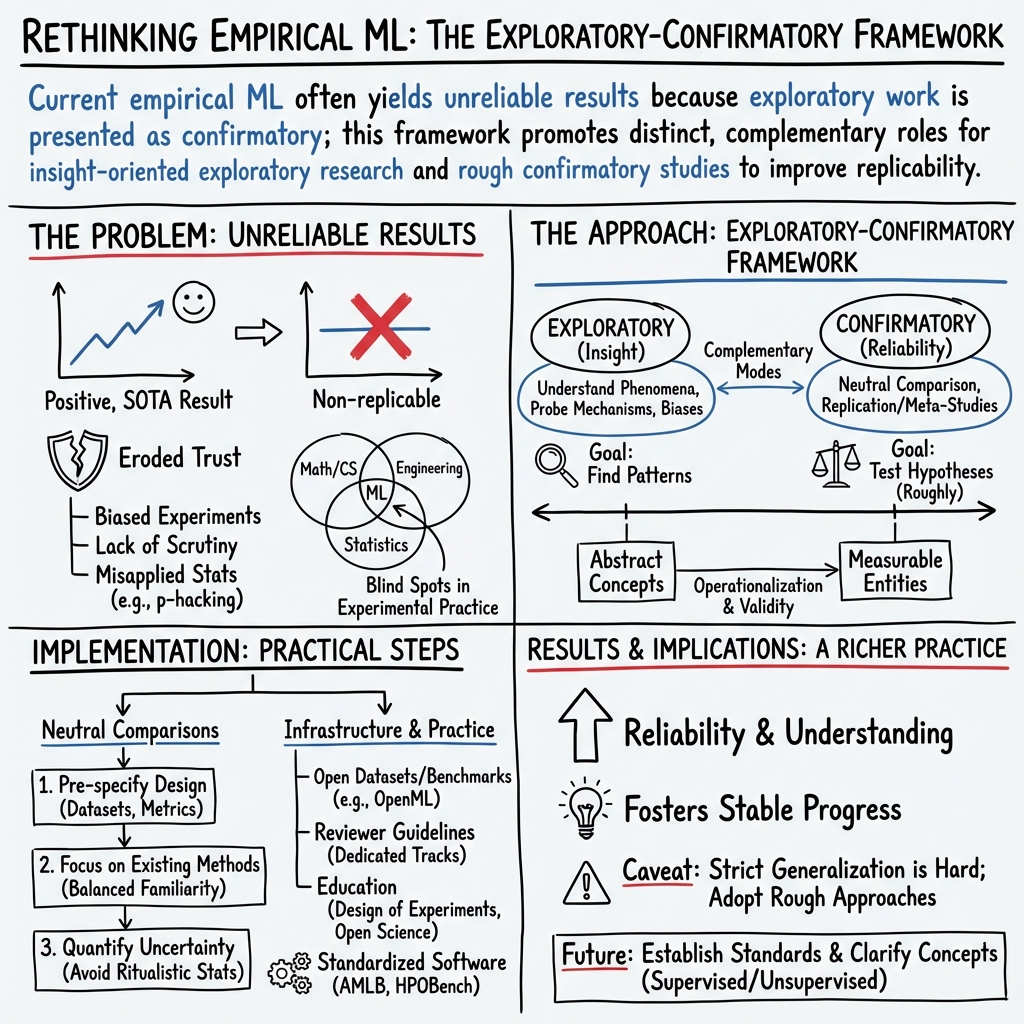

- The paper argues that many ML studies present exploratory research as confirmatory, which contributes to widespread replication failures.

- It highlights the epistemic gap between mathematical rigor and practical application, raising concerns over ML's reliability in critical fields.

- The authors advocate for robust methodological frameworks and transparent research practices to enhance the credibility and innovation of ML research.

Examining Experimentation in Machine Learning: Insights on Epistemic and Methodological Concerns

The Issue with Non-Replicable ML Research

The paper discusses a pressing issue in ML research: many studies cannot be replicated. This is more than a statistical annoyance. It jeopardizes the reliability of scientific findings and undermines confidence in ML applications across fields, including medicine. If results cannot be replicated consistently, both the adoption of ML technologies and trust in them could suffer a significant setback, particularly in sensitive domains where the stakes are high.

The Root of the Problem

Epistemic Gaps: At its core, the problem stems from a deep epistemic divide between the theory-heavy, mathematically rigorous nature of ML and its application in the noisy, unpredictable real world. This gap often produces results that hold up under specific, controlled experimental conditions but falter when applied more broadly.

Misaligned Research Approaches: Moreover, the paper argues that much of what is considered confirmatory research in ML should actually be classified as exploratory. Because of this mislabeling, results are presented as conclusive and generalizable when they should be treated as tentative and hypothesis-generating.
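To make the exploratory versus confirmatory distinction concrete, here is a minimal simulation sketch. It is not taken from the paper; the `evaluate` function, the 20 configurations, and the true accuracy of 0.5 are all assumptions chosen for illustration. Every configuration is equally good by construction, so any apparent winner in the exploratory phase is a product of noise, and a separate confirmatory evaluation on fresh data is what tests whether the advantage is real.

```python
import random


def evaluate(config_id: int, rng: random.Random, n_examples: int = 100) -> float:
    """Hypothetical evaluation on a fresh sample of n_examples.

    config_id is ignored on purpose: in this toy setup no configuration
    is truly better, and every one has a true accuracy of 0.5.
    """
    correct = sum(rng.random() < 0.5 for _ in range(n_examples))
    return correct / n_examples


rng = random.Random(0)

# Exploratory phase: try many configurations and keep the best-looking one.
exploratory_scores = {config: evaluate(config, rng) for config in range(20)}
best_config = max(exploratory_scores, key=exploratory_scores.get)
exploratory_score = exploratory_scores[best_config]

# Confirmatory phase: re-evaluate only the chosen configuration on new data.
confirmatory_score = evaluate(best_config, rng)

print(f"selected config:     {best_config}")
print(f"exploratory score:   {exploratory_score:.2f}")
print(f"confirmatory score:  {confirmatory_score:.2f}")
```

The exploratory score is the maximum over 20 noisy measurements, so it tends to overstate the true accuracy. Reporting it as if it were a confirmatory result is the kind of mislabeling described above.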

Practical and Theoretical Implications

According to the studies the paper cites, many pivotal findings in ML fail to hold up under rigorous replication attempts. This points to a need for:

- Robust Methodological Frameworks: An improvement in the design, execution, and analysis of ML experiments is essential to foster replicability.

- Shifting Research Paradigms: Recognizing when research is exploratory rather than confirmatory would let researchers adjust their expectations and research designs accordingly, promoting a healthier scientific process in ML.
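One common practice that fits the call for more robust experimental analysis is to repeat a run across several random seeds and report the spread instead of a single best number. The sketch below is an illustration under stated assumptions, not a method from the paper: `run_experiment` is a hypothetical stand-in that returns a simulated accuracy, and the interval uses a rough normal approximation (a t-based interval would be more appropriate for so few runs).

```python
import random
import statistics


def run_experiment(seed: int) -> float:
    """Hypothetical stand-in for a full training run.

    Returns a simulated accuracy: a fixed base value plus seed-dependent noise.
    """
    rng = random.Random(seed)
    return 0.80 + rng.gauss(0.0, 0.02)


def summarize(seeds) -> dict:
    """Run the experiment once per seed and summarize the variability."""
    scores = [run_experiment(seed) for seed in seeds]
    mean = statistics.mean(scores)
    std = statistics.stdev(scores)  # sample standard deviation across seeds
    # Rough 95% interval for the mean (normal approximation, an assumption).
    half_width = 1.96 * std / len(scores) ** 0.5
    return {
        "scores": scores,
        "mean": mean,
        "std": std,
        "ci": (mean - half_width, mean + half_width),
        "best": max(scores),
    }


summary = summarize(range(10))
print(f"mean accuracy: {summary['mean']:.3f} (std {summary['std']:.3f})")
print(f"approx. 95% interval: {summary['ci'][0]:.3f} to {summary['ci'][1]:.3f}")
print(f"best single seed: {summary['best']:.3f}")
```

Reporting the mean and spread alongside the best single seed shows how much of a headline number may be due to seed choice alone, which is the information a replication attempt needs.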

Future Directions and Speculations

The paper is a call to action for more meticulous and thoughtful empirical research in ML. By firmly acknowledging the exploratory nature of much ML research, the community can better align its methods and expectations, improving both the reliability of results and public trust in ML applications.

Looking ahead, the paper suggests that an increase in transparency about the limits of generalizability and replicability in ML studies could foster more realistic expectations and constructive skepticism. It advocates for methodological pluralism, where different approaches to empirical research are recognized and valued for the diverse insights they offer.

Conclusion: Towards a More Reflective Machine Learning Practice

In essence, this paper exposes methodological and epistemological cracks in the foundation of machine learning research, suggesting that much current practice presents itself as confirmatory science when it is inherently exploratory. Addressing this mismatch might not only make research findings more robust but also open the way to new approaches to conducting ML research. If machine learning can embrace its identity as a maturing, inherently empirical science, it stands to gain in credibility, applicability, and innovative capacity.