Evaluating LLMs with a Panel of Smaller Models: A Cost-Effective and Less Biased Approach

Introduction to PoLL

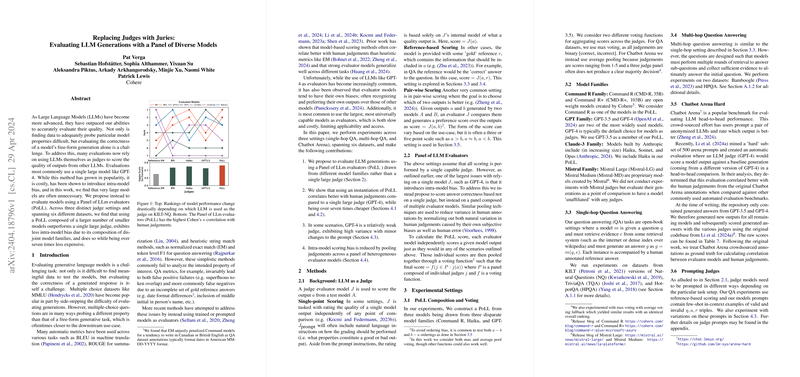

Recent research introduces the Panel of LLM evaluators (PoLL), which uses a panel of smaller LLMs to evaluate outputs instead of relying on a single large model such as GPT-4. The approach aims to cut the cost of running a large judge model and to reduce intra-model bias, the tendency of a judge to favor outputs from its own model family.

Methodological Innovations

The paper builds its panel from LLMs drawn from three different model families: Command R, GPT-3.5, and Haiku. Mixing families is meant to draw on varied strengths and reduce single-model bias. PoLL aggregates the individual judges' scores with either max voting or average pooling, depending on the evaluation task.
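The two aggregation modes can be sketched in a few lines of Python. This is a minimal illustration, not the paper's implementation: the function names `max_vote` and `average_pool` are hypothetical, and max voting is assumed here to mean taking the most common label among the judges.

```python
from collections import Counter
from statistics import mean


def max_vote(labels):
    """Return the label chosen by the most judges (assumed reading of 'max voting').

    Ties are broken in favor of the label that appears first in `labels`,
    which is how Counter.most_common orders equal counts.
    """
    if not labels:
        raise ValueError("at least one judge label is required")
    return Counter(labels).most_common(1)[0][0]


def average_pool(scores):
    """Return the mean of the judges' numeric scores."""
    if not scores:
        raise ValueError("at least one judge score is required")
    return mean(scores)


# Hypothetical verdicts from a three-judge panel on one QA answer.
qa_verdict = max_vote(["correct", "incorrect", "correct"])

# Hypothetical 1-5 ratings from the same panel on one dialogue response.
dialogue_score = average_pool([4, 5, 3])
```

With an odd number of judges and binary labels, the vote can never tie, which is one practical reason to prefer a three-member panel for correct/incorrect tasks.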

Experimental Setup

The experiments span several datasets and tasks:

- Single-hop and Multi-hop QA: Uses datasets such as Natural Questions (single-hop) and Bamboogle (multi-hop).

- Chatbot Arena: A comparison scenario where models are evaluated on dialogue tasks.

In each of these settings, outputs from test models are evaluated against 'gold standard' references or through pairwise comparisons with other model outputs.

Key Findings

Correlation with Human Judgments

The paper quantifies evaluator performance using Cohen's κ (kappa), a chance-corrected measure of agreement between two raters. The analysis shows that PoLL agrees with human judgments more closely than a single large judge on most of the datasets tested, which supports its reliability as an evaluation framework.
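For readers unfamiliar with the metric, Cohen's kappa compares the observed agreement between two raters with the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes it for a judge's labels against human labels. The function name `cohens_kappa` and the sample labels are illustrative assumptions, not data from the paper.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)

    # p_o: fraction of items on which the two raters agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # p_e: agreement expected if each rater labeled at random
    # according to their own label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        # Both raters used one identical label throughout; kappa is
        # undefined (0/0), so return 1.0 by convention in this sketch.
        return 1.0
    return (observed - expected) / (1 - expected)


# Made-up example: 1 = answer judged correct, 0 = judged incorrect.
human_labels = [1, 1, 0, 0]
judge_labels = [1, 1, 0, 1]
kappa = cohens_kappa(human_labels, judge_labels)
```

In this made-up example the raters agree on three of four items (p_o = 0.75) while chance agreement is p_e = 0.5, so kappa is 0.5. A value of 1 means perfect agreement and 0 means agreement no better than chance.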

Cost and Efficiency

The paper's cost analysis shows that PoLL is significantly cheaper to run: more than seven times less expensive than a high-capacity model like GPT-4. The savings do not come at the expense of evaluation quality, and they make model testing more accessible and scalable.

Bias and Variance

Comparative analysis of intra-model scoring bias indicates that PoLL exhibits less bias across different datasets and tasks. By pooling judgments from a diverse set of models, PoLL normalizes out idiosyncratic model biases, leading to more objective evaluations.

Implications and Future Directions

Theoretical Implications

This work contributes to our understanding of LLM evaluation by highlighting the limitations of using single, large models as evaluators. By demonstrating that smaller models can collectively achieve similar or superior performance, it challenges existing paradigms and suggests a shift towards more democratic and distributed forms of model evaluation.

Practical Implications

The introduction of PoLL suggests a scalable and cost-effective model evaluation strategy that could be particularly beneficial for organizations and researchers without access to top-tier computational resources. This democratization of the evaluation process could accelerate innovation and inclusivity in AI research.

Speculations on Future AI Developments

While PoLL has shown promising results, its adaptability to other AI domains like mathematical reasoning or highly specialized tasks remains untested. Future research could explore optimal configurations of evaluators within PoLL for various domains, potentially leading to tailored evaluation panels for different sectors of AI research.

Conclusion

Using a Panel of LLM evaluators to assess LLMs marks a significant shift towards more efficient, less biased, and more cost-effective model assessment. Crucially, the approach makes high-quality evaluation accessible to a broader range of developers and researchers in the AI community.