Blended RAG: Improving RAG (Retriever-Augmented Generation) Accuracy with Semantic Search and Hybrid Query-Based Retrievers

The paper "Blended RAG: Improving RAG (Retriever-Augmented Generation) Accuracy with Semantic Search and Hybrid Query-Based Retrievers" by Kunal Sawarkar, Abhilasha Mangal, and Shivam Raj Solanki addresses the limitations of conventional Retrieval-Augmented Generation (RAG) systems as the corpus of documents scales. The authors propose the 'Blended RAG' method, which leverages semantic search techniques and hybrid query strategies to enhance the accuracy of document retrieval within RAG systems.

Introduction

RAG combines generative models with retrievers that search external knowledge bases and pass contextually relevant information to the generation component. The accuracy of a RAG system therefore depends largely on the quality of its retriever. Traditional methods, which rely heavily on keyword and similarity-based searches, often fall short on large and complex datasets. This paper proposes a more nuanced approach that blends dense vector indexes and sparse encoder indexes with hybrid query strategies.

Related Work

Historically, the BM25 algorithm has been central to information retrieval (IR), combining Term Frequency (TF), Inverse Document Frequency (IDF), and document length to compute relevance scores. Dense vector models queried with k-nearest-neighbor (KNN) search, however, have proven better at capturing deep semantic relationships. Sparse encoder-based models offer an efficient alternative: they represent text as high-dimensional sparse vectors and, according to the authors, deliver better precision.
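To make the roles of TF, IDF, and document length concrete, here is a minimal sketch of BM25 scoring in plain Python. It is not the paper's implementation: the function name `bm25_scores`, the defaults `k1=1.2` and `b=0.75`, and the smoothed IDF variant are assumptions chosen for illustration, and the input is assumed to be a non-empty list of pre-tokenized documents.

```python
import math
from collections import Counter

def bm25_scores(query_terms, docs, k1=1.2, b=0.75):
    """Score each tokenized document against the query terms (illustrative sketch)."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for d in docs:
        tf = Counter(d)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # IDF: rarer terms contribute more (smoothed so it stays positive).
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # TF saturates with k1; b controls document-length normalization.
            length_norm = 1 - b + b * len(d) / avg_len
            score += idf * tf[term] * (k1 + 1) / (tf[term] + k1 * length_norm)
        scores.append(score)
    return scores

docs = [
    ["hybrid", "search", "search"],
    ["dense", "vector"],
    ["sparse", "encoder", "search"],
]
scores = bm25_scores(["search"], docs)
```

In this toy corpus the first document mentions the query term twice and the second not at all, so the scores rank the documents in that order of relevance.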

Limitations of Current RAG Systems

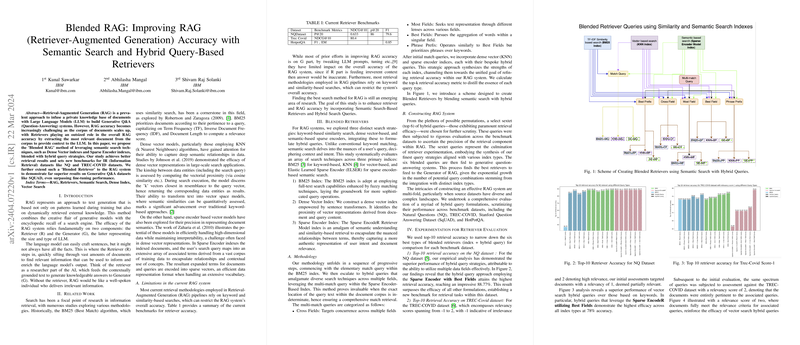

Current RAG systems struggle with accuracy due to over-reliance on keyword and similarity-based searches. As shown in Table 1 of the paper, metrics such as NDCG@10 and F1 illustrate the limits of current retriever accuracy. Efforts to improve RAG systems typically focus on fine-tuning the generator, which does not address the critical issue of retrieving relevant documents in the first place.
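For readers unfamiliar with NDCG@10, the following sketch shows one common way to compute it from graded relevance labels. The function names are hypothetical, and it makes a simplifying assumption: the ideal ranking is derived only from the labels of the retrieved list, whereas a full evaluation would use every judged document for the query.

```python
import math

def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the first k results."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))

def ndcg_at_k(relevances, k=10):
    """DCG normalized by the DCG of the best possible ordering of the same labels."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

# Relevance labels of the returned documents, in ranked order (made-up values).
perfect = ndcg_at_k([3, 2, 1])
swapped = ndcg_at_k([1, 3])
```

A ranking that already lists the most relevant documents first scores 1.0; pushing a relevant document lower reduces the score.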

Methodology: Blended Retrievers

The authors explored three distinct search strategies: BM25 for keyword-based, KNN for dense vector-based, and Elastic Learned Sparse Encoder (ELSER) for sparse encoder-based semantic search. Figure 1 in the paper outlines a systematic evaluation approach across these indices with hybrid queries categorized into cross-fields, most fields, best fields, and phrase prefix types.
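The four hybrid query categories correspond to values of the `type` parameter in Elasticsearch's `multi_match` query. The sketch below only builds a request body as a Python dictionary; the helper name `build_multi_match` and the field names `title` and `text` are hypothetical, and the paper's exact queries against the KNN and ELSER indexes are not reproduced here.

```python
HYBRID_QUERY_TYPES = ("cross_fields", "most_fields", "best_fields", "phrase_prefix")

def build_multi_match(question, fields, match_type="best_fields"):
    """Build an Elasticsearch multi_match request body for one hybrid query type."""
    if match_type not in HYBRID_QUERY_TYPES:
        raise ValueError(f"unsupported multi_match type: {match_type}")
    return {
        "query": {
            "multi_match": {
                "query": question,
                "type": match_type,
                # Field names are placeholders; use the fields of your own index.
                "fields": list(fields),
            }
        }
    }

# One request body per query type, all over the same assumed fields.
bodies = {
    t: build_multi_match("who wrote hamlet", ["title", "text"], t)
    for t in HYBRID_QUERY_TYPES
}
```

Generating one body per type in this way makes it straightforward to run the same question set against each index and compare retrieval accuracy across query types.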

Experimentation and Results

Retriever Evaluation

The evaluation focused on top-k retrieval accuracy across datasets such as NQ, TREC-COVID, SQuAD, and HotPotQA. Notably, the Sparse Encoder with Best Fields hybrid query consistently outperformed other methods. On the NQ dataset, for instance, it achieved 88.77% retrieval accuracy. On the TREC-COVID dataset, it reached 98% top-10 retrieval accuracy when documents were highly relevant.
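Top-k retrieval accuracy can be sketched as follows. This assumes the usual definition, namely the fraction of queries for which at least one relevant document appears among the first k results; the paper's exact definition may differ, and the function name and toy document IDs are made up for illustration.

```python
def top_k_accuracy(ranked_ids_per_query, relevant_ids_per_query, k=10):
    """Fraction of queries with at least one relevant document in the top k."""
    hits = 0
    for ranked, relevant in zip(ranked_ids_per_query, relevant_ids_per_query):
        if any(doc_id in relevant for doc_id in ranked[:k]):
            hits += 1
    return hits / len(ranked_ids_per_query)

# Two toy queries: the first finds its relevant document at rank 2,
# the second never retrieves its relevant document.
ranked = [["d7", "d3", "d9"], ["d1", "d2", "d4"]]
relevant = [{"d3"}, {"d8"}]
accuracy_at_3 = top_k_accuracy(ranked, relevant, k=3)
accuracy_at_1 = top_k_accuracy(ranked, relevant, k=1)
```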

RAG System Evaluation

Using FLAN-T5-XXL as the generator, the Blended RAG system surpassed previous benchmarks without dataset-specific fine-tuning. On the SQuAD dataset, it achieved an F1 score of 68.4% and an Exact Match (EM) score of 57.63%. On the NQ dataset, it reached an EM score of 42.63, which the authors report as a significant margin over other models.
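The EM and F1 figures above are answer-level metrics. The sketch below follows the normalization conventionally used for SQuAD-style evaluation (lowercasing, stripping punctuation and English articles), which is assumed here rather than confirmed by the paper; the function names are illustrative.

```python
import re
import string
from collections import Counter

def normalize(text):
    """Lowercase, drop punctuation and articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def exact_match(prediction, truth):
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(truth))

def f1_score(prediction, truth):
    """Token-level F1 between the normalized prediction and reference answer."""
    pred_tokens = normalize(prediction).split()
    truth_tokens = normalize(truth).split()
    overlap = sum((Counter(pred_tokens) & Counter(truth_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(truth_tokens)
    return 2 * precision * recall / (precision + recall)

em = exact_match("The Eiffel Tower.", "eiffel tower")
f1 = f1_score("Eiffel Tower in Paris", "Eiffel Tower")
```

EM rewards only answers that match the reference after normalization, while F1 gives partial credit for overlapping tokens, which is why a system's F1 is typically higher than its EM on the same dataset.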

Implications and Future Work

The main implication of this research is the demonstrated efficacy of integrating advanced retrievers with hybrid query formulations over simply scaling LLMs. This approach suggests potential improvements in various applications, from enterprise search systems to conversational AI.

Future research could explore more intricate hybrid queries and evaluate the Blended RAG system on additional datasets. Developing metrics that align more closely with human judgment than NDCG@10 and F1 also remains an open area.

Conclusion

The Blended RAG approach improves both retriever and end-to-end RAG accuracy by combining semantic search with hybrid queries. The authors report new best results on the IR benchmarks they evaluated, underscoring the importance of sophisticated retrievers in generative Q&A systems.

The paper lays a strong foundation for further explorations into synergizing dense and sparse indexing approaches, setting the stage for improvements in retrieval-augmented generative architectures that can extend across diverse informational contexts.