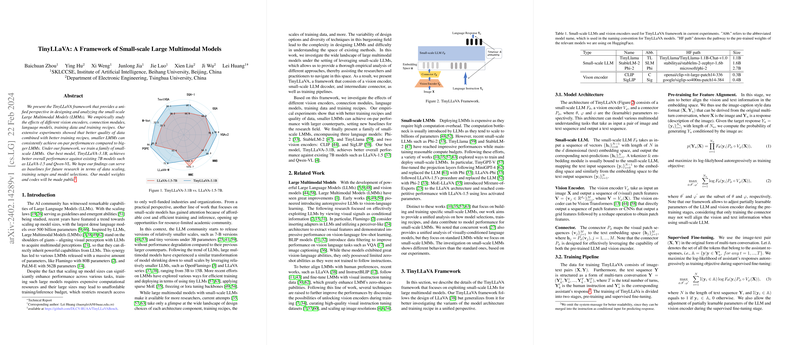

An Overview of TinyLLaVA: A Framework of Small-scale Large Multimodal Models

The paper introduces TinyLLaVA, a framework for designing and analyzing small-scale large multimodal models (LMMs). The study evaluates how the individual components of a multimodal architecture (the vision encoder, the connector, the LLM, the training data, and the training recipe) affect performance. Its central claim is that smaller LMMs can perform comparably to larger counterparts when trained on high-quality data with an appropriate training recipe.

Model Architecture and Experimental Setup

TinyLLaVA has three main components: a small-scale LLM, a vision encoder, and a connector that maps visual features into the LLM's input space. The researchers selected three representative small-scale LLMs, TinyLlama, StableLM-2, and Phi-2, ranging from 1.1B to 2.7B parameters. CLIP-Large and SigLIP serve as the vision encoders, and a two-layer MLP with GELU activation serves as the connector. Crossing the three LLMs with the two vision encoders yields six architecture variants, which lets the study isolate how each component choice affects performance.
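To make the data flow concrete, here is a minimal, dependency-free sketch of a two-layer MLP connector with GELU. It assumes a LLaVA-1.5-style layout in which the first linear layer projects vision features to the LLM's hidden width and the second keeps that width. The class `MLPConnector`, its helper functions, and the toy dimensions are hypothetical illustrations rather than TinyLLaVA's code, and the weights are random instead of trained.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def gelu(x: float) -> float:
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def linear(x: Vector, weight: Matrix, bias: Vector) -> Vector:
    # weight is [out_dim][in_dim]; returns weight @ x + bias.
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def init_linear(in_dim: int, out_dim: int, rng: random.Random) -> Tuple[Matrix, Vector]:
    # Random placeholder weights (hypothetical); a real model loads trained ones.
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


class MLPConnector:
    """Hypothetical connector: Linear(d_vision -> d_llm) -> GELU -> Linear(d_llm -> d_llm)."""

    def __init__(self, d_vision: int, d_llm: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.fc1 = init_linear(d_vision, d_llm, rng)
        self.fc2 = init_linear(d_llm, d_llm, rng)

    def __call__(self, patch_features: List[Vector]) -> List[Vector]:
        # Each patch feature (one per visual token) is projected independently,
        # so N vision tokens of width d_vision become N embeddings of width d_llm.
        projected = []
        for v in patch_features:
            hidden = [gelu(z) for z in linear(v, *self.fc1)]
            projected.append(linear(hidden, *self.fc2))
        return projected


# Toy sizes: 3 visual tokens of width 8 mapped to a hypothetical LLM width of 4.
connector = MLPConnector(d_vision=8, d_llm=4)
visual_tokens = connector([[0.1] * 8, [0.2] * 8, [0.0] * 8])
print(len(visual_tokens), len(visual_tokens[0]))  # 3 4
```

In a full model, the projected visual tokens are placed alongside the text token embeddings before entering the LLM; real encoder and LLM widths are in the thousands, and the toy sizes here only keep the example fast.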

Experiments were run on two training datasets, LLaVA-1.5 and ShareGPT4V, the latter being the larger and more diverse of the two. The two training recipes, termed base and share, differ in which modules are trainable during pre-training and fine-tuning; the share recipe unfreezes more of the model, including part of the vision encoder.
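The difference between the two recipes comes down to which modules are frozen at each stage. The sketch below encodes our reading of the paper as plain data: the base recipe trains only the connector during pre-training, while the share recipe also updates the LLM and all but the first 12 vision-encoder layers; both then fine-tune the connector and LLM with the vision encoder frozen. Treat these stage settings as assumptions to check against the paper and its repository. The names `StagePlan`, `RECIPES`, `trainable_vision_layers`, and `summarize`, and the 24-layer example depth, are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class StagePlan:
    connector: bool  # is the MLP connector updated in this stage?
    llm: bool        # is the language model updated in this stage?
    # None: the whole vision encoder is frozen.
    # k: the first k encoder layers are frozen and the remaining layers are trained.
    vision_frozen_layers: Optional[int]


# Assumed stage settings (our reading of the paper, not an official config).
RECIPES: Dict[str, Dict[str, StagePlan]] = {
    "base": {
        "pretrain": StagePlan(connector=True, llm=False, vision_frozen_layers=None),
        "finetune": StagePlan(connector=True, llm=True, vision_frozen_layers=None),
    },
    "share": {
        "pretrain": StagePlan(connector=True, llm=True, vision_frozen_layers=12),
        "finetune": StagePlan(connector=True, llm=True, vision_frozen_layers=None),
    },
}


def trainable_vision_layers(plan: StagePlan, num_layers: int) -> List[int]:
    # Indices of vision-encoder layers that receive gradient updates.
    if plan.vision_frozen_layers is None:
        return []
    return list(range(plan.vision_frozen_layers, num_layers))


def summarize(recipe: str, num_vision_layers: int = 24) -> List[str]:
    # One line per stage listing the trainable modules.
    lines = []
    for stage, plan in RECIPES[recipe].items():
        parts = []
        if plan.connector:
            parts.append("connector")
        if plan.llm:
            parts.append("llm")
        layers = trainable_vision_layers(plan, num_vision_layers)
        if layers:
            parts.append(f"vision[{layers[0]}:{layers[-1] + 1}]")
        lines.append(f"{recipe}/{stage}: " + ", ".join(parts))
    return lines


for line in summarize("base") + summarize("share"):
    print(line)
```

Running it prints one line per stage, e.g. `share/pretrain: connector, llm, vision[12:24]`, which makes the extra trainable parameters of the share recipe easy to see at a glance.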

Key Findings

- Impact of Model Architecture: Larger LLMs generally improved overall performance. Phi-2-based models, the largest of the three, consistently outperformed the others, especially on benchmarks that demand richer comprehension such as ScienceQA-IMG. Replacing CLIP with SigLIP as the vision encoder also yielded substantial gains, which are attributed to SigLIP's higher input resolution and larger number of visual tokens.

- Role of Training Data and Recipes: Training on ShareGPT4V, the larger and more diverse dataset, generally led to better performance. Making part of the vision encoder trainable improved results for models with smaller LLMs but introduced more hallucinations for models with larger LLMs. This suggests that unfreezing more parameters is not uniformly beneficial and must be weighed against the faithfulness of the model's outputs.

- Comparison Against State-of-the-Art Models: TinyLLaVA-3.1B achieved strong benchmark results, outperforming existing 7B models such as LLaVA-1.5 and Qwen-VL on several benchmarks. This result underscores the framework's potential for building efficient yet capable small-scale models.
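The SigLIP finding above is partly simple arithmetic. Assuming the commonly used checkpoints, CLIP ViT-L/14 at 336×336 pixels and SigLIP so400m with 14-pixel patches at 384×384 (the exact variants are our assumption, not stated in this summary), the number of patch tokens each encoder hands to the connector works out as below. The helper name `num_visual_tokens` is hypothetical, and the count excludes any class token.

```python
def num_visual_tokens(image_size: int, patch_size: int) -> int:
    # A ViT patch embedding is a convolution with kernel = stride = patch_size and
    # no padding, so each side yields floor(image_size / patch_size) patches.
    per_side = image_size // patch_size
    return per_side * per_side


clip_tokens = num_visual_tokens(336, 14)    # 24 x 24 = 576
siglip_tokens = num_visual_tokens(384, 14)  # 384 / 14 = 27.4..., floored to 27; 27 x 27 = 729
extra = siglip_tokens - clip_tokens
print(clip_tokens, siglip_tokens, extra, f"{extra / clip_tokens:.0%}")  # 576 729 153 27%
```

Under these assumptions SigLIP supplies roughly a quarter more visual tokens per image; since every visual token lengthens the LLM's input sequence, the gain also comes with more compute per image.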

Implications and Future Directions

TinyLLaVA's experimental results offer practical guidance for designing resource-efficient LMMs and make multimodal research more accessible to groups with limited computational resources. The findings suggest that prioritizing data quality and choosing carefully which parameters to fine-tune can mitigate the parameter disadvantage of smaller LMMs.

Future work could explore training strategies that adapt resource allocation to the model architecture and the properties of the data. Another direction is improved connector designs that strengthen the interaction between the vision and language modalities, for example through more sophisticated architectures or learning techniques.

In conclusion, the TinyLLaVA framework offers a solid baseline for future work on scaling down multimodal models while retaining competitive performance.