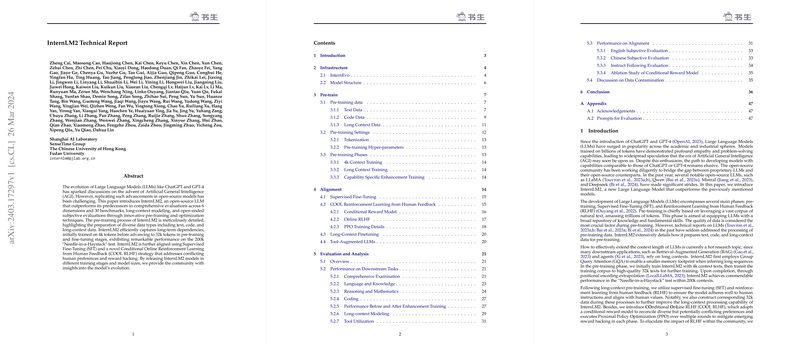

InternLM2: A Comprehensive Overview on Pre-Training and Alignment Strategies

Pre-training Process and Data Preparation

InternLM2 is an open-source large language model (LLM) pre-trained on a diverse mixture of text, code, and long-context data. The pre-training corpus comprises trillions of tokens drawn from sources including web pages, academic papers, and other publicly available text. Particular attention is paid to data quality: the goal is a corpus that is clean, relevant, and broad in the knowledge it covers.

Text Data

Text data is collected from multiple sources and passed through a processing pipeline that includes standardization, deduplication, and safety filtering. The aim is to keep the corpus diverse while raising its quality and safety.
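The three named steps can be illustrated with a minimal sketch. It assumes Unicode NFKC normalization plus whitespace collapsing as "standardization", exact SHA-256 hashing as "deduplication", and a tiny hypothetical word blocklist as "safety filtering". Large-scale pipelines commonly use near-duplicate detection (for example MinHash) and learned safety classifiers instead; this is not InternLM2's actual pipeline, and `BLOCKLIST`, `standardize`, `is_safe`, and `clean_corpus` are illustrative names.

```python
import hashlib
import re
import unicodedata

# Hypothetical blocklist for illustration only; real safety filtering
# usually relies on trained classifiers rather than a fixed word list.
BLOCKLIST = {"badword"}


def standardize(text: str) -> str:
    """Normalize Unicode to NFKC and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


def is_safe(text: str) -> bool:
    """Reject documents containing any blocklisted word."""
    words = set(re.findall(r"\w+", text.lower()))
    return words.isdisjoint(BLOCKLIST)


def clean_corpus(docs):
    """Standardize, exact-deduplicate, then safety-filter a list of documents."""
    seen = set()
    kept = []
    for doc in docs:
        norm = standardize(doc)
        if not norm:  # drop documents that are empty after normalization
            continue
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:  # exact duplicate of an earlier document
            continue
        seen.add(digest)
        if is_safe(norm):
            kept.append(norm)
    return kept


docs = ["Hello   world!", "Hello world!", "This has a badword.", "  ", "Another doc"]
print(clean_corpus(docs))  # ['Hello world!', 'Another doc']
```

Normalizing before hashing matters: the first two documents differ only in whitespace, so they collide only after standardization.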

Code Data

Because programming ability is increasingly important for LLMs, InternLM2's pre-training data includes a substantial amount of code. This corpus is curated to span a wide range of programming languages and domains in order to strengthen the model's coding capabilities.

Long Context Data

InternLM2 incorporates long-context data during pre-training, which enables the model to handle long inputs effectively and broadens the range of applications it can serve. Preparing this data involves additional filtering and quality checks to ensure that the long documents are relevant and useful for training.
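As a rough illustration of what "additional filtering and quality checks" for long documents might look like, the sketch below keeps a document only if it is long enough and not dominated by repeated lines. The thresholds, the whitespace "tokenizer", and the repeated-line heuristic are all hypothetical choices for illustration, not InternLM2's published criteria.

```python
# Hypothetical thresholds chosen for illustration; not taken from InternLM2.
MIN_TOKENS = 32_000
MIN_UNIQUE_LINE_RATIO = 0.5


def approx_token_count(text: str) -> int:
    """Crude whitespace token count standing in for a real tokenizer."""
    return len(text.split())


def unique_line_ratio(text: str) -> float:
    """Fraction of non-empty lines that are distinct; low values suggest
    boilerplate or templated repetition rather than genuine long-form text."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    if not lines:
        return 0.0
    return len(set(lines)) / len(lines)


def keep_for_long_context(
    text: str,
    min_tokens: int = MIN_TOKENS,
    min_ratio: float = MIN_UNIQUE_LINE_RATIO,
) -> bool:
    """Keep a document only if it is long and not highly repetitive."""
    return approx_token_count(text) >= min_tokens and unique_line_ratio(text) >= min_ratio


# Small thresholds so the example runs on tiny inputs.
varied = "\n".join(f"line {i} with some words" for i in range(10))
repetitive = "\n".join("same line here" for _ in range(10))
print(keep_for_long_context(varied, min_tokens=20))      # True
print(keep_for_long_context(repetitive, min_tokens=20))  # False
```

A length check alone would admit long but degenerate documents (for example, a page template repeated thousands of times), which is why the sketch pairs it with a simple repetition check.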

Innovative Pre-training and Optimization Techniques

InternLM2 pre-training proceeds in three distinct phases, designed in part to help the model capture long-range dependencies. The architecture adopts Grouped-Query Attention (GQA), in which several query heads share a single key/value head. Sharing key/value heads shrinks the key/value cache that must be kept in memory during inference, which makes long-sequence processing more practical.
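The memory saving from GQA can be worked out directly, because the key/value cache grows linearly with the number of key/value heads. The sketch below assumes a hypothetical configuration (32 layers, 32 query heads, head dimension 128, 32,768 cached tokens, 2-byte fp16 values); these are illustrative numbers, not an official InternLM2 configuration. It also shows how query heads map onto shared key/value heads.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch=1, bytes_per_elem=2):
    """Size of the key/value cache: one K and one V tensor per layer."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """In GQA, each block of consecutive query heads shares one KV head."""
    if n_q_heads % n_kv_heads != 0:
        raise ValueError("n_q_heads must be divisible by n_kv_heads")
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


# Hypothetical configuration for illustration (not an official InternLM2 config).
cfg = dict(n_layers=32, head_dim=128, seq_len=32_768)
mha = kv_cache_bytes(n_kv_heads=32, **cfg)  # multi-head attention: one KV head per query head
gqa = kv_cache_bytes(n_kv_heads=8, **cfg)   # grouped-query: 4 query heads per KV head
print(mha / 2**30, gqa / 2**30)  # 16.0 4.0  (GiB)
print([kv_head_for_query_head(h, 32, 8) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
```

The cache shrinks by the ratio of query heads to key/value heads (here 32 / 8 = 4). Setting the number of key/value heads equal to the number of query heads recovers standard multi-head attention, and setting it to 1 gives multi-query attention; GQA sits between the two.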

Conditional Online RLHF and Alignment Strategies

InternLM2's alignment phase uses a Conditional OnLine Reinforcement Learning from Human Feedback (COOL RLHF) strategy. It combines two components: a conditional reward model, which reconciles conflicting human preferences (for example, helpfulness versus harmlessness) within a single model, and multi-round online RLHF, which mitigates reward hacking behaviors that emerge during training. Because the reward model's priorities are set by the condition supplied with each input, such as a domain-specific system prompt, it can apply different preferences to different tasks while maintaining consistent performance across them.
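One way to picture the conditioning mechanism is as input construction: the same (prompt, response) pair is wrapped in a different system prompt depending on which preference should dominate, and a single reward model scores the result. The sketch below assumes this system-prompt style of conditioning; the condition names, prompt wording, and `[SYSTEM]`/`[USER]`/`[ASSISTANT]` template are hypothetical and do not reproduce InternLM2's actual prompts or chat format. The reward model call itself is omitted.

```python
from dataclasses import dataclass

# Hypothetical condition prompts for illustration; not InternLM2's actual prompts.
CONDITION_PROMPTS = {
    "helpful": "Prefer the response that answers the user most completely.",
    "harmless": "Prefer the response that avoids unsafe or harmful content.",
    "code": "Prefer the response whose code is correct and runs.",
}


@dataclass
class RMInput:
    system: str
    prompt: str
    response: str

    def render(self) -> str:
        # Hypothetical plain-text template; a real model would use its own chat format.
        return f"[SYSTEM]\n{self.system}\n[USER]\n{self.prompt}\n[ASSISTANT]\n{self.response}"


def build_conditional_input(condition: str, prompt: str, response: str) -> str:
    """Prepend the condition-specific system prompt so one reward model can
    score the same (prompt, response) pair under different preferences."""
    if condition not in CONDITION_PROMPTS:
        raise KeyError(f"unknown condition: {condition}")
    return RMInput(CONDITION_PROMPTS[condition], prompt, response).render()


prompt = "Write a function that reverses a string."
response = "def rev(s):\n    return s[::-1]"
a = build_conditional_input("helpful", prompt, response)
b = build_conditional_input("code", prompt, response)
print(a != b)  # True: identical pair, different condition
```

The design choice this illustrates is that preference trade-offs are expressed in the input rather than by training separate reward models, so one model can be reused across domains.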

Comprehensive Evaluation and Analysis

InternLM2 is evaluated on benchmarks covering a wide range of tasks and capabilities, including comprehensive examinations, knowledge, coding, reasoning, mathematics, and long-context modeling. It achieves strong results, and alignment training brings significant further gains, indicating that the alignment procedure brings the model closer to human preferences and increases its usefulness in real-world applications.

InternLM2's results on coding benchmarks, both Python-specific and multi-language, reflect robust coding capabilities. It also performs strongly on long-context modeling tasks, which makes it a versatile model for problems that require understanding extensive context.

Implications and Future Developments

InternLM2's development strategy, which combines diverse pre-training data, its pre-training and optimization techniques, and targeted alignment training, outlines a promising approach to advancing LLM capabilities. The release of pre-training checkpoints gives the community a way to study how LLMs evolve during training. Looking ahead, continued refinement of alignment strategies and expansion of pre-training data could further improve LLMs and broaden their applicability across many domains.