Introduction

Large language models (LLMs) are transforming the landscape of AI, enabling systems to handle tasks ranging from text summarization to complex code completion. Most are built on decoder-only Transformers. They are pre-trained with self-supervision on massive datasets and then aligned with user intentions through steps such as supervised fine-tuning and reward modeling. Open-source models have made substantial progress. Even so, it remains an open question how best to scale them so that they match or exceed closed, proprietary systems.

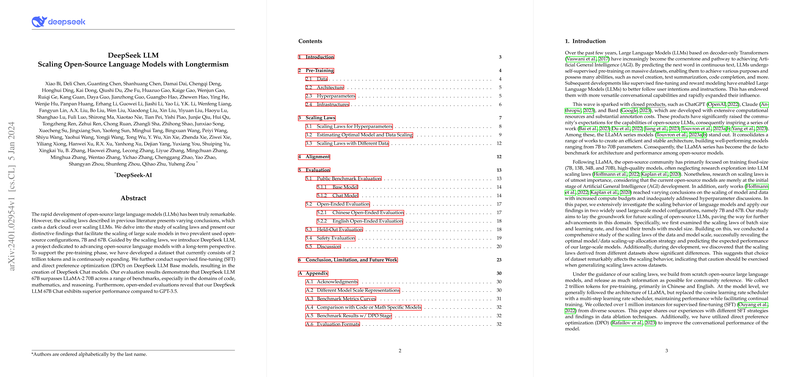

Pre-Training and Architecture Insight

DeepSeek LLM is an open-source project for scaling LLMs carefully, with a long-term view of how such models should develop. The team built a pre-training dataset of 2 trillion tokens, primarily in English and Chinese, curated for diversity and information density. The architecture largely follows existing successful designs such as LLaMA, with a few changes of the team's own. Most notably, the usual cosine learning-rate schedule is replaced by a multi-step schedule, which makes continued training more efficient. The models come in two sizes, 7B and 67B parameters. The training infrastructure overlaps communication with computation to improve hardware utilization.
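To make the multi-step learning-rate schedule concrete, here is a minimal sketch in Python. The function name `multi_step_lr` is hypothetical. The warmup length and the default breakpoints are assumed values for illustration, not a definitive reproduction of the paper's configuration: the rate drops to 31.6% of the peak at 80% of training and to 10% at 90%. The schedule has three phases: a linear warmup, a long constant phase at the peak rate, and then discrete drops.

```python
def multi_step_lr(step: int, total_steps: int, max_lr: float,
                  warmup_steps: int = 2000,  # assumed warmup length
                  milestones: tuple = ((0.8, 0.316), (0.9, 0.1))) -> float:
    """Return the learning rate at `step` for a warmup + multi-step schedule.

    `milestones` holds (fraction_of_training, multiplier) pairs in ascending
    order; the multiplier of the last boundary already passed applies.
    The default pairs are illustrative assumptions.
    """
    if step < warmup_steps:
        # Linear warmup that reaches max_lr on the last warmup step.
        return max_lr * (step + 1) / warmup_steps
    progress = step / total_steps
    scale = 1.0
    for boundary, multiplier in milestones:
        if progress >= boundary:
            scale = multiplier
    return max_lr * scale


# Usage with a hypothetical 100k-step run and a peak rate of 1e-3.
for s in (0, 1_999, 50_000, 85_000, 95_000):
    print(s, multi_step_lr(s, 100_000, 1e-3))
```

The learning rate stays flat at its peak until the first drop. So the checkpoint at the end of that long constant phase does not depend on when the later drops happen. That checkpoint can be reused if training is later continued with a larger token budget, which is the continued-training benefit the schedule is meant to provide. A cosine schedule, by contrast, bakes the total length into every step.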

Scaling Laws and Model Optimization

A key contribution of the paper is its study of scaling laws for LLMs. The researchers fit empirical scaling laws for the optimal batch size and learning rate as functions of the compute budget. This yields hyperparameters that stay near-optimal across budgets. They also refine the scaling strategy itself: model scale is measured by non-embedding FLOPs per token rather than by parameter count, which they argue is a more precise indicator. Finally, they find that data quality shapes how compute should be split. The higher the quality of the dataset, the larger the share of additional compute that should go to model size rather than to more data. Scaling up is therefore not simply a matter of making models bigger. The right allocation of compute depends on the quality of the training data.
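The quantities in this paragraph can be illustrated with a short Python sketch. All function names are hypothetical, and the FLOP accounting is an assumption based on a common Transformer approximation. It assumes 12 · d_model² non-embedding parameters per layer and 6 FLOPs per parameter per token, plus an attention term that grows with sequence length. It is not necessarily the paper's exact definition of non-embedding FLOPs per token (M). Under that accounting, the compute budget is C = M · D for D training tokens. The sketch also fits a power law y = k · C^α in log-log space, the kind of fit used to relate optimal batch size or learning rate to compute. The data in the test are synthetic, not the paper's results. Finally, `allocate` splits a budget given an assumed fitted exponent `a` for model scale.

```python
import math


def non_embedding_flops_per_token(n_layer: int, d_model: int, seq_len: int) -> int:
    """Approximate training FLOPs per token, excluding the embedding/vocab layer.

    Assumed accounting: 12 * d_model**2 non-embedding parameters per layer at
    6 FLOPs each (forward + backward), plus the attention score (QK^T) and
    value (AV) products: 4 * seq_len * d_model forward FLOPs per layer, tripled.
    """
    dense = 72 * n_layer * d_model ** 2
    attention = 12 * n_layer * d_model * seq_len
    return dense + attention


def six_n(n_layer: int, d_model: int) -> int:
    """The common 6N approximation with N = 12 * n_layer * d_model**2."""
    return 6 * 12 * n_layer * d_model ** 2


def fit_power_law(xs, ys):
    """Fit y = k * x**alpha by least squares on (log x, log y); returns (k, alpha)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(lx, ly))
    var = sum((a - mx) ** 2 for a in lx)
    alpha = cov / var
    return math.exp(my - alpha * mx), alpha


def allocate(compute: float, m_coef: float, a: float):
    """Split C = M * D using a fitted M_opt = m_coef * C**a; returns (M_opt, D_opt)."""
    m_opt = m_coef * compute ** a
    return m_opt, compute / m_opt


# Hypothetical shapes: a 30-layer, 4096-wide model vs. an 8-layer, 512-wide one,
# both trained on 4096-token sequences.
for n_layer, d_model in ((30, 4096), (8, 512)):
    ratio = non_embedding_flops_per_token(n_layer, d_model, 4096) / six_n(n_layer, d_model)
    print(n_layer, d_model, round(ratio, 3))
```

For these two shapes, M exceeds 6N by factors of 7/6 (about 1.17) and 7/3 (about 2.33). Relative to 6N, the attention term adds seq_len / (6 · d_model), so the parameter-count approximation drifts most for small, narrow models. In `allocate`, D_opt grows as C^(1 - a). A larger exponent `a`, the case associated with higher-quality data, sends more of each extra unit of compute to model scale.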

Evaluation and Fine-Tuning

DeepSeek LLM performs strongly across a broad range of benchmarks, and the 67B base model surpasses LLaMA-2 70B, particularly on code, mathematics, and reasoning. The evaluation also includes a safety assessment that checks whether the model's responses conform to ethical standards. For fine-tuning, the team uses a two-stage supervised fine-tuning process to balance the model's specialized knowledge against its conversational ability. A subsequent direct preference optimization (DPO) stage further improves the resulting DeepSeek Chat models. In open-ended evaluations, the 67B Chat model outperforms GPT-3.5, making it a strong contender for open-ended, helpfulness-oriented response generation.
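The sketch below shows what the direct preference optimization step optimizes. It is the standard per-pair DPO loss as formulated by Rafailov et al., written in plain Python. The helper names are hypothetical, and `beta=0.1` is an assumed default, not necessarily the value used for DeepSeek Chat. Inputs are the summed token log-probabilities of a preferred ("chosen") and a dispreferred ("rejected") response. These come from the model being trained and from a frozen reference model.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Per-pair DPO loss from summed token log-probabilities of each response.

    policy_* come from the model being trained, ref_* from a frozen reference
    model (typically the SFT checkpoint). beta is an assumed default.
    """
    # Implicit reward margin: how much more the policy, relative to the
    # reference, favors the chosen response over the rejected one.
    margin = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # -log(sigmoid(margin)) == softplus(-margin), computed in a numerically stable form.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def mean_dpo_loss(pairs, beta: float = 0.1) -> float:
    """Average the loss over (policy_chosen, policy_rejected, ref_chosen, ref_rejected) tuples."""
    return sum(dpo_loss(*p, beta=beta) for p in pairs) / len(pairs)


# Hypothetical log-probabilities: policy and reference agree, so the loss is log 2.
print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
# The policy favors the chosen answer more than the reference does, so the loss is smaller.
print(dpo_loss(-10.0, -18.0, -12.0, -15.0))
```

DPO needs no separately trained reward model. The loss pushes the policy to raise the relative likelihood of preferred responses compared with the reference model, and `beta` controls how far the policy may drift from that reference.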

Reflection and Future Work

DeepSeek LLM charts a promising path for open-source AI, but the team acknowledges its limitations. The model's knowledge stops updating once training ends, and it can still generate unreliable content. The team plans continued improvements in dataset quality, language coverage, and alignment methods. The stated goal is to make the models more capable while keeping them responsible, useful, and accessible to the wider community.