The paper "Parameter-Efficient Fine-Tuning for Large Models: A Comprehensive Survey" (Han et al., 21 Mar 2024) provides an extensive overview of Parameter-Efficient Fine-Tuning (PEFT) methods, which have emerged as a crucial technique for adapting large pre-trained models to downstream tasks while mitigating the significant computational costs associated with full model fine-tuning.

The core problem PEFT addresses is the immense scale of large models, often containing billions of parameters. Fine-tuning these models by updating all parameters requires vast computational resources, including high-end hardware, significant memory, and extensive training time, making it impractical for many researchers and practitioners. PEFT offers a practical solution by modifying only a small fraction of the model's parameters or introducing a minimal number of new trainable parameters. This approach substantially reduces computational overhead, memory requirements, and storage for task-specific models.

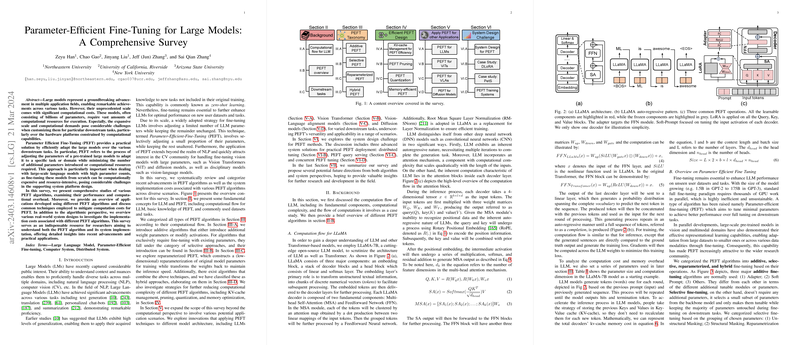

The survey categorizes PEFT algorithms into four main types based on their operational mechanisms:

- Additive PEFT: These methods introduce new, trainable parameters while keeping the original pre-trained model frozen. The additional parameters are strategically placed within the model architecture.

  - Adapters: Small bottleneck feed-forward networks inserted within Transformer blocks (e.g., after attention or FFN layers). Only the adapter weights are trained. Variations include Serial Adapters, Parallel Adapters, and methods like AdapterFusion and AdaMix for multi-task adaptation.

  - Soft Prompts: Learnable vectors prepended to the input embeddings or inserted into intermediate layers of the Transformer. Prompt tuning and Prefix-tuning are examples, where continuous prompt embeddings are optimized. Only the prompt parameters are trained.

  - Others: Techniques like (IA)$^3$ and SSF introduce learnable scaling and shifting vectors applied to activations. These can often be merged into the original model weights after training, incurring no inference overhead.

- Selective PEFT: This category involves fine-tuning only a subset of the existing parameters of the pre-trained model, keeping the majority frozen.

  - Unstructured Masking: A binary mask is applied to individual parameters, selecting which ones to train. Methods like Diff pruning, PaFi, and FishMask use different criteria (magnitude, Fisher information) to determine the mask.

  - Structural Masking: Parameter selection is organized in regular patterns (e.g., per layer, per module, or based on weight groups) to enhance hardware efficiency. Examples include structured variants of Diff pruning and methods like BitFit (tuning only biases) and Xattn Tuning (tuning only cross-attention layers).

- Reparameterized PEFT: This approach constructs a low-dimensional reparameterization of the original model parameters during training, which can then be equivalently transformed back into the original parameter space for inference, ideally without added latency.

  - Low-rank Decomposition: The most prominent example is LoRA (Low-Rank Adaptation), which represents the update to a weight matrix ($\Delta W$) as the product of two low-rank matrices ($\Delta W = BA$). Only $A$ and $B$ are trained. After training, $\Delta W$ is added to the original weight matrix.

  - LoRA Derivatives: Methods like DyLoRA and AdaLoRA dynamically adjust the rank during training. Others like Laplace-LoRA and LoRA+ propose training improvements (a Bayesian approach and differential learning rates, respectively). Multi-LoRA methods like LoRAHub and MOELoRA compose or select from multiple LoRA modules for different tasks or instances. Other reparameterized methods include Compacter and KronA (using Kronecker products), HiWi (applying adapters to weights), VeRA (using shared frozen matrices and trainable vectors), and DoRA (decomposing weights into magnitude and direction).

- Hybrid PEFT: These methods combine techniques from multiple categories to leverage their respective advantages. Examples include UniPELT (integrating LoRA, prefix-tuning, and adapters with a gating mechanism) and methods that use Neural Architecture Search (NAS) like NOAH and AUTOPEFT to find optimal combinations of PEFT techniques for specific tasks.
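
The low-rank reparameterization described above can be illustrated with a small sketch. It uses only the Python standard library so that it runs anywhere. The names `W0`, `B`, and `A` follow the notation of the original LoRA paper and, like the toy dimensions, are assumptions made for illustration; the survey's own notation may differ. The LoRA scaling factor is omitted for brevity, and `B` is filled with random values to stand in for an already trained matrix (LoRA initializes it to zero). The sketch checks that the adapter path and the merged weight give the same output, which is why merging adds no inference latency.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two equally shaped matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def rand_matrix(rows, cols, rng, scale=1.0):
    """Matrix of Gaussian samples; stands in for real weights."""
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_out, d_in, r = 6, 8, 2                  # hypothetical sizes, with r << min(d_out, d_in)

W0 = rand_matrix(d_out, d_in, rng)        # frozen pre-trained weight
B = rand_matrix(d_out, r, rng, 0.1)       # trainable; random here to mimic a trained state
A = rand_matrix(r, d_in, rng, 0.1)        # trainable
x = rand_matrix(d_in, 1, rng)             # one input as a column vector

# Training-time forward pass: frozen path plus low-rank path.
h_adapter = add(matmul(W0, x), matmul(B, matmul(A, x)))

# After training: fold the update into the weight once, then use a single matmul.
W_merged = add(W0, matmul(B, A))
h_merged = matmul(W_merged, x)

max_diff = max(abs(p[0] - q[0]) for p, q in zip(h_adapter, h_merged))
trainable = d_out * r + r * d_in          # parameters in B and A
full = d_out * d_in                       # parameters in W0

print(f"max difference between paths: {max_diff:.2e}")
print(f"trainable parameters: {trainable} of {full}")
```

With these toy sizes the saving is modest, but the trainable count grows linearly in the layer width while the full weight grows quadratically, so the fraction shrinks quickly for realistic dimensions.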

Beyond algorithmic design, the survey discusses practical considerations for efficient PEFT implementation, focusing on memory and computational bottlenecks; for example, KV-cache management is crucial for efficient autoregressive inference in LLMs. The paper also highlights model compression techniques applied to PEFT modules or used in conjunction with PEFT:

- PEFT Pruning: Techniques like AdapterDrop and SparseAdapter prune the parameters of the PEFT modules themselves (e.g., removing adapters or sparsifying LoRA weights) to reduce computational and memory overhead.

- PEFT Quantization: Quantizing the weights of PEFT modules (e.g., BI-Adapter, PEQA) or the base model weights during PEFT training (e.g., QLoRA, LoftQ, LQ-LoRA, QA-LoRA, BitDelta) significantly reduces memory footprint and can accelerate computation on hardware supporting low-precision arithmetic.

- Memory-efficient PEFT: Methods like Side-Tuning, LST, Res-Tuning, and MEFT focus on reducing the memory required during training, often by minimizing or eliminating the need to store gradients for the entire pre-trained backbone model. Techniques like LoRA-FA optimize memory by freezing certain low-rank components. Other methods like HyperTuning and MeZO explore training without full backpropagation on the large model.
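
To make the memory argument behind base-model quantization concrete, here is a minimal sketch of symmetric per-row int8 quantization of a frozen weight matrix. This is a deliberately simplified stand-in: QLoRA, for instance, uses a 4-bit NormalFloat format with block-wise quantization, which is not reproduced here. The function names and the toy matrix are hypothetical.

```python
def quantize_absmax_int8(row):
    """Symmetric int8 quantization of one row: returns (integer codes, scale)."""
    scale = max(abs(v) for v in row) / 127.0 or 1.0   # fall back to 1.0 for an all-zero row
    codes = [round(v / scale) for v in row]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point values."""
    return [c * scale for c in codes]

# Toy frozen base weight (values chosen arbitrarily for illustration).
W0 = [[0.50, -1.20, 0.03, 0.80],
      [2.00, 0.10, -0.75, -1.90]]

quantized = [quantize_absmax_int8(row) for row in W0]
W0_hat = [dequantize(codes, scale) for codes, scale in quantized]

max_err = max(abs(a - b) for ra, rb in zip(W0, W0_hat) for a, b in zip(ra, rb))
max_scale = max(scale for _, scale in quantized)

print("int8 codes:", [codes for codes, _ in quantized])
print(f"largest reconstruction error: {max_err:.5f}")
```

Each weight is stored as one byte plus a shared per-row scale, instead of two or four bytes per weight, and the rounding error is bounded by half a quantization step. In a quantized-base setup the PEFT parameters themselves would typically stay in higher precision and be trained on top of the dequantized weights.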

The survey also demonstrates the versatility of PEFT by reviewing its applications beyond traditional NLP tasks and models:

- PEFT for LLMs -- Beyond the Basics: Applications include visual instruction following (e.g., VL-Adapter, LLaMA-Adapter), continual learning (e.g., AdapterCL, CPT, O-LoRA), and context window extension (e.g., LongLoRA, LongQLoRA, LLoCO) where PEFT helps adapt models to new data, tasks, or longer sequences efficiently.

- PEFT for ViTs: Applying PEFT methods like Adapters (e.g., AdaptFormer, ST-Adapter, AIM) and Visual Prompt Tuning (VPT) to Vision Transformers for tasks like image classification and video recognition.

- PEFT for VLAs: Using PEFT (e.g., prompt tuning like CoOp, CoCoOp, MaPLe, TPT; or adapters like CLIP-Adapter, Tip-Adapter) to adapt Vision-Language Alignment models like CLIP for tasks such as open-vocabulary image classification.

- PEFT for Diffusion Models: Employing PEFT techniques (e.g., gated layers like GLIGEN, fine-tuning copies like ControlNet, LoRA like Concept Sliders, adapters like T2I-Adapter, or soft prompts like Textual Inversion) to adapt pre-trained diffusion models for additional input control or customized content generation.

Finally, the paper addresses system design challenges for deploying and training PEFT models in real-world scenarios:

- Centralized PEFT Query Serving: Cloud providers serving multiple users require efficient systems to handle diverse PEFT requests on a single large model instance. This involves challenges in batching and scheduling. PetS [pets] is presented as a case study for managing multi-PEFT inference requests through coordinated batching and macro-batch streaming, leveraging kernel fusion for efficiency.

- Distributed System for PEFT: Training on sensitive user data necessitates distributed approaches where the base model stays on the server and PEFT modules are trained on user devices. DLoRA [gao2024dlora] is an example of a distributed PEFT framework.

- Multi-PEFT Training: Simultaneously training multiple PEFT instances (possibly for different users or tasks) on shared infrastructure requires efficient memory management, gradient handling, and batching strategies. Frameworks like S-LoRA [slora] and Punica [punica] tackle these challenges by optimizing kernel design and multi-tenant serving for LoRA modules. Offsite-Tuning [offsite-tuning] is another case study focusing on privacy-preserving distributed tuning using a learnable adapter and a compressed emulator.
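
The core idea behind serving many adapters on one base model can be sketched in a few lines: the frozen base computation is shared by every request in a batch, and only the small adapter-specific correction differs per user. The sketch below is a conceptual illustration only, not the implementation of PetS, S-LoRA, or Punica, which rely on custom kernels and schedulers. The adapter registry, the user identifiers, and the function `serve_batch` are all hypothetical.

```python
from collections import defaultdict

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

# Shared frozen base weight (2 x 3), loaded once for all users.
W0 = [[1.0, 0.0, 2.0],
      [0.0, 1.0, -1.0]]

# Hypothetical per-user LoRA-style adapters with rank 1: delta = B (A x).
adapters = {
    "user_a": {"B": [[1.0], [0.0]], "A": [[0.5, 0.0, 0.0]]},
    "user_b": {"B": [[0.0], [2.0]], "A": [[0.0, 1.0, 1.0]]},
}

# A mixed batch: each request names its adapter and carries an input vector.
requests = [
    ("user_a", [1.0, 2.0, 3.0]),
    ("user_b", [1.0, 0.0, 1.0]),
    ("user_a", [0.0, 1.0, 0.0]),
]

def serve_batch(batch):
    """Run the shared base pass for every request, then add adapter deltas per group."""
    base_out = [matvec(W0, x) for _, x in batch]

    # Group request indices by adapter so each adapter is looked up once per batch.
    groups = defaultdict(list)
    for i, (adapter_id, _) in enumerate(batch):
        groups[adapter_id].append(i)

    outputs = list(base_out)
    for adapter_id, indices in groups.items():
        A, B = adapters[adapter_id]["A"], adapters[adapter_id]["B"]
        for i in indices:
            delta = matvec(B, matvec(A, batch[i][1]))
            outputs[i] = [h + d for h, d in zip(base_out[i], delta)]
    return outputs

print(serve_batch(requests))
# [[7.5, -1.0], [3.0, 1.0], [0.0, 1.0]]
```

Because the adapters are tiny relative to `W0`, requests for different users can share one batch through the expensive base pass, which is what makes multi-tenant batching attractive in the first place.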

The survey concludes by highlighting future research directions, including the simplification of hyperparameter tuning for PEFT methods, the establishment of unified benchmarks for fair comparison, further enhancing training efficiency through better memory management and compression integration, exploring scaling laws for PEFT with increasingly large models, expanding PEFT applications to new model architectures and tasks, enhancing data privacy in PEFT systems, and investigating the interplay between model compression techniques and PEFT performance on hardware.