Parameter-Efficient Fine-Tuning for Pre-Trained Vision Models: A Survey

The survey "Parameter-Efficient Fine-Tuning for Pre-Trained Vision Models" examines parameter-efficient fine-tuning (PEFT) techniques for pre-trained vision models (PVMs). State-of-the-art PVMs keep growing, so full fine-tuning becomes increasingly expensive in both compute and storage, because it updates and stores a complete copy of every parameter for each downstream task. The paper gives a structured analysis of approaches that instead adapt these models to downstream tasks by training only a small fraction of their parameters.

Key Contributions

The authors begin by formally defining PEFT and explaining why it matters for model adaptation today. As PVMs grow in size, PEFT has become a key way to exploit their capabilities without the prohibitive resource demands of full fine-tuning.
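The core idea can be written as a generic optimization problem. This is an illustration only: the notation is a common formulation, not necessarily the survey's own. The pre-trained parameters $\theta_0$ stay frozen. Only a small parameter set $\Delta\theta$ is trained, on downstream data $\mathcal{D}_{\text{task}}$ with task loss $\mathcal{L}$:

$$
\min_{\Delta\theta}\; \mathcal{L}\big(\mathcal{D}_{\text{task}};\ \theta_0,\ \Delta\theta\big)
\quad \text{subject to} \quad |\Delta\theta| \ll |\theta_0| .
$$

For a sense of scale, tuning only the bias vector of a single $768 \times 768$ linear layer trains

$$
\frac{768}{768^2 + 768} = \frac{1}{769} \approx 0.13\%
$$

of that layer's parameters.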

Methodological Categorization

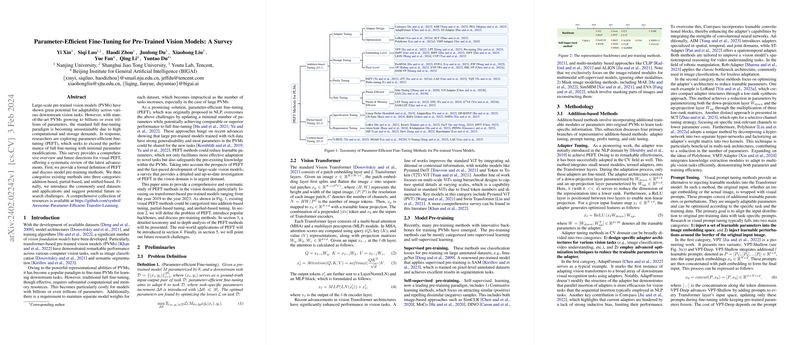

The survey classifies PEFT methods into three broad categories: addition-based, partial-based, and unified-based tuning. They differ mainly in which parameters are trained:

- Addition-Based Methods: These methods introduce new trainable parameters while keeping the pre-trained weights frozen. Variants include adapter tuning, prompt tuning, prefix tuning, and side tuning. The survey examines the architectural details of each and highlights methods such as:
  - AdaptFormer, which adds a lightweight bottleneck module in parallel with the MLP block of each Transformer layer.
  - VPT-Deep, which prepends learnable prompt tokens to the input of every Transformer layer.

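- Partial-Based Methods: Rather than adding modules, these methods update only a selected subset of the model's existing parameters, or a reparameterization of them. The survey divides them into specification tuning and reparameter tuning. BitFit, for example, tunes only the bias terms and keeps all other weights fixed, which illustrates the trade-off between simplicity and adaptability. Reparameter-tuning methods such as LoRA instead learn a low-rank update to existing weight matrices; this update can be merged into those weights after training.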

- Unified-Based Tuning: These methods combine several tuning strategies within a single framework to streamline adaptation. For instance, NOAH uses neural architecture search to find, for each downstream dataset, the best configuration of adapter, LoRA, and prompt modules.
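The sketch below makes the taxonomy concrete on a single frozen linear layer. It is minimal and framework-free, written in pure Python, and shows only where the trainable parameters live under each scheme. The helper names (`adapter`, `prepend_prompts`, `merge_low_rank`, `trainable_params`) are hypothetical, and the dimensions are illustrative assumptions. It is not the implementation of AdaptFormer, VPT-Deep, BitFit, LoRA, or any other specific method; those operate inside Transformer blocks in a deep-learning framework.

```python
import random
from typing import Dict, List

Matrix = List[List[float]]
Vector = List[float]


def matvec(W: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def add(u: Vector, v: Vector) -> Vector:
    return [a + b for a, b in zip(u, v)]


def rand_matrix(rows: int, cols: int, scale: float = 0.02) -> Matrix:
    return [[random.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


def frozen_linear(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Pre-trained layer y = W x + b. Full fine-tuning would train W and b;
    bias-only (BitFit-style) specification tuning would train only b."""
    return add(matvec(W, x), b)


def adapter(h: Vector, W_down: Matrix, W_up: Matrix) -> Vector:
    """Addition-based: a new bottleneck module (r << d) added residually."""
    z = [max(0.0, v) for v in matvec(W_down, h)]  # down-project to r dims + ReLU
    return add(h, matvec(W_up, z))                 # up-project back to d + residual


def prepend_prompts(tokens: List[Vector], prompts: List[Vector]) -> List[Vector]:
    """Addition-based: learnable prompt tokens placed before the input tokens."""
    return prompts + tokens


def merge_low_rank(W: Matrix, B: Matrix, A: Matrix) -> Matrix:
    """Partial-based (reparameter tuning, LoRA-style): W' = W + B @ A, rank r."""
    r = len(A)
    return [[W[i][j] + sum(B[i][k] * A[k][j] for k in range(r))
             for j in range(len(W[0]))] for i in range(len(W))]


def trainable_params(d: int, r: int, n_prompts: int) -> Dict[str, int]:
    """Trainable parameters for one d x d layer under each scheme (biases are
    omitted from the adapter and low-rank modules for simplicity)."""
    return {
        "full": d * d + d,        # every weight and bias
        "bitfit": d,              # bias vector only
        "adapter": 2 * d * r,     # W_down (r x d) + W_up (d x r)
        "lora": 2 * d * r,        # B (d x r) + A (r x d)
        "prompt": n_prompts * d,  # n_prompts learnable d-dim tokens
    }


if __name__ == "__main__":
    random.seed(0)
    d, r = 4, 2
    W, b = rand_matrix(d, d), [0.0] * d
    h = frozen_linear(W, b, [1.0, -0.5, 0.25, 2.0])

    # With W_up (adapter) and B (low-rank) zero-initialised, both start as a no-op.
    assert adapter(h, rand_matrix(r, d), zeros(d, r)) == h
    assert merge_low_rank(W, zeros(d, r), rand_matrix(r, d)) == W
    prompts = [[0.0] * d for _ in range(3)]
    assert len(prepend_prompts([h], prompts)) == 4

    for name, n in trainable_params(d=768, r=8, n_prompts=10).items():
        print(f"{name:8s} {n:>8,d}")
```

The bottleneck adapter and the low-rank update report the same count here only because biases were left out. The more relevant difference is at inference. A low-rank update can be folded back into `W` after training, so it adds no extra computation. A non-linear adapter stays in the network as an extra module.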

Practical Applications and Dataset Evaluation

PEFT has been applied across a range of vision tasks. The survey reviews the datasets commonly used as benchmarks and summarizes how PEFT methods have been applied in these settings. Examples include FGVC, a group of fine-grained image-classification datasets, for image classification and Kinetics-400 for video action recognition. It also points to further applications in generative and multimodal models.

Insights and Future Directions

The paper stresses that efficient tuning techniques become more important as deep learning models continue to scale. It identifies a need for better explainability of PEFT methods, in particular for understanding how the model interprets learned visual prompts. It also sees room to extend PEFT to generative tasks and multimodal domains.

In conclusion, the survey is a comprehensive resource for researchers studying parameter efficiency in vision models. It organizes current methods and identifies open problems for future work, and in doing so clarifies how fine-tuning practice in computer vision is evolving.