Parameter-Efficient Fine-Tuning Methods for Pretrained Language Models: A Critical Review and Assessment

The paper "Parameter-Efficient Fine-Tuning Methods for Pretrained Language Models: A Critical Review and Assessment" provides a comprehensive exploration of Parameter-Efficient Fine-Tuning (PEFT) methods, which adapt large pretrained language models (PLMs) to downstream tasks at a reduced computational cost. As transformer-based language models have grown, with LLMs now reaching hundreds of billions of parameters, the computational expense and memory needed to fine-tune all of their parameters have become critical obstacles to adapting them to specific tasks. The paper reviews PEFT methods, their applications, and their evolution, and presents quantitative experiments evaluating their performance, parameter efficiency, and memory usage.

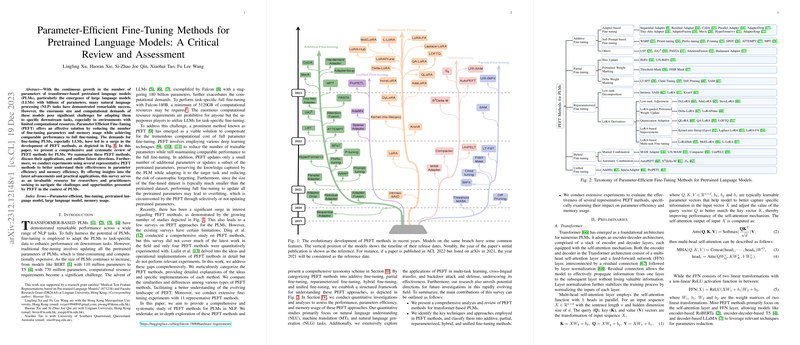

Overview of PEFT Methods

The authors categorize PEFT strategies into five core fine-tuning paradigms: additive fine-tuning, partial fine-tuning, reparameterized fine-tuning, hybrid fine-tuning, and unified fine-tuning. This classification gives a structured view of the field while highlighting the mechanisms and innovations shared within each category.

- Additive Fine-tuning: Techniques such as adapters and soft prompts fall into this category. They add new trainable parameters to the network and optimize only those, keeping the PLM's original parameters frozen.

- Partial Fine-tuning: These methods update only a subset of the PLM's existing parameters, for example only the bias terms (as in BitFit) or a subset of weights selected by a mask, which saves computation and memory.

- Reparameterized Fine-tuning: These methods use low-rank transformations, as in LoRA, to reparameterize the weight updates, reducing the number of trainable parameters while preserving the model's flexibility.

- Hybrid and Unified Fine-tuning: These methods combine existing techniques to exploit their respective strengths. Hybrid methods combine different PEFT strategies either manually or automatically, while unified approaches place different methods within a single, standardized framework for adaptation.
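
To make the difference between the additive, partial, and reparameterized paradigms concrete, the following minimal pure-Python sketch counts the trainable parameters each one adds for a single d_out × d_in linear layer and shows a LoRA-style forward pass, h = Wx + (α/r)·BAx, in which the frozen weight W is augmented by a trainable low-rank product BA. It is an illustration under stated assumptions, not code from the paper or from any PEFT library: the helper names (`trainable_params`, `matvec`, `lora_forward`), the bottleneck-adapter design (a down-projection and an up-projection, each with a bias), and the α/r scaling are our own hypothetical choices.

```python
from typing import Dict, List

Matrix = List[List[float]]
Vector = List[float]


def trainable_params(d_in: int, d_out: int, rank: int, bottleneck: int) -> Dict[str, int]:
    """Trainable-parameter counts for a single d_out x d_in linear layer (illustrative)."""
    return {
        # Full fine-tuning: the whole weight matrix and the bias are updated.
        "full": d_in * d_out + d_out,
        # Partial fine-tuning (BitFit-style): only the bias vector is updated.
        "bitfit": d_out,
        # Reparameterized fine-tuning (LoRA-style): B is d_out x r and A is r x d_in.
        "lora": rank * (d_in + d_out),
        # Additive fine-tuning (assumed bottleneck adapter on the layer output):
        # a d_out -> m down-projection and an m -> d_out up-projection, each with a bias.
        "adapter": 2 * d_out * bottleneck + bottleneck + d_out,
    }


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector, alpha: float) -> Vector:
    """h = W x + (alpha / r) * B (A x): W stays frozen; only A and B would be trained."""
    rank = len(a)  # A has r rows
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    return [h + (alpha / rank) * u for h, u in zip(base, update)]


if __name__ == "__main__":
    counts = trainable_params(d_in=768, d_out=768, rank=8, bottleneck=64)
    for name, n in counts.items():
        print(f"{name:8s} {n:>7d} params ({100 * n / counts['full']:.2f}% of full)")

    # With B initialised to zero (as in LoRA), the adapted layer initially matches W x.
    w = [[1.0, 0.0], [0.0, 2.0]]
    a = [[0.5, -0.5]]  # rank r = 1
    b_zero = [[0.0], [0.0]]
    print(lora_forward(w, a, b_zero, [3.0, 4.0], alpha=1.0))  # [3.0, 8.0]
```

With d = 768 (the hidden size of RoBERTa-base), r = 8, and m = 64, the script reports 590,592 trainable parameters for full fine-tuning of the layer, versus 768 (0.13%) for the BitFit-style bias update, 12,288 (2.08%) for the LoRA-style update, and 99,136 (16.79%) for the adapter. In practice adapters are inserted per transformer block rather than per linear layer, so this single-layer comparison is only indicative.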

Empirical Evaluation

The experiments fine-tune representative PEFT methods on transformer models such as RoBERTa and T5, using well-known NLP benchmarks including GLUE and the WMT16 En-Ro translation dataset. The results show that most PEFT methods achieve performance comparable to full fine-tuning while training far fewer parameters. For instance, the ProPETL adapter yielded superior average GLUE performance when fine-tuning RoBERTa, while training only a fraction of the parameters that full fine-tuning updates. PEFT methods also substantially improve memory efficiency; QLoRA in particular markedly reduces memory requirements while maintaining task accuracy, making LLMs more accessible in resource-constrained environments.
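
A back-of-the-envelope estimate shows why QLoRA's memory savings matter. The sketch below is a rough accounting under explicit assumptions, not the paper's measurement method: full fine-tuning with Adam is charged about 16 bytes per parameter (32-bit weights, gradients, and two optimizer moments), while a QLoRA-style setup stores the frozen base weights in 4 bits (0.5 bytes per parameter) and, as a simplifying assumption, charges the same 16 bytes only to the LoRA parameters. Activations, quantization constants, and framework overhead are ignored, and the 7B model size and 1% trainable fraction are hypothetical.

```python
BYTES_FULL_ADAM = 16.0  # fp32 weights (4) + gradients (4) + Adam moments m and v (4 + 4)
BYTES_4BIT = 0.5        # one 4-bit quantized frozen weight


def full_finetune_gb(n_params: float) -> float:
    """Rough memory for full fine-tuning with Adam (weights, grads, optimizer states only)."""
    return n_params * BYTES_FULL_ADAM / 1e9


def qlora_style_gb(n_params: float, trainable_fraction: float) -> float:
    """Rough memory for a QLoRA-style setup: 4-bit frozen base plus Adam-trained LoRA params.

    Simplifying assumption: LoRA parameters are charged the same 16 bytes each.
    """
    frozen = n_params * BYTES_4BIT
    lora = n_params * trainable_fraction * BYTES_FULL_ADAM
    return (frozen + lora) / 1e9


if __name__ == "__main__":
    n = 7e9  # hypothetical 7B-parameter model
    print(f"full fine-tuning: ~{full_finetune_gb(n):.1f} GB")
    print(f"QLoRA-style (1% trainable): ~{qlora_style_gb(n, 0.01):.2f} GB")
```

Under these assumptions, a 7B-parameter model needs roughly 112 GB for full fine-tuning versus about 4.6 GB for the QLoRA-style configuration; real memory use will be higher once activations and overhead are included.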

Practical and Theoretical Implications

These findings underscore the value of PEFT for deploying LLMs in a scalable, efficient way. PEFT makes it practical to integrate LLMs into applications where computational resources are limited or where rapid adaptation to new tasks is essential. On the theoretical side, the paper's structured categorization and analysis of PEFT methods provide insight into the mechanisms that make efficient fine-tuning possible, and point to possible improvements in algorithmic design, such as better use of sparsity, low-rank structure, and hybrid adaptation.

Future Directions

The paper concludes by outlining directions for further work: developing lightweight hybrid PEFT methods, improving LoRA-derived techniques, integrating more PEFT methods into existing libraries, and deepening the understanding of PEFT through explainability studies. Applying PEFT methods to computer vision and multimodal domains is also identified as a fertile area for research and development.

In summary, the paper presents a rigorous and insightful survey of PEFT methods, offering both practical guidance for applying these techniques and a conceptual foundation for future research. It highlights the evolving landscape of transformer fine-tuning and its relevance to modern AI development. By reducing the computational resources needed to adapt LLMs, PEFT methods offer a critical pathway to democratizing access to cutting-edge models.