Beyond Text: Frozen LLMs in Visual Signal Comprehension

The paper "Beyond Text: Frozen LLMs in Visual Signal Comprehension" by Lei Zhu, Fangyun Wei, and Yanye Lu explores whether a frozen LLM can comprehend visual signals without fine-tuning on multi-modal datasets. The authors introduce a framework that treats an image as a linguistic entity, translating the visual input into discrete words drawn from the LLM’s vocabulary. The core component is the Vision-to-Language (V2L) Tokenizer, which converts an image into a token sequence the LLM can read, so that the LLM can handle tasks that traditionally require dedicated vision models.

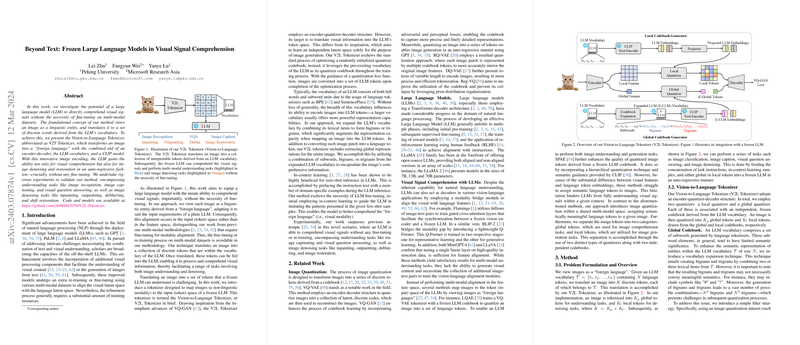

Methodology

The V2L Tokenizer is the cornerstone of this approach. It combines an encoder-decoder structure with a CLIP model to translate images into interpretable tokens:

- Encoder: The encoder pairs a CNN, which extracts local features, with a CLIP vision encoder, which captures a global image representation.

- Tokenizer: Images are translated into a set of discrete tokens derived from a frozen LLM’s vocabulary. Global and local tokens are generated via distinct quantizers, mapping visual features into the LLM’s token space.

- Decoder: The decoder reconstructs the image from the tokens, using a cross-attention mechanism to incorporate the global information.
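The quantization step described above can be illustrated with a minimal sketch. It assumes a nearest-neighbor lookup by cosine similarity against a toy three-word codebook; the similarity measure, the `quantize` helper, and the embedding values are all hypothetical choices for illustration and are not taken from the paper.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def quantize(features, codebook):
    """Map each feature vector to the word with the most similar embedding.

    `codebook` maps a vocabulary word to its (frozen) embedding.
    Assumption: cosine similarity is used here purely for illustration.
    """
    words = list(codebook)
    return [max(words, key=lambda w: cosine(f, codebook[w])) for f in features]


# Toy stand-in for a frozen LLM vocabulary (hypothetical values).
CODEBOOK = {
    "dog": [1.0, 0.0, 0.0],
    "grass": [0.0, 1.0, 0.0],
    "sky": [0.0, 0.0, 1.0],
}

# Toy stand-in for per-patch features produced by an encoder.
local_features = [[0.9, 0.1, 0.0], [0.1, 0.8, 0.1], [0.0, 0.2, 0.9]]
local_tokens = quantize(local_features, CODEBOOK)
print(local_tokens)
```

Because the codebook entries are ordinary vocabulary words, the resulting token list can be passed to a text-only LLM as plain text.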

Visual Signal Tasks

With the V2L Tokenizer, LLMs can tackle a range of visual signal tasks purely through token manipulation, bypassing the need for traditional feature alignment:

- Image Understanding: Tasks such as image recognition, image captioning, and visual question answering are approached by providing the LLM with global tokens and in-context learning samples.

- Image Denoising: Tasks such as inpainting, outpainting, deblurring, and shift restoration are handled with local tokens, using the LLM’s in-context learning to predict masked tokens and reconstruct the image step by step.
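Both task families reduce to text manipulation, which a small sketch can make concrete. The prompt wording, the `[MASK]` placeholder, and the helper names `build_fewshot_prompt` and `mask_tokens` are assumptions for illustration; the paper's actual prompt templates may differ.

```python
def build_fewshot_prompt(support, query_tokens):
    """Build an in-context classification prompt from global tokens.

    `support` is a list of (tokens, label) pairs; the final entry is the
    query, whose label is left blank for the LLM to complete.
    """
    lines = ["Classify each token sequence."]
    for tokens, label in support:
        lines.append(f"Input: {' '.join(tokens)}")
        lines.append(f"Output: {label}")
    lines.append(f"Input: {' '.join(query_tokens)}")
    lines.append("Output:")
    return "\n".join(lines)


def mask_tokens(tokens, positions, mask="[MASK]"):
    """Hide the local tokens at `positions`, e.g. for an inpainting prompt."""
    hidden = set(positions)
    return [mask if i in hidden else t for i, t in enumerate(tokens)]


# Hypothetical global tokens for two support images and one query image.
support = [
    (["dog", "puppy", "fur"], "dog"),
    (["wing", "beak", "nest"], "bird"),
]
prompt = build_fewshot_prompt(support, ["feather", "beak", "sky"])
print(prompt)

# Hypothetical local tokens with the middle region masked out.
masked = mask_tokens(["sky", "cloud", "tree", "grass"], [1, 2])
print(masked)
```

In this framing, the LLM's completion of the final `Output:` line is read as the predicted label, and its predictions for the masked positions would be handed to the decoder to reconstruct the image.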

Experimental Validation

The authors evaluate the V2L Tokenizer on three groups of experiments:

- Few-shot Classification: On the Mini-ImageNet benchmark, the proposed method outperforms existing approaches across various N-way K-shot scenarios. Notably, it does so without requiring a very large vocabulary or an extremely large LLM, which highlights its efficiency.

- Semantic Interpretation: The vocabulary expansion strategy improves the semantic quality of the tokens, which translates into higher performance in image captioning and visual question answering. Tokens generated this way align closely with the semantic content of the images, as reflected in higher CLIP and CLIP-R scores.

- Image Reconstruction and Denoising: Quantitative evaluations show that the V2L Tokenizer surpasses previous models on image restoration tasks, achieving lower (better) FID and LPIPS scores. This supports the technique’s capability for precise image reconstruction and effective denoising.
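For readers unfamiliar with the N-way K-shot protocol mentioned above, the following sketch shows how one evaluation episode is typically assembled. It is a generic illustration, not the paper's evaluation code: the `sample_episode` helper, the one-query-per-class choice, and the toy file names are all assumptions.

```python
import random


def sample_episode(images_by_class, n_way, k_shot, rng):
    """Sample one N-way K-shot episode.

    Returns (support, queries): `support` holds K labelled examples for each
    of N classes, and `queries` holds one held-out example per class
    (assumption: one query per class, chosen for simplicity).
    """
    classes = rng.sample(sorted(images_by_class), n_way)
    support, queries = [], []
    for label in classes:
        picks = rng.sample(images_by_class[label], k_shot + 1)
        support.extend((img, label) for img in picks[:k_shot])
        queries.append((picks[k_shot], label))
    return support, queries


# Toy dataset: class name -> image identifiers (hypothetical).
DATA = {
    "dog": ["dog_0", "dog_1", "dog_2", "dog_3"],
    "bird": ["bird_0", "bird_1", "bird_2", "bird_3"],
    "fish": ["fish_0", "fish_1", "fish_2", "fish_3"],
}

support, queries = sample_episode(DATA, n_way=2, k_shot=3, rng=random.Random(0))
print(len(support), len(queries))
```

Accuracy is then the fraction of query images whose predicted label matches the true one, averaged over many such episodes.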

Implications

This research has implications for both practical applications and theoretical frameworks within AI:

- Practical Applications: The approach offers a streamlined path to integrate visual comprehension capabilities into LLMs without retraining on multi-modal datasets. This could facilitate a broader range of applications from automated image annotation to advanced human-machine interaction systems.

- Theoretical Underpinnings: The success of viewing images as “foreign language” entities may inspire further exploration into modality-agnostic token strategies. It blurs traditional divisions between visual and linguistic processing, potentially reshaping future multi-modal AI architecture design.

Future Directions

Possible future developments include:

- Tokenization Improvements: Further refining the vocabulary expansion and tokenization techniques to capture richer semantic nuances.

- Scalability: Adapting and scaling the approach for larger, more diverse datasets to validate robustness and utility in real-world applications.

- Integration: Integrating the approach with other AI systems so that frozen LLMs can be applied efficiently across diverse multi-modal tasks.

Conclusion

The paper demonstrates that frozen LLMs can be used for visual signal comprehension through a token-based approach. The V2L Tokenizer bridges the visual and linguistic domains without extensive re-training, broadening the functional scope of LLMs. Such advances point toward more versatile, resource-efficient AI systems and open new possibilities at the intersection of vision and language understanding.